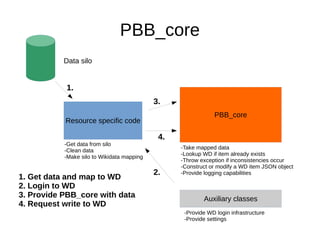

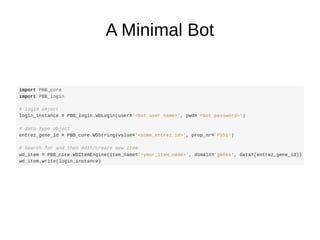

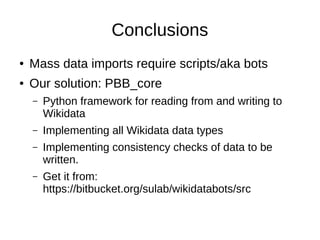

This document describes PBB_core, a Python framework for building bots that import large amounts of data into Wikidata. PBB_core handles the full import pipeline: retrieving data from external sources, mapping it to Wikidata properties, checking for duplicate entries, constructing Wikidata item JSON objects, and writing the data to Wikidata through its API. The framework implements all Wikidata data types and provides utilities such as logging and authentication, so bots for mass data import can be developed and deployed quickly while preventing duplicates and maintaining data integrity.
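
The pipeline described above (map, check for duplicates, construct item JSON) can be illustrated with a minimal, self-contained sketch. It deliberately does not use PBB_core's actual API: every function name, the `PROPERTY_MAP` table, the in-memory `existing_index`, and the sample record are assumptions made for illustration. Only the general shape of the Wikibase item JSON (labels plus claims with a `mainsnak`) follows the public Wikidata data model, and the actual write step is left out.

```python
# Hypothetical mapping from external field names to Wikidata property IDs.
# (P351 = Entrez Gene ID, P352 = UniProt protein ID; chosen only as examples.)
PROPERTY_MAP = {"entrez_id": "P351", "uniprot_id": "P352"}


def build_string_claim(prop, value):
    """Build one statement with a string-valued main snak."""
    return {
        "mainsnak": {
            "snaktype": "value",
            "property": prop,
            "datavalue": {"value": value, "type": "string"},
        },
        "type": "statement",
        "rank": "normal",
    }


def build_item(record, lang="en"):
    """Map an external record to a Wikibase-style item JSON object."""
    claims = {}
    for field, prop in PROPERTY_MAP.items():
        if field in record:
            claim = build_string_claim(prop, str(record[field]))
            claims.setdefault(prop, []).append(claim)
    return {
        "labels": {lang: {"language": lang, "value": record["label"]}},
        "claims": claims,
    }


def find_duplicate(record, existing_index):
    """Return the ID of an existing item sharing an identifier, else None.

    existing_index is an assumed lookup {(property, value): item_id};
    a real bot would fill it from a query against Wikidata.
    """
    for field, prop in PROPERTY_MAP.items():
        if field in record:
            key = (prop, str(record[field]))
            if key in existing_index:
                return existing_index[key]
    return None


def plan_write(record, existing_index):
    """Decide whether the record updates an existing item or creates one."""
    item_id = find_duplicate(record, existing_index)
    return {
        "action": "update" if item_id else "create",
        "item_id": item_id,
        "data": build_item(record),
    }


if __name__ == "__main__":
    # Placeholder index and record; the item ID is not a real Wikidata QID.
    index = {("P351", "1017"): "Q_PLACEHOLDER"}
    record = {"label": "example gene", "entrez_id": 1017}
    print(plan_write(record, index)["action"])
```

In a real bot the planned object would then be sent to Wikidata, for example through the `wbeditentity` action of the MediaWiki API, after authenticating. The sketch stops short of that step because it depends on credentials and on the framework's own login and write utilities.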