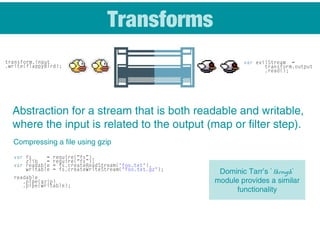

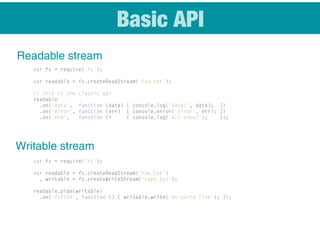

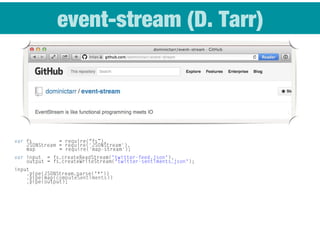

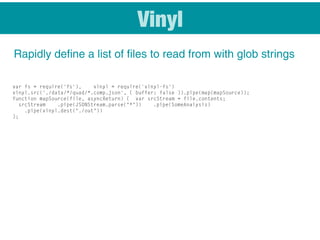

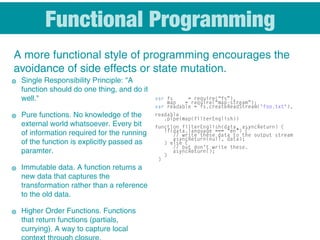

This document introduces streams and their use in data processing and analysis. Streams make large datasets manageable by handling data as a sequential flow rather than loading entire files into memory at once. The document discusses readable streams, which act as data sources, and writable streams, which act as sinks, as well as transform streams that manipulate data in between. It gives examples of using streams for tasks such as scraping websites, normalizing data, and performing map-reduce operations. It also outlines the programming benefits of streams, such as separation of concerns and a functional programming style.
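The source, transform, and sink roles described above can be sketched with Node.js's built-in `stream` module. This is a minimal illustration, not code from the slides: the helper names (`toUpperCaseLine`, `createUpperCaser`, `createSink`) and the sample lines are assumptions made for the example, and the in-memory array stands in for a file too large to load at once.

```javascript
const { Readable, Transform, Writable, pipeline } = require('stream');

// Hypothetical pure helper: the actual manipulation, kept separate from stream plumbing.
function toUpperCaseLine(line) {
  return String(line).toUpperCase();
}

// Transform stream: manipulates each chunk as it flows through.
function createUpperCaser() {
  return new Transform({
    objectMode: true,
    transform(chunk, _encoding, callback) {
      callback(null, toUpperCaseLine(chunk));
    },
  });
}

// Writable stream (sink): collects every chunk it receives into an array.
function createSink(collected) {
  return new Writable({
    objectMode: true,
    write(chunk, _encoding, callback) {
      collected.push(chunk);
      callback();
    },
  });
}

// Readable stream (source): emits one array element at a time.
const source = Readable.from(['first line', 'second line']);
const collected = [];

pipeline(source, createUpperCaser(), createSink(collected), (err) => {
  if (err) {
    console.error('pipeline failed:', err);
    return;
  }
  console.log(collected);
});
```

Each stage only knows about the chunk in front of it, which is where the separation of concerns comes from: the transform can be swapped or tested without touching the source or the sink.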

![Streams for data analysis](https://image.slidesharecdn.com/streams-140827051026-phpapp02/85/Streams-4-320.jpg)

Garden data: aggregating data scraped from a large number of websites.

- Parsing it.
- Normalizing it (Fahrenheit vs. Celsius, March in the Northern Hemisphere (NH) or Southern Hemisphere (SH)).
- Reducing it (converting [55-65] to 55 #1, 60 #1, 65 #1).
- Rendering it (average vs. visualisation).
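The normalizing and reducing steps for the garden data could look roughly like the sketch below. Several things here are assumptions for illustration and do not come from the slides: the record shape (`{ min, max, unit }`), Fahrenheit as the common target unit, a step of 5 for expanding ranges, and snapping converted values to that grid so tallies line up.

```javascript
const { Readable, Transform, Writable, pipeline } = require('stream');

// Normalizing: convert a Celsius reading to Fahrenheit (target unit is an assumption).
function celsiusToFahrenheit(celsius) {
  return (celsius * 9) / 5 + 32;
}

// Reducing: expand a range into discrete values on a fixed grid,
// so that [55-65] with step 5 becomes 55, 60, 65.
function expandRange(min, max, step = 5) {
  const values = [];
  const start = Math.round(min / step) * step; // snap lower bound to the grid
  const end = Math.round(max / step) * step;   // snap upper bound to the grid
  for (let value = start; value <= end; value += step) {
    values.push(value);
  }
  return values;
}

// Transform stream: rewrites Celsius records so every record downstream is in Fahrenheit.
function createNormalizer() {
  return new Transform({
    objectMode: true,
    transform(record, _encoding, callback) {
      if (record.unit === 'C') {
        callback(null, {
          min: celsiusToFahrenheit(record.min),
          max: celsiusToFahrenheit(record.max),
          unit: 'F',
        });
      } else {
        callback(null, record);
      }
    },
  });
}

// Writable stream: counts how many records cover each discrete temperature.
function createTally(counts) {
  return new Writable({
    objectMode: true,
    write(record, _encoding, callback) {
      for (const value of expandRange(record.min, record.max)) {
        counts.set(value, (counts.get(value) || 0) + 1);
      }
      callback();
    },
  });
}

// Hypothetical scraped records, one per website, in mixed units.
const records = [
  { min: 55, max: 65, unit: 'F' },
  { min: 15, max: 20, unit: 'C' },
];
const counts = new Map();

pipeline(Readable.from(records), createNormalizer(), createTally(counts), (err) => {
  if (err) {
    console.error('pipeline failed:', err);
    return;
  }
  console.log(counts);
});
```

The resulting tallies are what a rendering step would consume, whether it computes an average or feeds a visualisation.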

![What are they good for?](https://image.slidesharecdn.com/streams-140827051026-phpapp02/85/Streams-6-320.jpg)

- Gulp: writing your own modules.
- Real-time data obtained from remote servers that would be too impractical to buffer in a device with limited memory.
- Map-reduce types of computations: a programming model for processing and generating large data sets. A map function generates a set of intermediate key/value pairs ({word: 'hello', length: 5}) and a reduce function merges all intermediate values associated with the same intermediate key (['agile', 'greet', 'hello'], the list of words of length 5). Great if you want to run computations on distributed systems.
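The word-length example above can be sketched as a small stream pipeline, with a transform stream playing the map role and a writable stream playing the reduce role. The function names (`mapWord`, `reduceByLength`) and the extra input word are assumptions for illustration. This runs in a single process; it shows only the shape of the computation, not a distributed setup.

```javascript
const { Readable, Transform, Writable, pipeline } = require('stream');

// Map step: emit an intermediate pair for one word; `length` acts as the key.
function mapWord(word) {
  return { word, length: word.length };
}

// Reduce step: merge a pair into the list of words that share its key.
function reduceByLength(groups, pair) {
  const words = groups.get(pair.length) || [];
  words.push(pair.word);
  groups.set(pair.length, words);
  return groups;
}

// Transform stream applying the map function to every incoming word.
const mapper = new Transform({
  objectMode: true,
  transform(word, _encoding, callback) {
    callback(null, mapWord(word));
  },
});

// Writable stream folding every pair into the shared result.
const groups = new Map();
const reducer = new Writable({
  objectMode: true,
  write(pair, _encoding, callback) {
    reduceByLength(groups, pair);
    callback();
  },
});

pipeline(Readable.from(['agile', 'greet', 'hello', 'hi']), mapper, reducer, (err) => {
  if (err) {
    console.error('pipeline failed:', err);
    return;
  }
  console.log(groups);
});
```

Because the map function looks at one word at a time and the reduce function only needs pairs with the same key, the two steps could in principle run on different machines, which is what makes the model attractive for distributed systems.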