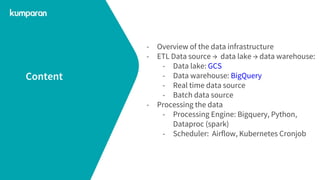

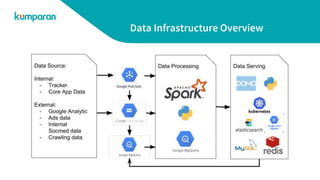

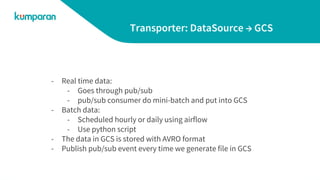

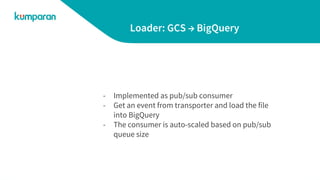

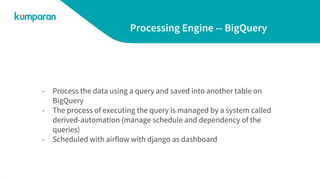

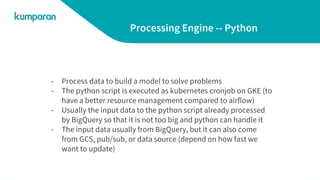

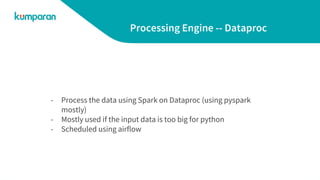

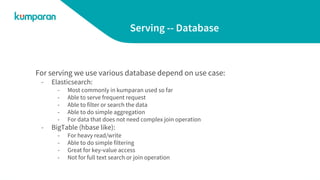

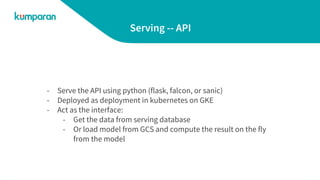

The document gives an overview of Kumparan's data infrastructure. It describes the ETL process that moves data from source systems into a data lake (Google Cloud Storage) and a data warehouse (BigQuery), and how that data is then processed with BigQuery, Python, and Dataproc. Processed data is served from databases such as Elasticsearch, Bigtable, MySQL, and Redis, and visualizations and reports are built in DOMO. It also outlines example use cases: visualization, recommendation, spam detection, and trend prediction.
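
The lake-to-warehouse-to-serving flow described above can be illustrated with a minimal sketch. It is not Kumparan's implementation: to stay runnable without cloud credentials it assumes local stand-ins, with a temporary directory in place of Google Cloud Storage, an in-memory SQLite database in place of BigQuery, and a plain dictionary in place of a serving store such as Redis. The event schema, function names, and the view-count aggregation are all hypothetical.

```python
import json
import sqlite3
import tempfile
from pathlib import Path

# Hypothetical raw events; the source does not describe the real schemas.
RAW_EVENTS = [
    {"story_id": "s1", "user_id": "u1", "event": "view"},
    {"story_id": "s1", "user_id": "u2", "event": "view"},
    {"story_id": "s2", "user_id": "u1", "event": "view"},
]


def extract_to_lake(events, lake_dir):
    """Write raw events as newline-delimited JSON (stand-in for a GCS object)."""
    path = Path(lake_dir) / "events.jsonl"
    with path.open("w", encoding="utf-8") as f:
        for event in events:
            f.write(json.dumps(event) + "\n")
    return path


def load_to_warehouse(lake_file, conn):
    """Load the lake file into a table (stand-in for a warehouse load job)."""
    conn.execute("CREATE TABLE events (story_id TEXT, user_id TEXT, event TEXT)")
    with lake_file.open(encoding="utf-8") as f:
        rows = [json.loads(line) for line in f]
    conn.executemany(
        "INSERT INTO events VALUES (:story_id, :user_id, :event)", rows
    )
    conn.commit()


def process(conn):
    """Aggregate view counts per story with SQL (the processing step)."""
    cur = conn.execute(
        "SELECT story_id, COUNT(*) FROM events "
        "WHERE event = 'view' GROUP BY story_id ORDER BY story_id"
    )
    return dict(cur.fetchall())


def run_pipeline(events):
    """Run extract -> load -> process and publish results to a serving store."""
    serving_store = {}  # stand-in for a key-value serving database
    with tempfile.TemporaryDirectory() as lake_dir:
        lake_file = extract_to_lake(events, lake_dir)
        conn = sqlite3.connect(":memory:")
        try:
            load_to_warehouse(lake_file, conn)
            serving_store.update(process(conn))
        finally:
            conn.close()
    return serving_store


if __name__ == "__main__":
    print(run_pipeline(RAW_EVENTS))
```

Keeping the raw file in the lake separate from the loaded table mirrors the split the summary describes: the lake holds unmodified source data, while the warehouse holds a queryable copy that downstream processing reads from.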