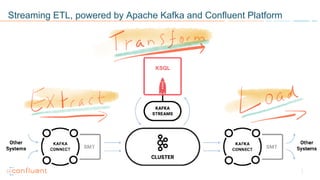

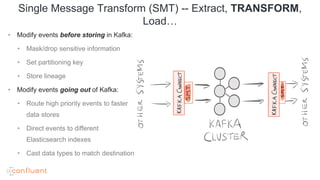

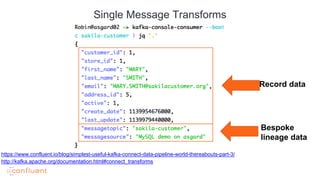

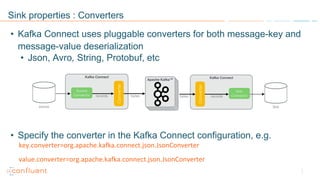

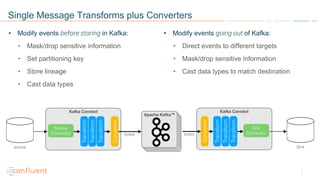

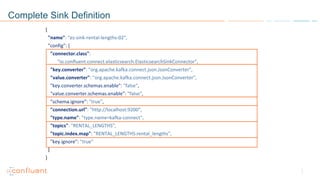

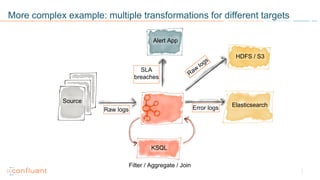

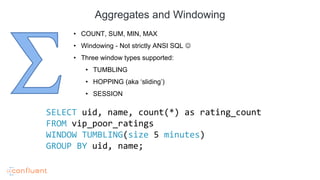

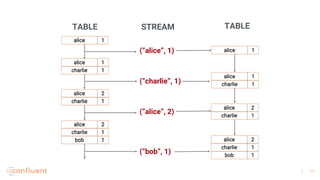

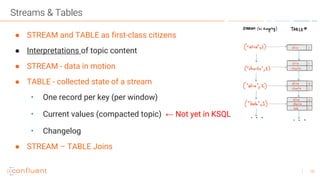

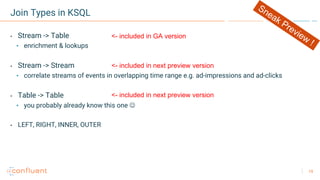

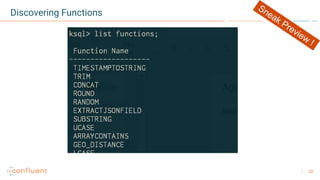

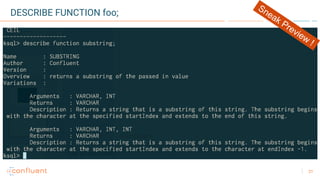

The document presents a session on streaming ETL with Apache Kafka and the Confluent Platform, focusing on the role of single message transforms (SMTs) and sink connector properties in data processing. It highlights KSQL's capabilities for streaming analytics, including its transformation options, windowing techniques, and data enrichment through joins. Attendees are encouraged to engage with the content and submit questions during the presentation.
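To make the windowing and join ideas concrete, here is a minimal conceptual sketch in plain Python. It is not KSQL or the Kafka Streams API. It only models what two common operations compute. The first is a tumbling-window count, roughly what a query using `WINDOW TUMBLING (SIZE 60 SECONDS)` with `GROUP BY` produces, where windows are fixed, non-overlapping, and aligned to multiples of the window size. The second is a stream-table enrichment join that behaves like a left join. The `(timestamp_ms, key, payload)` event shape and the function names are hypothetical and chosen for illustration. Real engines also deal with late and out-of-order records, which this sketch ignores.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# Hypothetical event shape (an assumption for illustration): (timestamp_ms, key, payload)
Event = Tuple[int, str, dict]


def tumbling_window_counts(events: Iterable[Event], size_ms: int) -> Dict[Tuple[str, int], int]:
    """Count events per key in fixed, non-overlapping windows of size_ms.

    Each event lands in exactly one window. The window start is the event
    timestamp rounded down to a multiple of size_ms.
    """
    counts: Dict[Tuple[str, int], int] = defaultdict(int)
    for ts, key, _payload in events:
        window_start = ts - (ts % size_ms)
        counts[(key, window_start)] += 1
    return dict(counts)


def enrich(stream: Iterable[Event], table: Dict[str, dict]) -> List[Event]:
    """Stream-table join: merge the table row for each event's key into its payload.

    Events whose key has no table row pass through unchanged (left-join semantics).
    """
    out: List[Event] = []
    for ts, key, payload in stream:
        row = table.get(key, {})
        out.append((ts, key, {**payload, **row}))
    return out


if __name__ == "__main__":
    events = [
        (1_000, "user1", {"page": "home"}),
        (4_000, "user1", {"page": "cart"}),
        (61_000, "user1", {"page": "checkout"}),
        (2_000, "user2", {"page": "home"}),
    ]
    # One-minute tumbling windows
    print(tumbling_window_counts(events, 60_000))
    # {('user1', 0): 2, ('user1', 60000): 1, ('user2', 0): 1}

    users = {"user1": {"region": "EU"}}  # hypothetical lookup table
    print(enrich(events, users)[0])
    # (1000, 'user1', {'page': 'home', 'region': 'EU'})
```

Keeping the window assignment as a pure function of the timestamp is what makes tumbling windows deterministic: replaying the same events always yields the same buckets.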