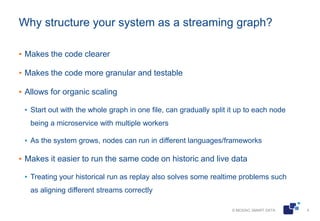

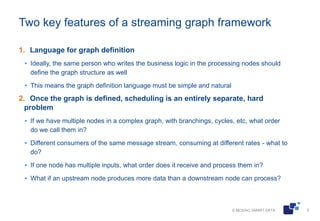

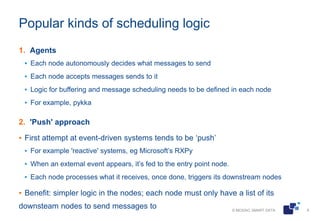

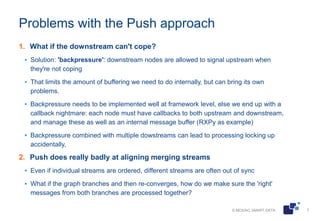

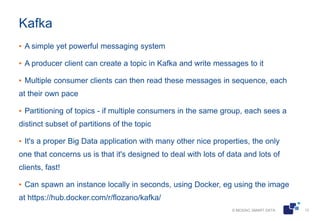

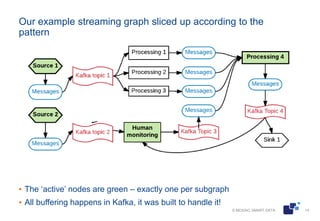

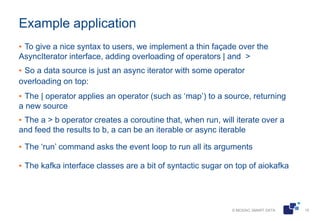

This presentation discusses the architecture of streaming analytics built on asynchronous Python and Kafka, focusing on the benefits and challenges of structuring systems as streaming graphs. It highlights the importance of clear code, scalability, and efficient message scheduling, and compares 'push' and 'pull' approaches to message handling. It concludes with a proposed lightweight design that uses asyncio and Kafka to manage complex data streams.
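
To make the 'push' versus 'pull' distinction concrete, here is a minimal sketch using only the standard-library `asyncio` module. It is not the design proposed in the presentation: the function names (`source`, `double`, `run_pull`, `run_push`) are hypothetical, an in-memory list stands in for a Kafka topic, and a bounded `asyncio.Queue` is just one assumed way to model a push-style edge between two nodes of a streaming graph.

```python
import asyncio
from typing import AsyncIterator, List


# Pull style: each stage is an async generator, and the final consumer
# drives the whole graph by requesting the next item.
async def source(items: List[int]) -> AsyncIterator[int]:
    for item in items:
        await asyncio.sleep(0)  # yield to the event loop; stands in for a network read
        yield item


async def double(stream: AsyncIterator[int]) -> AsyncIterator[int]:
    async for value in stream:
        yield value * 2


async def run_pull(items: List[int]) -> List[int]:
    return [value async for value in double(source(items))]


# Push style: the producer sends items downstream as soon as it has them.
# The bounded queue makes the producer wait when the consumer falls behind.
async def run_push(items: List[int]) -> List[int]:
    queue: asyncio.Queue = asyncio.Queue(maxsize=2)
    results: List[int] = []
    done = object()  # sentinel marking the end of the stream

    async def producer() -> None:
        for item in items:
            await queue.put(item)  # suspends while the queue is full
        await queue.put(done)

    async def consumer() -> None:
        while True:
            item = await queue.get()
            if item is done:
                break
            results.append(item * 2)

    await asyncio.gather(producer(), consumer())
    return results


if __name__ == "__main__":
    print(asyncio.run(run_pull([1, 2, 3])))
    print(asyncio.run(run_push([1, 2, 3])))
```

In the pull version nothing is read until the consumer asks for it, so scheduling is implicit in the iteration. In the push version the producer sets the pace, and the queue size is the only thing limiting how far ahead it can run.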