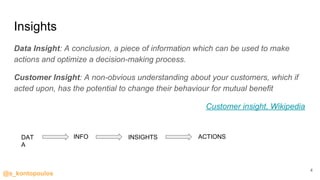

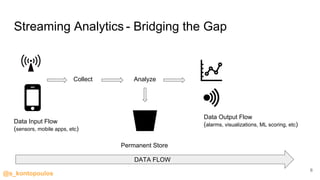

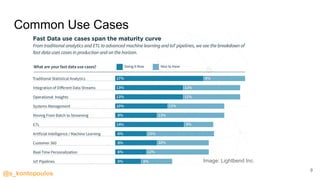

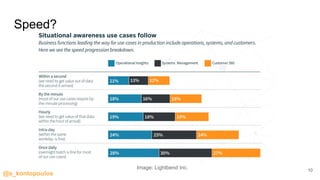

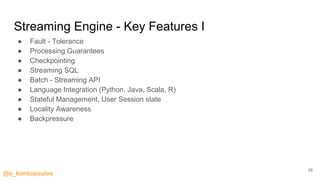

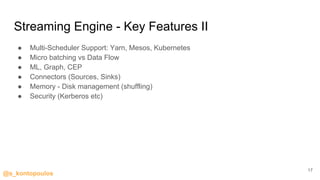

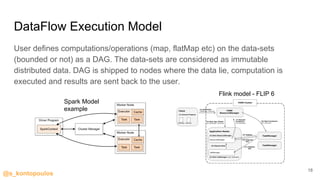

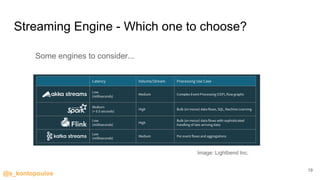

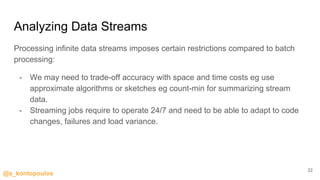

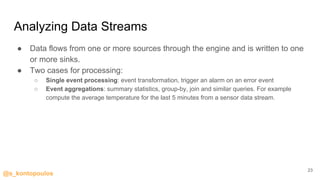

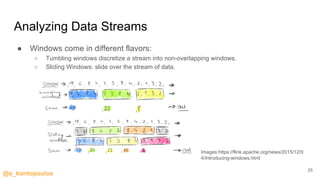

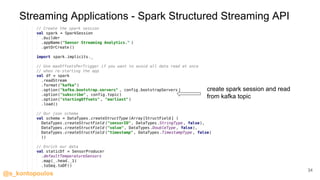

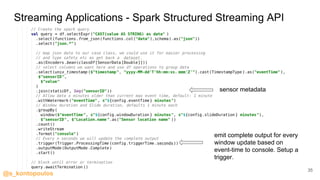

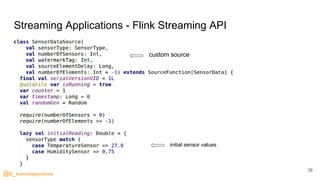

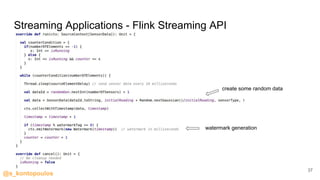

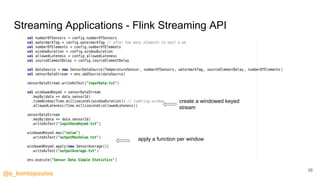

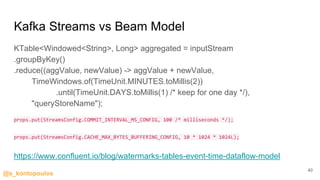

This document provides an overview of streaming analytics: definitions, common use cases, and key concepts such as streaming engines, processing models, and guarantees. It also gives examples of analyzing data streams with the Apache Spark Structured Streaming, Apache Flink, and Kafka Streams APIs, with code snippets that demonstrate windowing, triggers, and event-time processing.
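To make the ideas of windowing, triggers, and event time concrete, here is a minimal, engine-agnostic sketch in plain Python. It is not the Spark, Flink, or Kafka Streams API. The function name `tumbling_window_counts`, its parameters, and the simple watermark rule (largest event time seen minus `allowed_lateness`) are assumptions made for illustration only. Events are assigned to fixed-size (tumbling) windows by their event time rather than their arrival order, and a window is emitted (the "trigger") once the watermark passes the end of that window.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_size, allowed_lateness):
    """Count events per key in tumbling event-time windows.

    events: iterable of (event_time, key) tuples in ARRIVAL order,
            which may differ from event-time order.
    Returns (results, dropped), where results is a list of
    (window_start, window_end, {key: count}) in emission order and
    dropped is the list of events that arrived after their window closed.
    """
    open_windows = defaultdict(lambda: defaultdict(int))  # start -> key -> count
    results = []
    dropped = []
    watermark = float("-inf")  # hypothetical rule: max event time - allowed_lateness

    for event_time, key in events:
        window_start = (event_time // window_size) * window_size
        window_end = window_start + window_size

        # An event is too late if its window has already been emitted.
        if window_end <= watermark:
            dropped.append((event_time, key))
            continue

        open_windows[window_start][key] += 1
        watermark = max(watermark, event_time - allowed_lateness)

        # Trigger: emit every open window whose end is at or before the watermark.
        ready = sorted(s for s in open_windows if s + window_size <= watermark)
        for start in ready:
            counts = open_windows.pop(start)
            results.append((start, start + window_size, dict(counts)))

    # End of input: flush whatever is still open.
    for start in sorted(open_windows):
        results.append((start, start + window_size, dict(open_windows[start])))

    return results, dropped
```

With a window size of 10 and an allowed lateness of 5, an event with event time 4 that arrives after an event with event time 12 is still counted in the window [0, 10), because the watermark (12 - 5 = 7) has not yet passed that window's end. An event with event time 8 that arrives after an event with event time 25 is dropped, because the watermark (20) has already closed the window. Production engines apply the same idea with their own watermark and trigger semantics, which differ in detail from this sketch.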