Spark Streaming provides an easier API for streaming data than Storm, replacing Storm's spouts and bolts with Akka actors. It integrates better with Hadoop and makes time a core part of its API. This document provides instructions for setting up Spark Streaming projects using sbt or Maven and includes a demo reading from Kafka and processing a Twitter stream.
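
For the sbt route, a minimal `build.sbt` might look like the sketch below. The project name and the Scala and Spark version numbers are assumptions chosen for illustration, not values taken from the slides; match them to the Spark release you actually run.

```scala
// build.sbt: a minimal sketch; the name and all version numbers are assumptions
name := "spark-streaming-demo"

scalaVersion := "2.10.4"

libraryDependencies ++= Seq(
  "org.apache.spark" %% "spark-streaming"         % "1.0.0",
  "org.apache.spark" %% "spark-streaming-kafka"   % "1.0.0", // Kafka receiver (KafkaUtils)
  "org.apache.spark" %% "spark-streaming-twitter" % "1.0.0"  // Twitter receiver (TwitterUtils)
)
```

The Maven equivalent declares the same three artifacts under the `org.apache.spark` group ID, with the Scala binary version appended to each artifact ID (for example `spark-streaming_2.10`).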

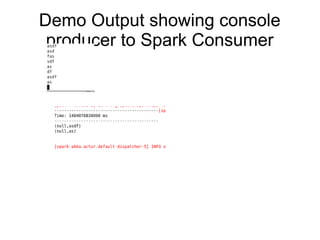

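![Producer (slide 9)](https://image.slidesharecdn.com/sparkstreamingintro-140629165356-phpapp01/85/Spark-Streaming-Info-9-320.jpg)

Producer

Use `awaitTermination()` to keep the application running, so that you can see what you enter into the producer. Start with one receiver:

    import org.apache.spark.streaming.{Seconds, StreamingContext}
    import org.apache.spark.streaming.kafka.KafkaUtils

    // local[2]: one thread for the receiver, one for processing; 1-second batches
    val stream = new StreamingContext("local[2]", "TestObject", Seconds(1))

    // Arguments: context, ZooKeeper address, consumer group ID, topic -> consumer threads
    val kafkaMessages =
      KafkaUtils.createStream(stream, "localhost:2181", "1", Map("testtopic" -> 1))

To read in parallel, create five receivers instead. Each `createStream` call starts one receiver, and each receiver occupies a thread, so raise the master setting accordingly (for example `local[6]`):

    val kafkaInputs = (1 to 5).map { _ =>
      KafkaUtils.createStream(stream, "localhost:2181", "1", Map("testtopic" -> 1))
    }

Then print each batch and start the context:

    kafkaMessages.print()
    stream.start()
    stream.awaitTermination()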
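
The slide creates `kafkaInputs` but never consumes it. One way to use the five streams, sketched here under stated assumptions, is to merge them with `StreamingContext.union` before printing. The object name `KafkaUnionDemo` and the helper `masterFor` are hypothetical names introduced for this example; the ZooKeeper address, group ID, and topic are the ones from the slide.

```scala
import org.apache.spark.streaming.{Seconds, StreamingContext}
import org.apache.spark.streaming.kafka.KafkaUtils

object KafkaUnionDemo {
  // Hypothetical helper: each receiver occupies one thread,
  // so reserve one extra thread for processing the batches.
  def masterFor(numReceivers: Int): String = s"local[${numReceivers + 1}]"

  def main(args: Array[String]): Unit = {
    val numReceivers = 5
    val stream = new StreamingContext(masterFor(numReceivers), "TestObject", Seconds(1))

    // One receiver per createStream call, all in the same consumer group.
    val kafkaInputs = (1 to numReceivers).map { _ =>
      KafkaUtils.createStream(stream, "localhost:2181", "1", Map("testtopic" -> 1))
    }

    // Merge the per-receiver streams into a single DStream of (key, message) pairs,
    // then keep only the message text.
    val kafkaMessages = stream.union(kafkaInputs).map(_._2)

    kafkaMessages.print()
    stream.start()
    stream.awaitTermination()
  }
}
```

Running this requires a ZooKeeper and Kafka instance reachable at `localhost:2181` with a topic named `testtopic`. Note that the receivers share the topic's partitions, so parallelism beyond the partition count brings no benefit.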

![Producer (slide 10)](https://image.slidesharecdn.com/sparkstreamingintro-140629165356-phpapp01/85/Spark-Streaming-Info-10-320.jpg)