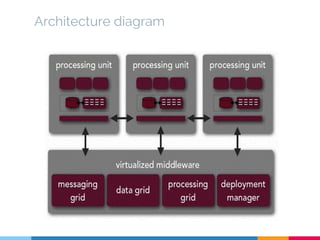

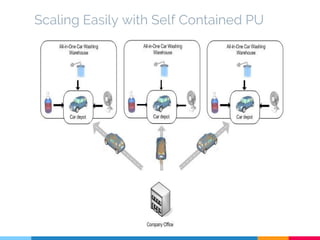

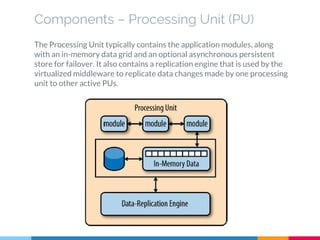

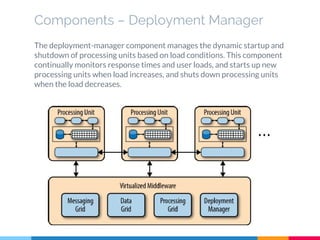

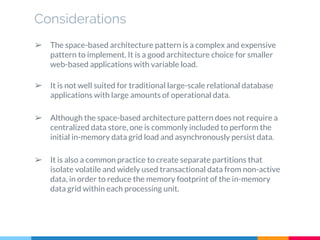

Space-Based Architecture is a software architecture pattern that aims for near-linear scalability by building stateful, high-performance applications from distributed processing units (PUs). Each PU bundles the business logic, data, and messaging it needs to process a business transaction end to end, so a request can be served from local memory instead of a shared central database. The application scales horizontally by adding more PUs; supporting middleware (an in-memory data grid, a messaging grid, and a deployment manager) replicates data changes between PUs, distributes the workload across them, and starts or stops units as load changes. The pattern works well for web applications with variable or unpredictable load, but it is complex to implement, and it is a poor fit for applications built around a large relational database or for datasets too large to hold in memory.
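The interaction between these parts can be illustrated with a minimal, single-process sketch. All names here (`ProcessingUnit`, `DataGrid`, `MessagingGrid`, `deposit`, the account key) are hypothetical and chosen only for illustration, and replication is done synchronously for simplicity; real data grid products typically replicate asynchronously over a network and also handle partitioning, conflicts, and failures.

```python
from dataclasses import dataclass, field


@dataclass
class ProcessingUnit:
    """Business logic plus a private in-memory replica of the data."""
    name: str
    store: dict = field(default_factory=dict)
    handled: int = 0

    def apply_replica(self, key, value):
        # Called by the data grid when any unit changes a value.
        self.store[key] = value

    def deposit(self, grid, account, amount):
        # The transaction reads only local memory, never a central database.
        balance = self.store.get(account, 0) + amount
        self.handled += 1
        grid.publish(account, balance)  # replicate to every unit, including this one
        return balance


class DataGrid:
    """Toy in-memory data grid: keeps every unit's replica in sync."""

    def __init__(self):
        self.units = []

    def register(self, unit):
        # A newly added unit is seeded from an existing replica.
        if self.units:
            unit.store.update(self.units[0].store)
        self.units.append(unit)

    def publish(self, key, value):
        for unit in self.units:
            unit.apply_replica(key, value)


class MessagingGrid:
    """Toy messaging grid: routes each request to the next unit in turn."""

    def __init__(self, data_grid):
        self.data_grid = data_grid
        self._next = 0

    def dispatch(self, account, amount):
        units = self.data_grid.units
        unit = units[self._next % len(units)]  # round-robin over current units
        self._next += 1
        return unit.deposit(self.data_grid, account, amount)


grid = DataGrid()
router = MessagingGrid(grid)
for name in ("pu-1", "pu-2"):
    grid.register(ProcessingUnit(name))

router.dispatch("acct-42", 100)        # handled by pu-1
router.dispatch("acct-42", 50)         # handled by pu-2
grid.register(ProcessingUnit("pu-3"))  # scale out under load
router.dispatch("acct-42", 25)         # handled by pu-3

for unit in grid.units:
    print(unit.name, unit.store, unit.handled)
```

In this sketch, adding `pu-3` requires no change to the business logic: the new unit receives a copy of the current state and immediately starts taking requests. The same sketch also hints at the pattern's limits, since every unit holds a full replica and so the whole dataset must fit in each unit's memory.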