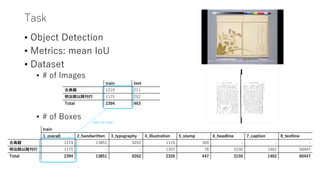

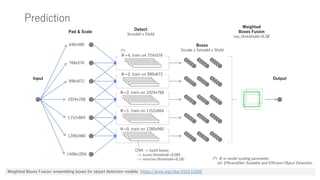

This document summarizes the 1st place solution for detecting objects in images of classical and modern Japanese books for the National Diet Library of Japan. The solution combines an EfficientNet backbone and BiFPN (as in EfficientDet) with CenterNet heads to detect 7 categories of objects. It is trained on over 2000 images using focal loss, L1 loss, and data augmentation. Multiple models at different scales are ensembled using weighted boxes fusion (WBF), reaching mean IoU scores of 0.82340 on the public leaderboard and 0.84978 on the private leaderboard.

![CNN Architecture](https://image.slidesharecdn.com/ndl-1st-200308022827/85/SIGNATE-1st-place-solution-3-320.jpg)

Pipeline: image `[b, 3, h, w]` → EfficientNet (ImageNet pretrained) → BiFPN → output heads.

CenterNet heads:

- keypoint heatmap (category 2~8): `[b, 7, h/4, w/4]`
- box size (*): `[b, 2, h/4, w/4]`
- local offset: `[b, 2, h/4, w/4]`

Margin Regression head:

- margin (*) (1_overall): `[b, 4]`

(*) normalized by input image width

Category mask `[b, 7, 1, 1]`, multiplied (×) with the keypoint heatmap:

- Classical books (古典籍): `[1, 1, 1, 1, 0, 0, 0]`
- Published in the Meiji era or later (明治期以降刊行): `[0, 0, 1, 1, 1, 1, 1]`

References:

- EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks https://arxiv.org/abs/1905.11946
- EfficientDet: Scalable and Efficient Object Detection https://arxiv.org/abs/1911.09070
- Objects as Points https://arxiv.org/abs/1904.07850
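The category mask and the CenterNet outputs on the architecture slide can be illustrated with a small sketch. It is not the author's code: the names `CATEGORY_MASKS`, `mask_scores`, and `decode_box` are hypothetical, the keys `"classical"` and `"modern"` are shorthand for the two book types, and the decoding convention (offset measured in heatmap cells, box size given as width and height normalized by input image width) is an assumption based on the slide's footnote and the usual CenterNet formulation.

```python
# Masks copied from the slide; everything else here is an illustrative assumption.
CATEGORY_MASKS = {
    "classical": [1, 1, 1, 1, 0, 0, 0],  # 古典籍
    "modern": [0, 0, 1, 1, 1, 1, 1],     # 明治期以降刊行
}
STRIDE = 4  # heatmaps are produced at h/4 x w/4


def mask_scores(scores, book_type):
    """Zero out keypoint scores of categories excluded for this book type."""
    mask = CATEGORY_MASKS[book_type]
    return [s * m for s, m in zip(scores, mask)]


def decode_box(col, row, offset, size, image_width):
    """Turn one heatmap peak into a pixel box (x1, y1, x2, y2).

    Assumes `offset` is (dx, dy) in heatmap cells and `size` is (w, h)
    normalized by the input image width.
    """
    cx = (col + offset[0]) * STRIDE
    cy = (row + offset[1]) * STRIDE
    w = size[0] * image_width
    h = size[1] * image_width
    return (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)
```

For example, `mask_scores(scores, "classical")` keeps the first four of the seven per-category scores and zeroes the last three, so categories that the mask excludes for classical books can never produce a detection.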

![Training Parameters](https://image.slidesharecdn.com/ndl-1st-200308022827/85/SIGNATE-1st-place-solution-4-320.jpg)

- 5-fold CV
- Batch size: 6 (2 GPUs, GTX 1080 Ti × 2)
- Epochs: 104
- Optimizer: RAdam, LR = 1.2e-3 (×0.1 at epochs 64 and 96)
- Data augmentation:
  - Random crop & scale
  - Grayscale / thresholding (`cv2.adaptiveThreshold`)
  - Random rotate (±0.2 degrees)
  - Cutout (side edge)
- Loss function:
  - keypoint heatmap: Focal Loss (weight = 1.0)
  - box size: L1 Loss (weight = 5.0)
  - local offset: L1 Loss (weight = 0.2)
  - margin: L1 Loss (weight = 12.5)

Reference: On the Variance of the Adaptive Learning Rate and Beyond https://arxiv.org/abs/1908.03265
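How the four loss terms and the step schedule from the training slide fit together can be sketched as follows. This is a minimal illustration under two assumptions the slide does not confirm: that the terms are combined as a plain weighted sum, and that the ×0.1 decay takes effect from epochs 64 and 96 inclusive, counting epochs from 0. The names `LOSS_WEIGHTS`, `total_loss`, and `learning_rate` are hypothetical.

```python
# Weights copied from the slide; the weighted-sum combination is an assumption.
LOSS_WEIGHTS = {
    "heatmap": 1.0,    # Focal Loss on the keypoint heatmap
    "box_size": 5.0,   # L1 Loss
    "offset": 0.2,     # L1 Loss
    "margin": 12.5,    # L1 Loss
}


def total_loss(losses):
    """Combine per-head loss values (a dict of floats) into one scalar."""
    return sum(LOSS_WEIGHTS[name] * losses[name] for name in LOSS_WEIGHTS)


def learning_rate(epoch, base_lr=1.2e-3, milestones=(64, 96), gamma=0.1):
    """Step schedule: multiply the base LR by gamma once per milestone reached."""
    reached = sum(epoch >= m for m in milestones)
    return base_lr * gamma ** reached
```

Under these assumptions the learning rate is 1.2e-3 for epochs 0 to 63, about 1.2e-4 for epochs 64 to 95, and about 1.2e-5 for the remaining epochs up to 104.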

![Score History](https://image.slidesharecdn.com/ndl-1st-200308022827/85/SIGNATE-1st-place-solution-6-320.jpg)

| Configuration | Public | Private |
| --- | --- | --- |
| Single model (Φ=4), 5-fold CV, NMS ensemble | 0.79143 | 0.82140 |
| Single model (Φ=4), 5-fold CV, TTA (3 scales), NMS ensemble | 0.80315 | 0.82782 |
| 3 models (Φ=[0, 2, 4]), 5-fold CV, TTA (3 scales), NMS ensemble | 0.80468 | 0.82961 |
| 3 models (Φ=[0, 2, 4]), 5-fold CV, TTA (3 scales), WBF ensemble | 0.82226 | 0.84791 |
| 5 models (Φ=[0, 1, 2, 3, 4]), 5-fold CV, TTA (3 scales), WBF ensemble | 0.82340 | 0.84978 |
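The largest jump in the score history comes from switching the ensemble from NMS to WBF. NMS keeps the highest-scoring box and discards its overlapping neighbours, while weighted boxes fusion averages them. The sketch below is a simplified, single-class illustration of that idea, not the implementation used in the solution: the function names and the `iou_thr=0.5` default are assumptions, and the per-model confidence rescaling of the published WBF method is omitted.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    x1, y1 = max(a[0], b[0]), max(a[1], b[1])
    x2, y2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, x2 - x1) * max(0.0, y2 - y1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def fuse(cluster):
    """Confidence-weighted average box and mean score of (box, score) pairs."""
    total = sum(score for _, score in cluster)
    box = tuple(sum(b[i] * s for b, s in cluster) / total for i in range(4))
    return box, total / len(cluster)


def weighted_boxes_fusion(detections, iou_thr=0.5):
    """Greedily cluster detections by IoU, then fuse each cluster into one box."""
    clusters = []
    for box, score in sorted(detections, key=lambda d: d[1], reverse=True):
        for cluster in clusters:
            if iou(fuse(cluster)[0], box) > iou_thr:
                cluster.append((box, score))
                break
        else:
            # No existing cluster overlaps enough: start a new one.
            clusters.append([(box, score)])
    return [fuse(c) for c in clusters]
```

Given two overlapping predictions of the same object from different models, NMS would return the higher-scoring box unchanged, whereas this sketch returns a box whose coordinates lie between the two, pulled toward the more confident prediction.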