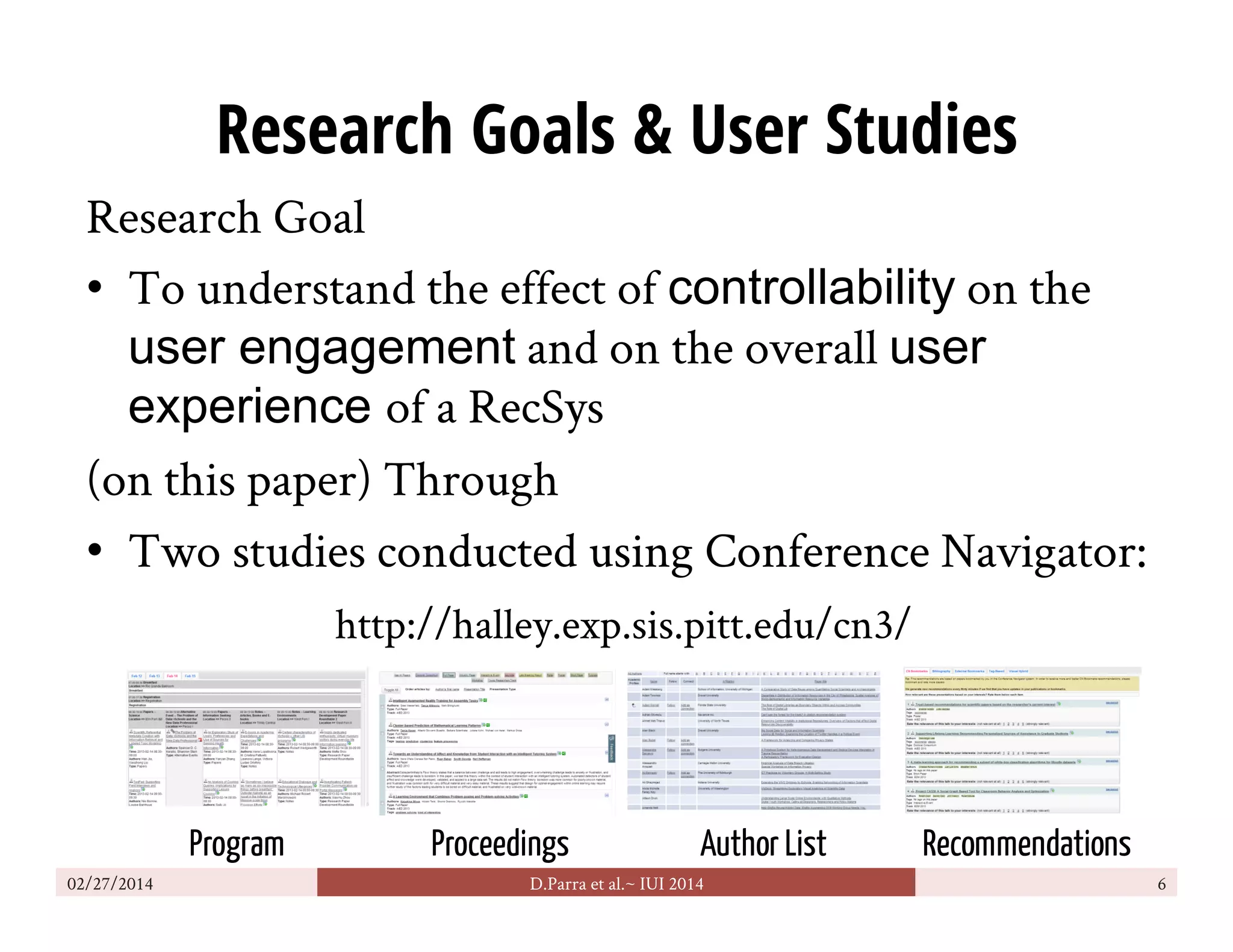

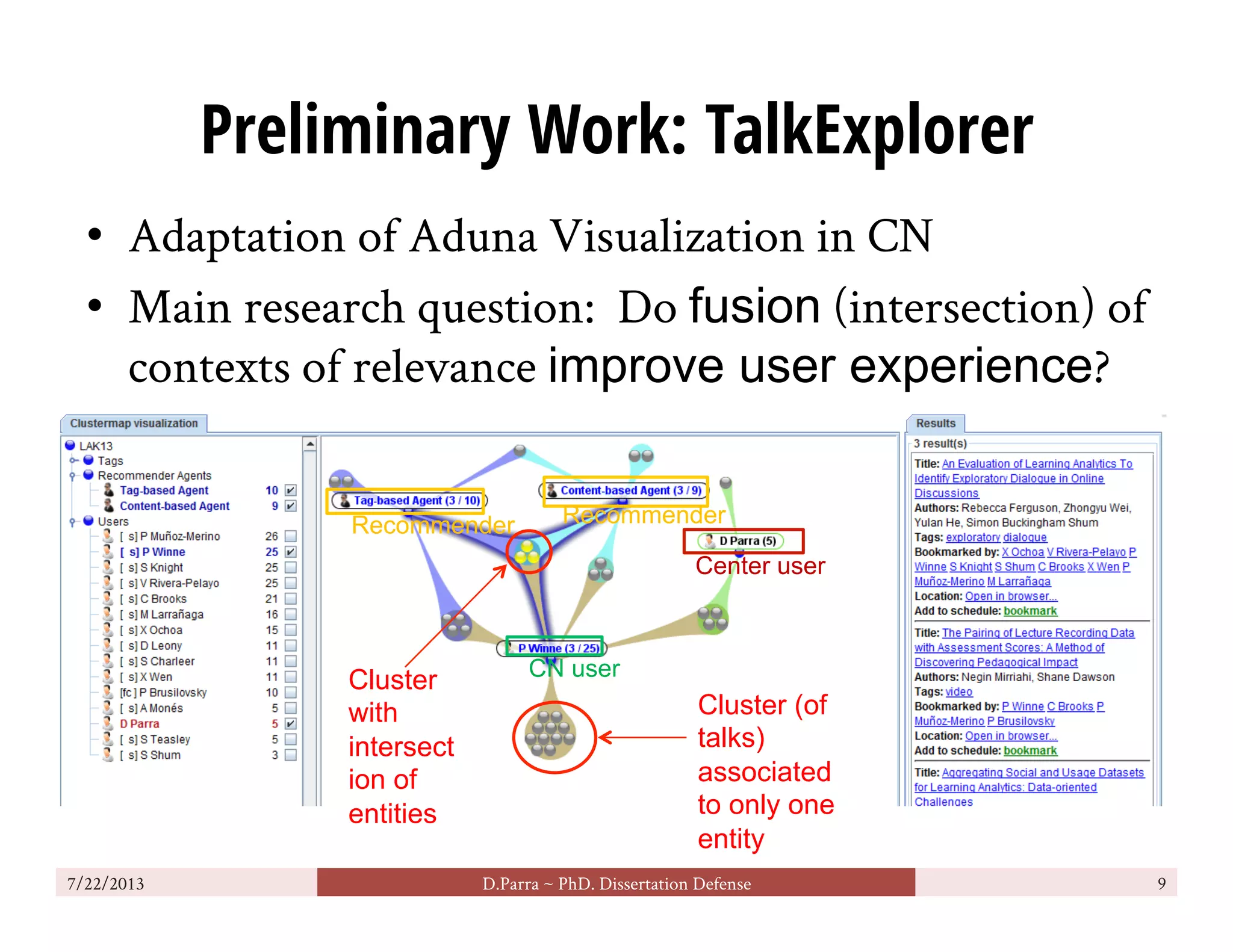

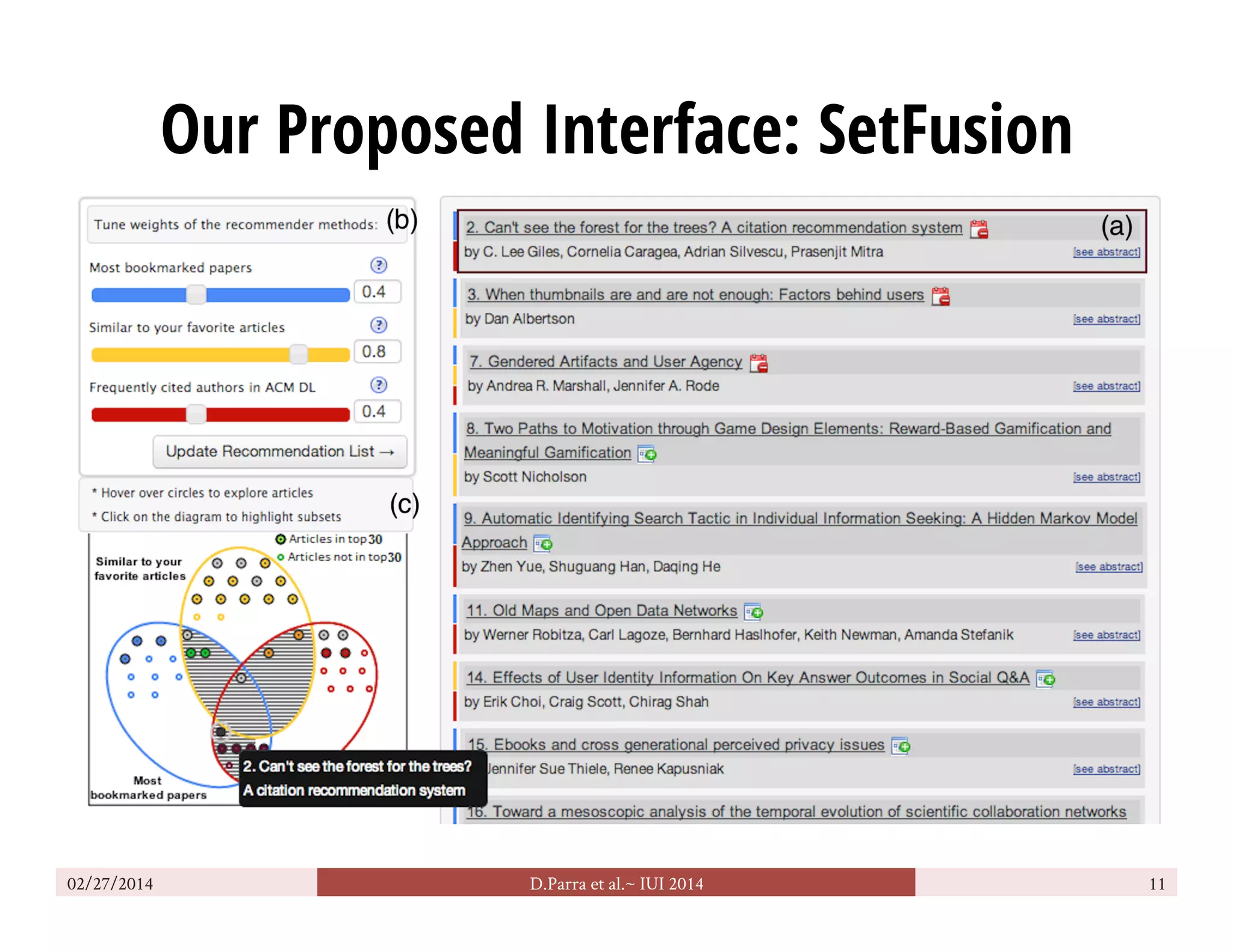

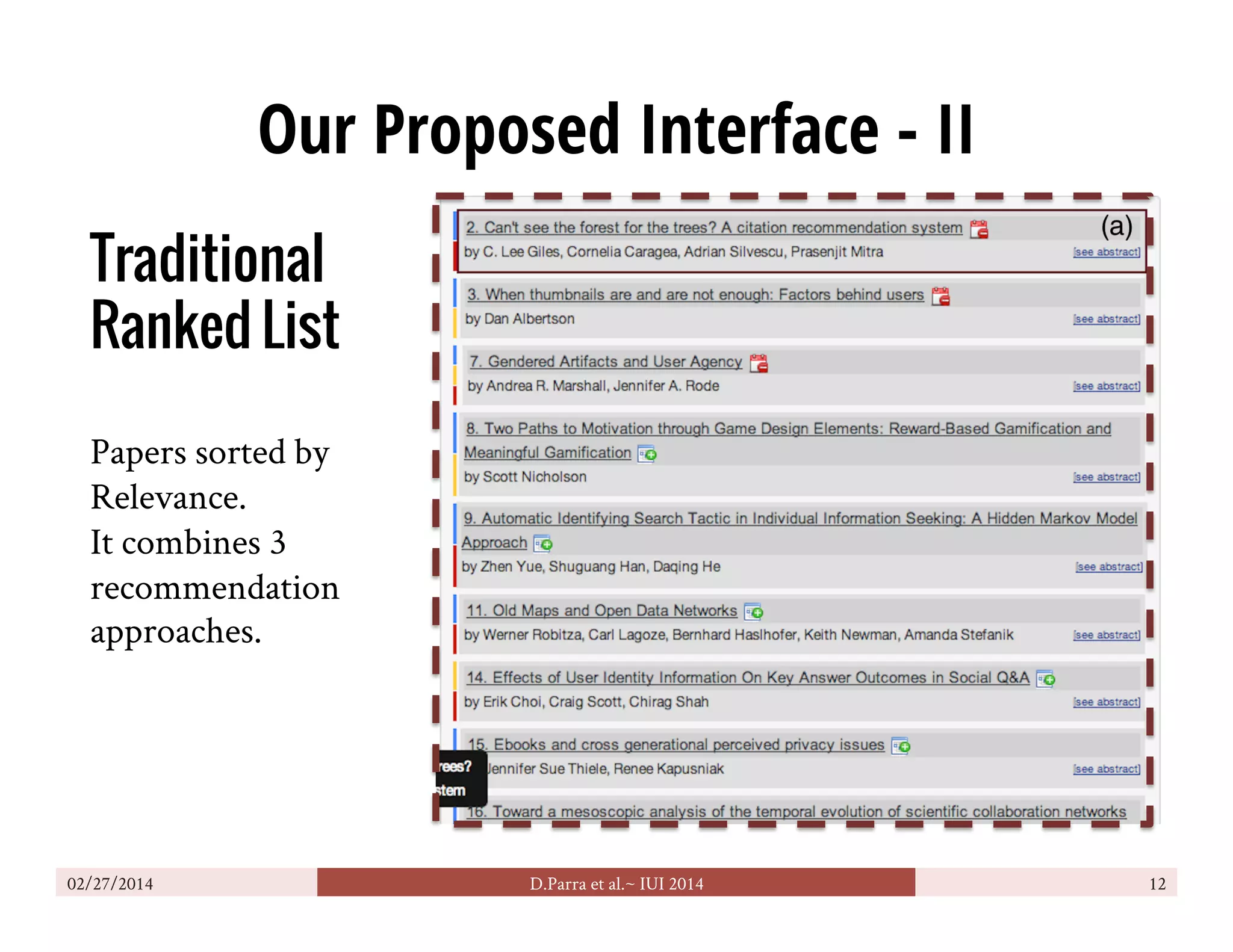

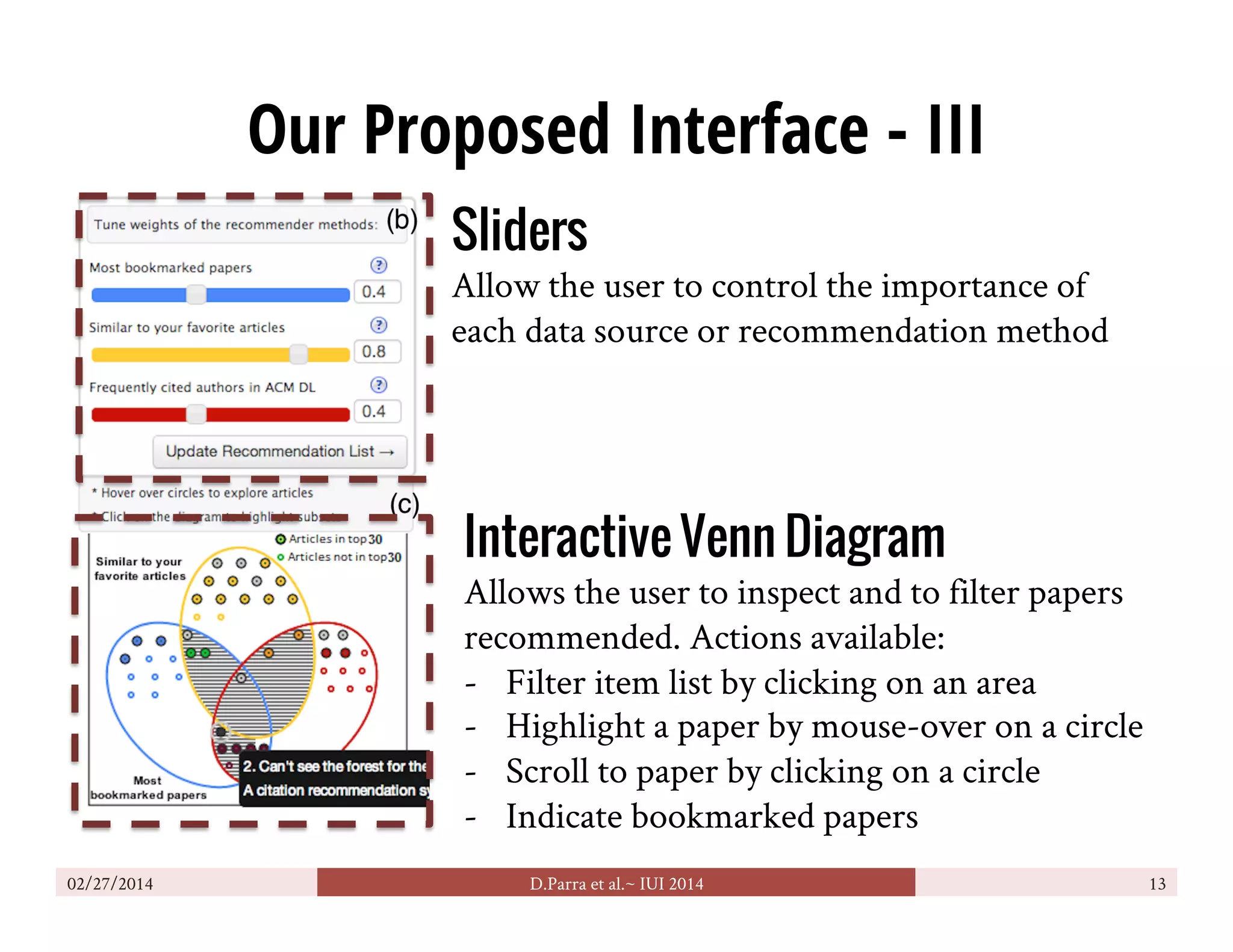

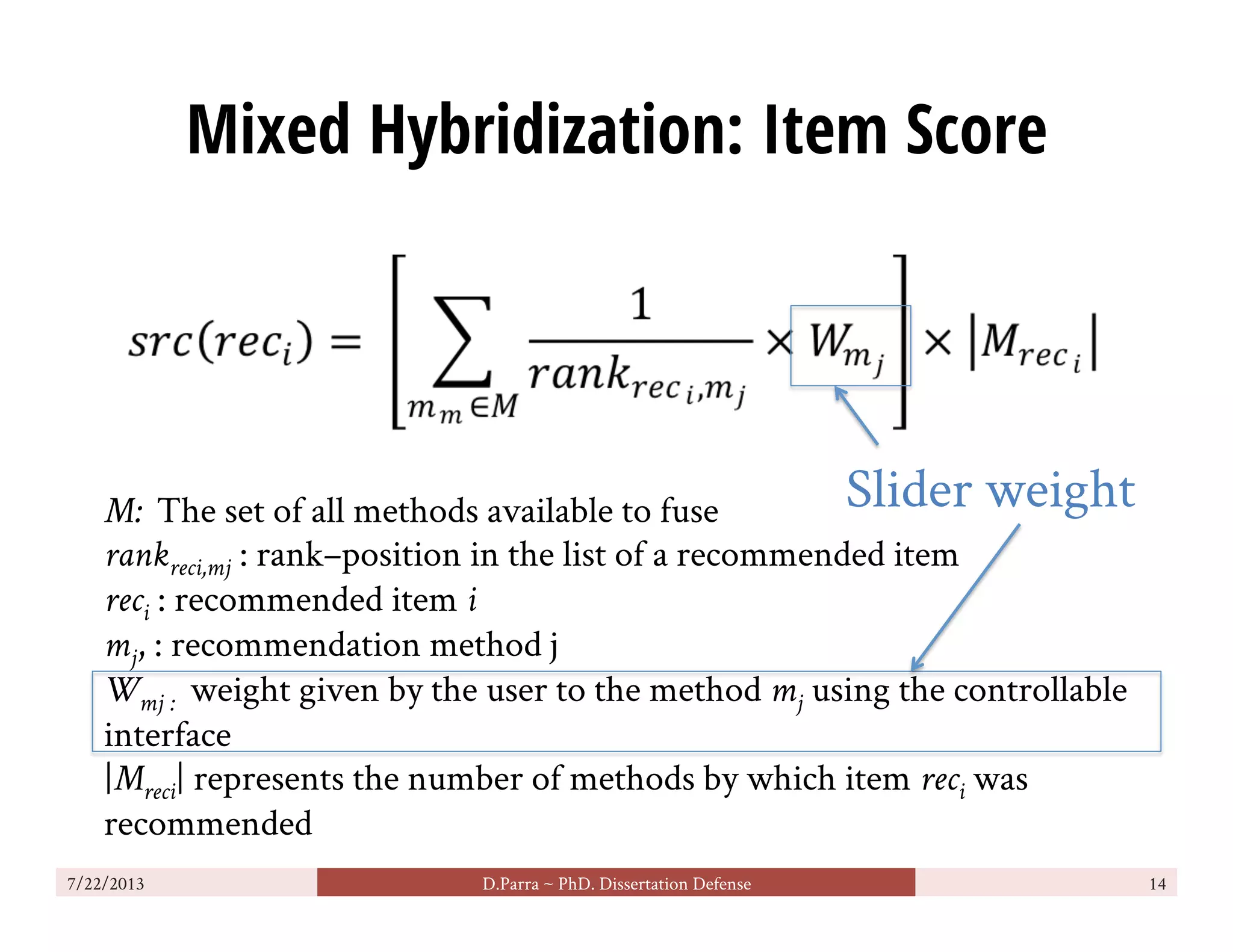

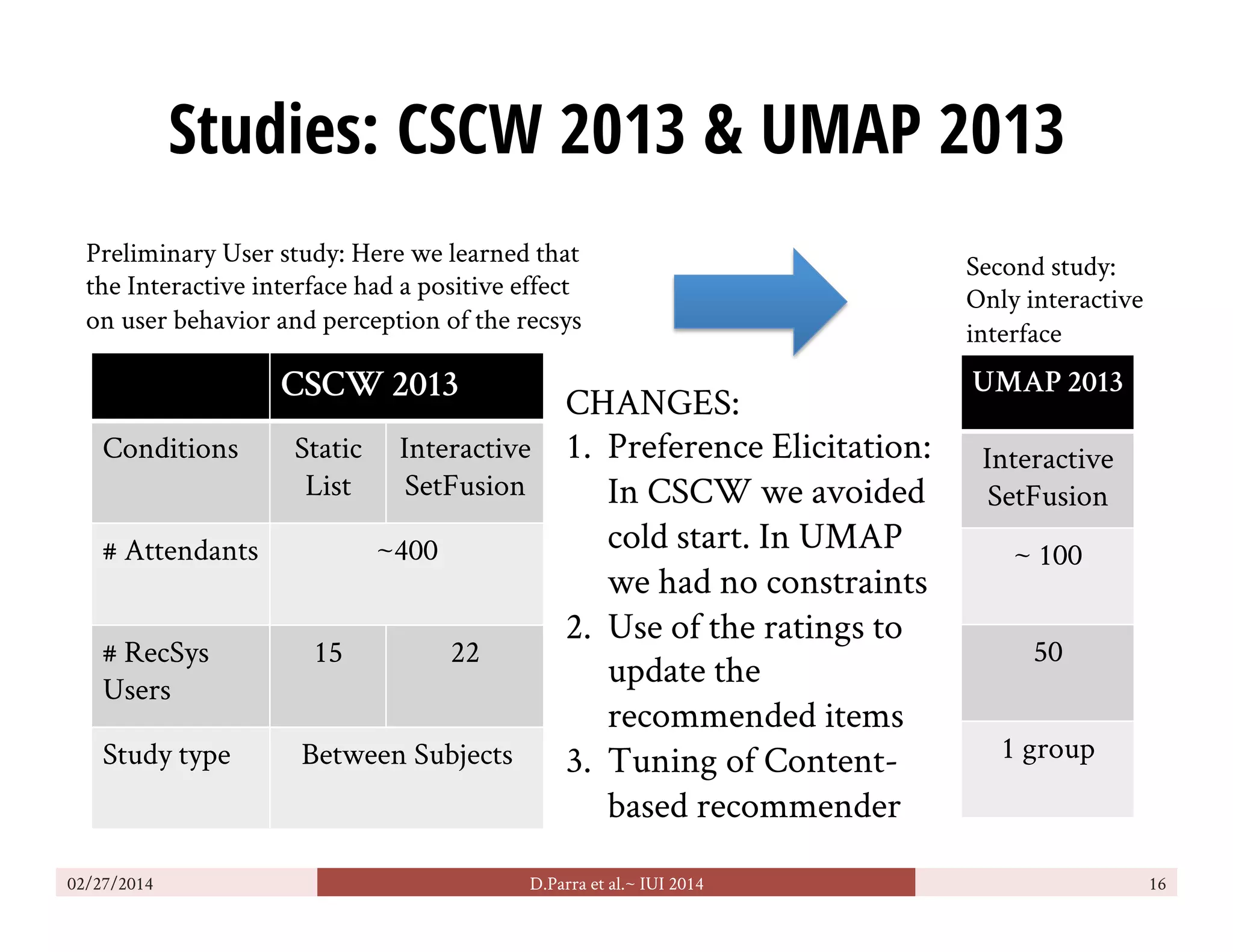

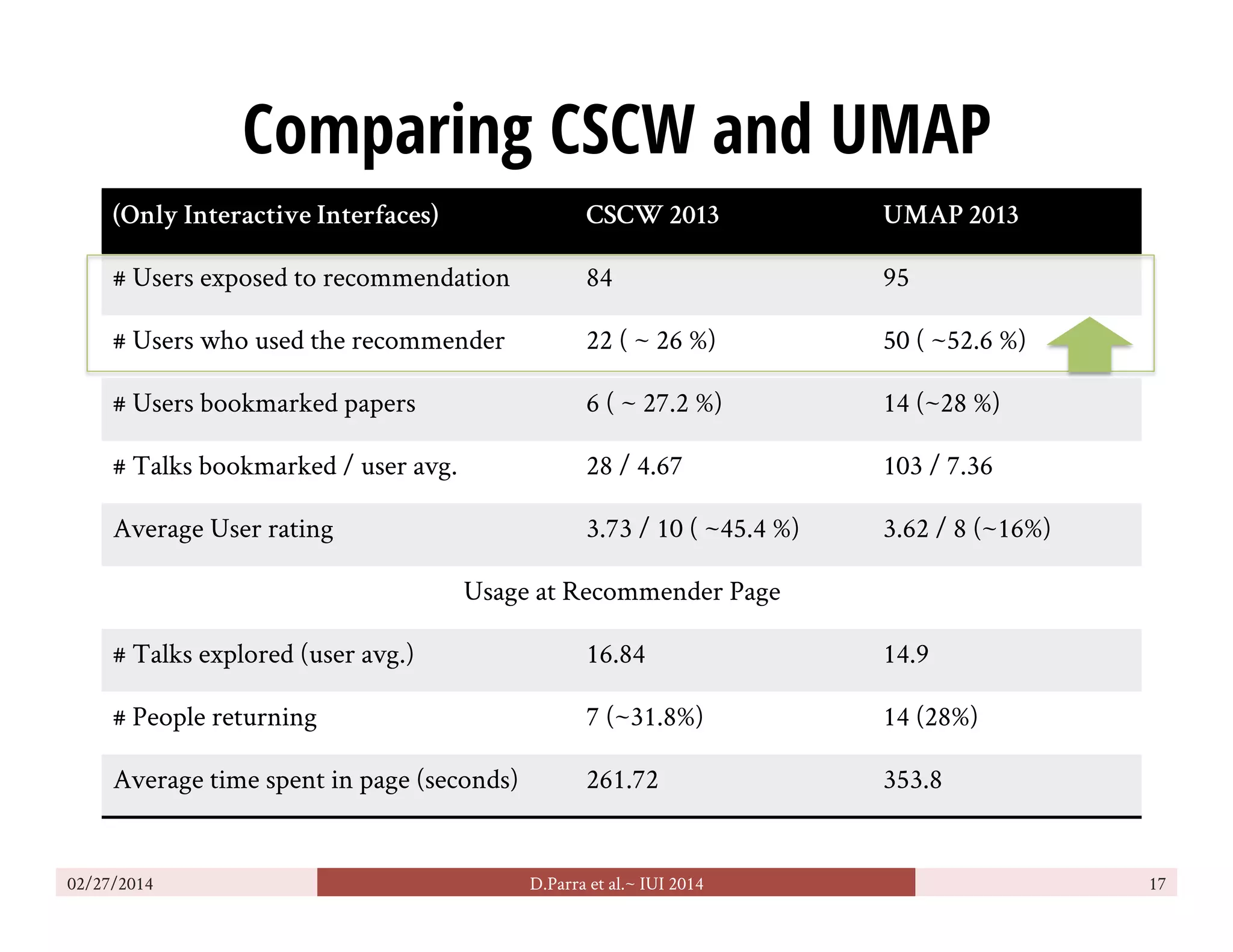

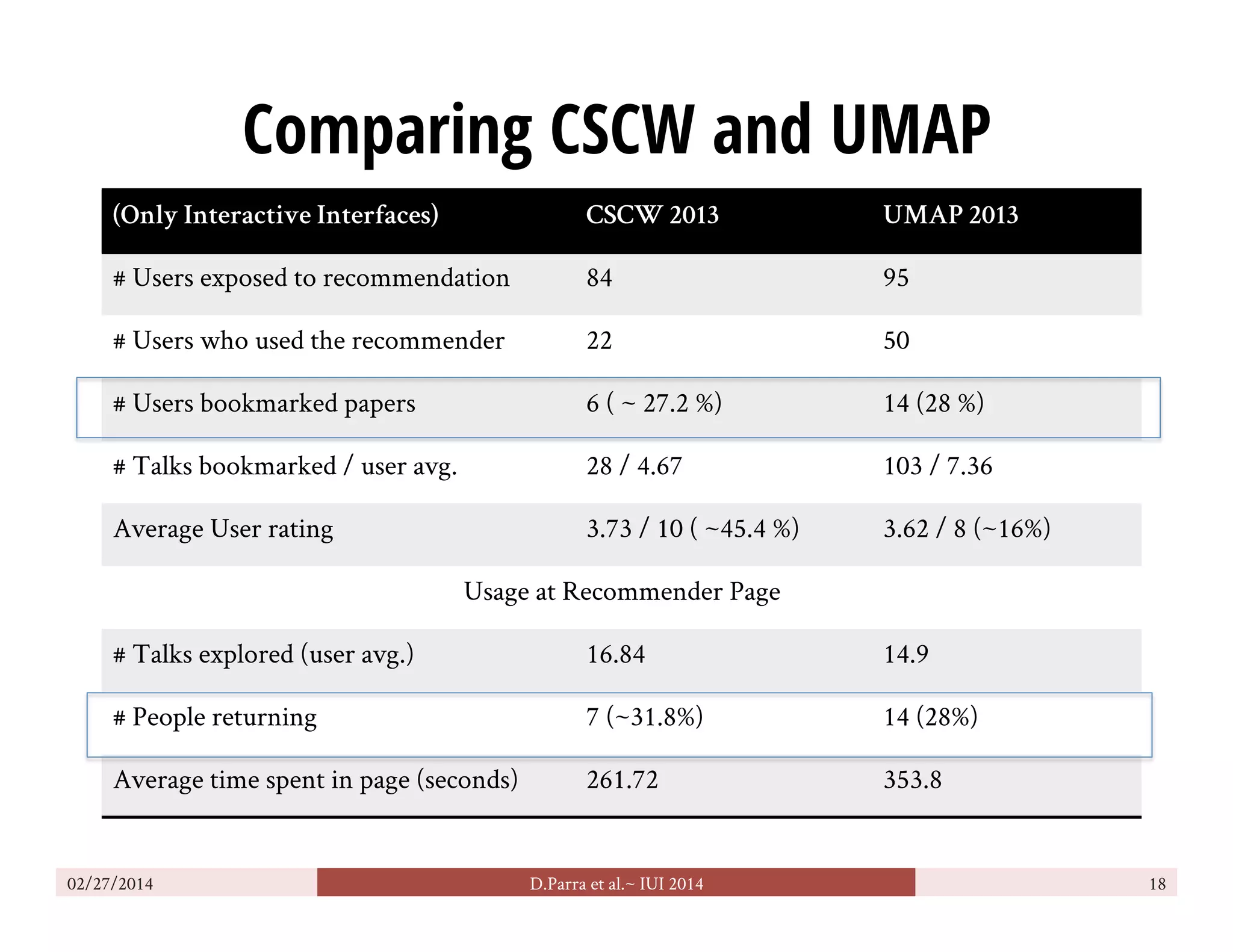

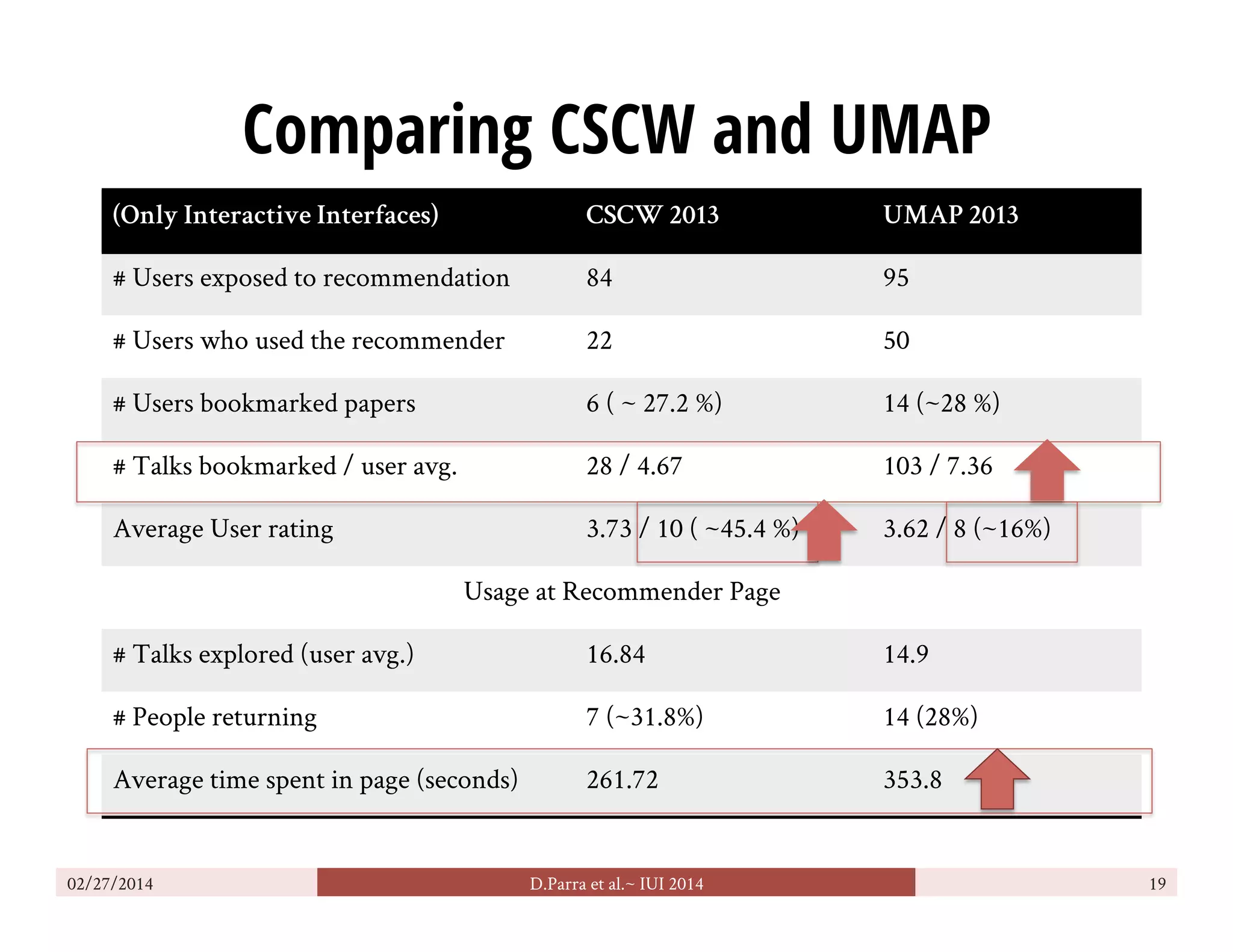

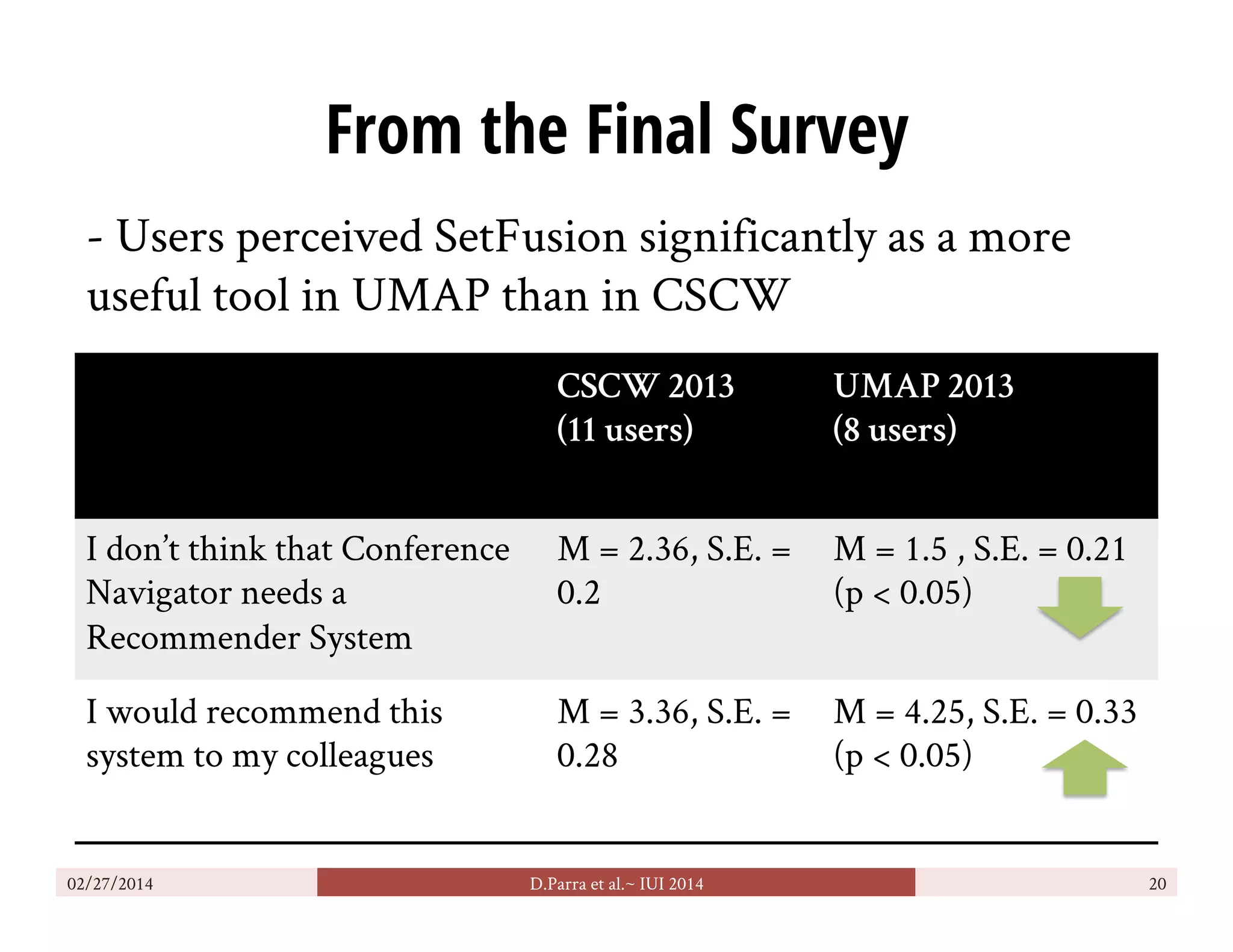

The document presents a user-driven approach to recommender systems that emphasizes user controllability and introduces a novel visualization method called SetFusion. User studies reported in the document indicate that this approach improves user engagement and perceived usefulness compared with traditional recommender systems. Future work aims to broaden the approach's applicability and to explore controls that go beyond a limited set of data sources.