Hai Lu presented on the Samza Portable Runner for Apache Beam. The key points are:

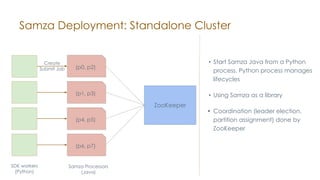

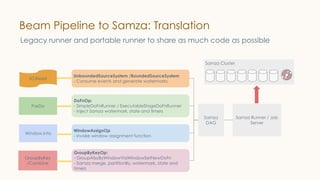

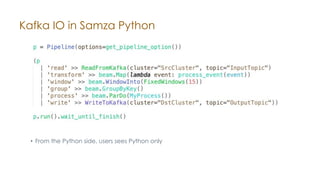

1) The Samza Portable Runner lets stream processing jobs be written in multiple languages, such as Python, by translating Beam pipelines for execution on the Samza engine.

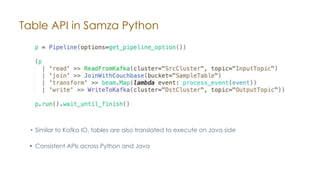

2) It provides a high-level Python SDK for building streaming applications on top of Beam's portability framework. Pipelines are translated from Python into the language-independent Beam representation.

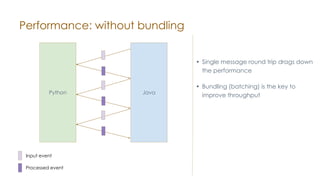

3) Performance is improved through batching/bundling messages between the Python and Java processes to reduce round trips. Initial tests showed throughput increasing with larger bundle sizes.
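To make the bundling idea concrete, here is a minimal sketch in plain Python. It is not the runner's actual code: the helper names `bundles` and `round_trips` are hypothetical, and the model simply assumes one cross-process call per bundle.

```python
import math
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def bundles(messages: Iterable[T], bundle_size: int) -> Iterator[List[T]]:
    """Group messages into lists of at most bundle_size items (hypothetical helper)."""
    if bundle_size < 1:
        raise ValueError("bundle_size must be at least 1")
    bundle: List[T] = []
    for message in messages:
        bundle.append(message)
        if len(bundle) == bundle_size:
            yield bundle
            bundle = []
    if bundle:
        # Flush the final, possibly smaller, bundle.
        yield bundle


def round_trips(num_messages: int, bundle_size: int) -> int:
    """Cross-process calls needed, assuming one call per bundle."""
    if bundle_size < 1:
        raise ValueError("bundle_size must be at least 1")
    return math.ceil(num_messages / bundle_size)


if __name__ == "__main__":
    for size in (1, 10, 100):
        print(size, round_trips(1000, size))
```

Under this simplified model, 1000 messages need 1000 calls at bundle size 1 and 10 calls at bundle size 100, which is the effect the talk attributes the throughput gain to. Real throughput also depends on serialization cost and latency requirements, which this sketch ignores.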

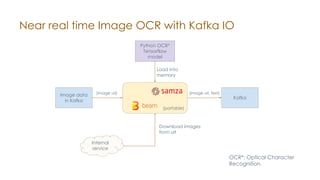

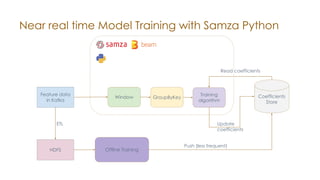

4) Example use cases demonstrated near real-time image OCR, model training,

![Stream Table Join in Samza Python](https://image.slidesharecdn.com/samzaportablerunnerforbeam-191024210240/85/Samza-portable-runner-for-beam-18-320.jpg)

Slide: Stream Table Join in Samza Python. Keyed events such as (K1, V1) from the Kafka input pass through StreamTableJoinOp, which reads entries such as (K1, Entry 1) and (K2, Entry 2) from a remote or local table; the PTransform output is (K1, [V1, Entry1]).

• Table read is provided as stream-table join.

• Useful for enriching the events to be processed
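The join on this slide can be sketched in plain Python as follows. This is an illustration only, not the Samza or Beam API: `stream_table_join` is a hypothetical function, the table is modeled as an in-memory dict, and dropping events with no matching table entry (inner-join behavior) is an assumption the slide does not confirm.

```python
from typing import Any, Dict, Iterable, Iterator, List, Tuple


def stream_table_join(
    stream: Iterable[Tuple[str, Any]],
    table: Dict[str, Any],
) -> Iterator[Tuple[str, List[Any]]]:
    """Enrich each (key, value) event with the table entry stored under the same key."""
    for key, value in stream:
        entry = table.get(key)
        if entry is None:
            # Assumption: events without a table match are dropped.
            continue
        yield key, [value, entry]


if __name__ == "__main__":
    table = {"K1": "Entry1", "K2": "Entry2"}
    events = [("K1", "V1"), ("K3", "V3")]
    for joined in stream_table_join(events, table):
        print(joined)
```

With the sample data, only the K1 event finds a match, so the output pairs the stream value with the table entry in the same (K1, [V1, Entry1]) shape shown on the slide.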