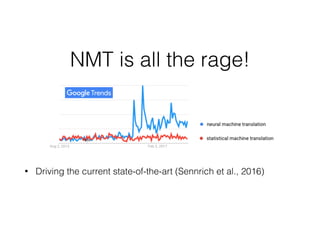

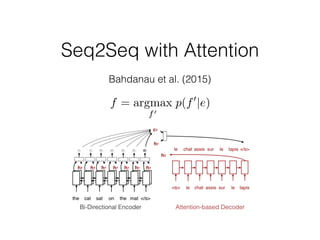

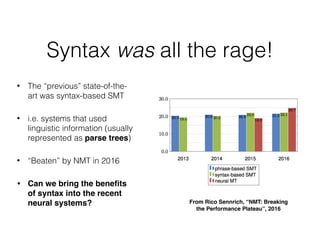

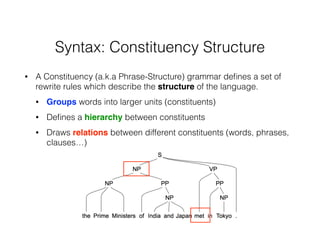

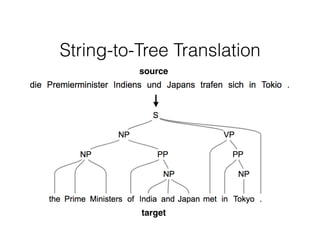

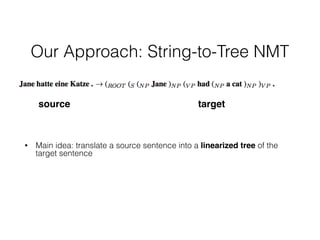

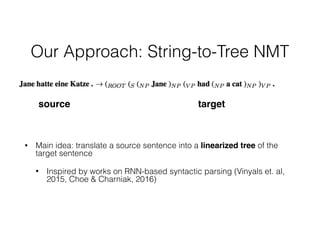

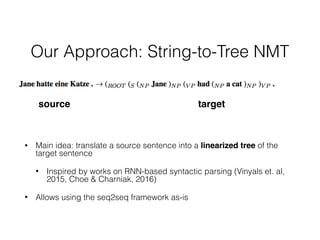

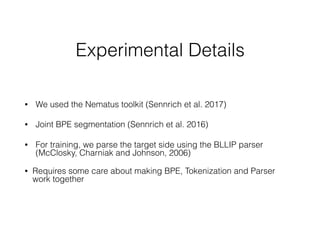

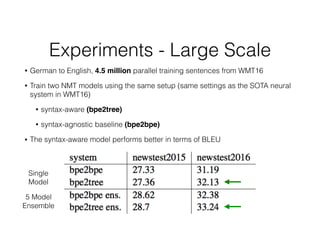

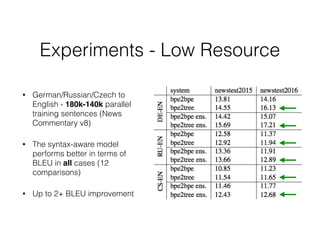

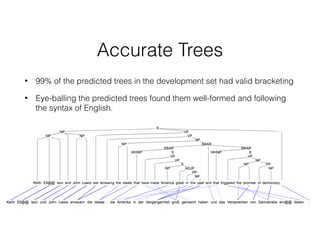

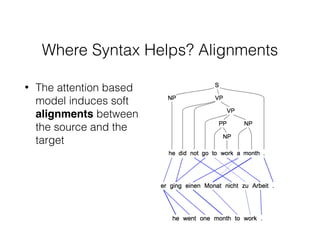

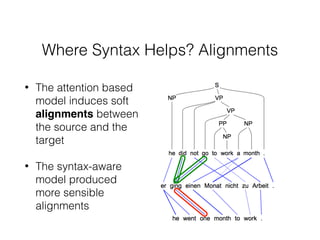

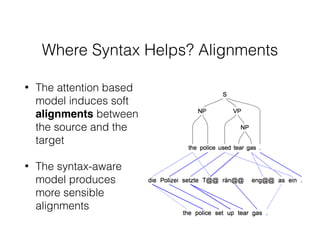

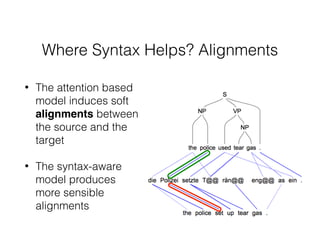

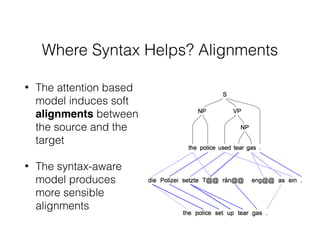

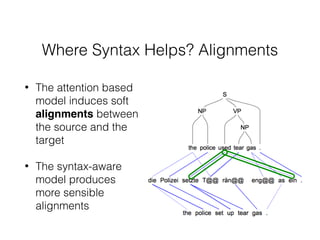

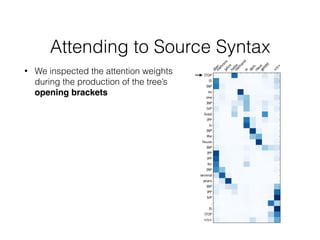

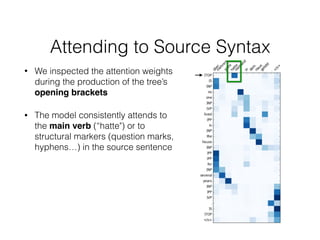

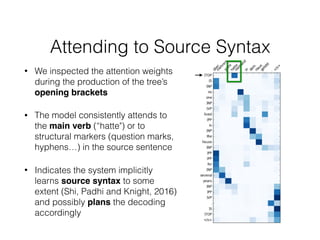

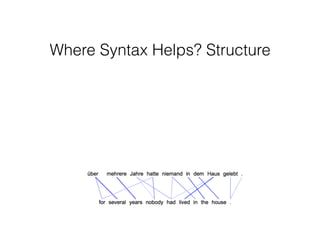

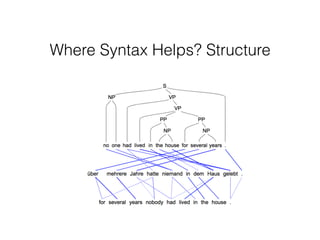

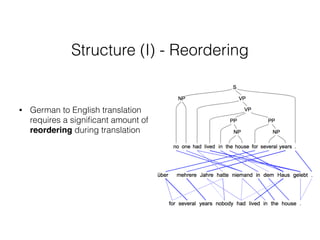

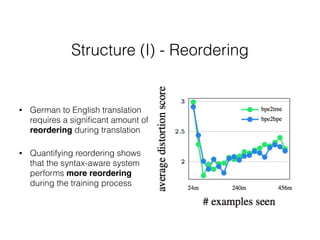

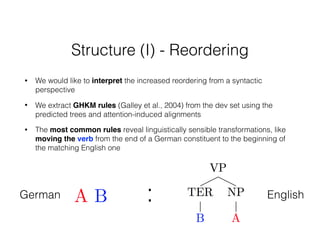

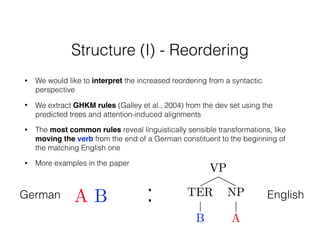

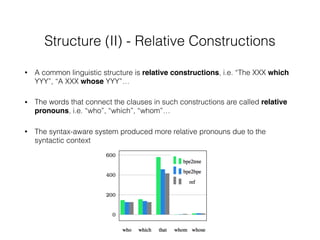

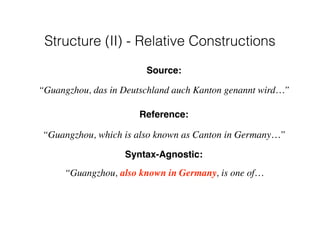

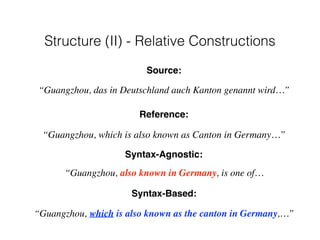

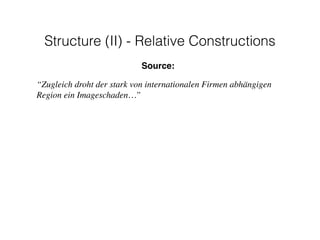

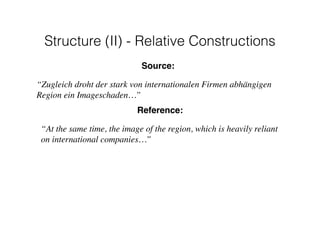

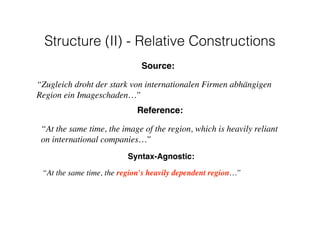

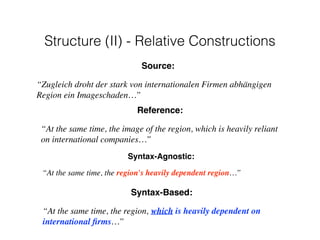

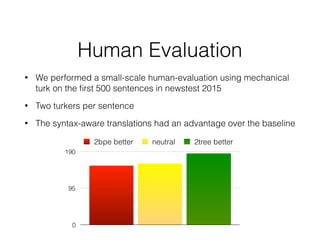

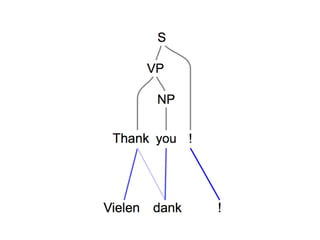

This presentation describes a string-to-tree approach to neural machine translation (NMT): instead of emitting a plain target sentence, the model translates each source sentence into a linearized tree that represents the syntax of the target sentence. In experiments on German-English and other language pairs, the syntax-aware model outperforms a syntax-agnostic baseline in terms of BLEU, producing more accurate trees and sensible alignments. The syntax-aware model is also shown to learn source syntax and to perform linguistically motivated reordering, such as moving verbs when translating German sentences.
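To make the idea of a linearized target tree concrete, here is a minimal sketch of how a constituency tree might be flattened into a token sequence that a sequence-to-sequence decoder could emit. The nested-tuple tree representation, the labeled bracket tokens (`(NP`, `)NP`), and the helper names `linearize` and `surface_words` are assumptions made for illustration; the summary above does not specify the exact format the presenters used.

```python
from typing import List, Union

# Hypothetical tree representation: a leaf is a word (str);
# an internal node is a tuple (label, child, child, ...).
Tree = Union[str, tuple]


def linearize(tree: Tree) -> List[str]:
    """Flatten a tree into bracket tokens and words, depth-first, left to right."""
    if isinstance(tree, str):
        return [tree]
    label, *children = tree
    tokens = ["(" + label]            # opening bracket carries the node label
    for child in children:
        tokens.extend(linearize(child))
    tokens.append(")" + label)        # closing bracket repeats the label
    return tokens


def surface_words(tokens: List[str]) -> List[str]:
    """Drop bracket tokens to recover the plain sentence, e.g. for BLEU scoring.

    Simplification: any token starting with '(' or ')' is treated as a bracket,
    so literal parentheses in the text would need escaping first.
    """
    return [t for t in tokens if not t.startswith(("(", ")"))]


# Toy example tree (invented for illustration).
tree = ("S", ("NP", "Jane"), ("VP", "had", ("NP", "a", "cat")), ".")
target = linearize(tree)
print(" ".join(target))                 # (S (NP Jane )NP (VP had (NP a cat )NP )VP . )S
print(" ".join(surface_words(target)))  # Jane had a cat .
```

Under this scheme the decoder's vocabulary contains both words and bracket tokens, and stripping the brackets from the output yields an ordinary sentence that can be compared against a syntax-agnostic baseline.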