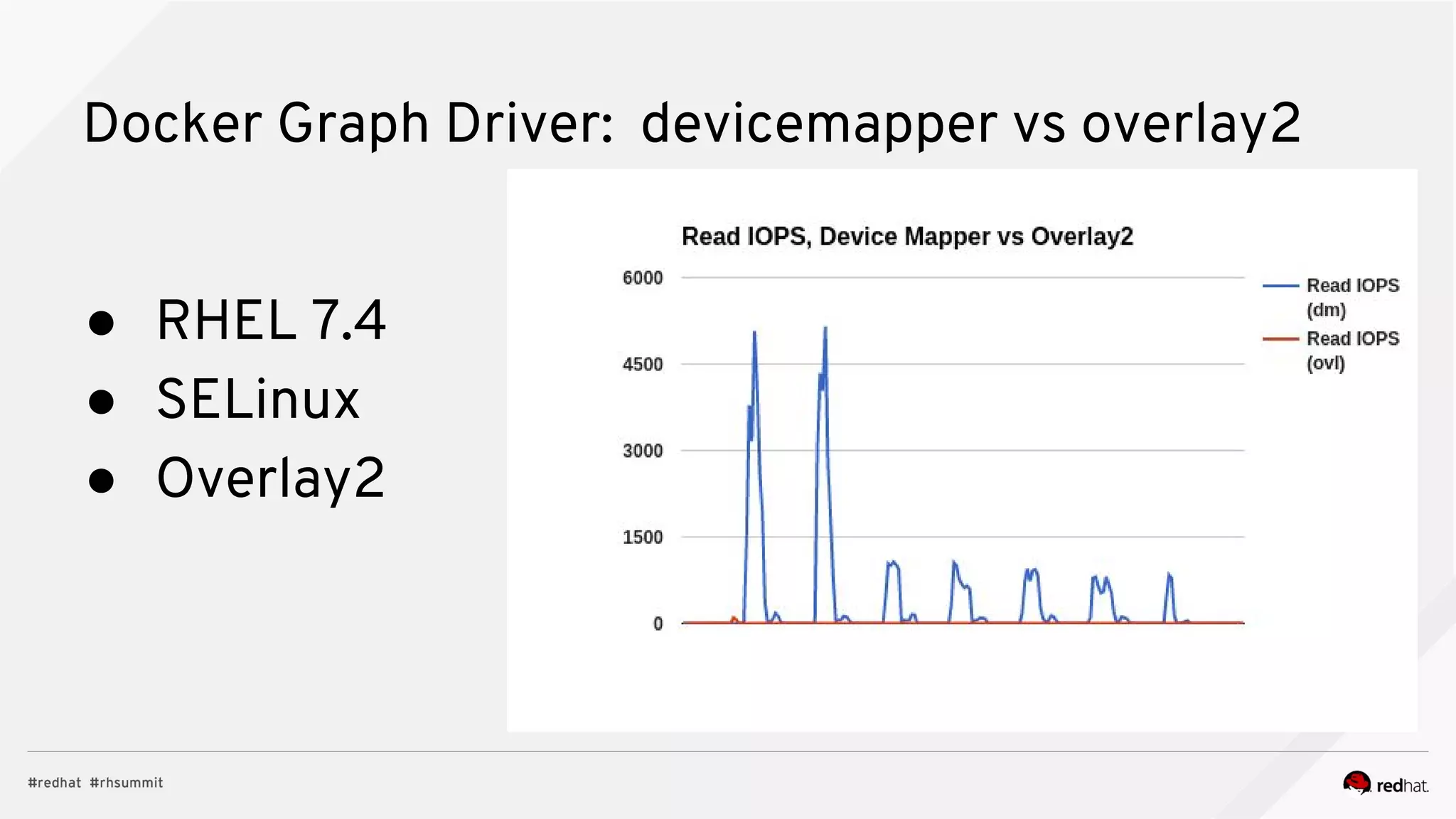

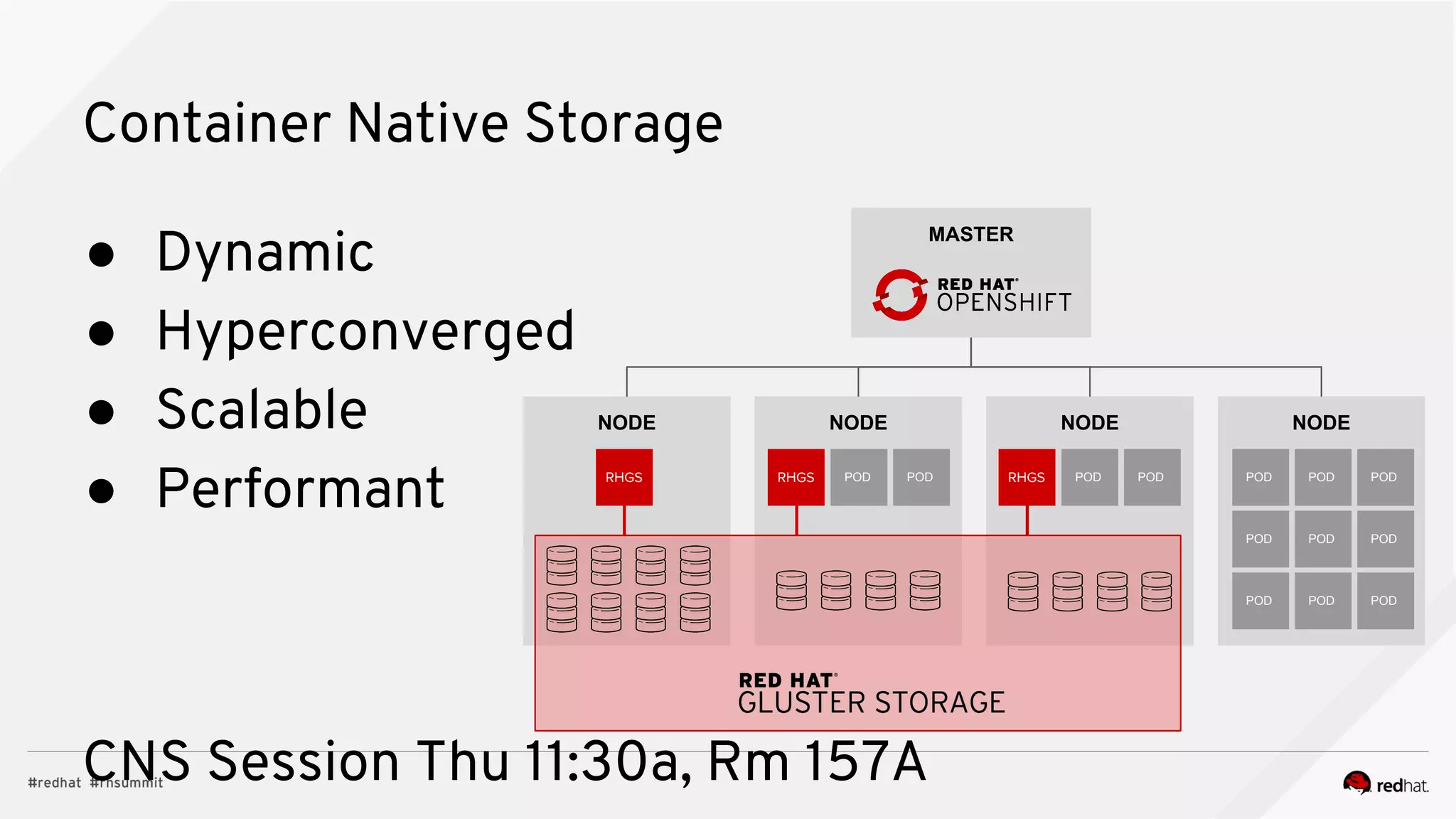

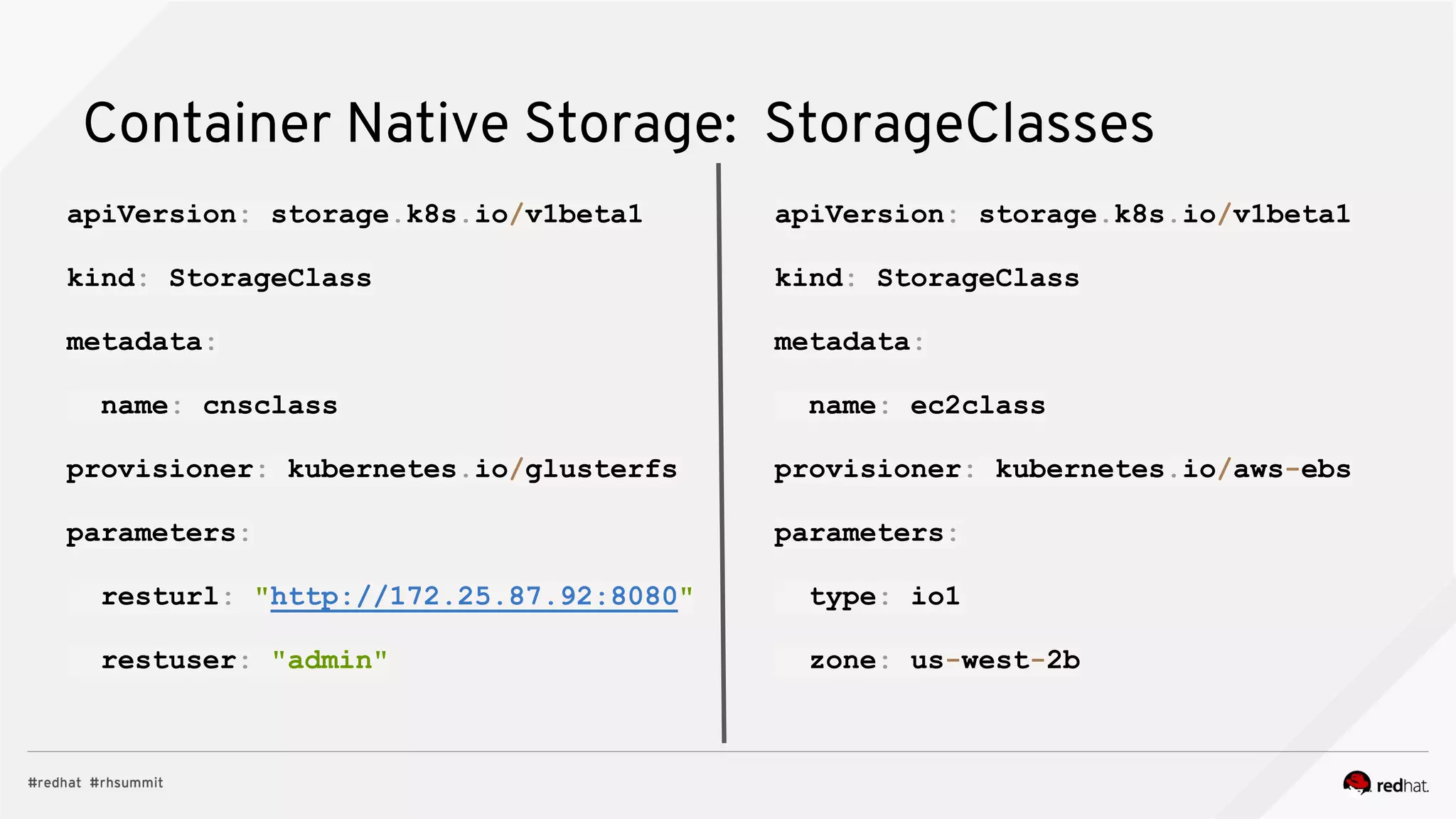

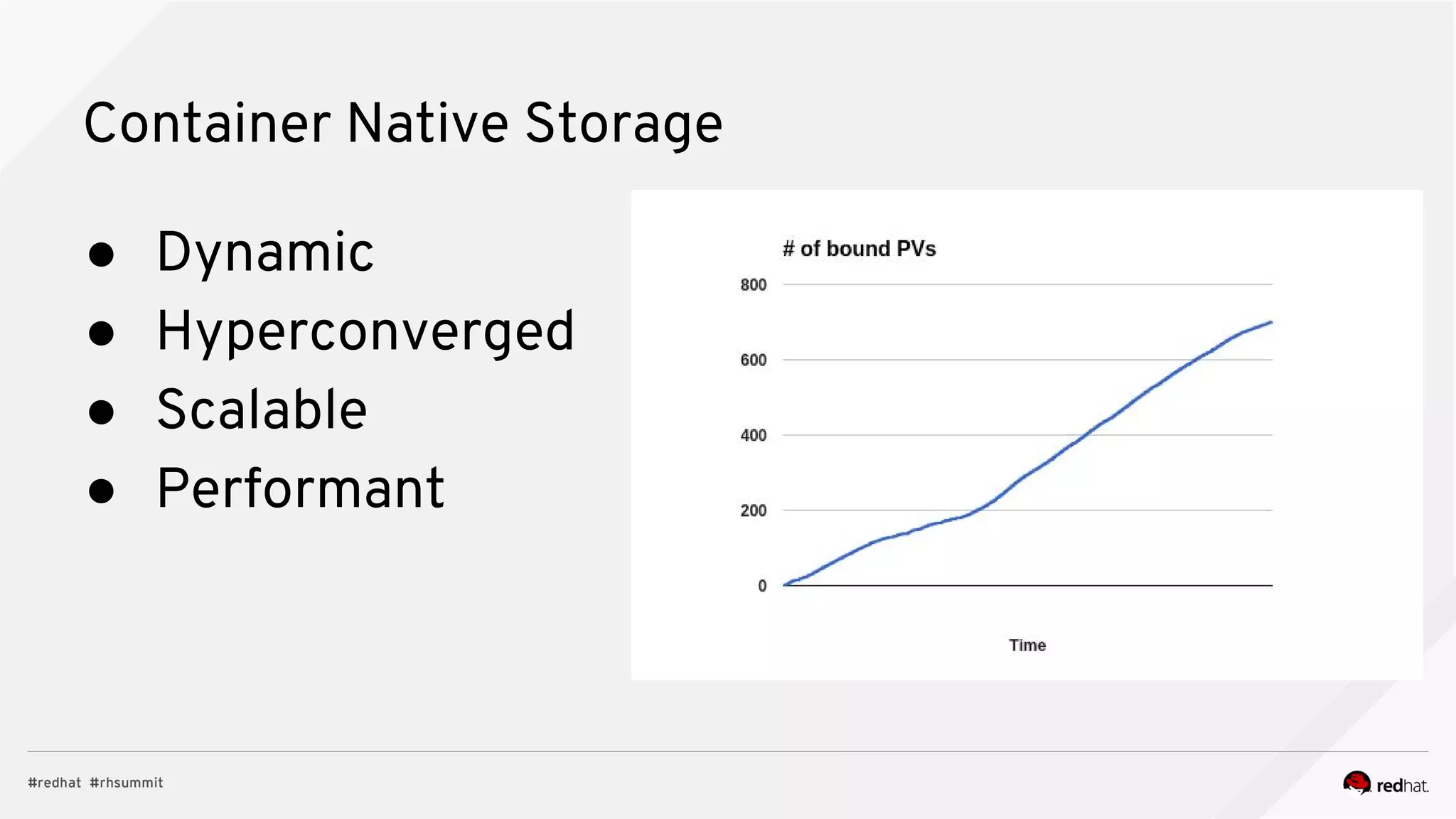

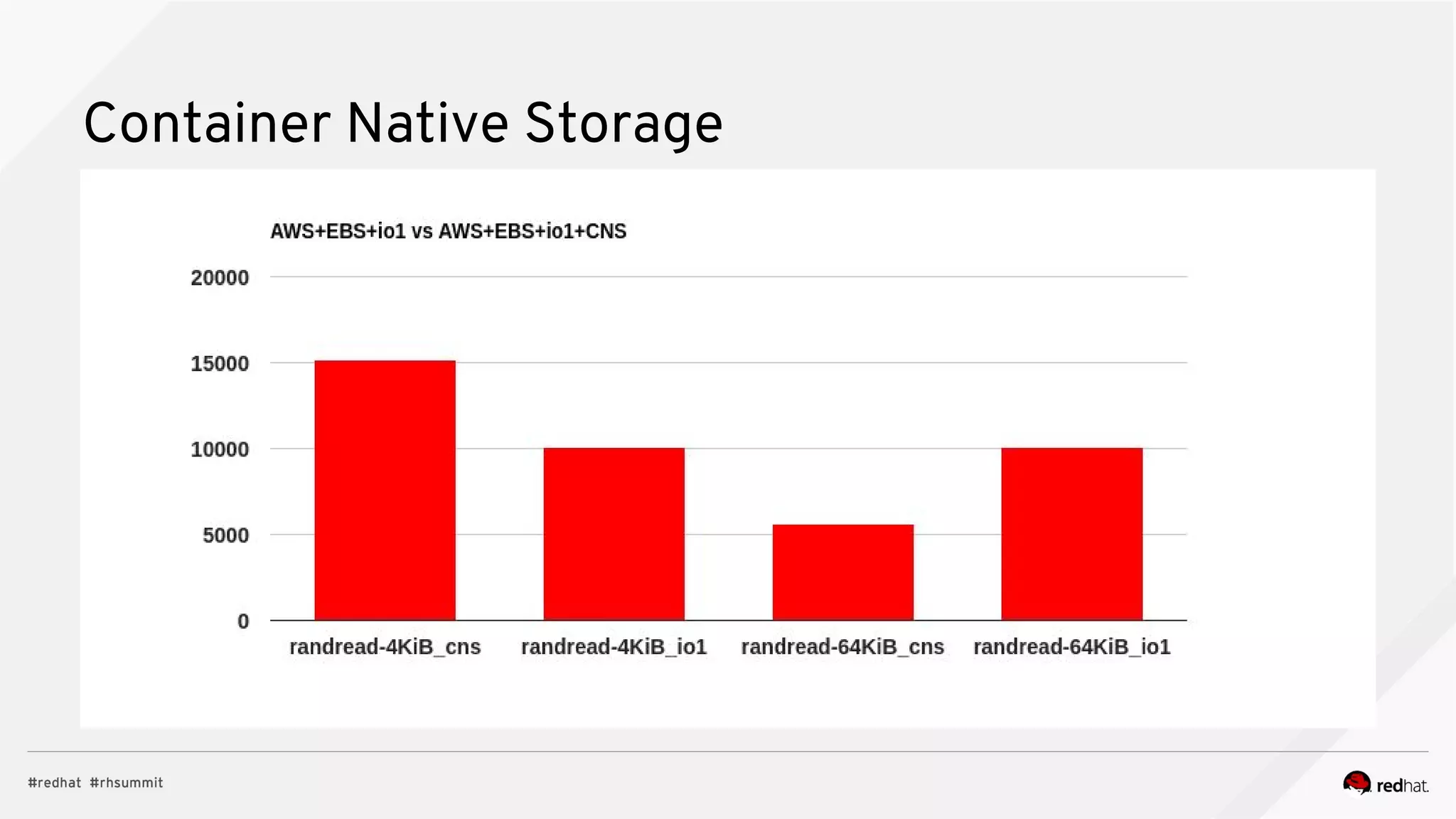

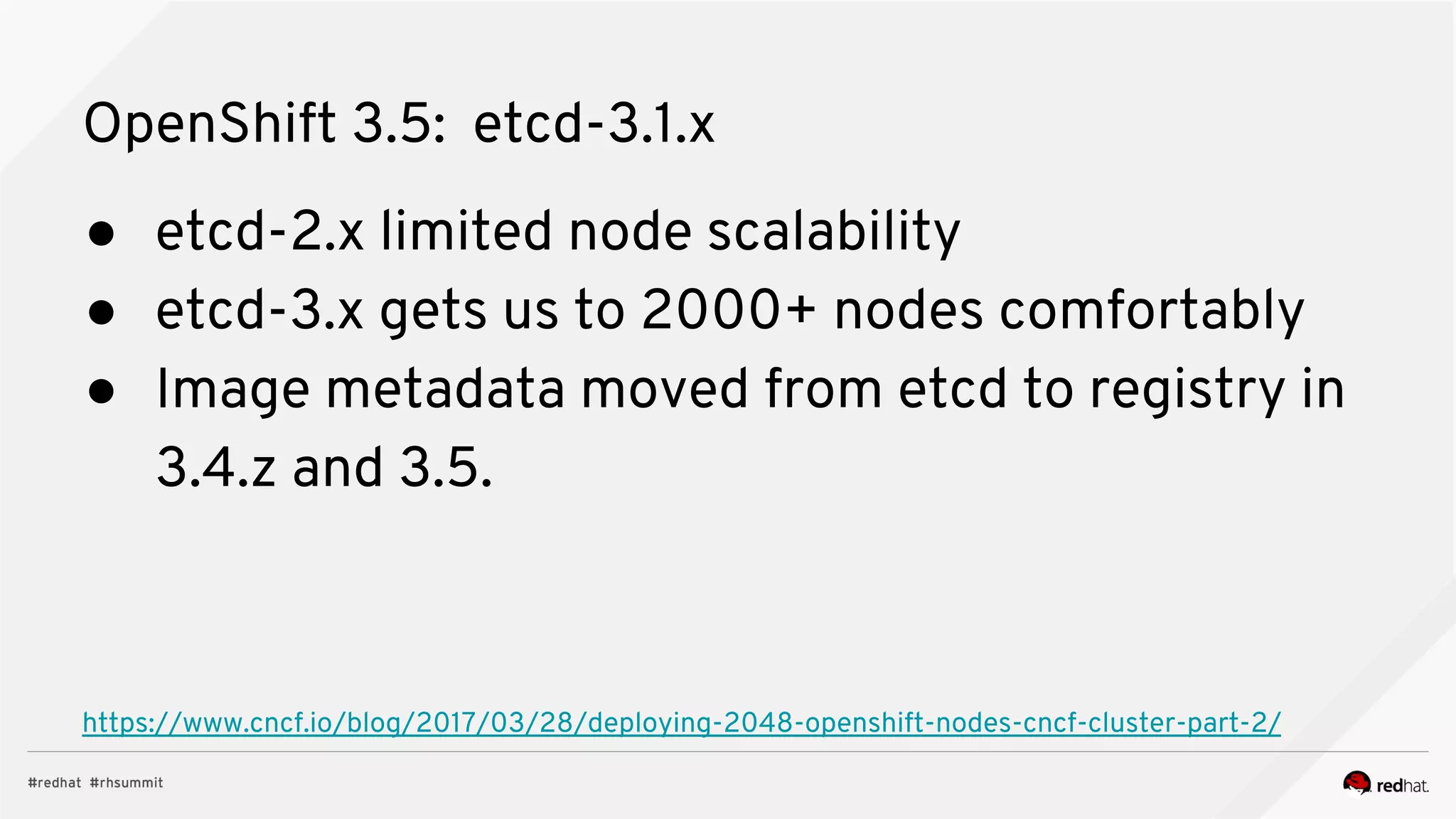

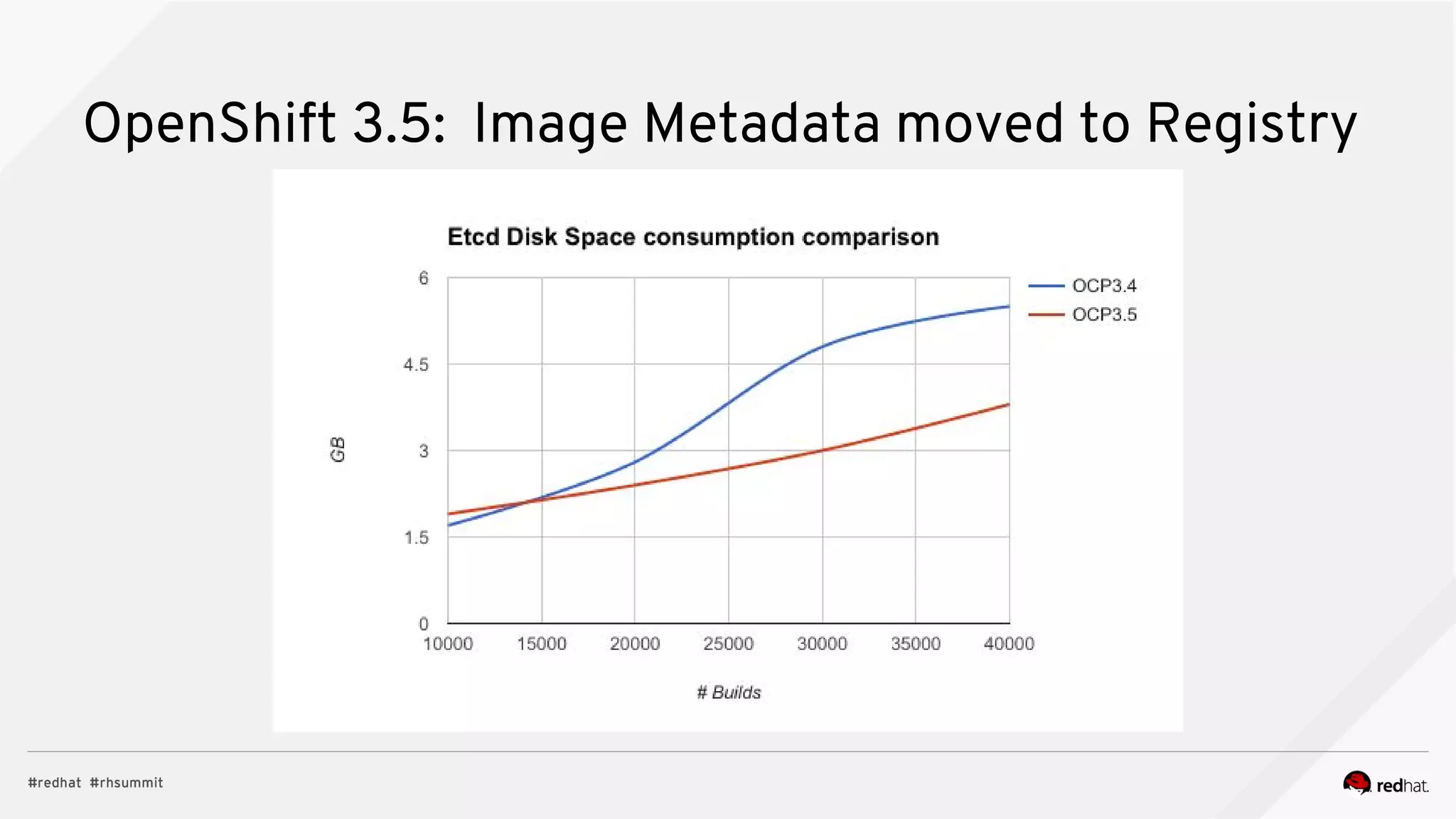

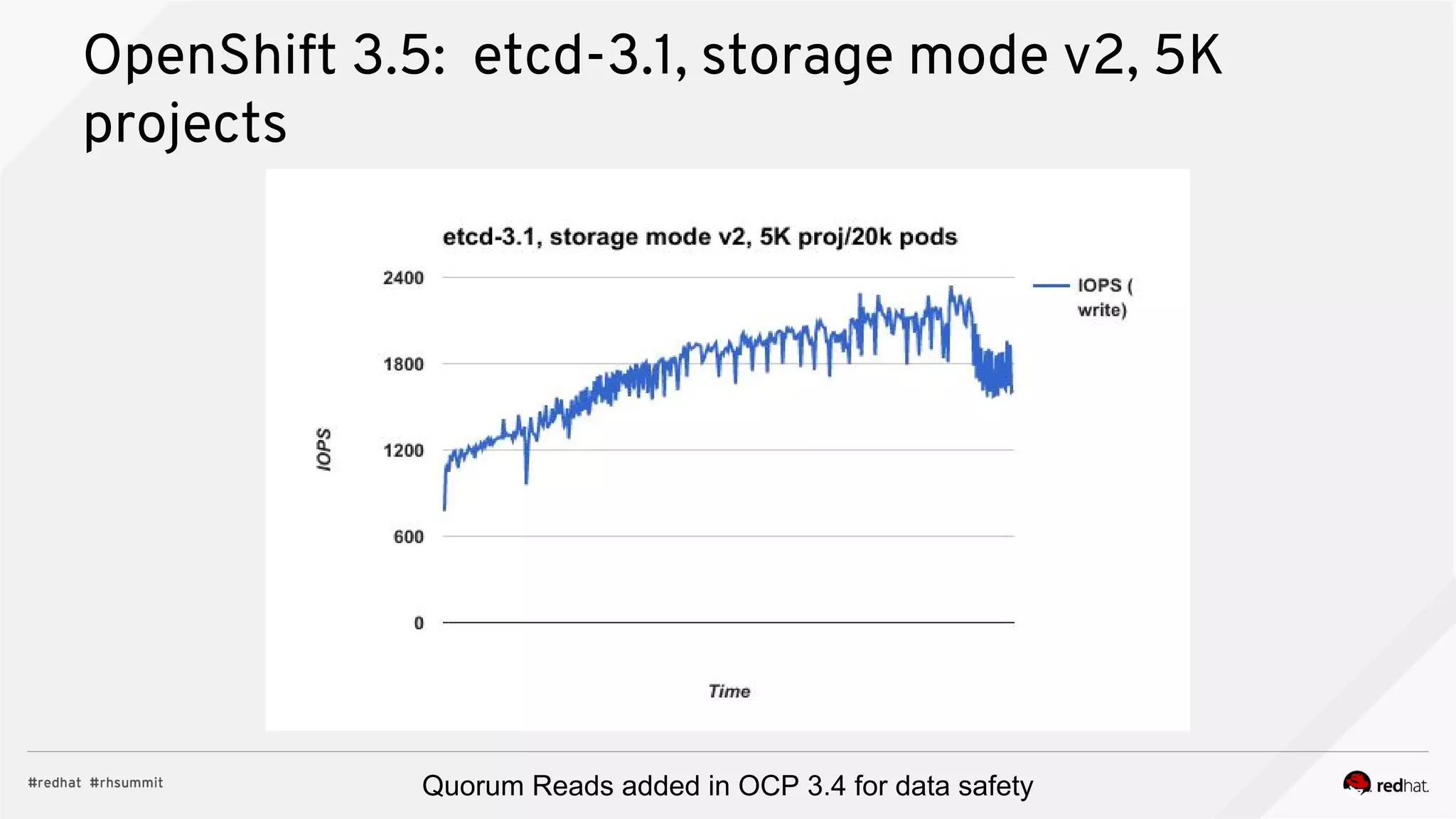

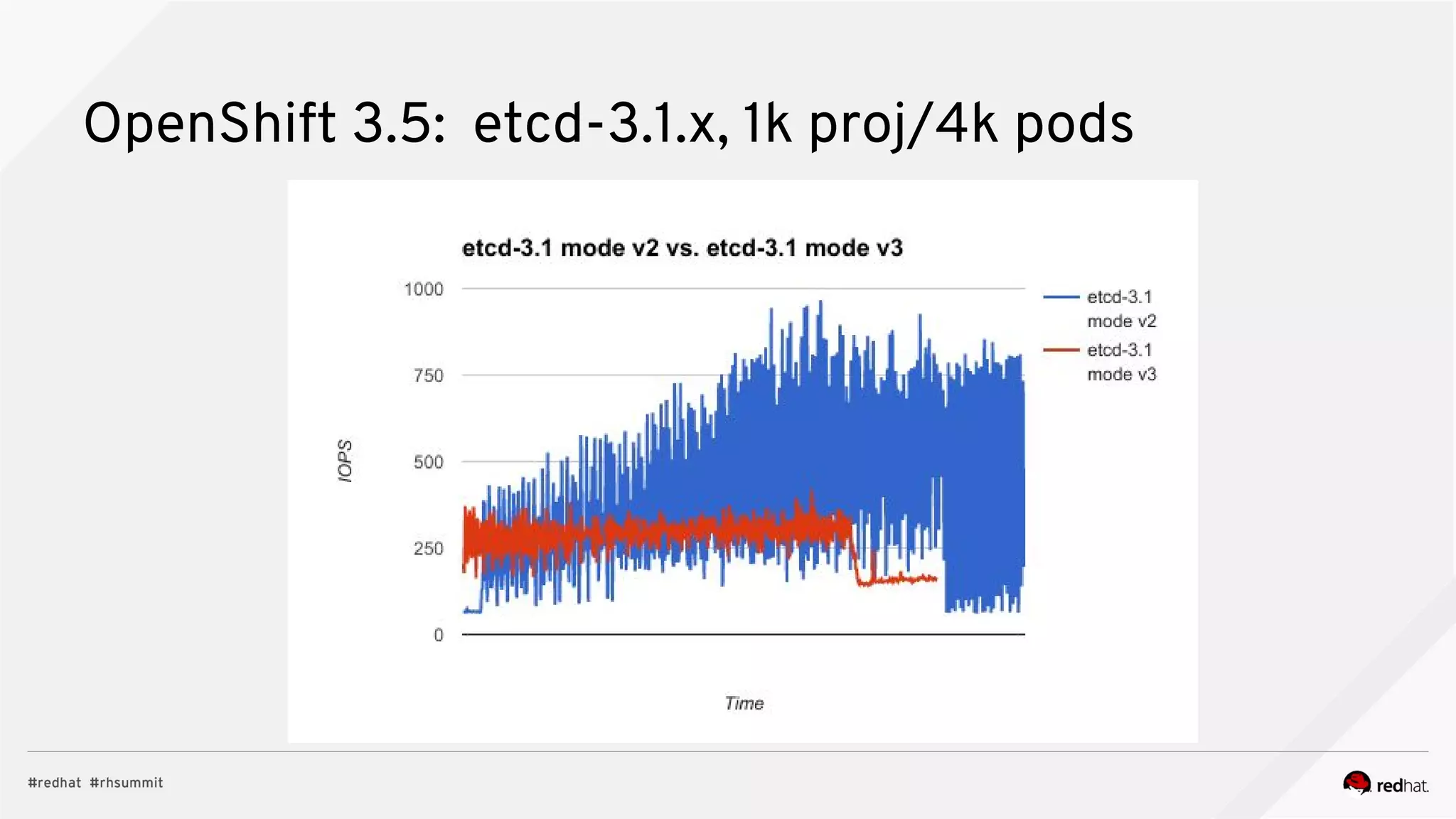

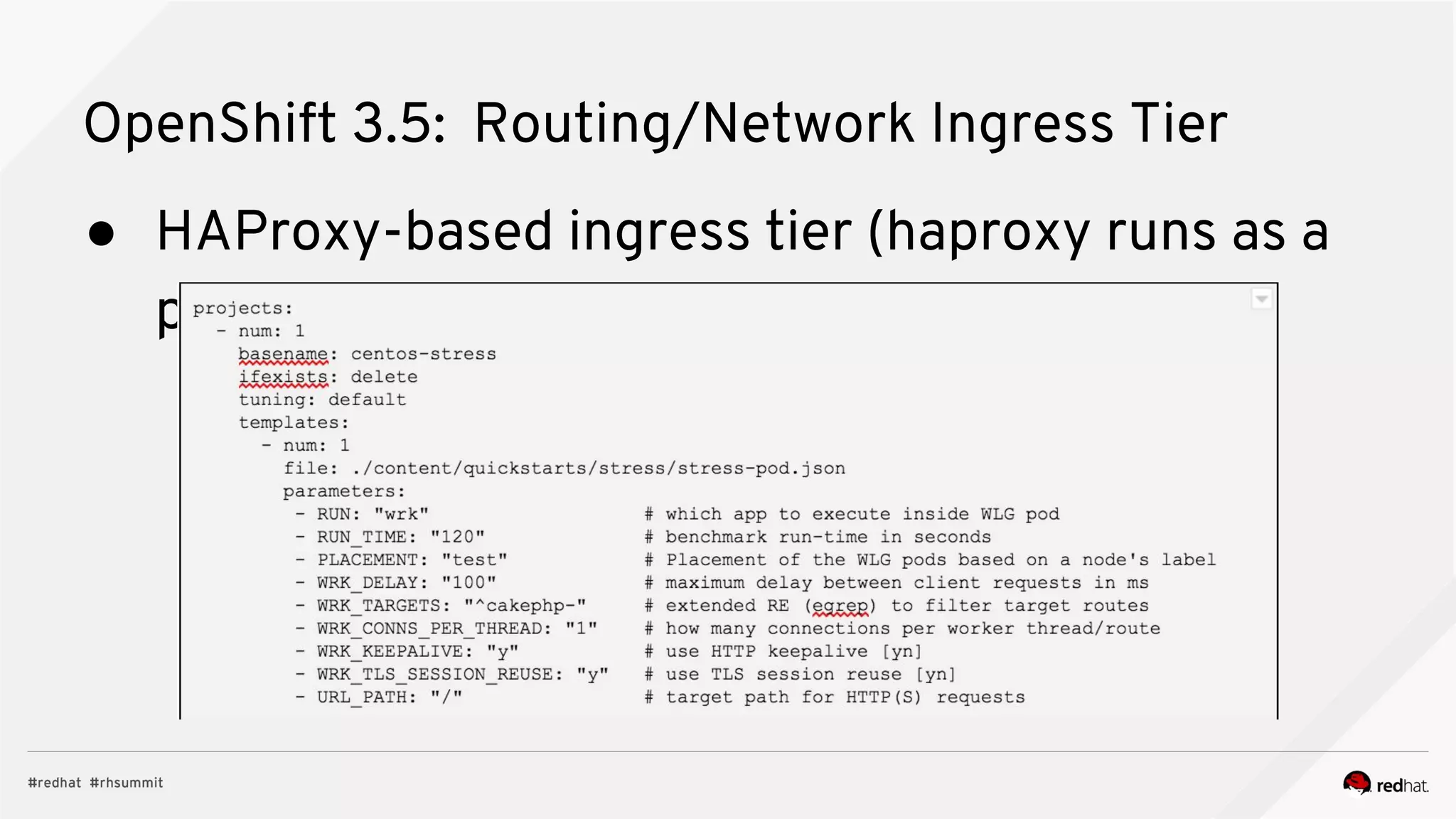

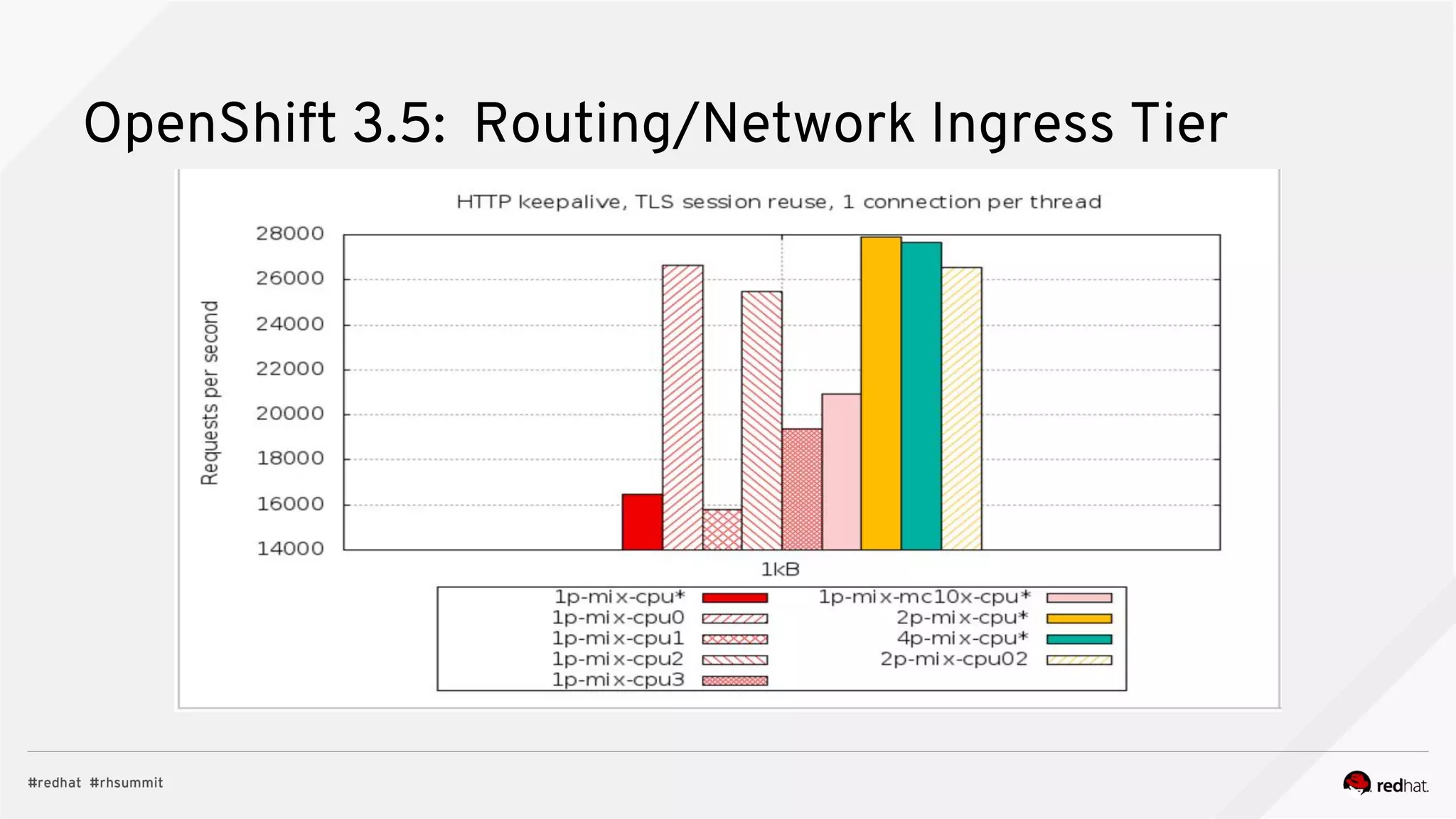

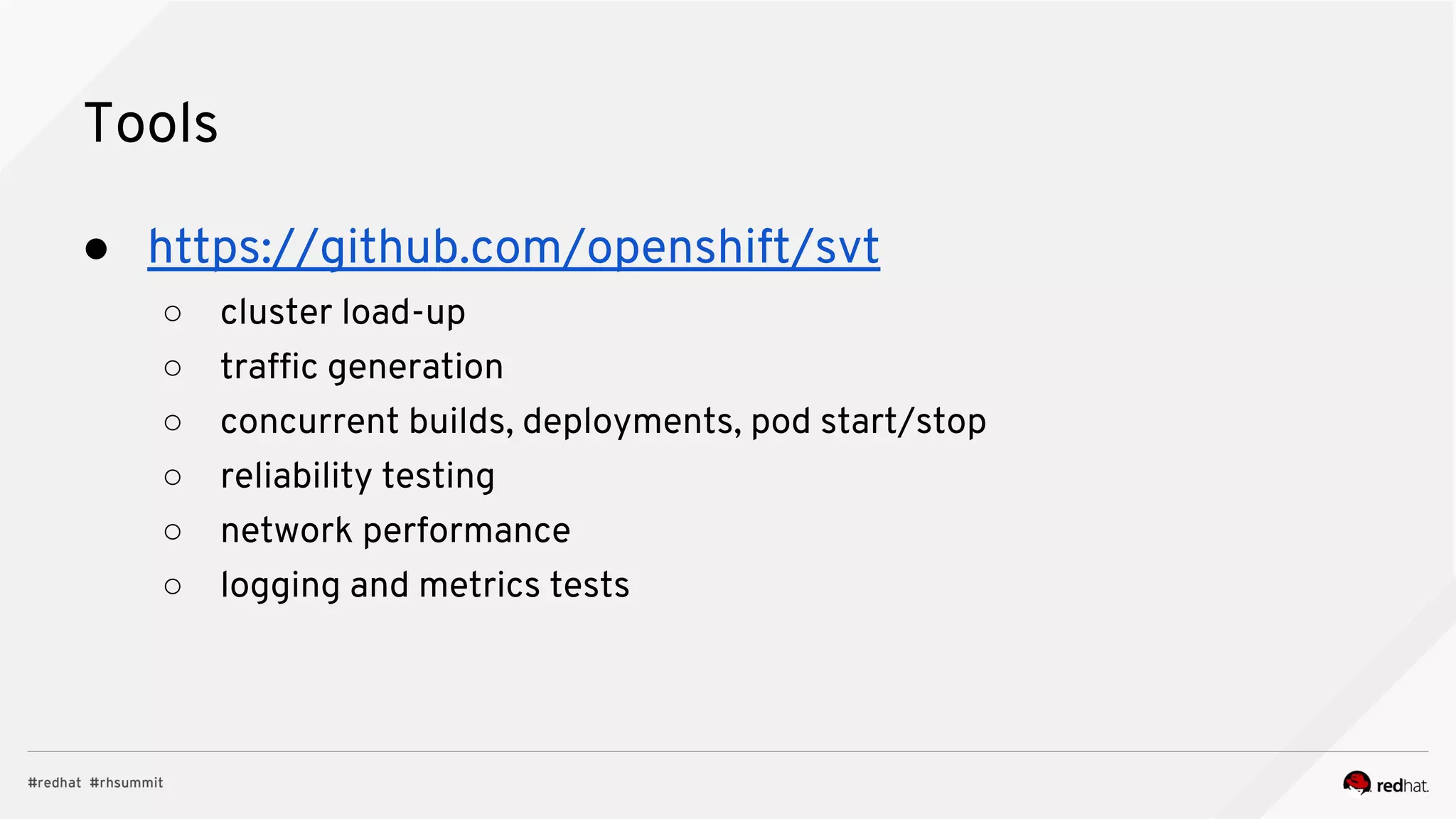

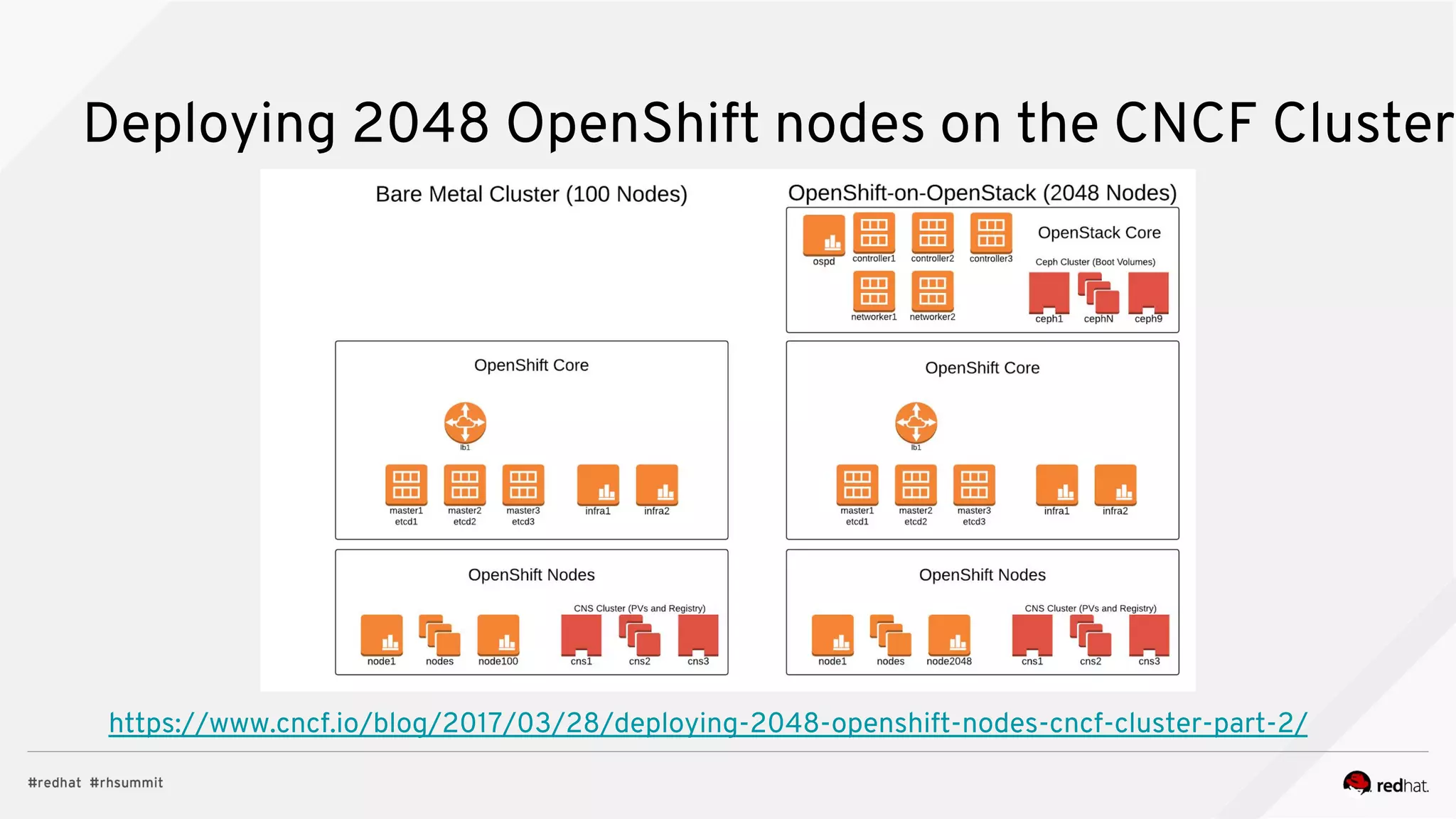

This document summarizes performance tuning techniques for OpenShift 3.5 and Docker 1.12. It covers optimizing etcd, container storage, routing, metrics, and logging, and describes tools for testing OpenShift scalability through cluster loading, traffic generation, and concurrent operations. Specific techniques include using etcd 3.1, using overlay2 storage, and moving image metadata to the registry.

![if [ "$containers" = "linux" ]; then echo "fundamentals don't change"; fi](https://image.slidesharecdn.com/rh-summit-2017-wicked-fast-paas-performance-tuning-of-openshift-and-docker-v2-170503190334/75/Red-Hat-Summit-2017-Wicked-Fast-PaaS-Performance-Tuning-of-OpenShift-and-Docker-5-2048.jpg)
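The slide's snippet is a joke, but it is also valid shell once it is written with plain ASCII quotes. A minimal runnable sketch follows; the function name `check_containers` is a hypothetical wrapper added here so the check can be called with different values, and is not part of the slide.

```shell
#!/bin/sh
# Runnable form of the slide's snippet, using plain ASCII quotes.
# check_containers is a hypothetical helper name, not from the slide.
check_containers() {
    containers="$1"
    if [ "$containers" = "linux" ]; then
        echo "fundamentals don't change"
    fi
}

# Prints the message only when the argument is exactly "linux".
check_containers linux
```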

![OpenShift 3.5: Installation

https://docs.openshift.org/latest/scaling_performance/install_practices.html

[defaults]

forks = 20

gathering = smart

fact_caching = jsonfile

fact_caching_timeout = 600

callback_whitelist = profile_tasks

[ssh_connection]

ssh_args = -o ControlMaster=auto -o ControlPersist=600s

control_path = %(directory)s/%%h-%%r

pipelining = True

timeout = 10](https://image.slidesharecdn.com/rh-summit-2017-wicked-fast-paas-performance-tuning-of-openshift-and-docker-v2-170503190334/75/Red-Hat-Summit-2017-Wicked-Fast-PaaS-Performance-Tuning-of-OpenShift-and-Docker-10-2048.jpg)
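The settings on this slide belong in an Ansible configuration file (`ansible.cfg`) used by the installer. As a sketch, the script below writes them to a file with a quoted here-document so that `%(directory)s` and `%%h-%%r` reach the file unchanged. The helper name `write_ansible_cfg` and the scratch-file location are assumptions for illustration; the values are the ones shown on the slide, and the comments describe the general intent of each option as I understand it.

```shell
#!/bin/sh
# write_ansible_cfg is a hypothetical helper; the settings are those on the slide.
write_ansible_cfg() {
    # The quoted 'EOF' delimiter keeps the shell from expanding anything inside.
    cat > "$1" <<'EOF'
[defaults]
# Run tasks against up to 20 hosts in parallel
forks = 20
# Skip re-gathering facts that are already cached
gathering = smart
fact_caching = jsonfile
# Cached facts expire after 600 seconds
fact_caching_timeout = 600
# Report per-task timing
callback_whitelist = profile_tasks

[ssh_connection]
# Keep a shared SSH control connection open for 600 seconds
ssh_args = -o ControlMaster=auto -o ControlPersist=600s
control_path = %(directory)s/%%h-%%r
# Run modules over the existing SSH session instead of copying files first
pipelining = True
timeout = 10
EOF
}

# Example: write to a scratch file and show the result.
cfg_file="$(mktemp)"
write_ansible_cfg "$cfg_file"
cat "$cfg_file"
```

To use it for a real install, call `write_ansible_cfg ./ansible.cfg` from the directory where the playbooks are run. Ansible's `jsonfile` cache plugin typically also expects a `fact_caching_connection` path, which the slide does not show; it is left out here rather than guessed.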