The document provides an agenda and overview of the Ceph Project Update and the Ceph Month event held in June 2021. Key points:

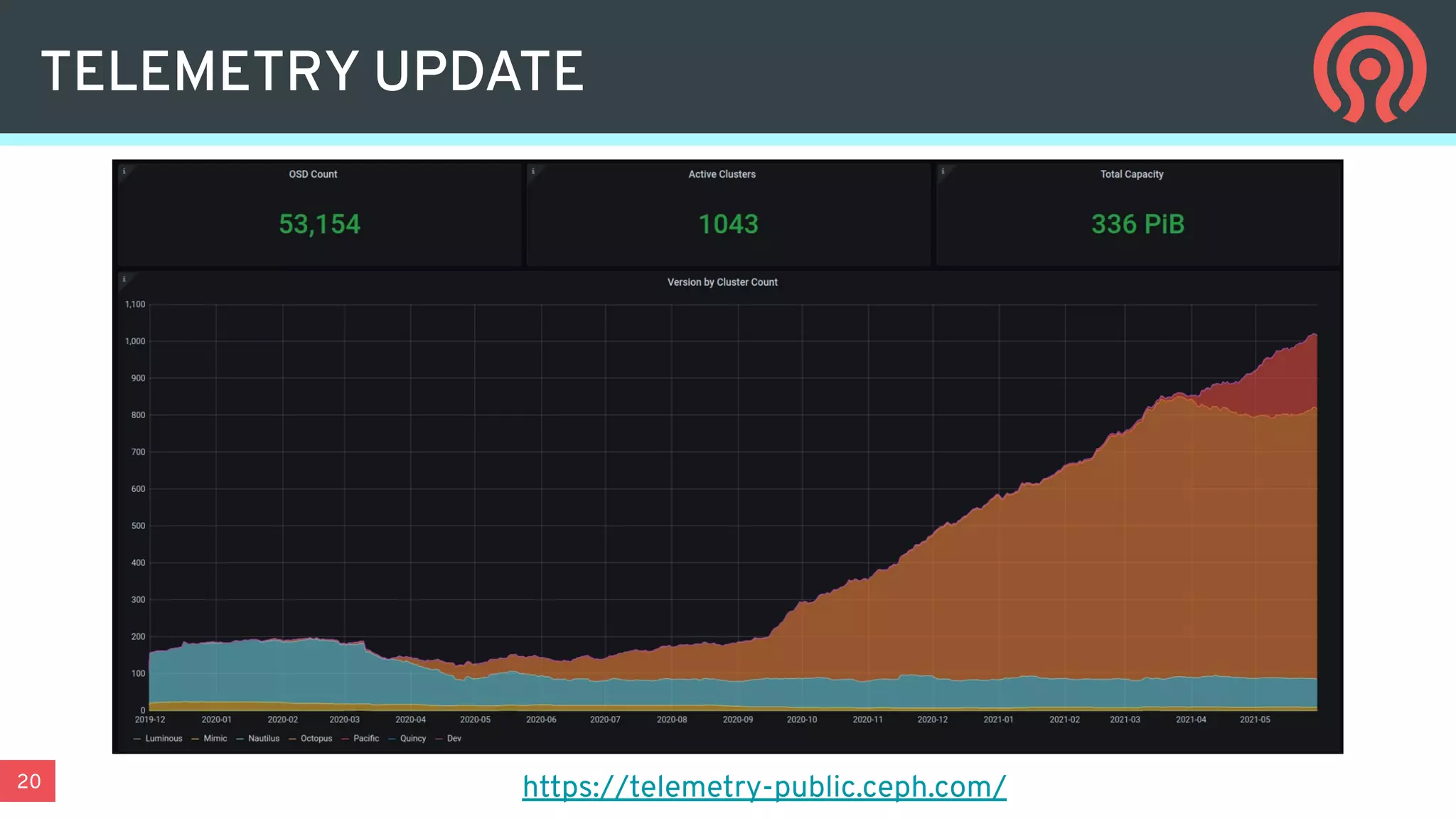

- Ceph is open source software that provides scalable, reliable distributed storage on commodity hardware.

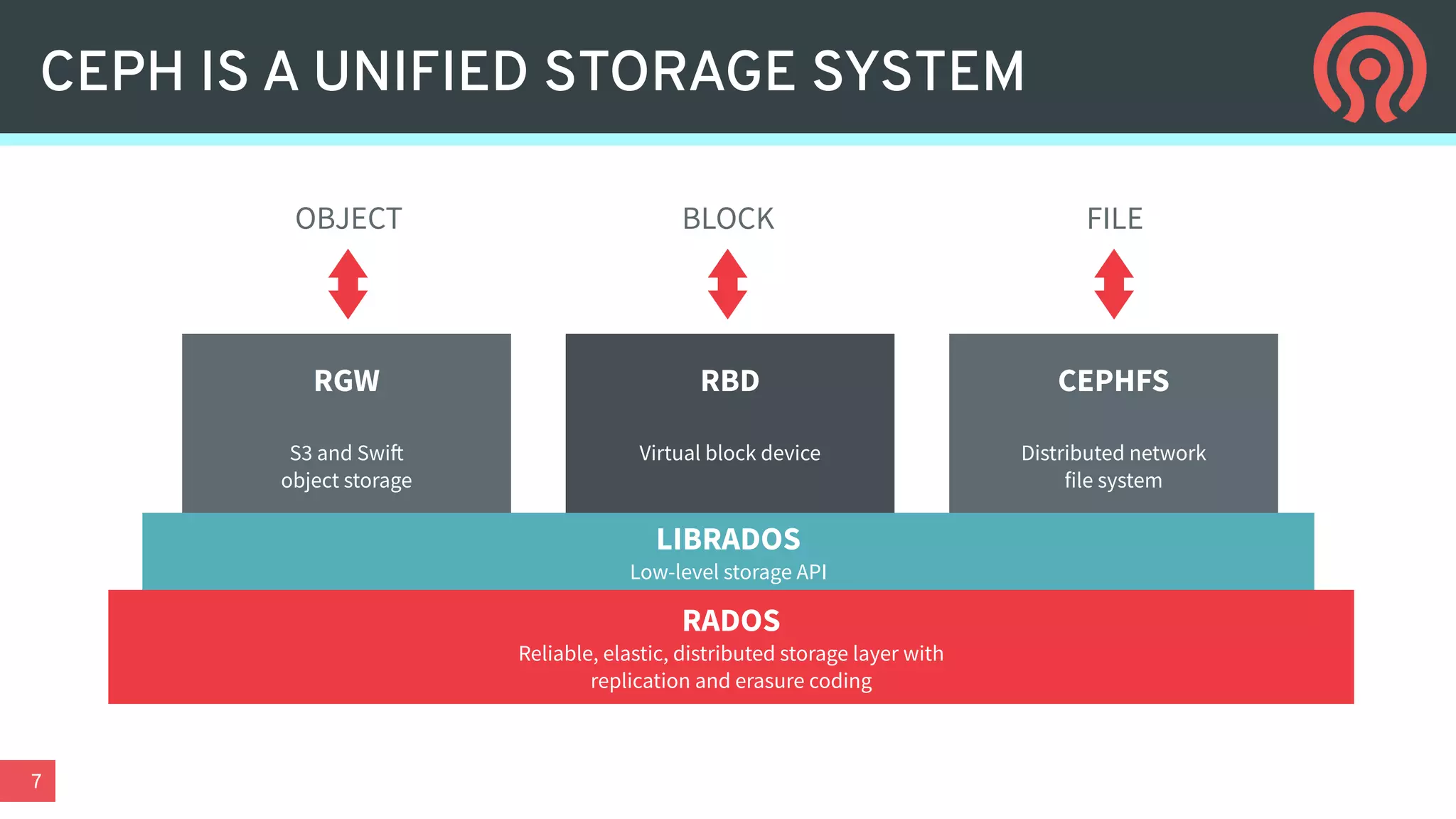

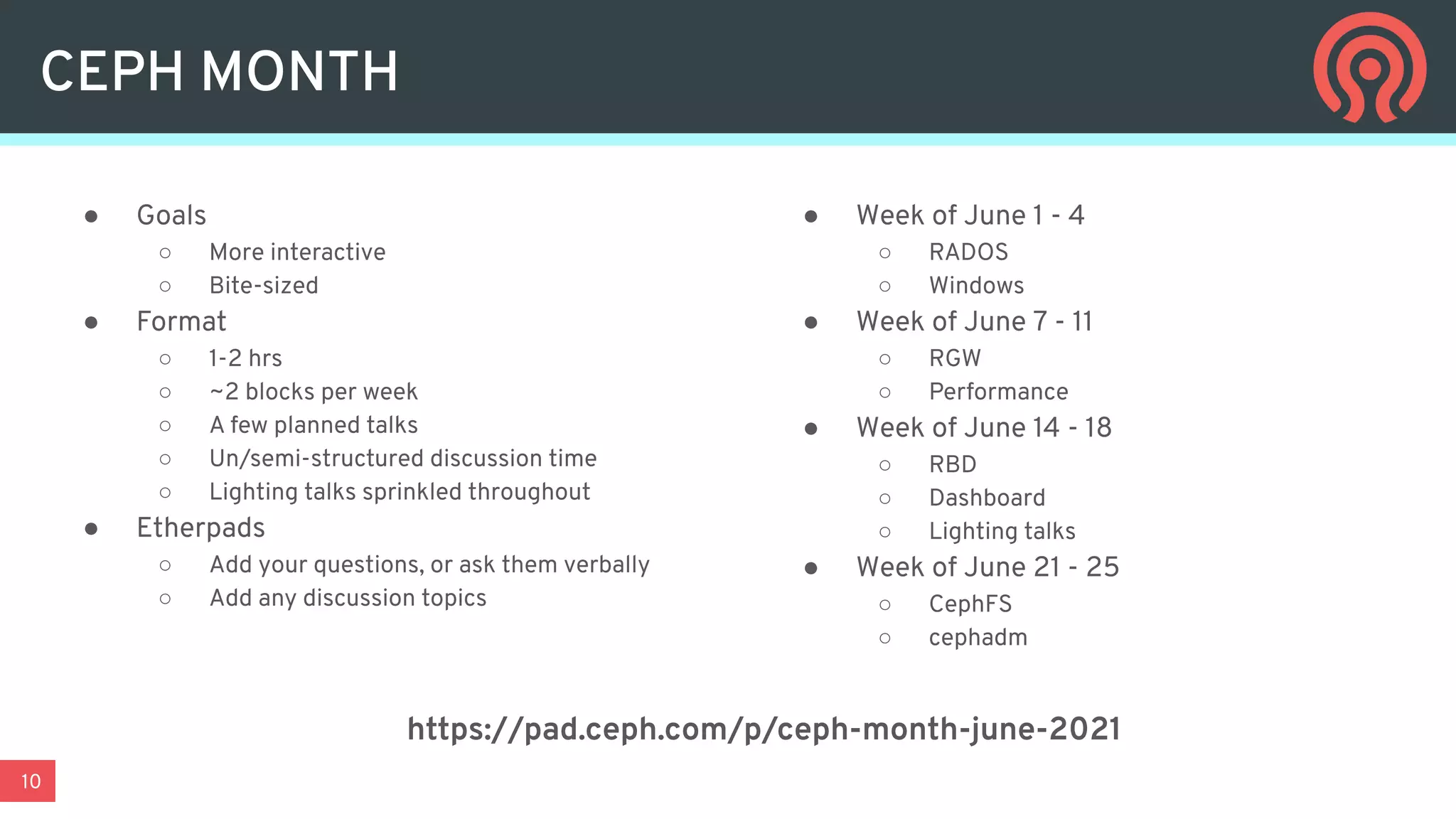

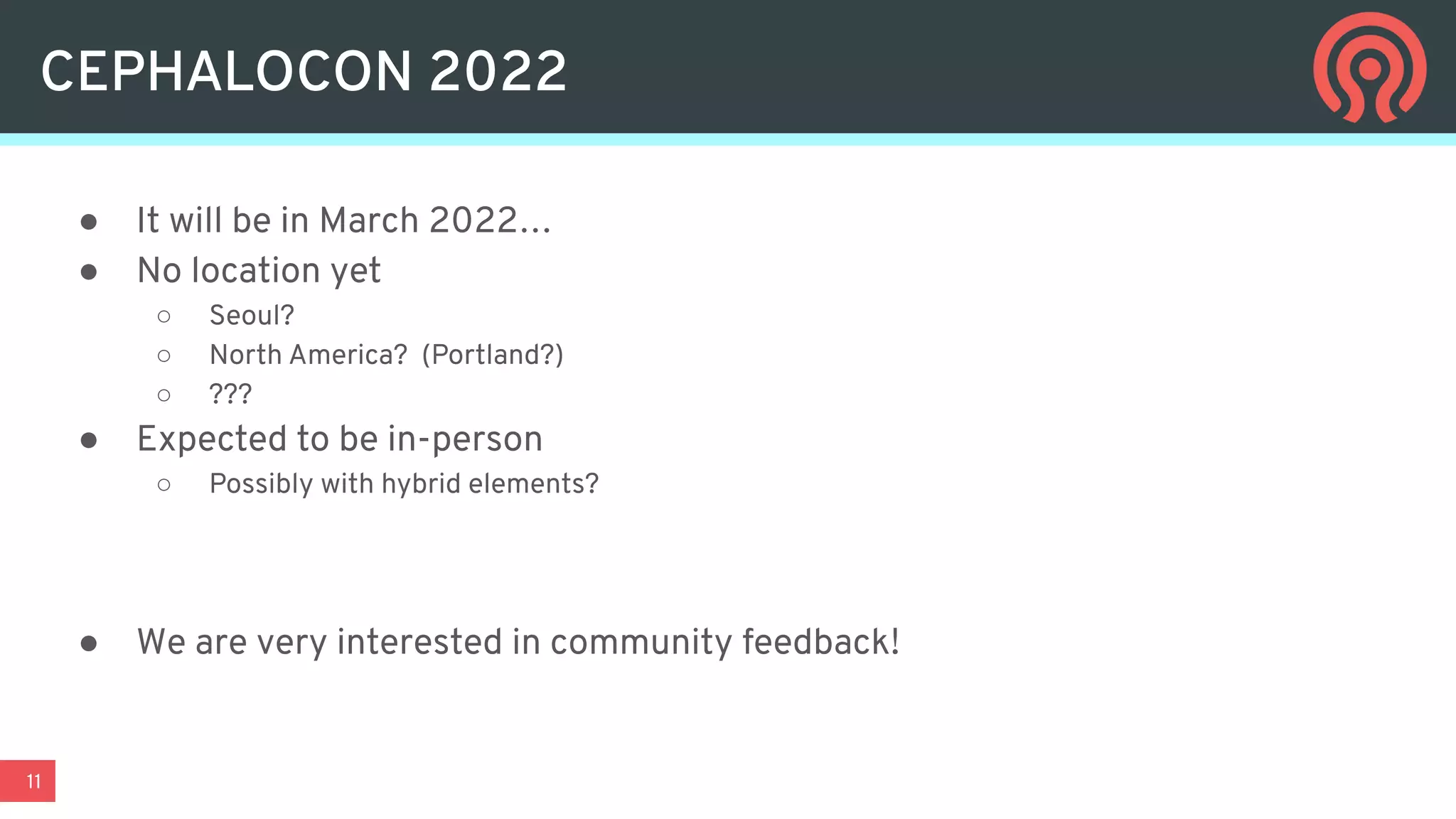

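- Ceph Month will include weekly sessions on topics such as RADOS (the underlying object store), RGW (the RADOS Gateway for object storage), RBD (RADOS Block Device), and CephFS (the Ceph file system), with the aim of encouraging interactive discussion.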

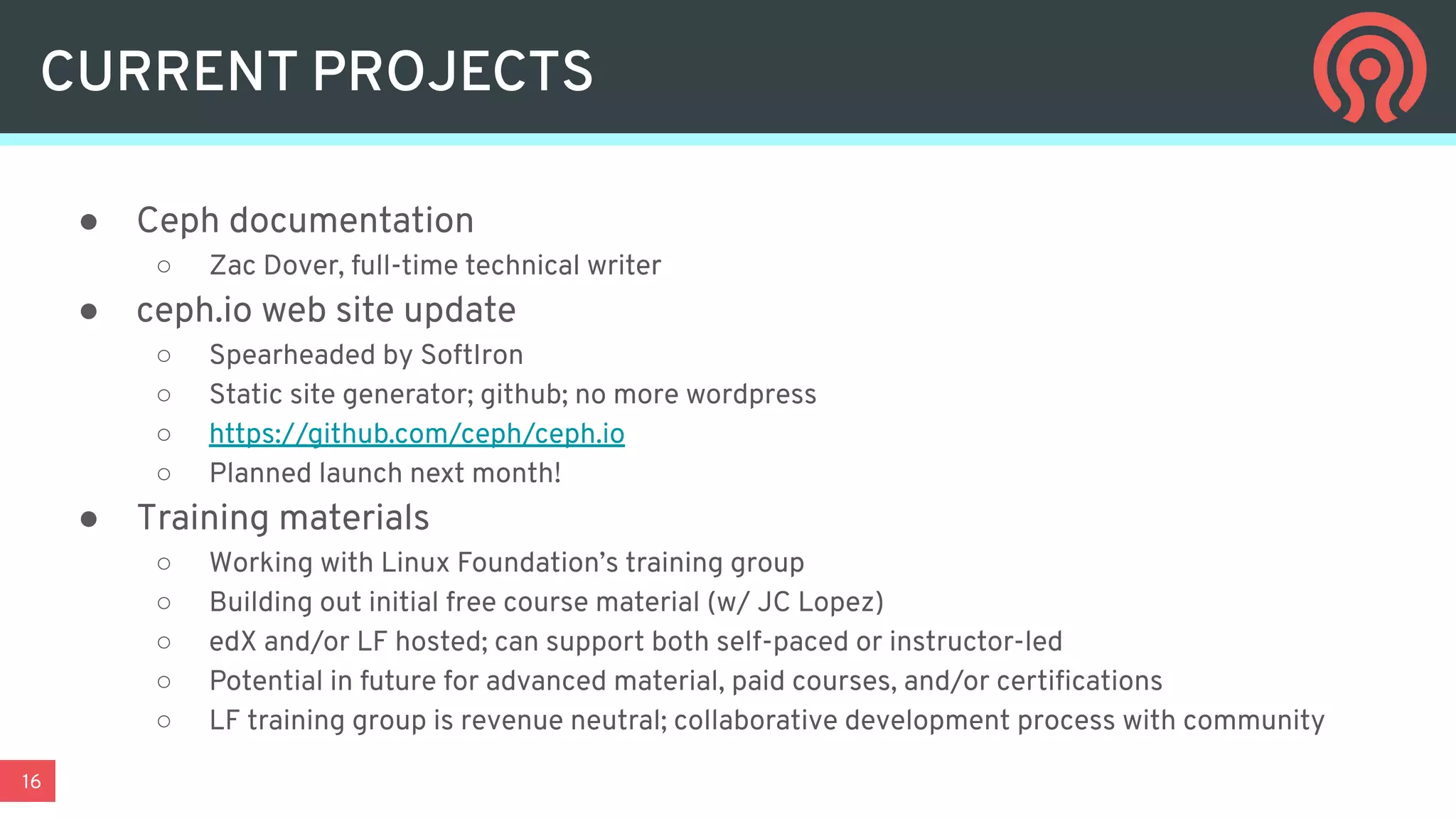

- The Ceph Foundation is working on projects to improve documentation, training materials, and lab infrastructure for testing and development.