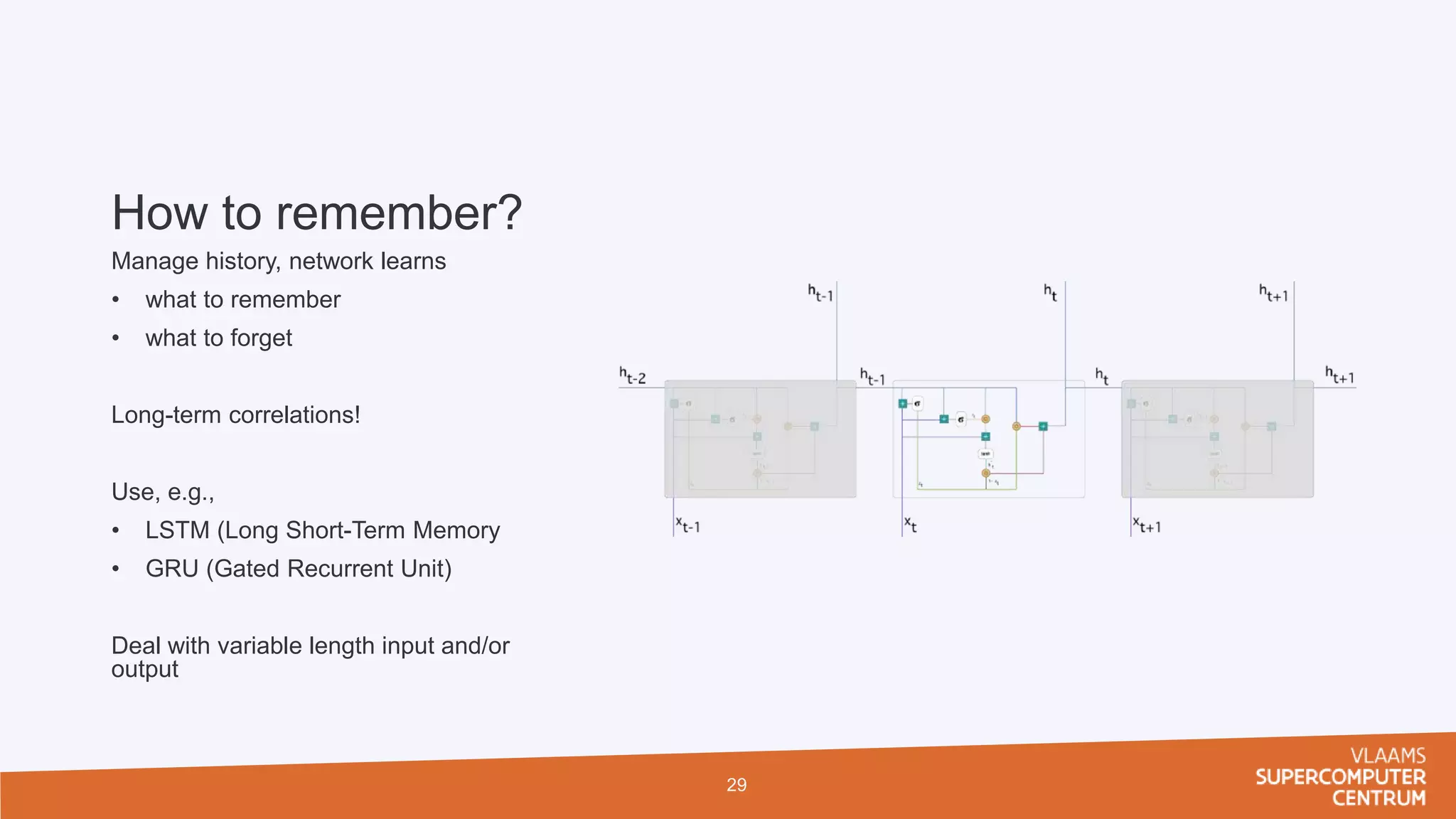

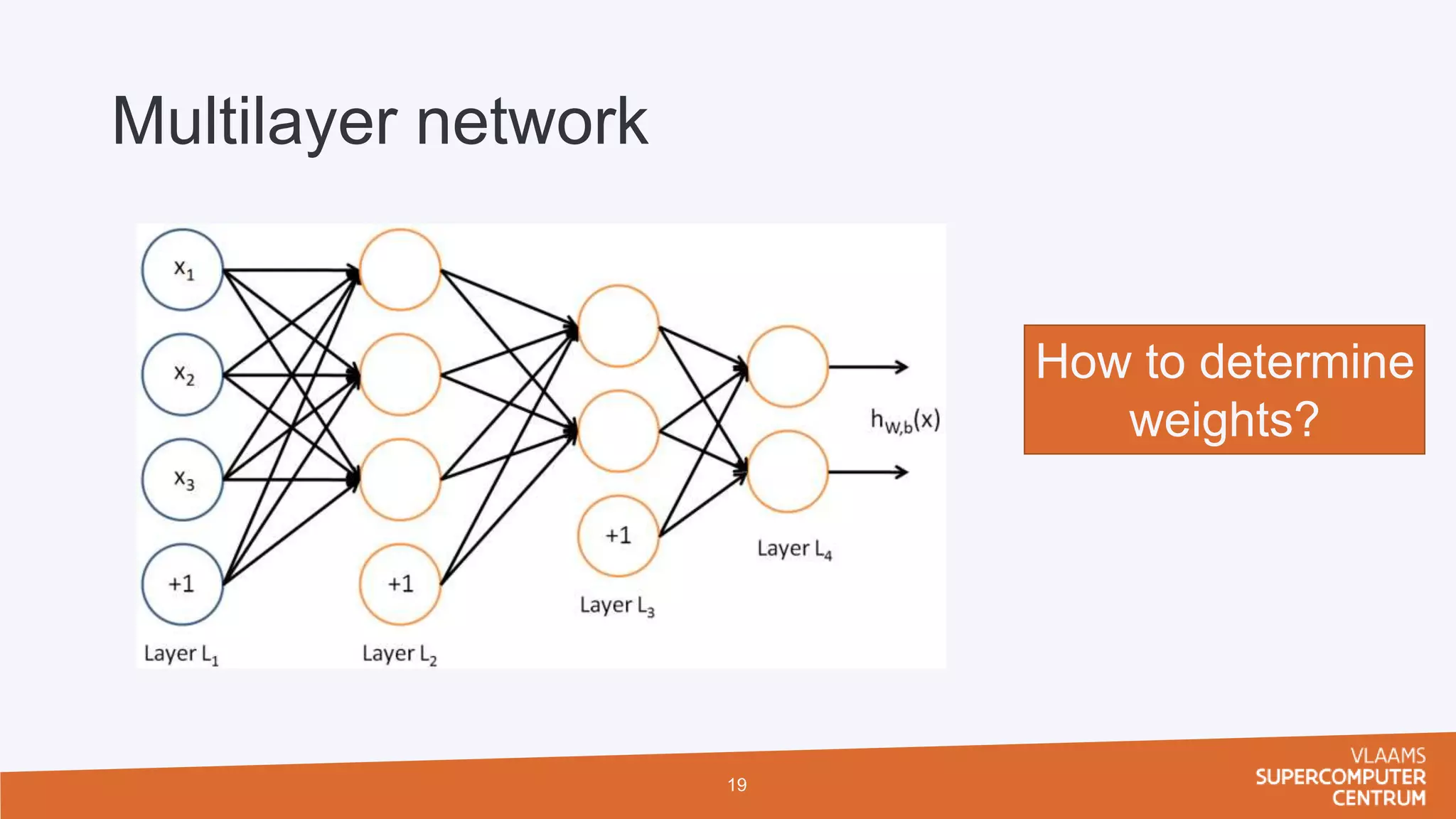

This document provides an introduction to machine learning and artificial intelligence. It discusses the types of machine learning tasks including supervised learning, unsupervised learning, and reinforcement learning. It also summarizes commonly used machine learning algorithms and frameworks. Examples are given of applying machine learning to tasks like image classification, sentiment analysis, and handwritten digit recognition. Issues that can cause machine learning projects to fail are identified and approaches to addressing different machine learning problems are outlined.

![First approach](https://image.slidesharecdn.com/pracedaysml2019-230615155832-bb29dd3e/75/prace_days_ml_2019-pptx-22-2048.jpg)

First approach

• Data preprocessing
  • Input data as 1D array
  • Output data as array with one-hot encoding
• Model: multilayer perceptron
  • 784 inputs (28 × 28 pixels)
  • Dense hidden layer with 512 units
  • ReLU activation function
  • Dense layer with 512 units
  • ReLU activation function
  • Dense layer with 10 units
  • SoftMax activation function

Example input: array([ 0.0, 0.0,..., 0.951, 0.533,..., 0.0, 0.0], dtype=f

Example label 5, one-hot encoded: array([ 0, 0, 0, 0, 0, 1, 0, 0, 0, 0], dtype=ui

Activation functions: 030_activation_functions.ipynb

Multilayer perceptron: 040_mnist_mlp.ipynb
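The slide's pipeline (flatten the image, one-hot encode the label, pass the pixels through two ReLU layers of 512 units and a 10-unit SoftMax layer) can be sketched in plain Python. This is not the content of the referenced notebooks. It is an illustrative forward pass only, assuming 28 × 28 input images, random untrained weights, and hypothetical helper names (`one_hot`, `flatten`, `make_dense`, `mlp_forward`).

```python
import math
import random


def one_hot(label, num_classes=10):
    """Return a list with 1 at index `label` and 0 elsewhere."""
    return [1 if i == label else 0 for i in range(num_classes)]


def flatten(image):
    """Turn a 2D image (list of rows) into a 1D list of pixels."""
    return [pixel for row in image for pixel in row]


def relu(values):
    return [max(0.0, v) for v in values]


def softmax(values):
    shift = max(values)  # subtract the maximum for numerical stability
    exps = [math.exp(v - shift) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def make_dense(n_in, n_out, rng):
    """Random weights (n_out rows of n_in values) and zero biases."""
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-scale, scale) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out


def dense(inputs, layer):
    """Weighted sum plus bias for every unit of the layer."""
    weights, biases = layer
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def param_count(layer):
    weights, biases = layer
    return sum(len(row) for row in weights) + len(biases)


rng = random.Random(0)  # fixed seed so the sketch is reproducible
# Assumption: 28 x 28 = 784 inputs, then 512, 512 and 10 units.
layers = [make_dense(784, 512, rng),
          make_dense(512, 512, rng),
          make_dense(512, 10, rng)]


def mlp_forward(pixels):
    hidden1 = relu(dense(pixels, layers[0]))
    hidden2 = relu(dense(hidden1, layers[1]))
    return softmax(dense(hidden2, layers[2]))


# Stand-in for one normalised image; real data would come from a dataset.
image = [[rng.random() for _ in range(28)] for _ in range(28)]
probabilities = mlp_forward(flatten(image))
total_params = sum(param_count(layer) for layer in layers)

print("one-hot label 5:", one_hot(5))
print("class probabilities:", len(probabilities))
print("trainable parameters:", total_params)
```

With these layer sizes the three dense layers hold 669,706 weights and biases in total. Because the weights are untrained, the ten output probabilities carry no meaning beyond summing to 1.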

![Second approach](https://image.slidesharecdn.com/pracedaysml2019-230615155832-bb29dd3e/75/prace_days_ml_2019-pptx-26-2048.jpg)

Second approach

• Data preprocessing
  • Input data as 2D array
  • Output data as array with one-hot encoding
• Model: convolutional neural network (CNN)
  • 28 × 28 inputs
  • CNN layer with 32 filters of size 3 × 3
  • ReLU activation function
  • Flatten layer
  • Dense layer with 10 units
  • SoftMax activation function

Example input: array([[ 0.0, 0.0,..., 0.951, 0.533,..., 0.0, 0.0]], dtype

Example label 5, one-hot encoded: array([ 0, 0, 0, 0, 0, 1, 0, 0, 0, 0], dtype=ui

Convolutional neural network: 060_mnist_cnn.ipynb
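The second architecture can be sketched the same way, showing how a 28 × 28 image passes through 32 filters of size 3 × 3, a flatten step, and a 10-unit SoftMax layer. This is not the content of the referenced notebook. The sketch assumes a single-channel image, stride 1, no padding, and random untrained weights; `conv2d_relu` and the variable names are hypothetical.

```python
import math
import random


def softmax(values):
    shift = max(values)  # subtract the maximum for numerical stability
    exps = [math.exp(v - shift) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def conv2d_relu(image, kernel, bias):
    """Slide one k x k kernel over the image (stride 1, no padding),
    then apply ReLU. As in most deep learning frameworks, the kernel
    is not flipped, so this is strictly a cross-correlation."""
    k = len(kernel)
    out_size = len(image) - k + 1
    output = []
    for r in range(out_size):
        row = []
        for c in range(out_size):
            acc = bias
            for i in range(k):
                for j in range(k):
                    acc += kernel[i][j] * image[r + i][c + j]
            row.append(max(0.0, acc))
        output.append(row)
    return output


rng = random.Random(0)  # fixed seed so the sketch is reproducible

# Stand-in for one normalised 28 x 28 image kept as a 2D array.
image = [[rng.random() for _ in range(28)] for _ in range(28)]

# 32 filters of size 3 x 3, each with its own bias.
filters = [([[rng.uniform(-0.3, 0.3) for _ in range(3)] for _ in range(3)], 0.0)
           for _ in range(32)]
feature_maps = [conv2d_relu(image, kernel, bias) for kernel, bias in filters]

# Flatten layer: 32 maps of 26 x 26 values become one long vector.
flat = [v for fmap in feature_maps for row in fmap for v in row]

# Dense layer with 10 units followed by SoftMax.
scale = 1.0 / math.sqrt(len(flat))
dense_weights = [[rng.uniform(-scale, scale) for _ in range(len(flat))]
                 for _ in range(10)]
dense_biases = [0.0] * 10
logits = [sum(w * x for w, x in zip(row, flat)) + b
          for row, b in zip(dense_weights, dense_biases)]
probabilities = softmax(logits)

conv_params = 32 * (3 * 3 * 1 + 1)       # weights plus one bias per filter
dense_params = len(flat) * 10 + 10       # weights plus one bias per unit
total_params = conv_params + dense_params

print("feature map size:", len(feature_maps[0]), "x", len(feature_maps[0][0]))
print("flattened length:", len(flat))
print("trainable parameters:", total_params)
```

Under the no-padding assumption each filter produces a 26 × 26 feature map, so the flatten layer yields 21,632 values and the model has 216,650 parameters, roughly a third of the multilayer perceptron's count.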