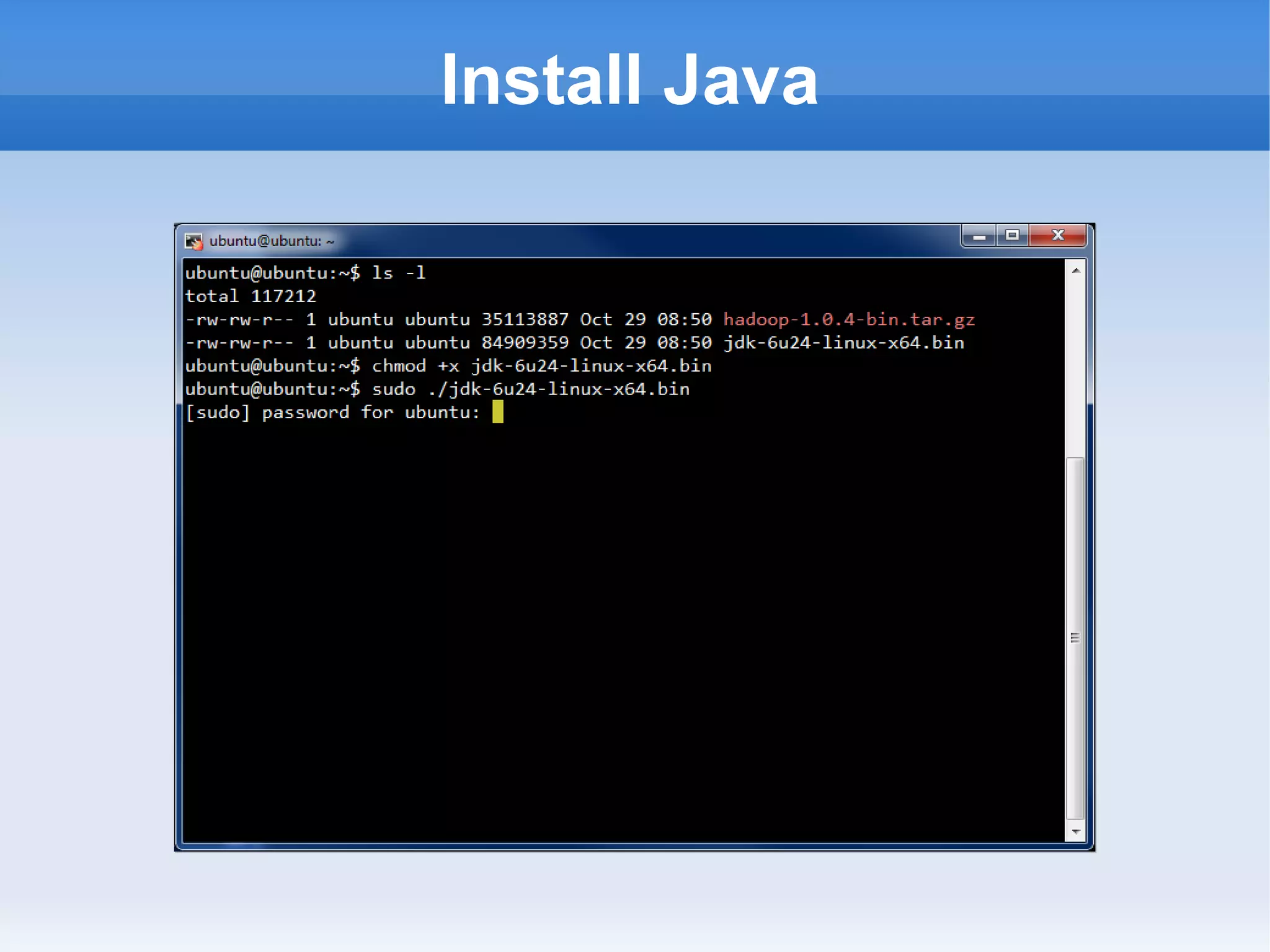

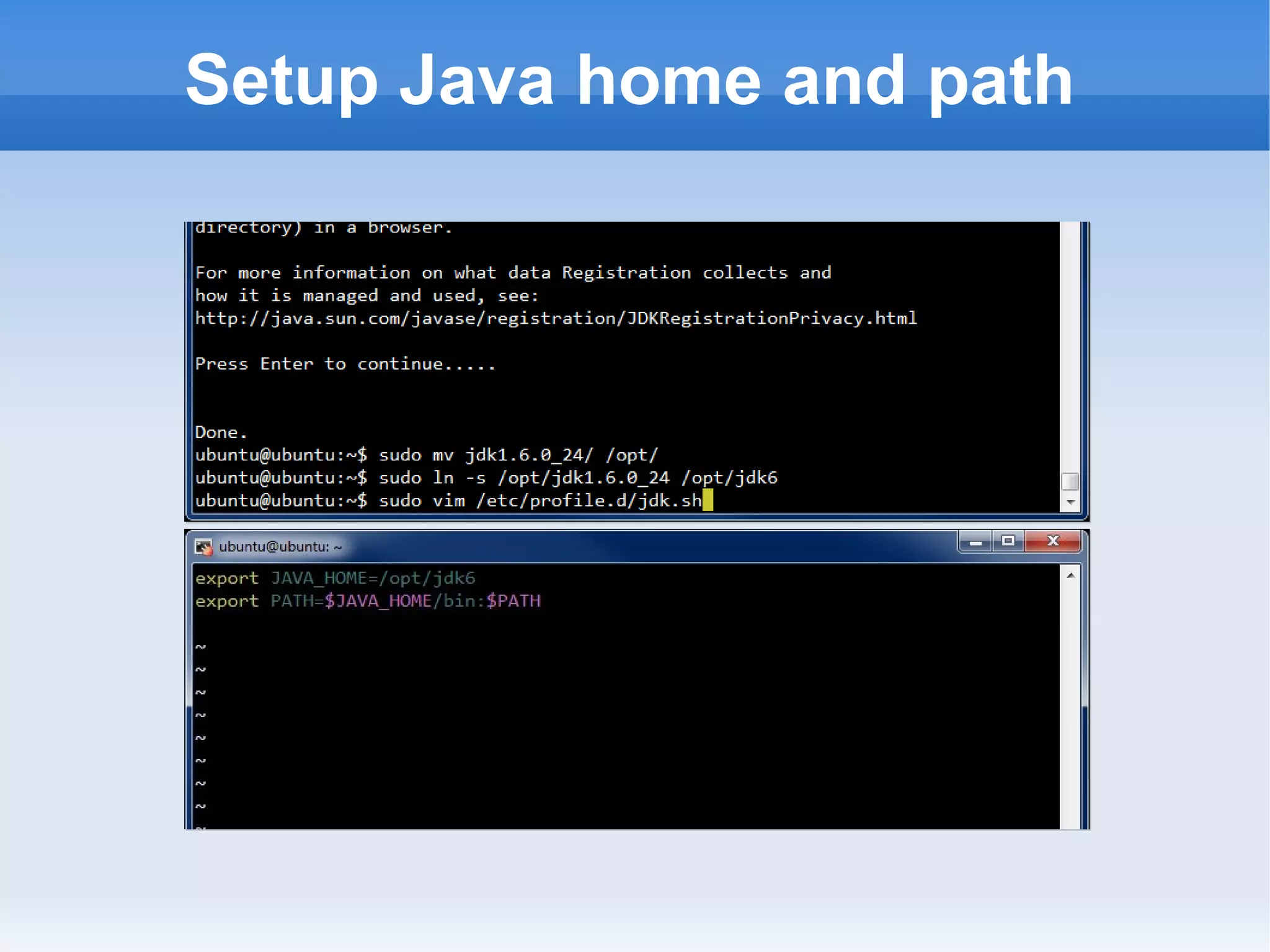

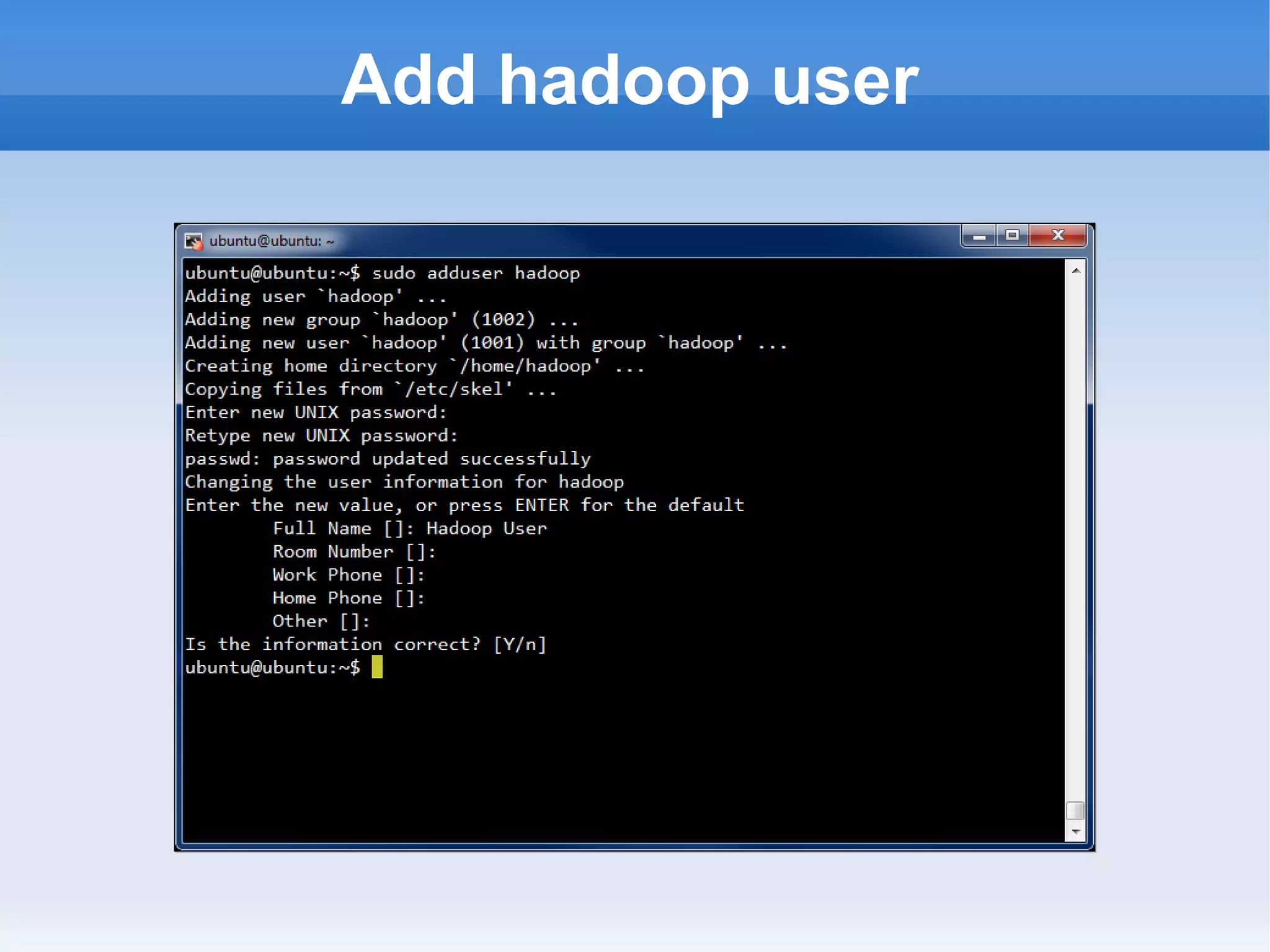

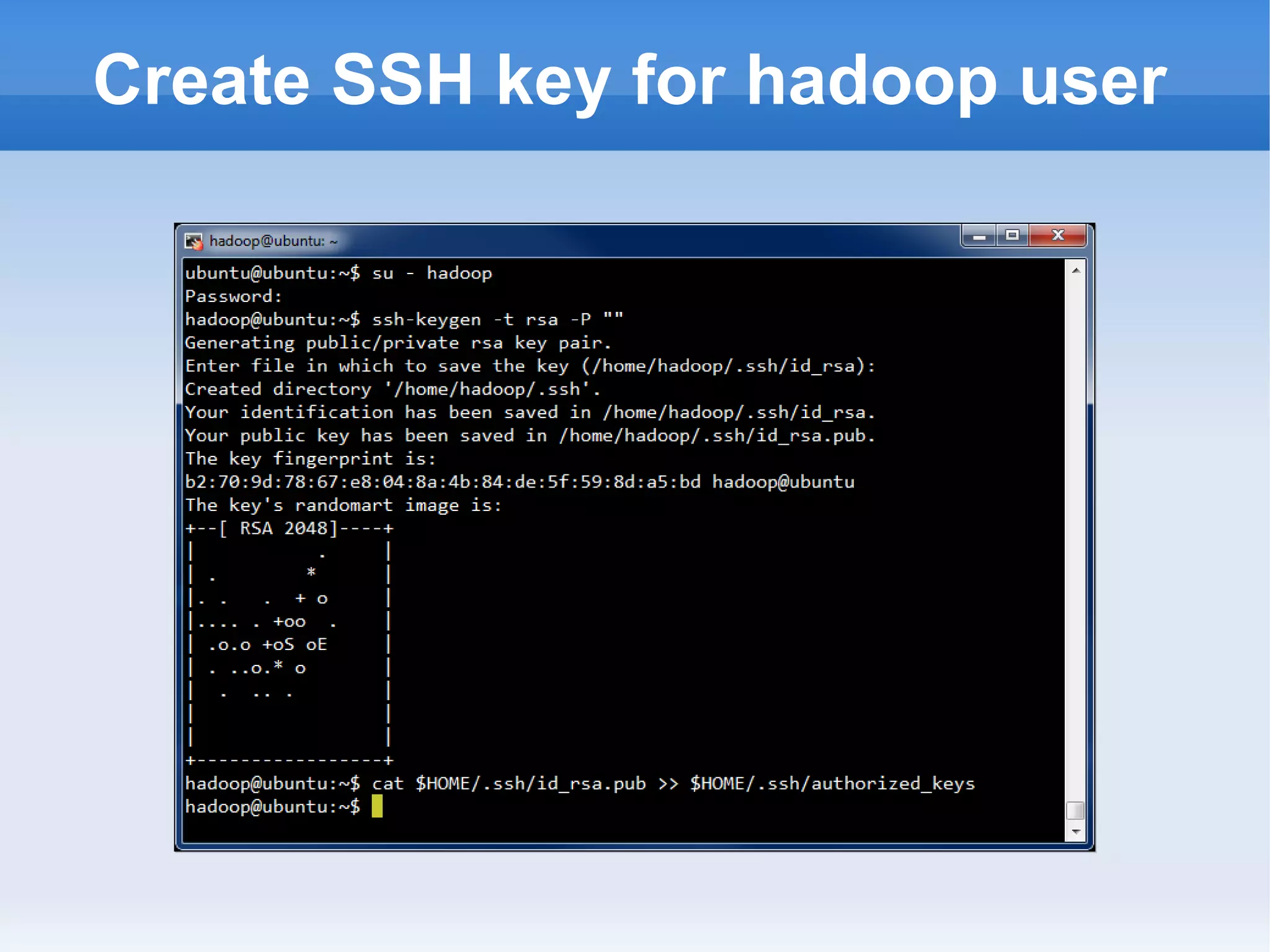

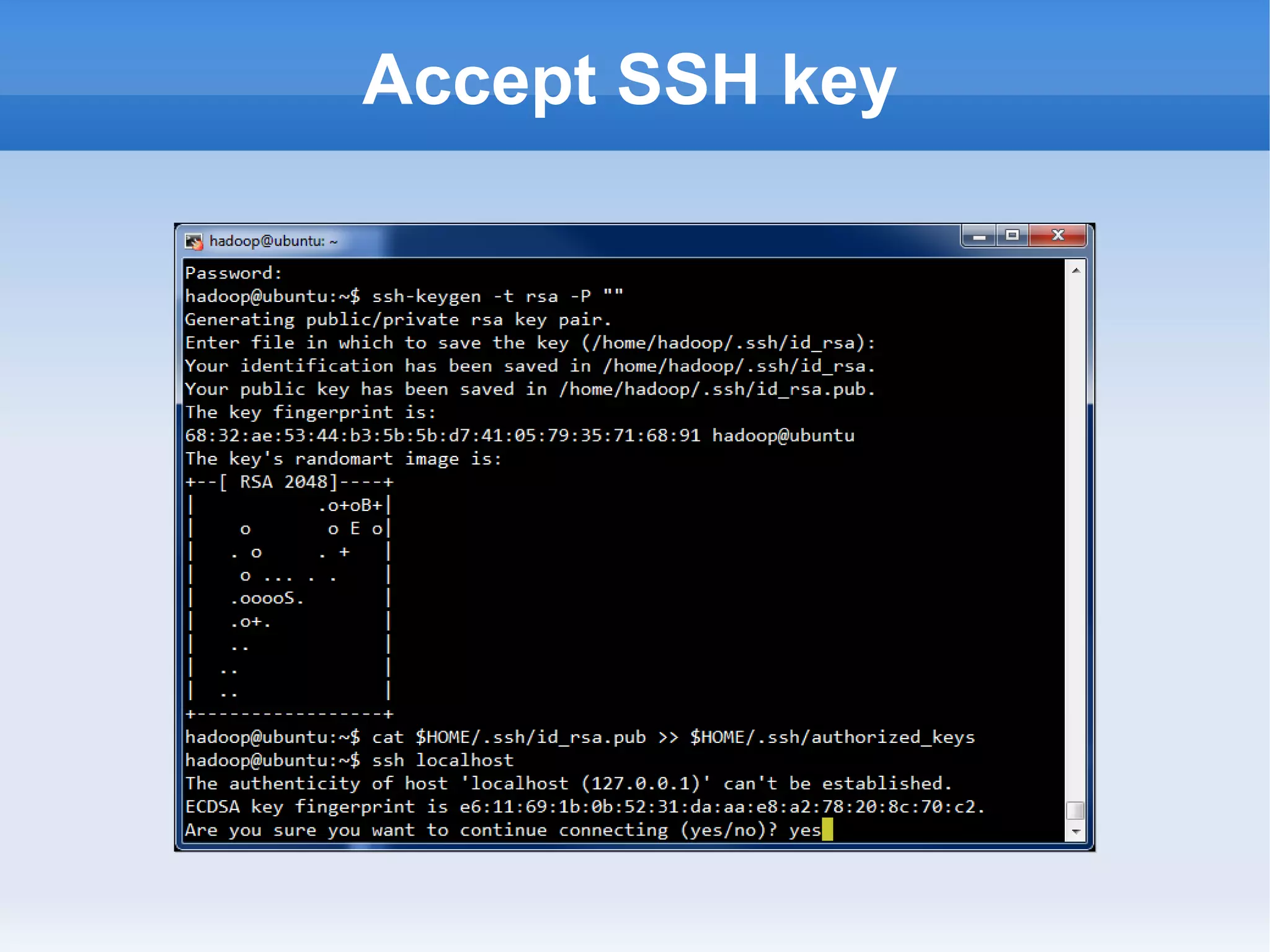

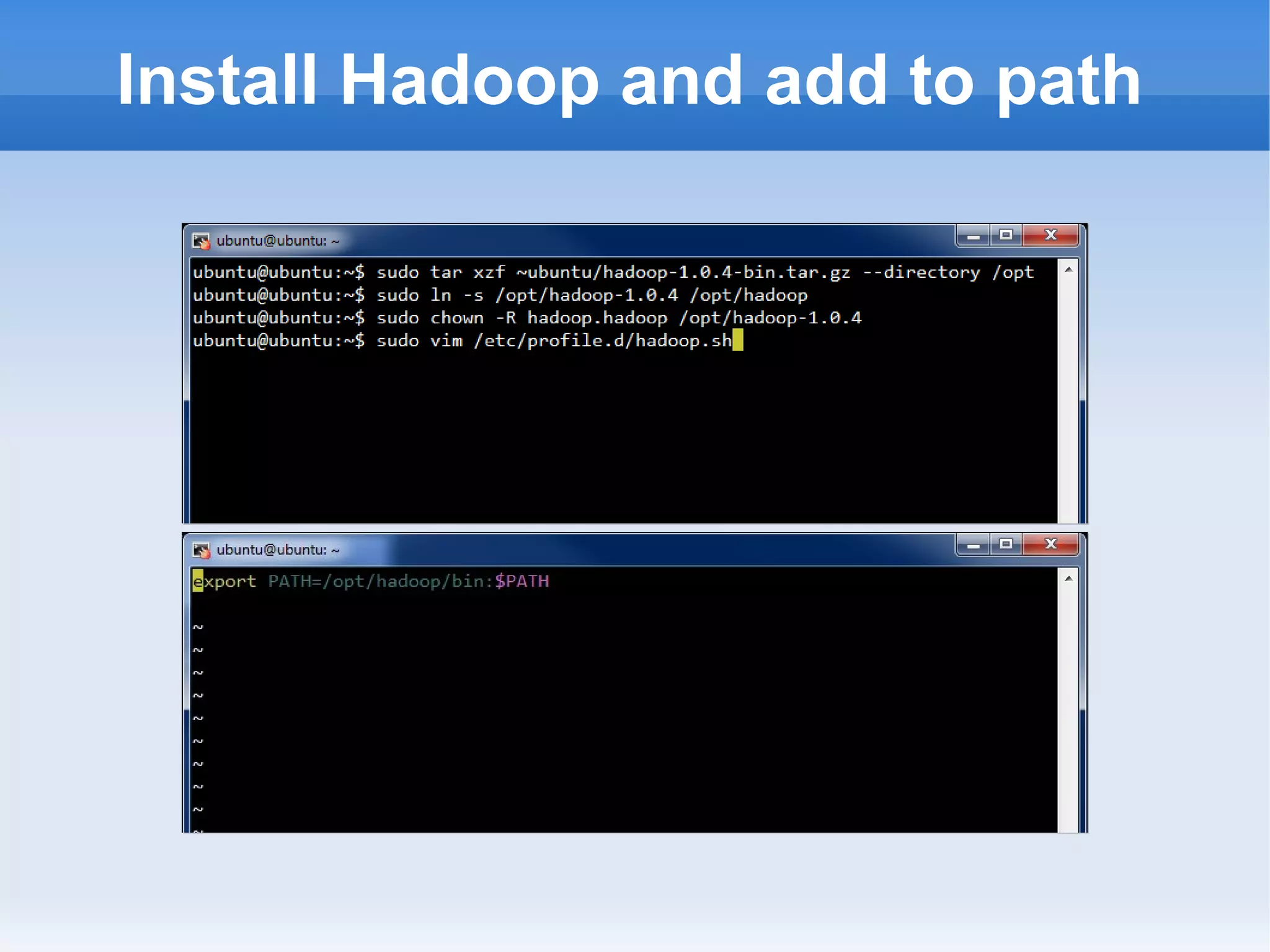

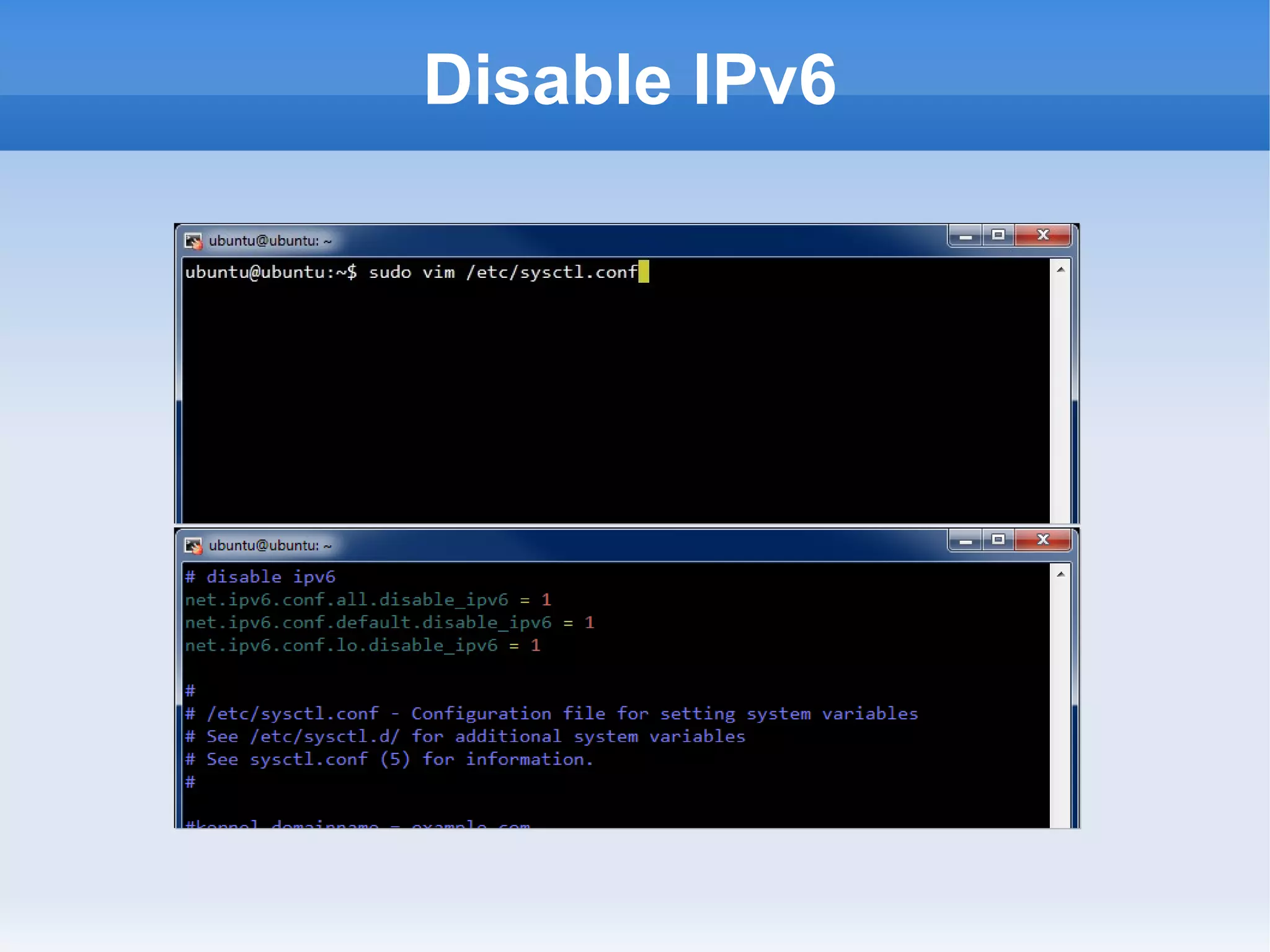

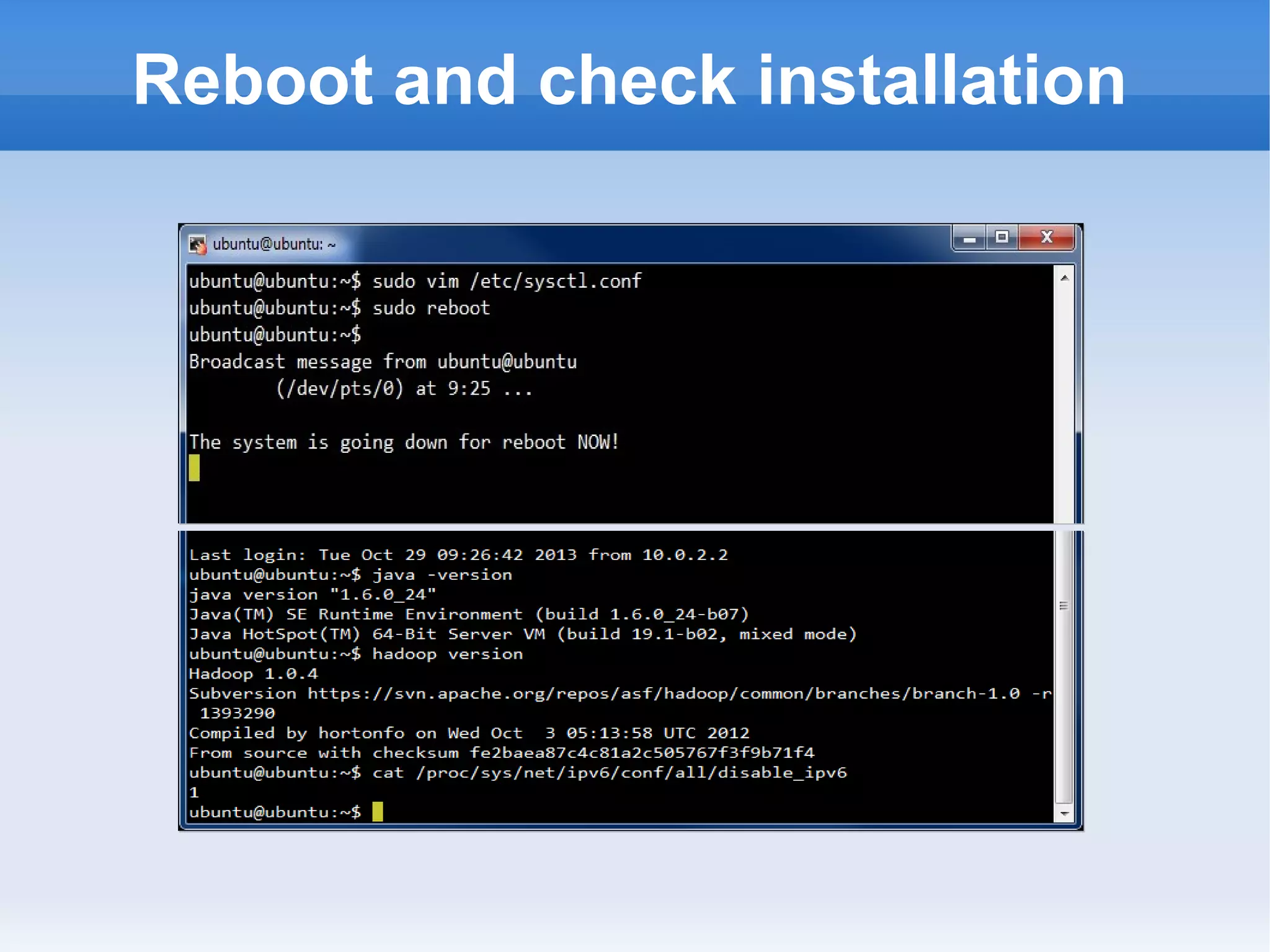

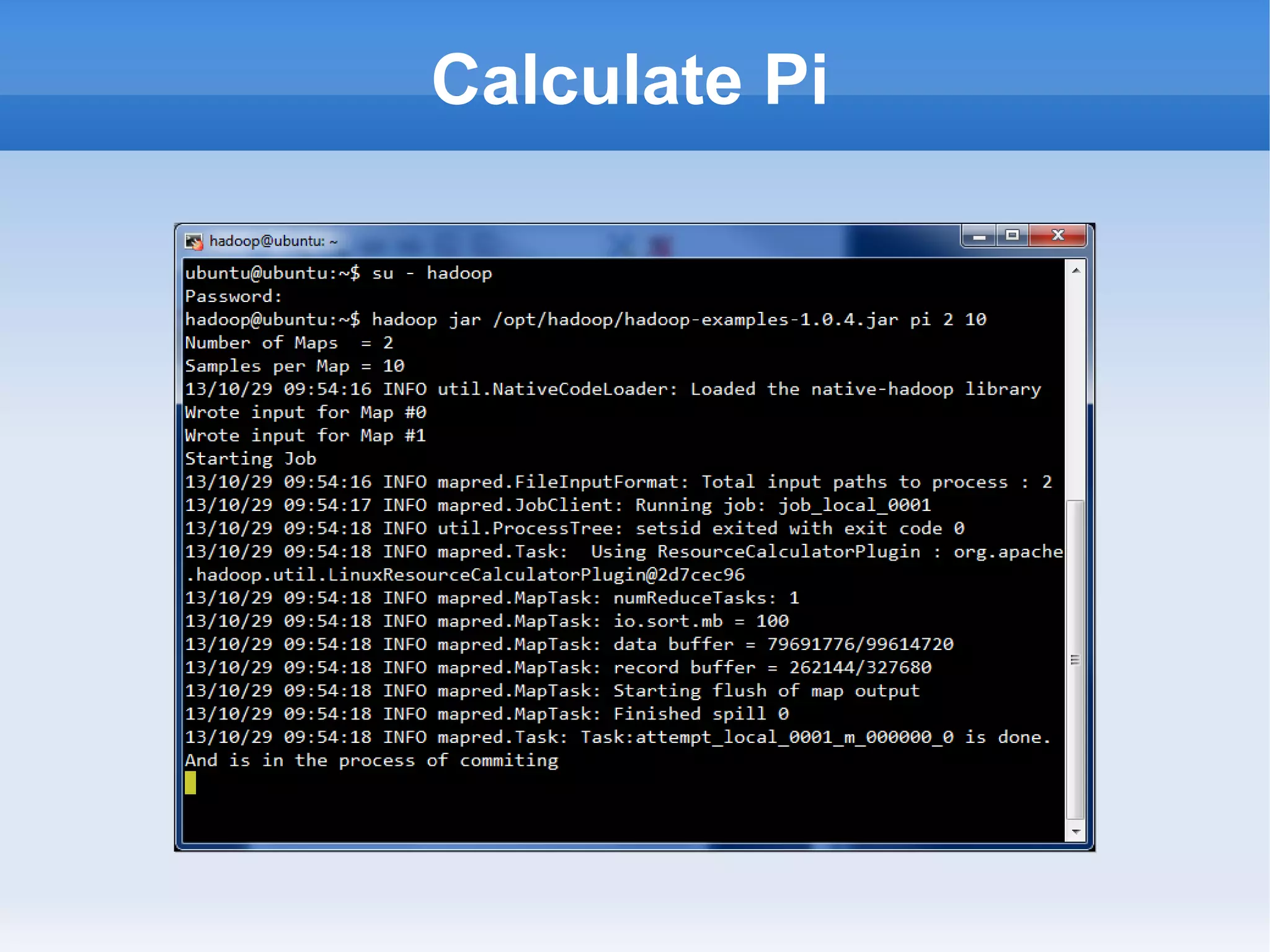

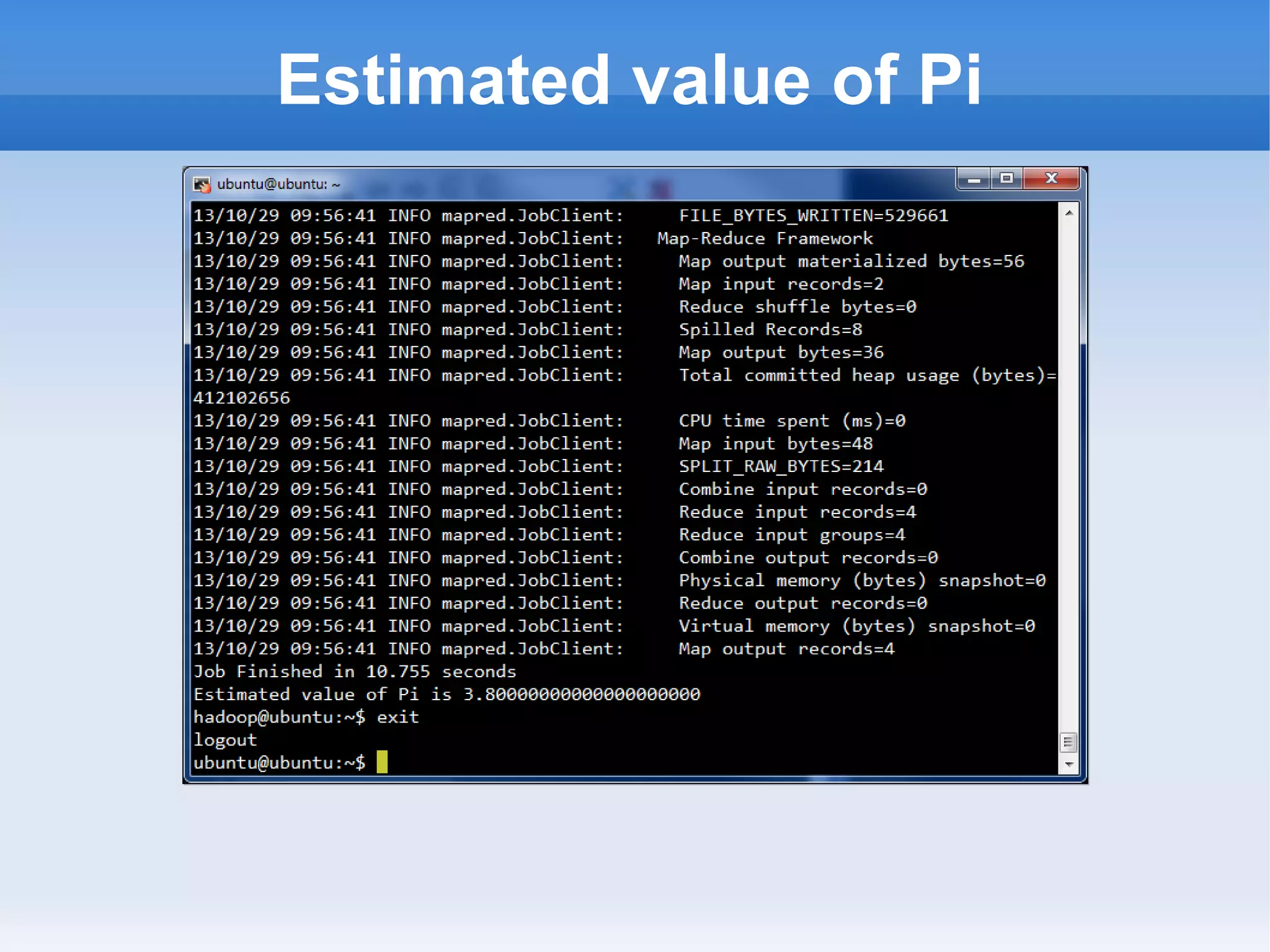

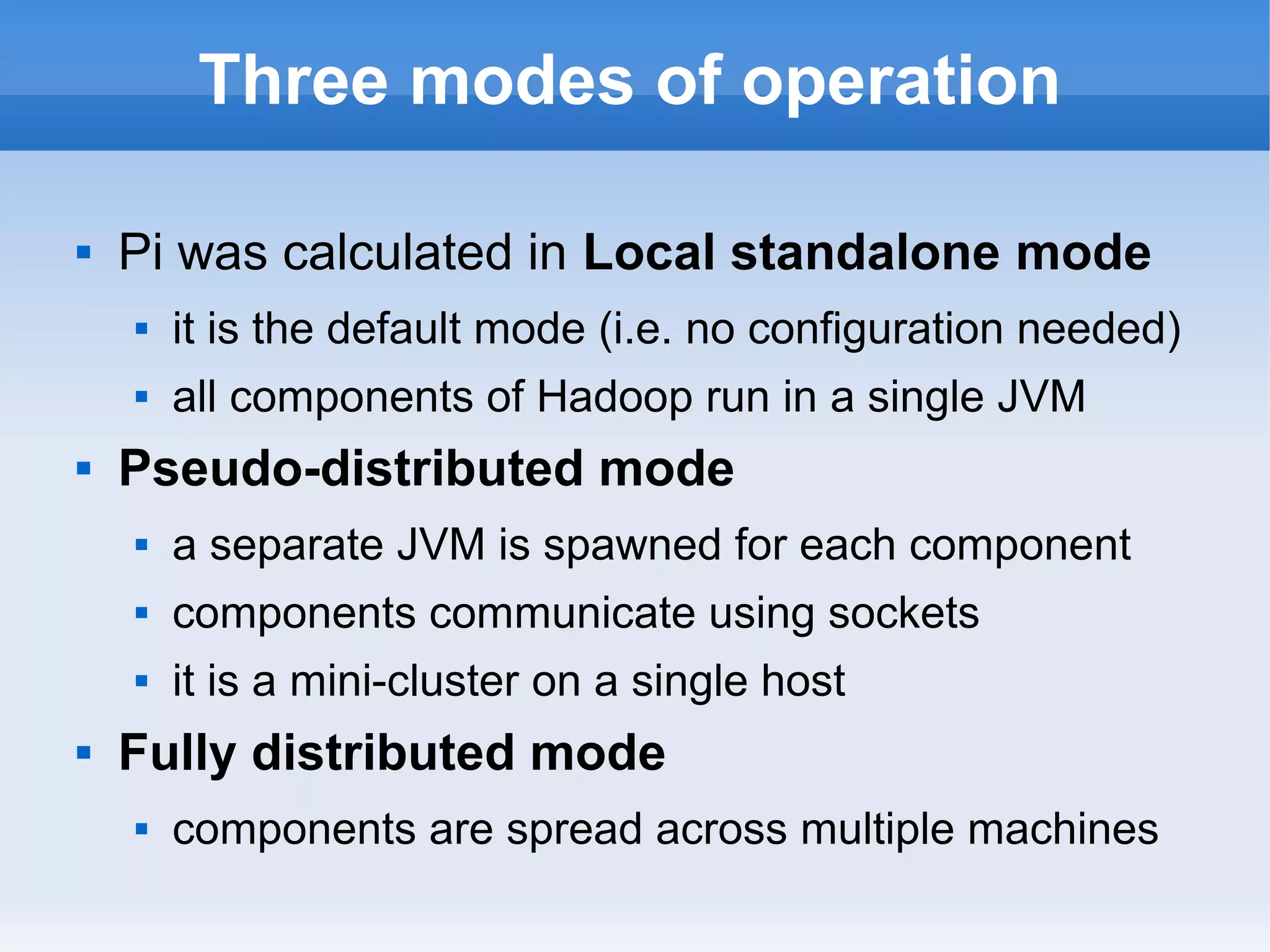

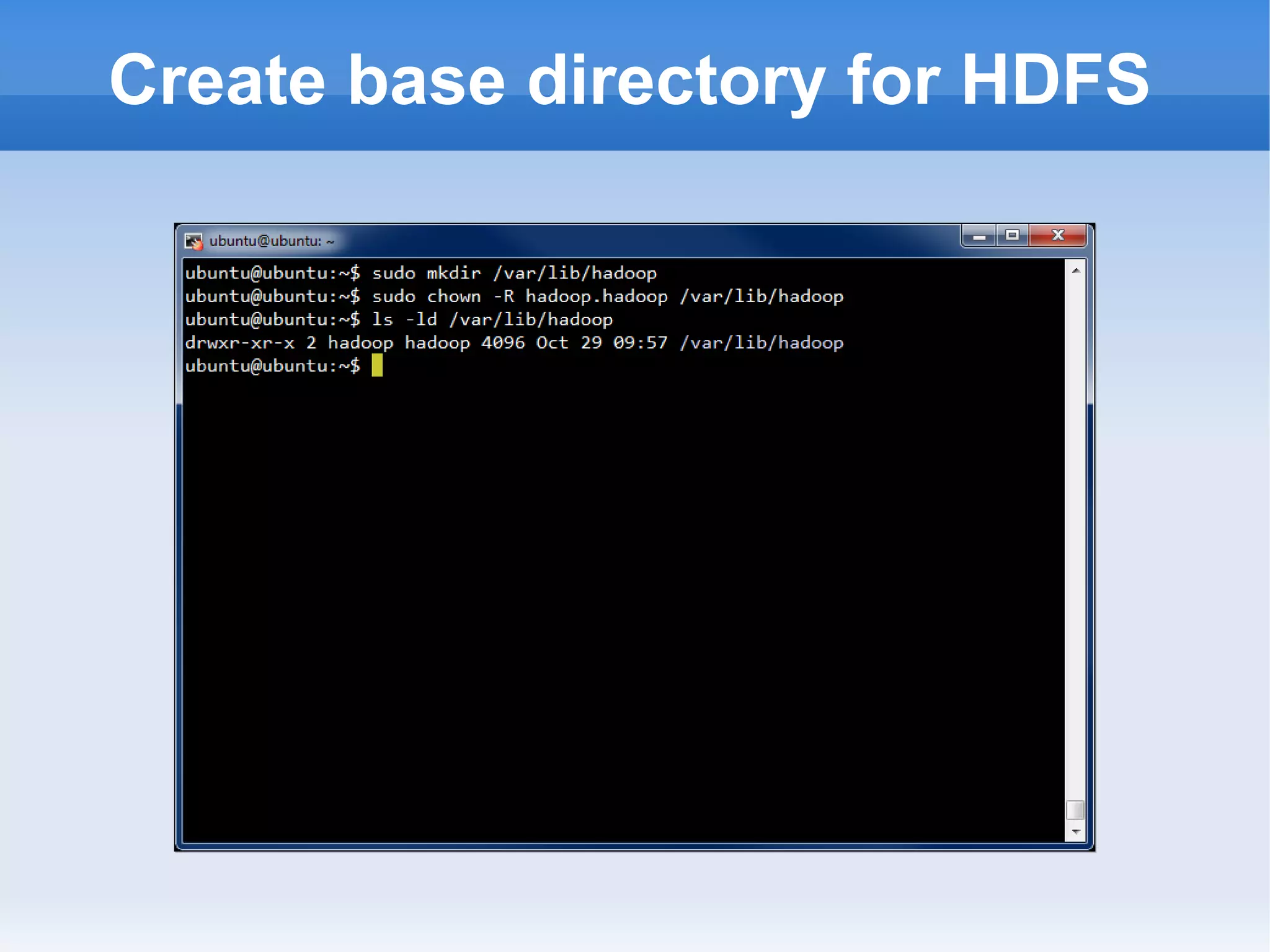

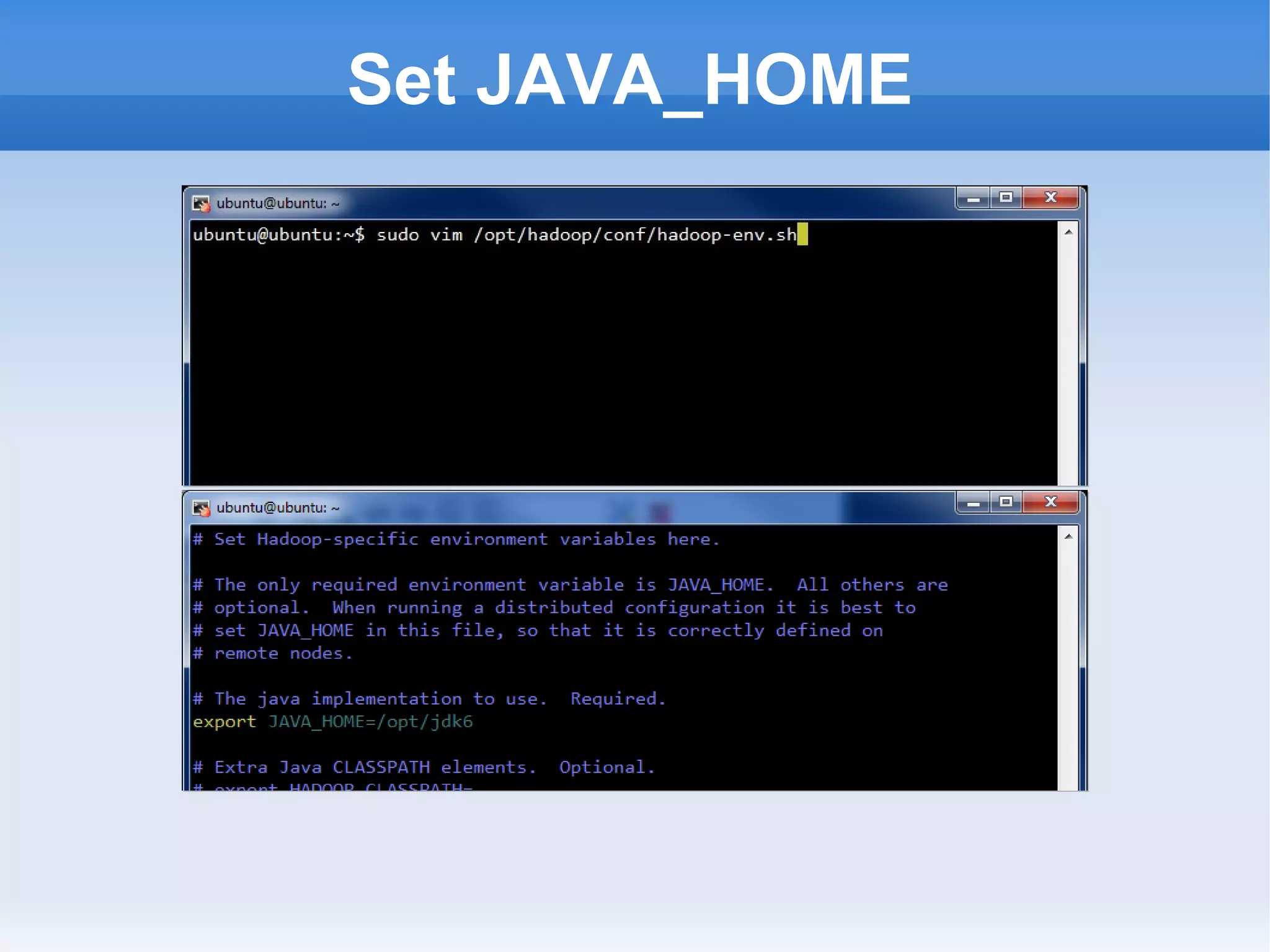

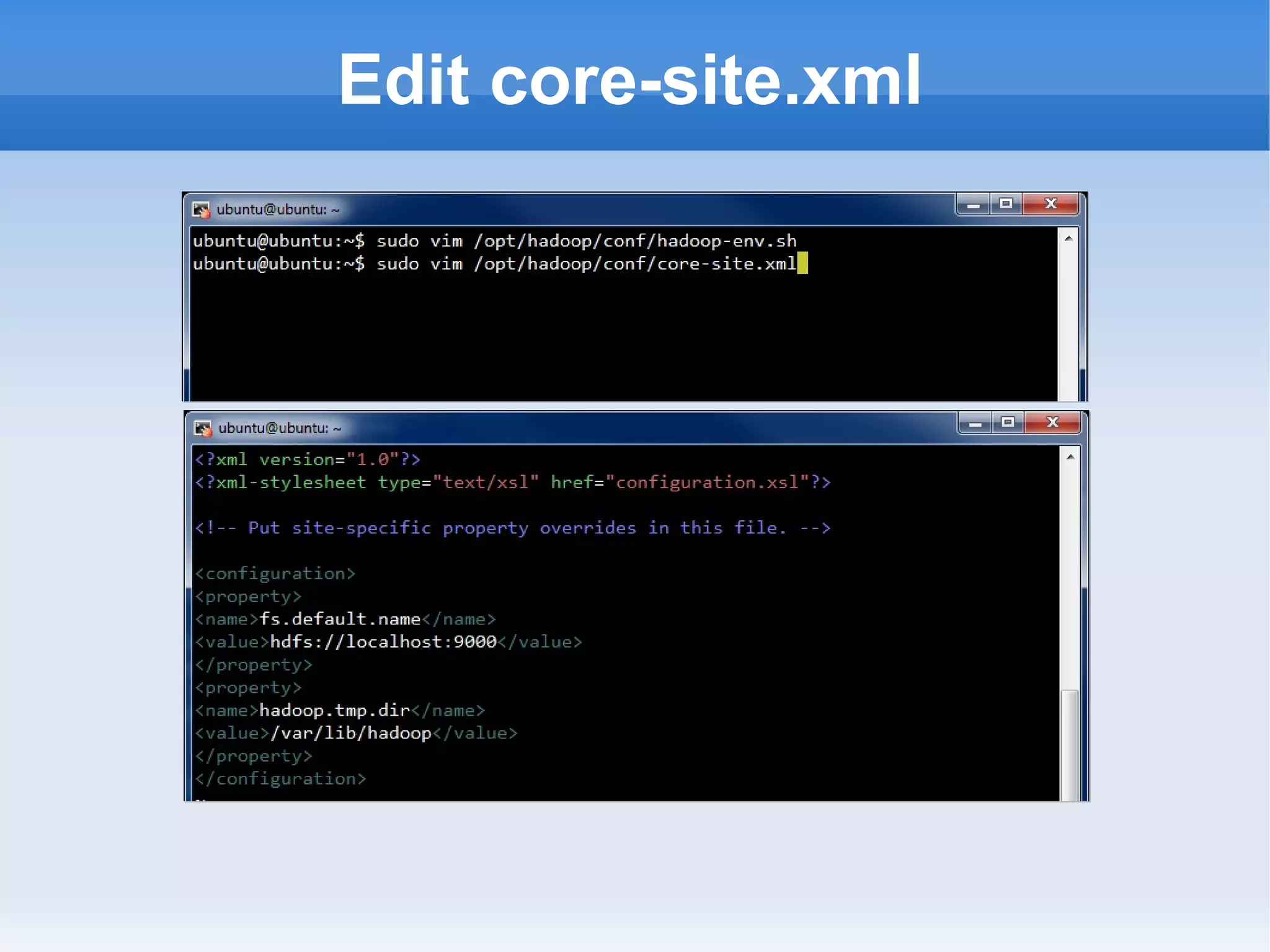

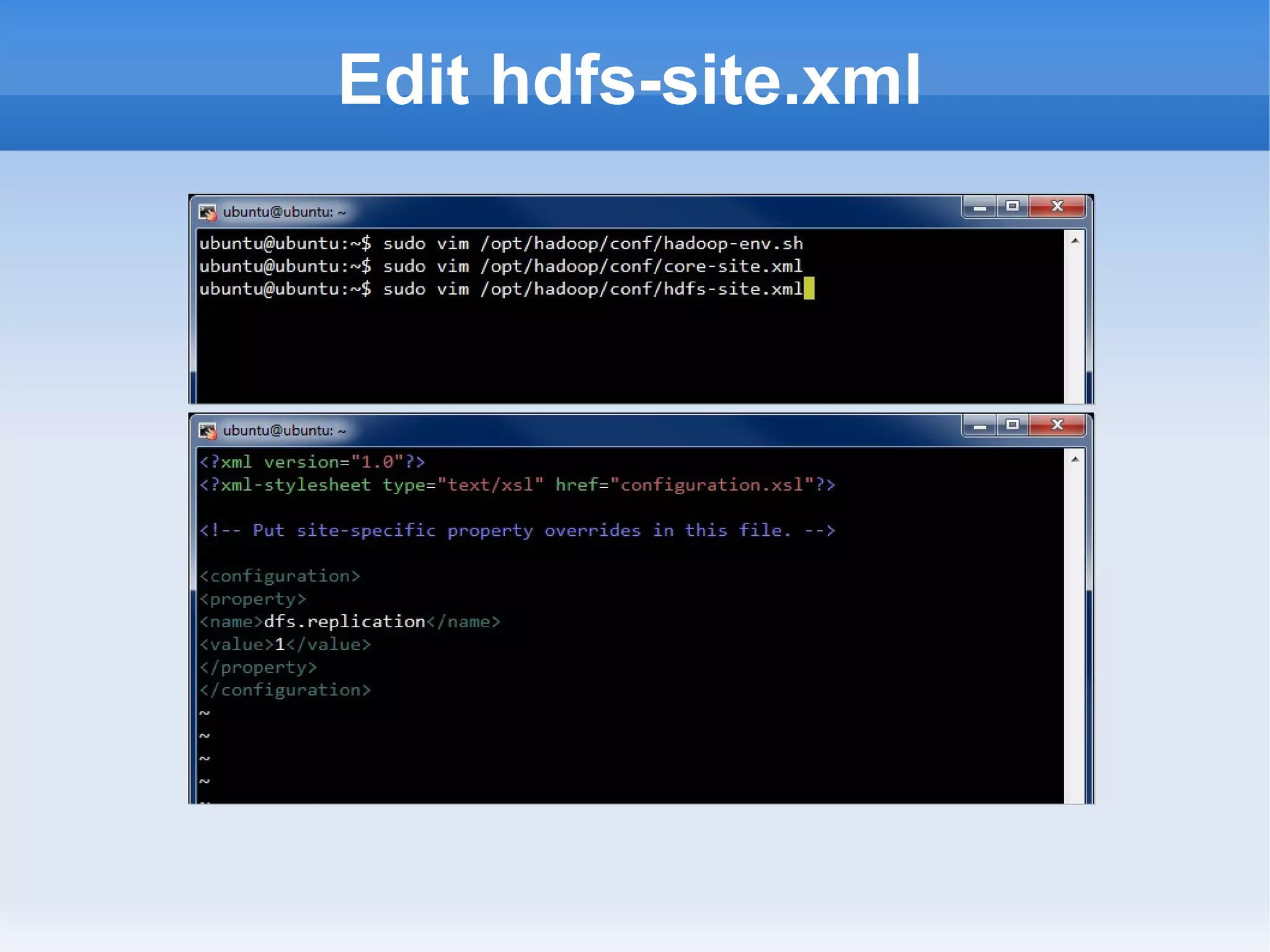

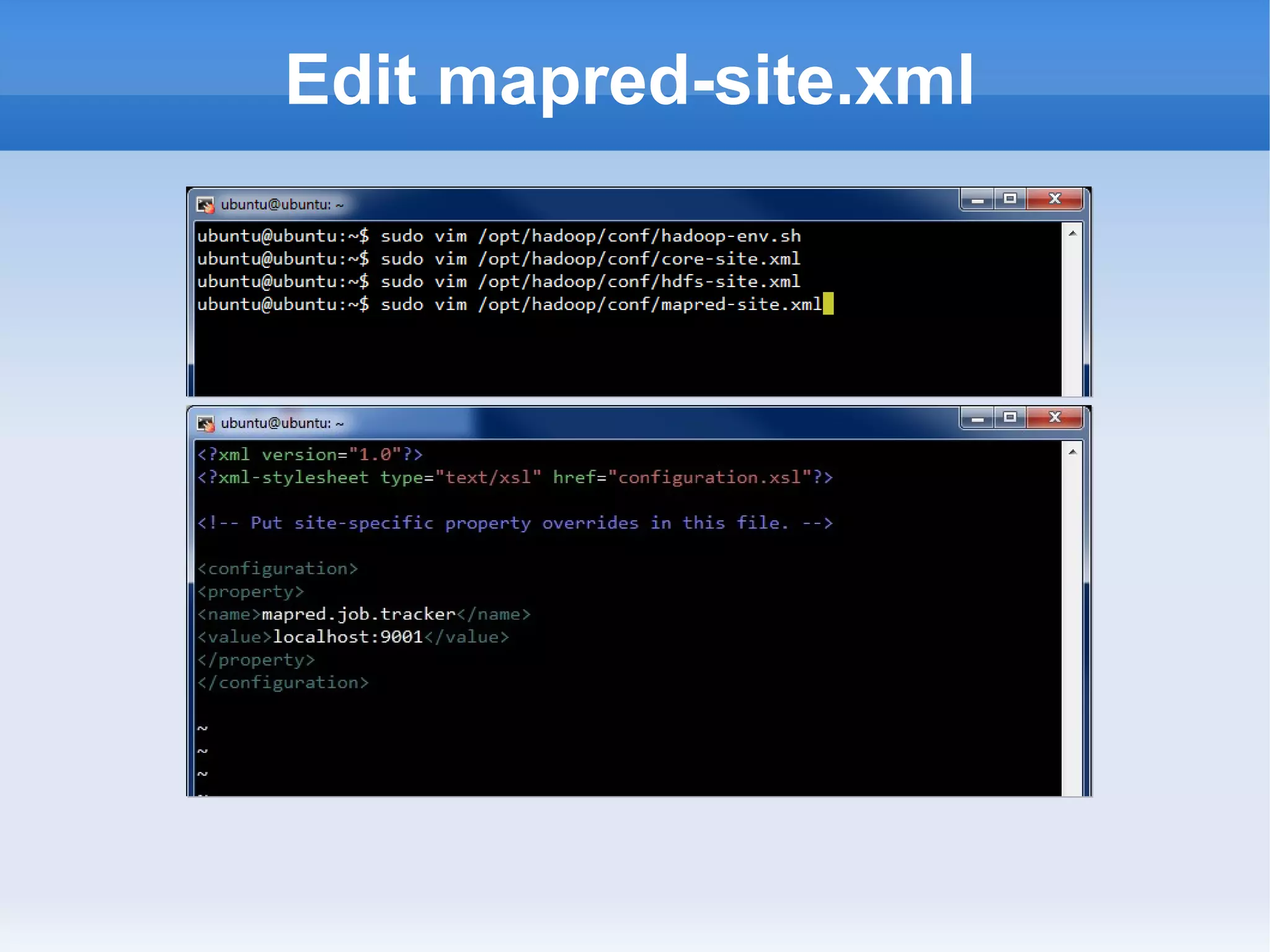

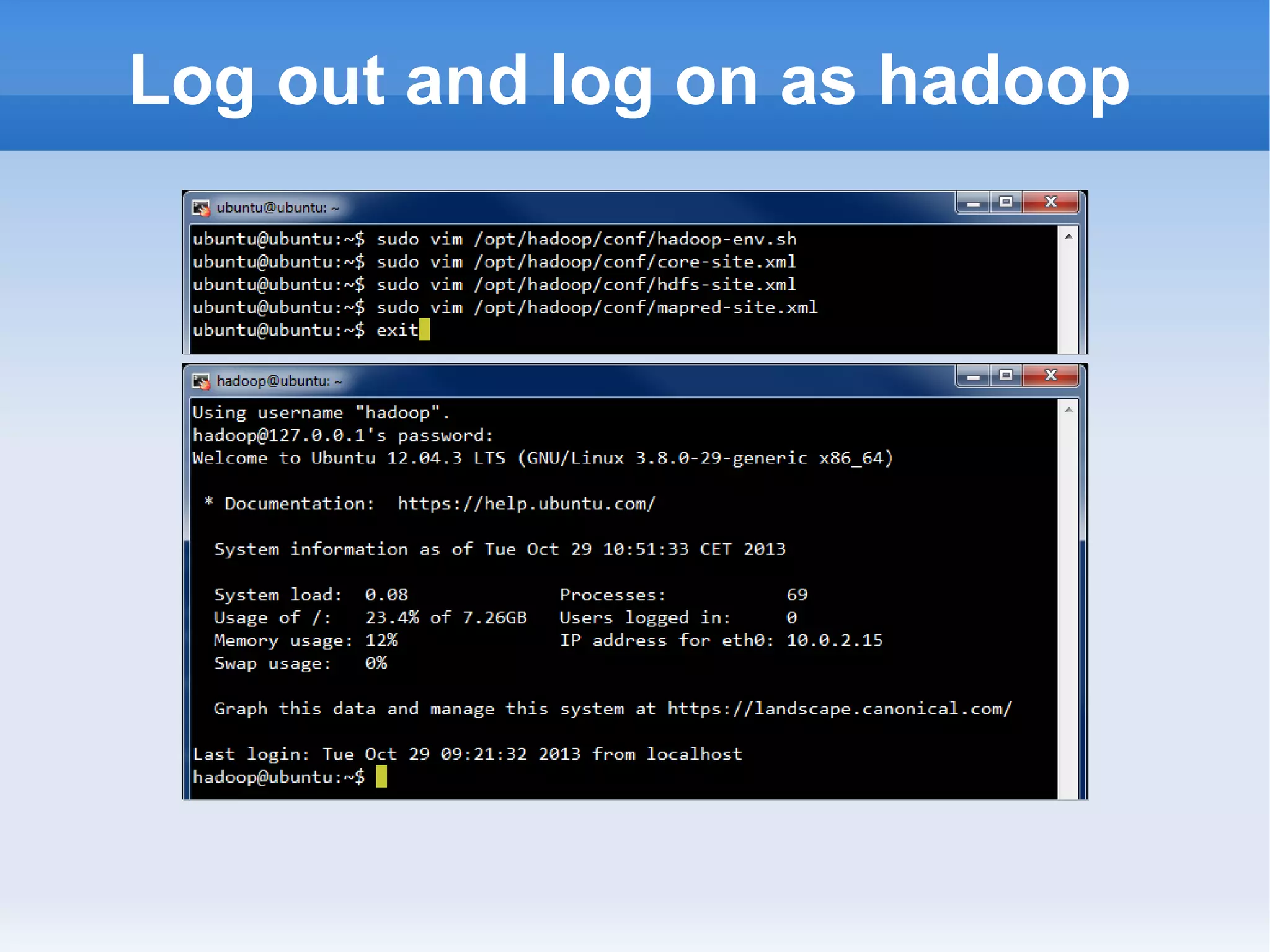

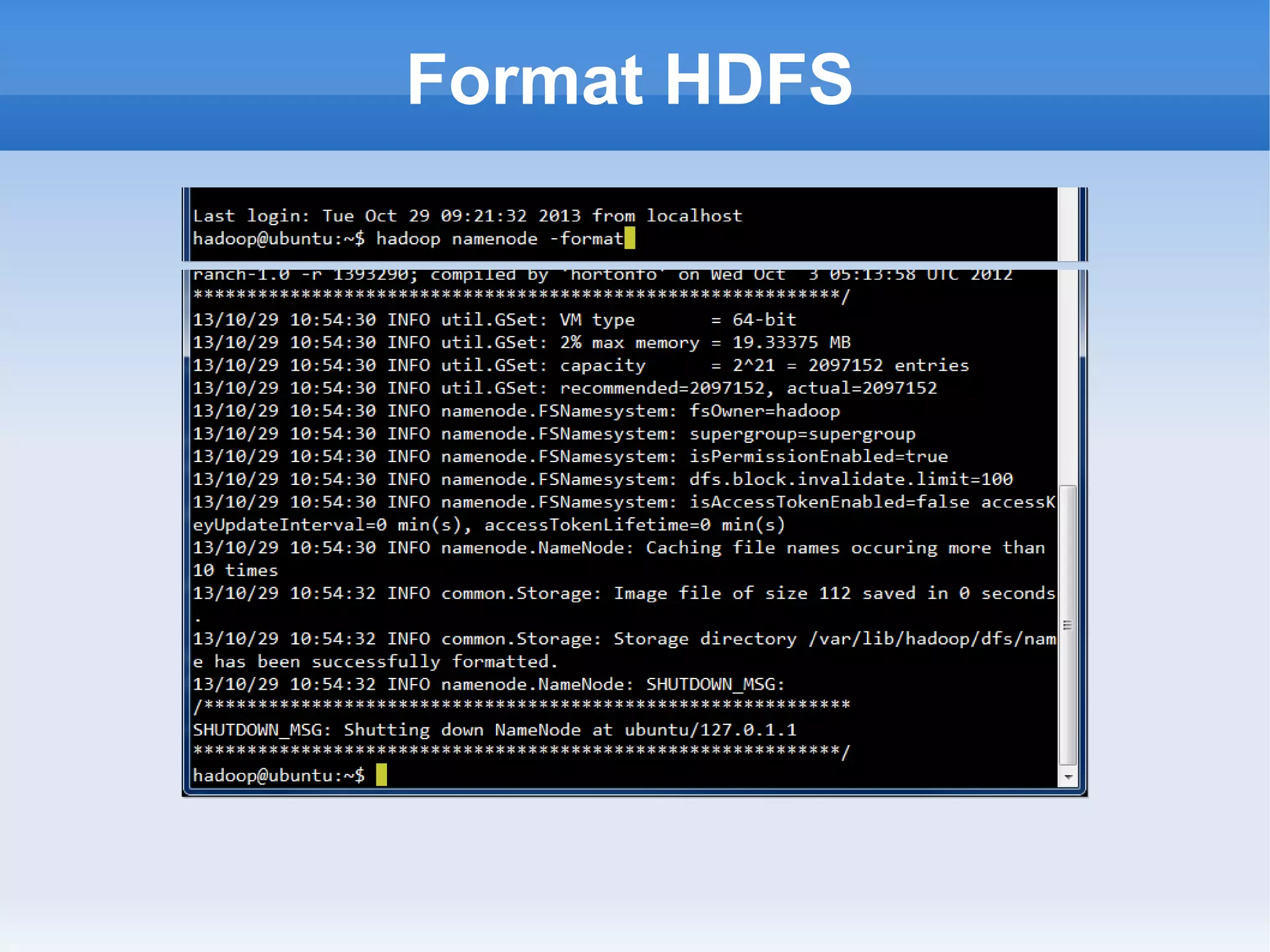

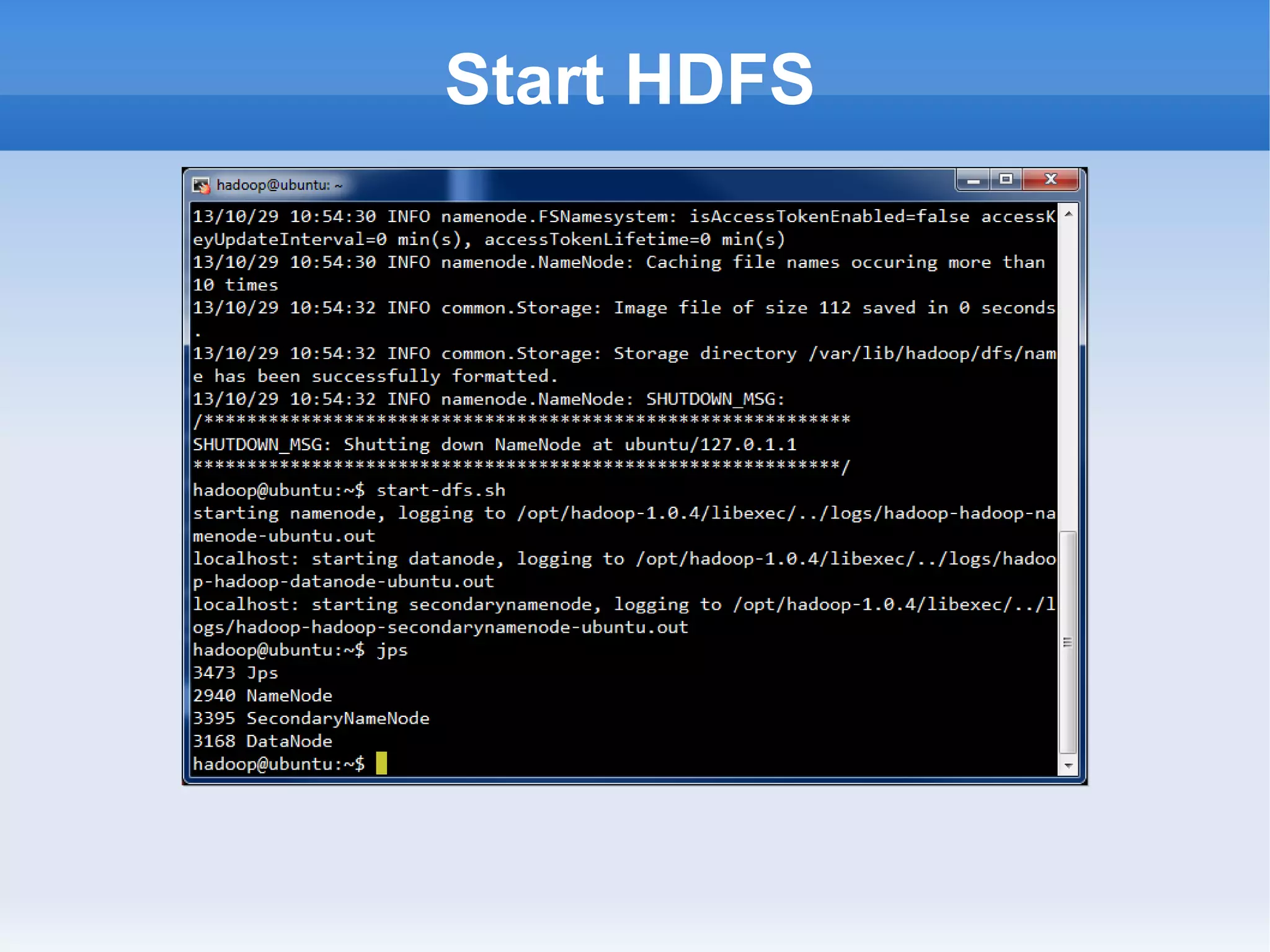

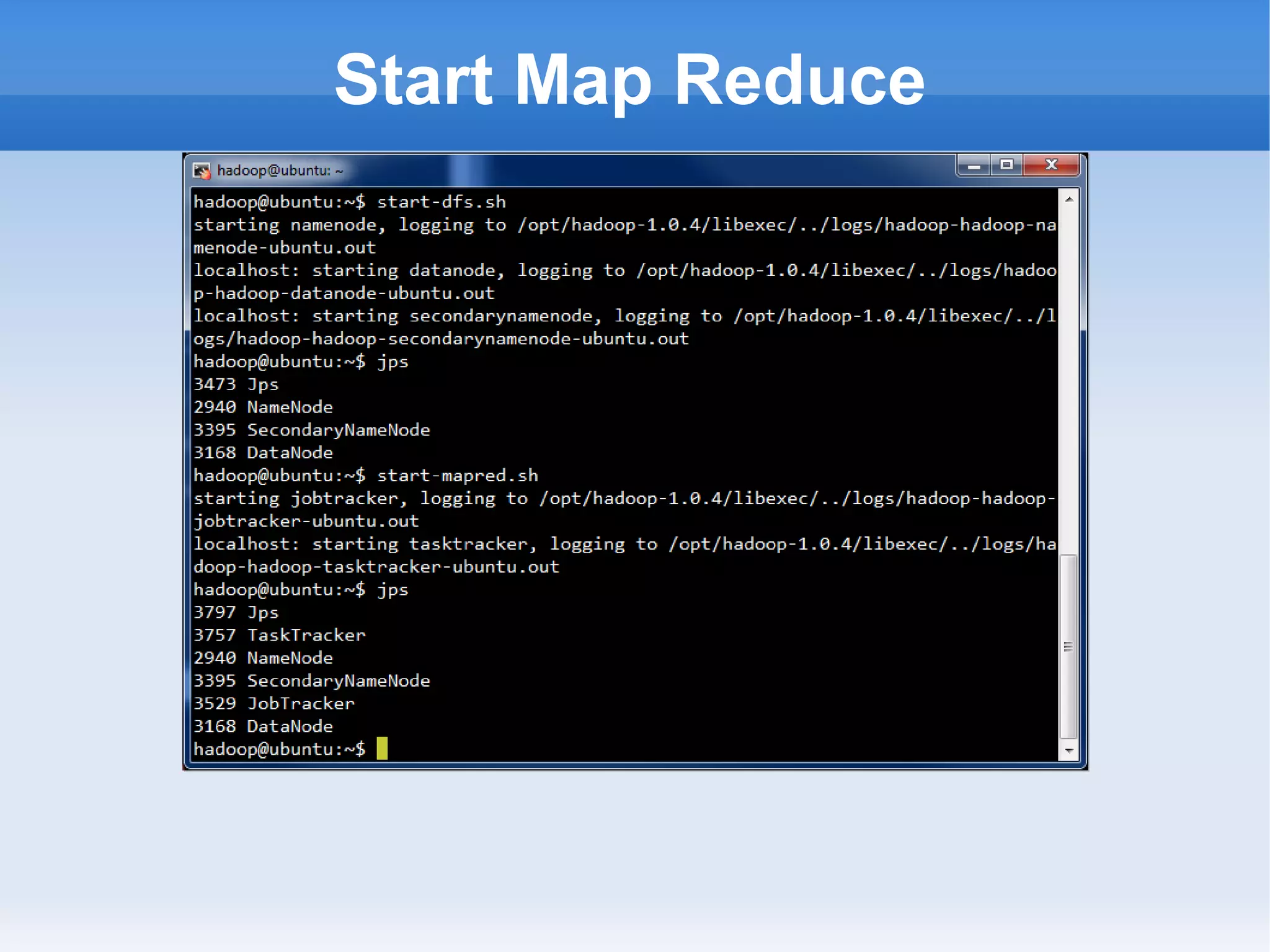

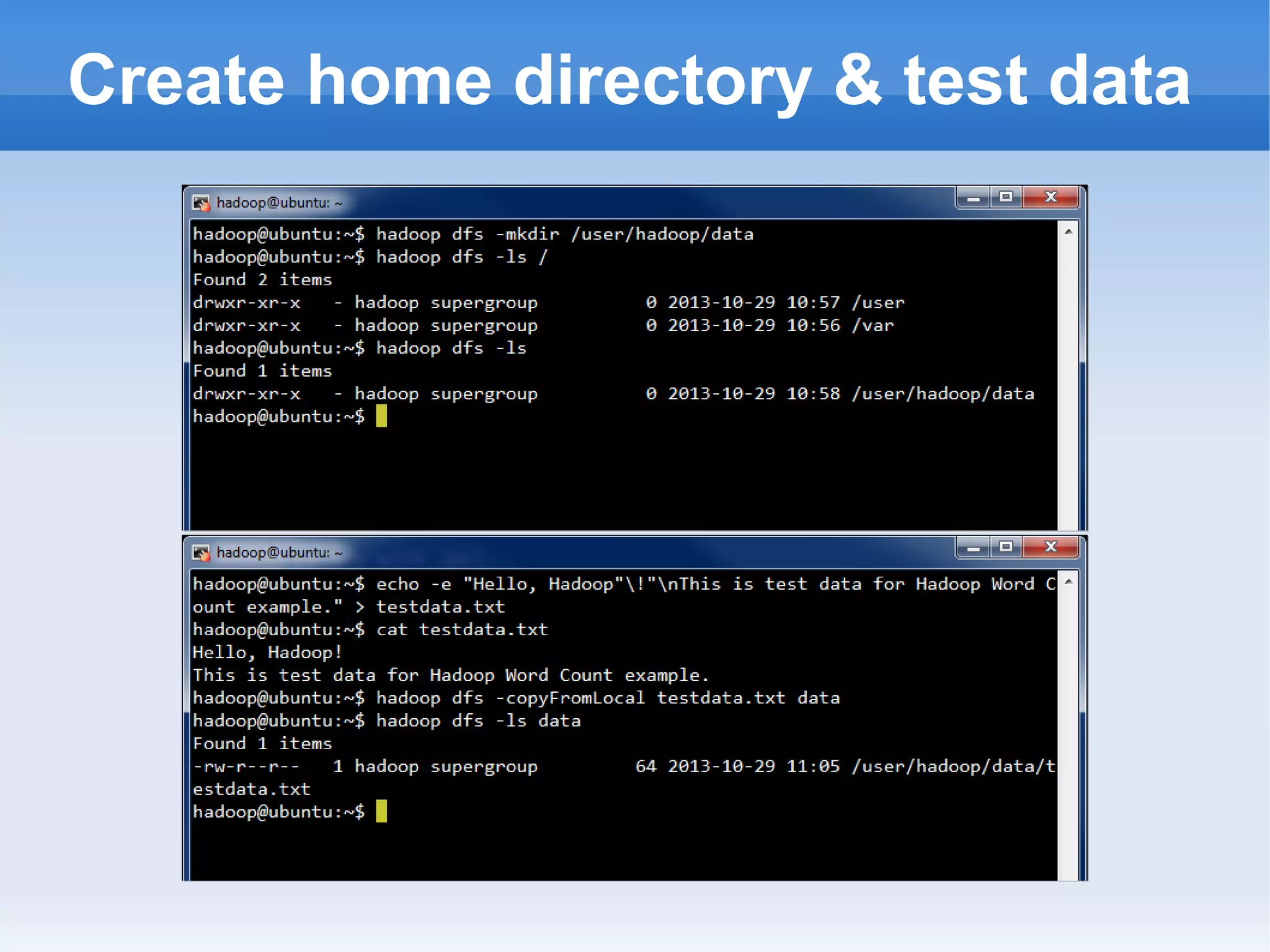

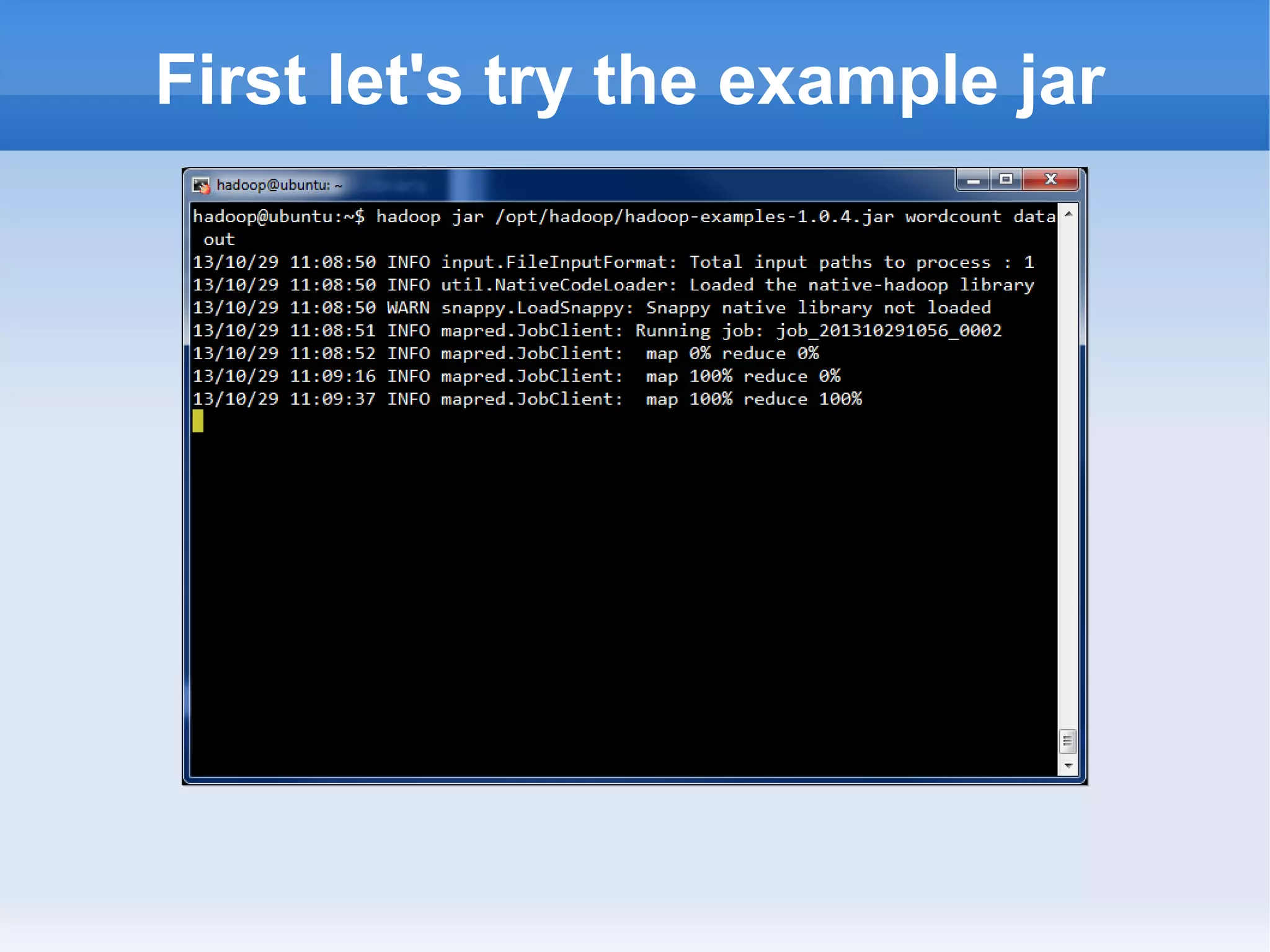

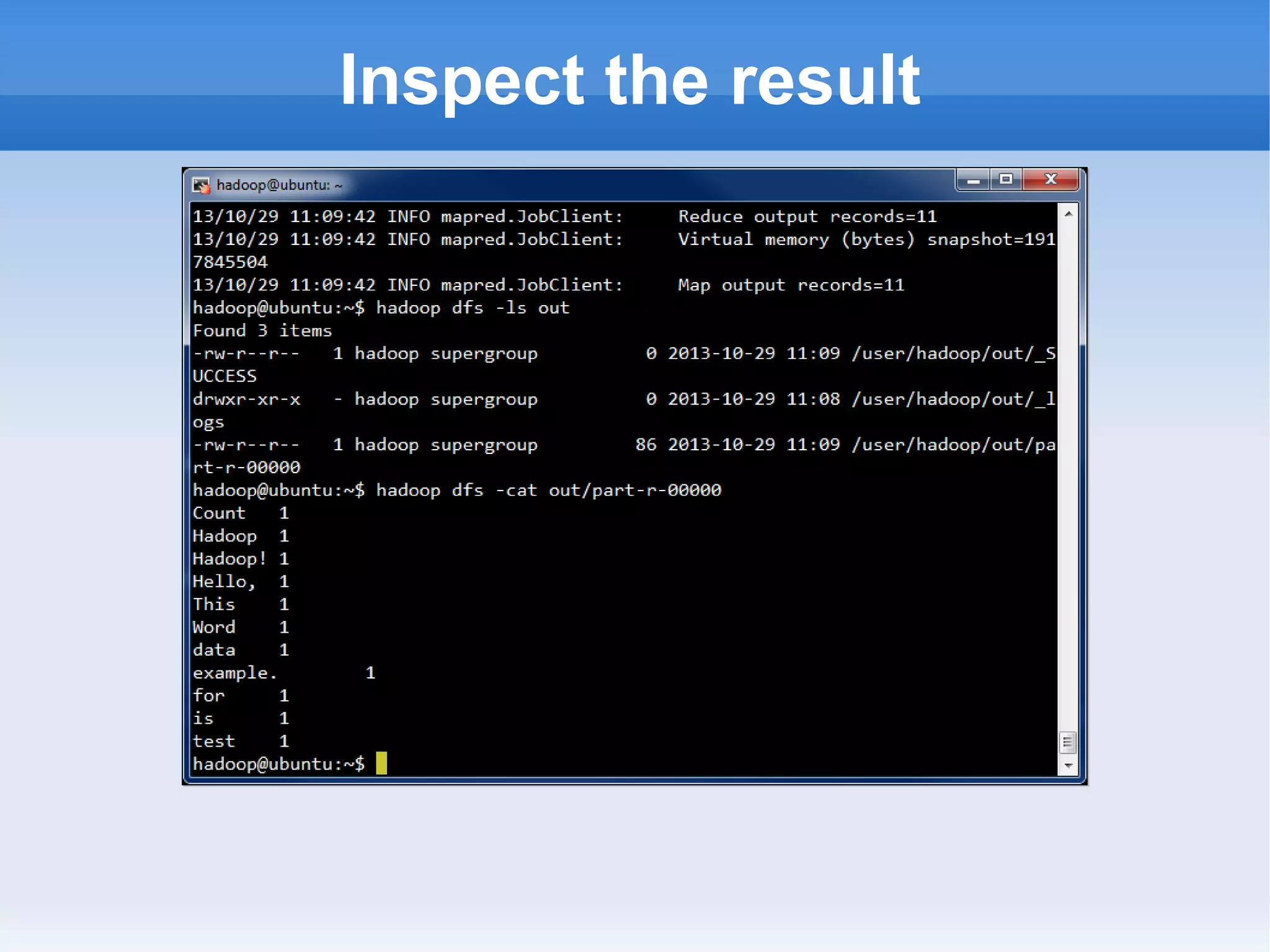

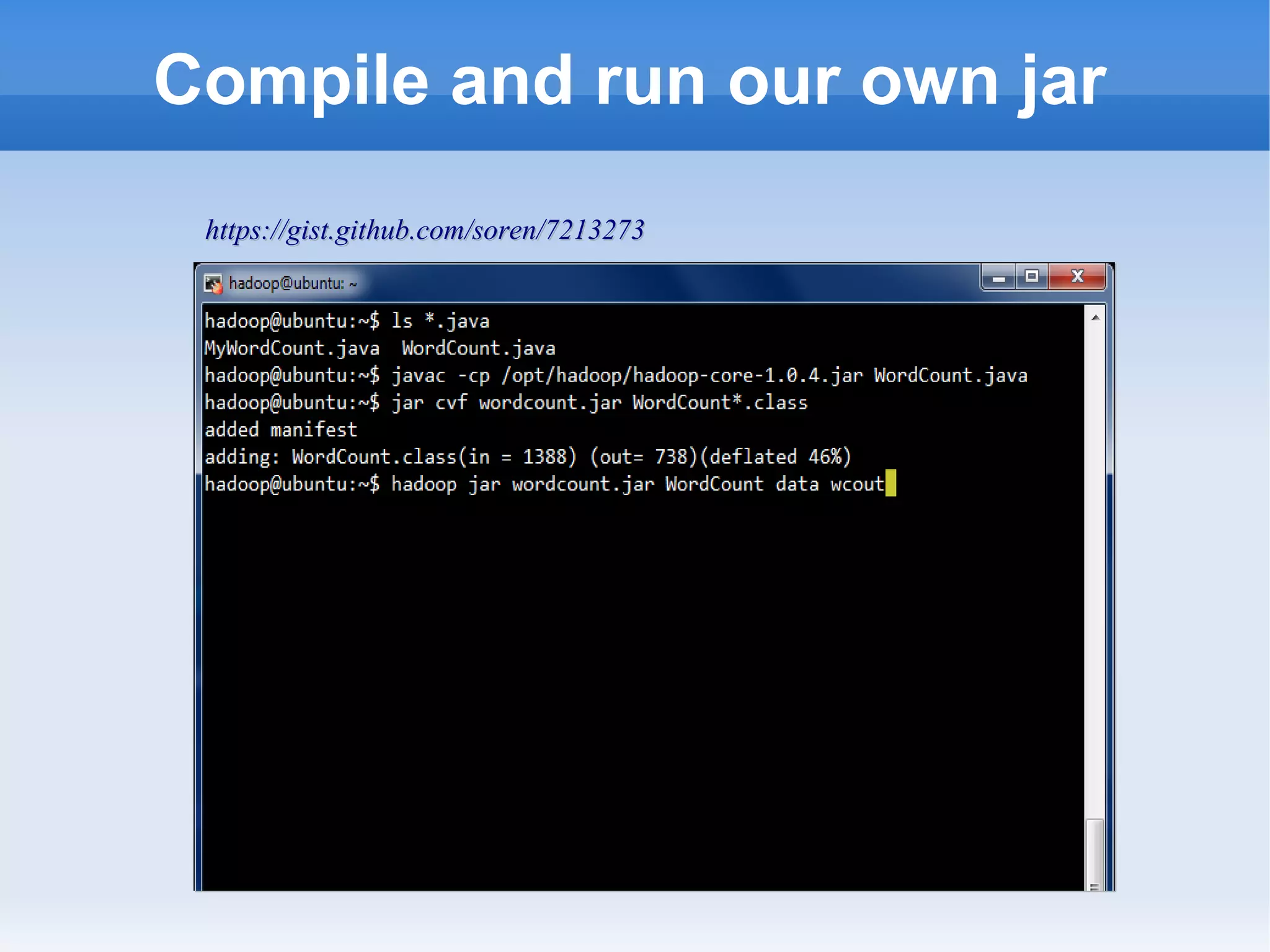

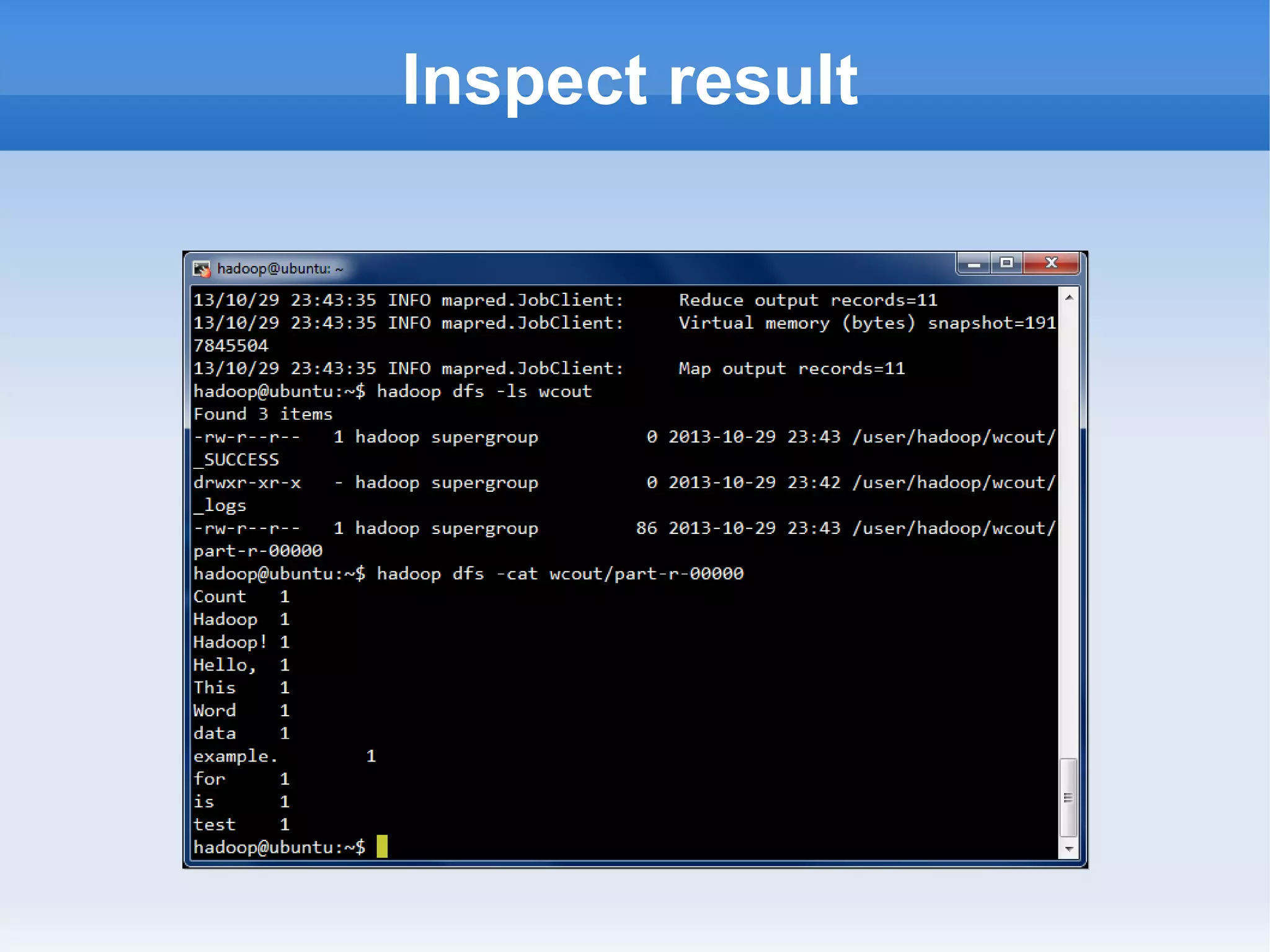

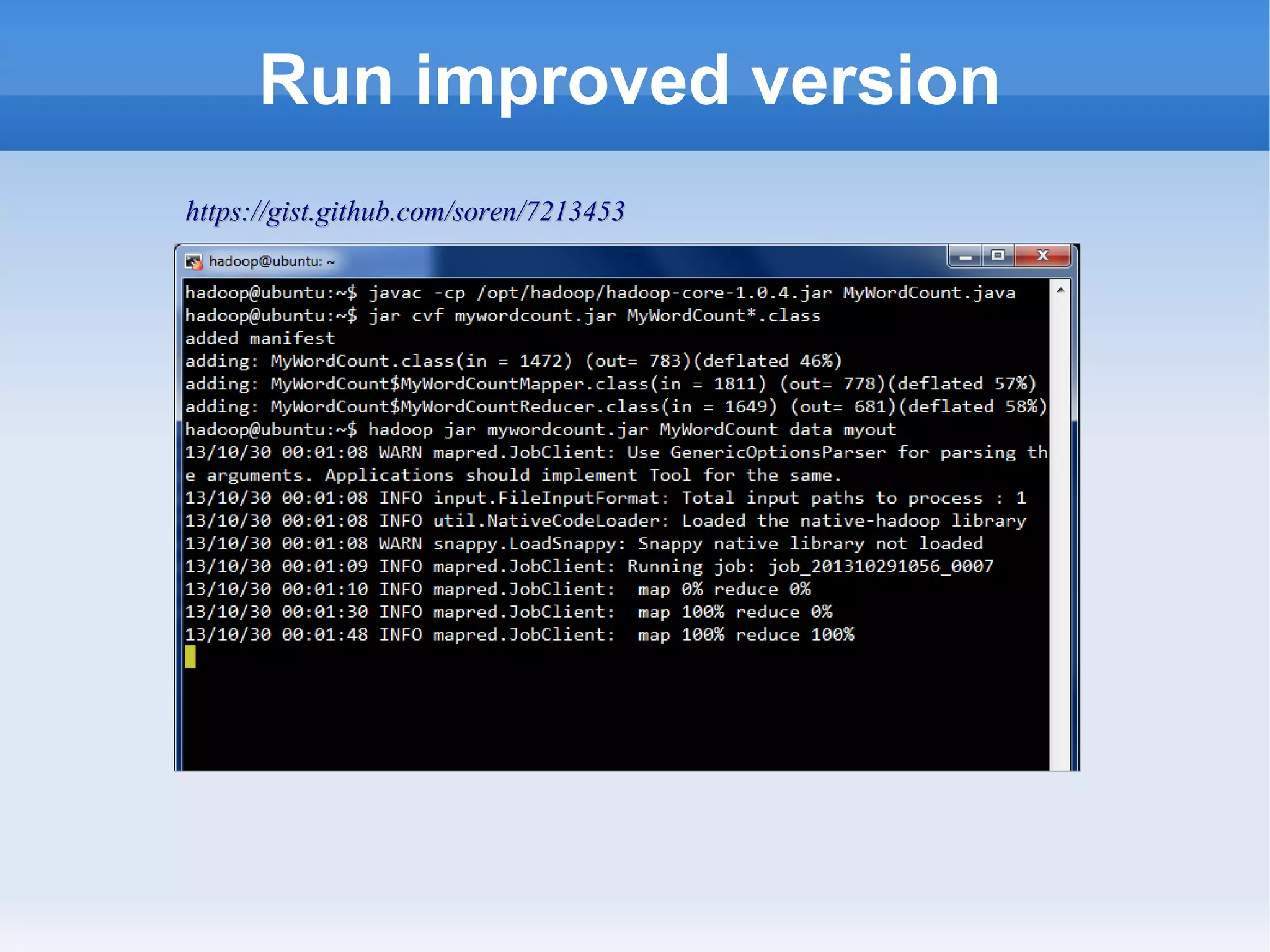

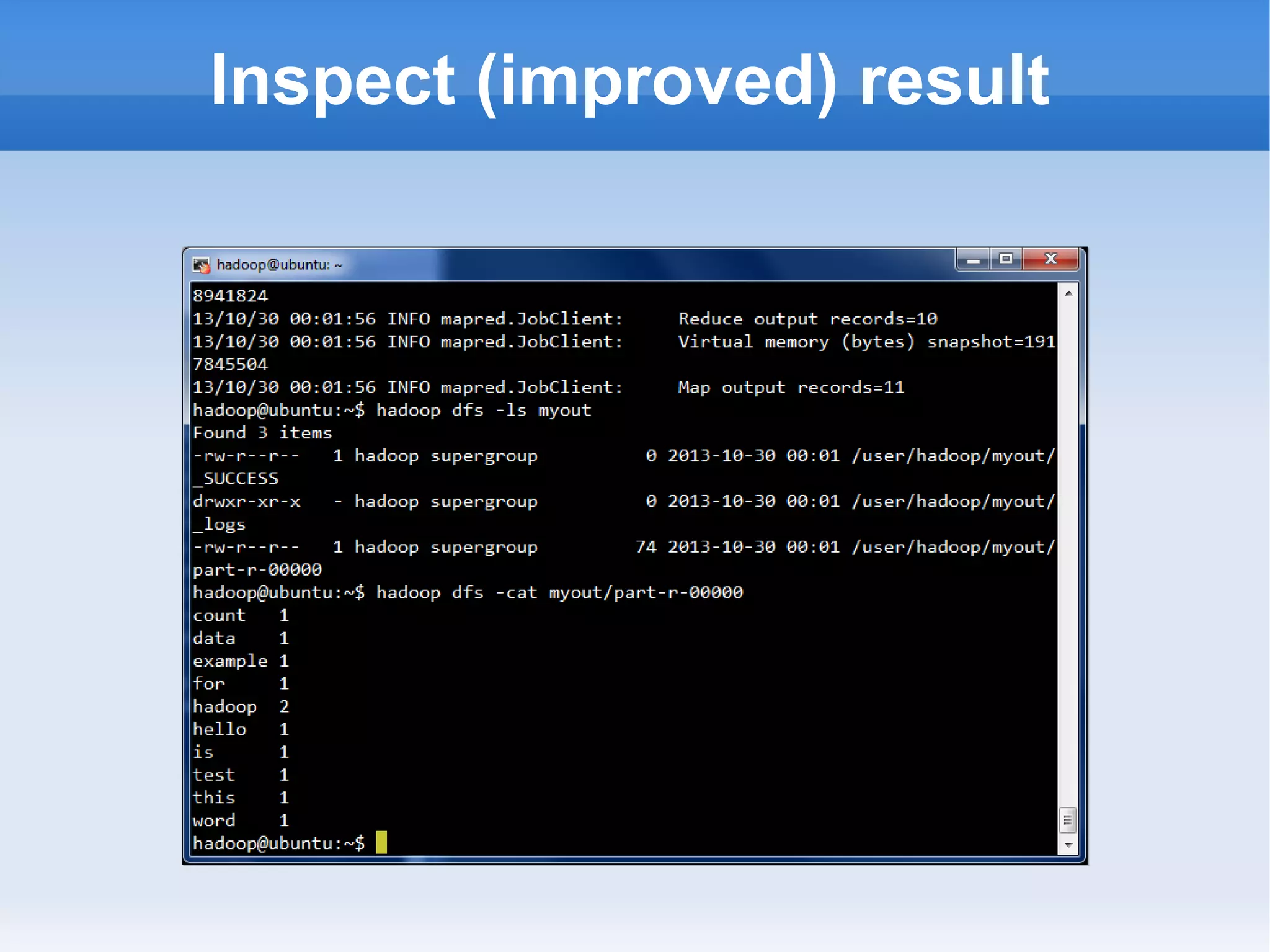

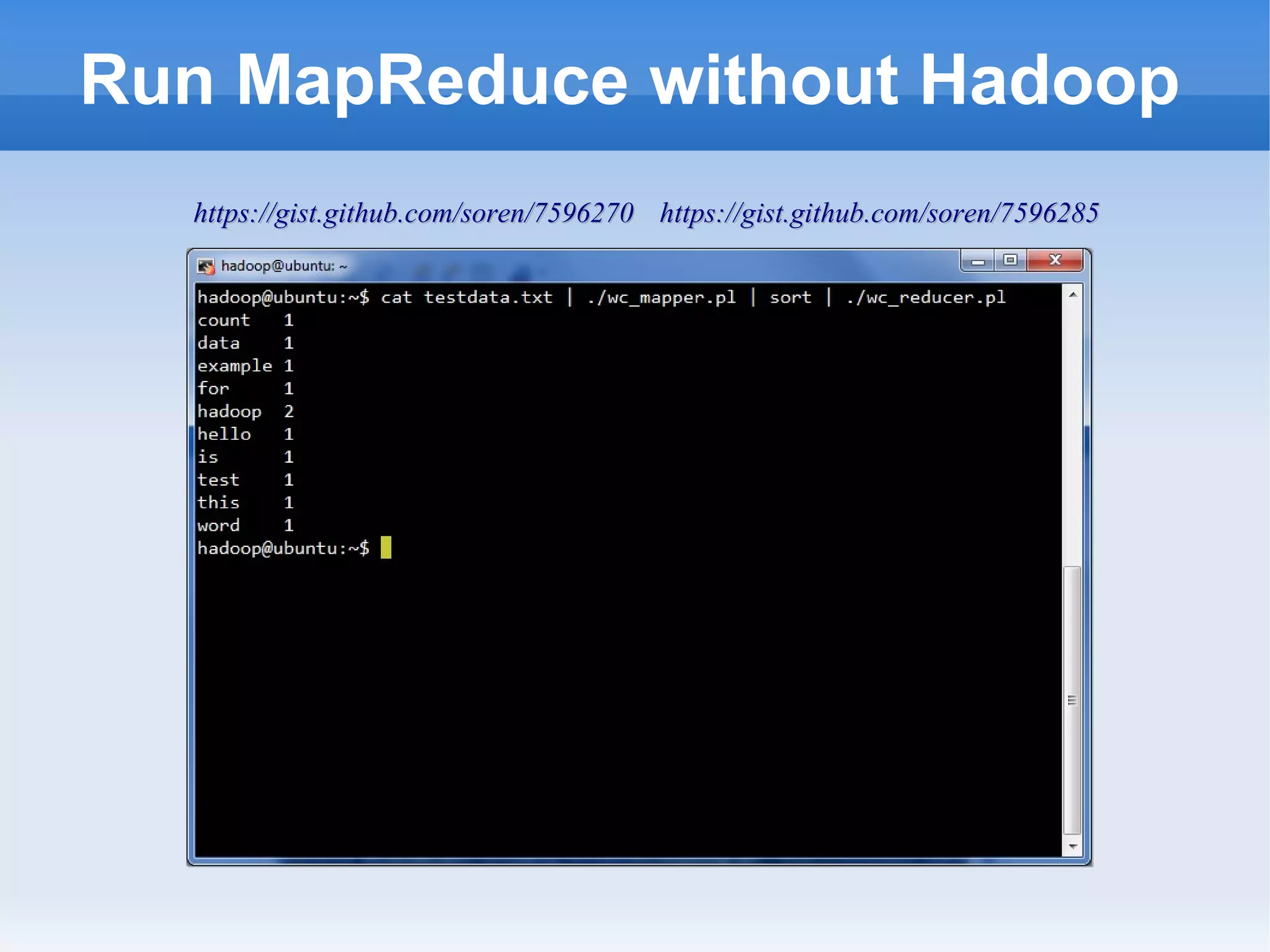

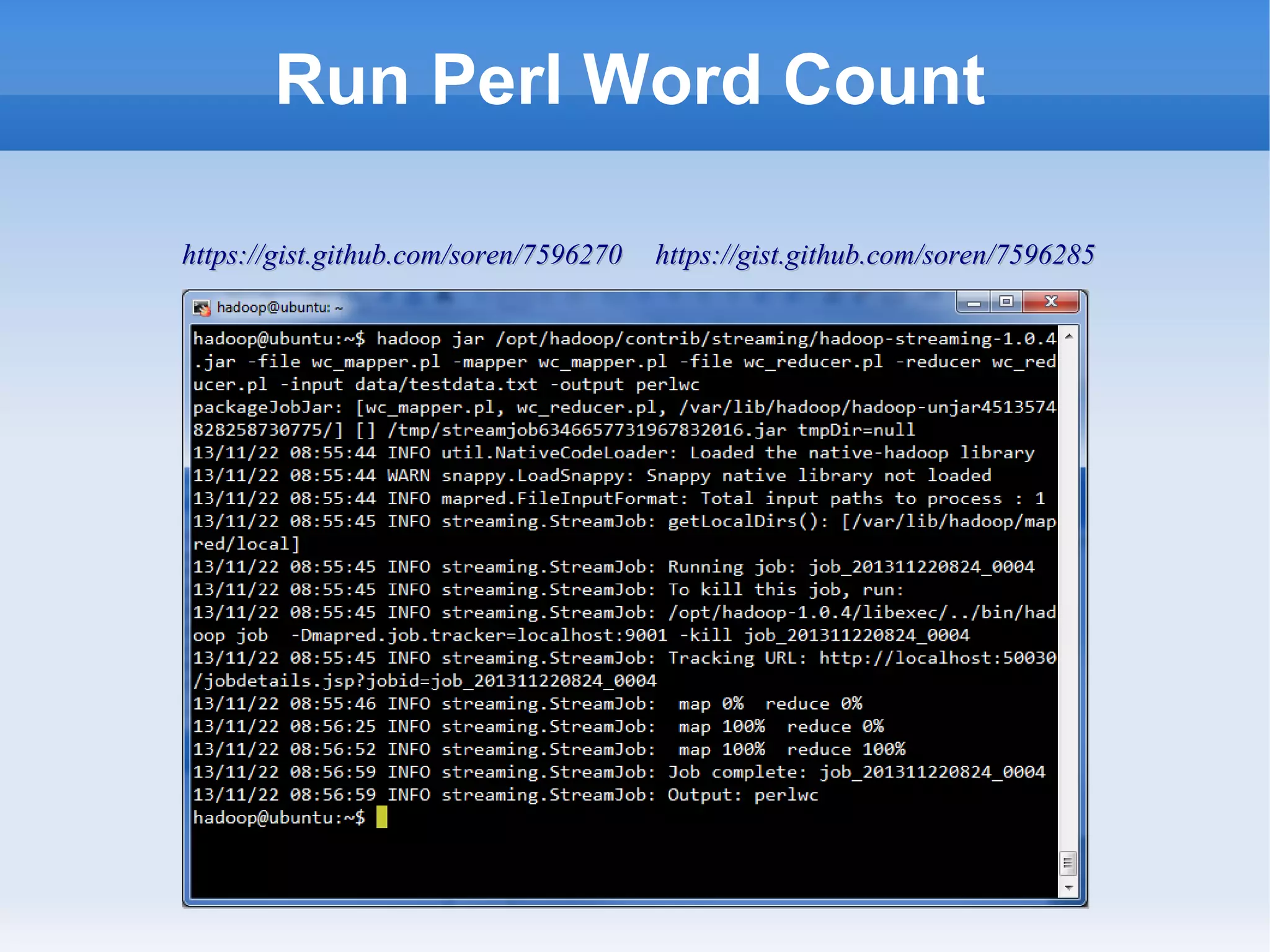

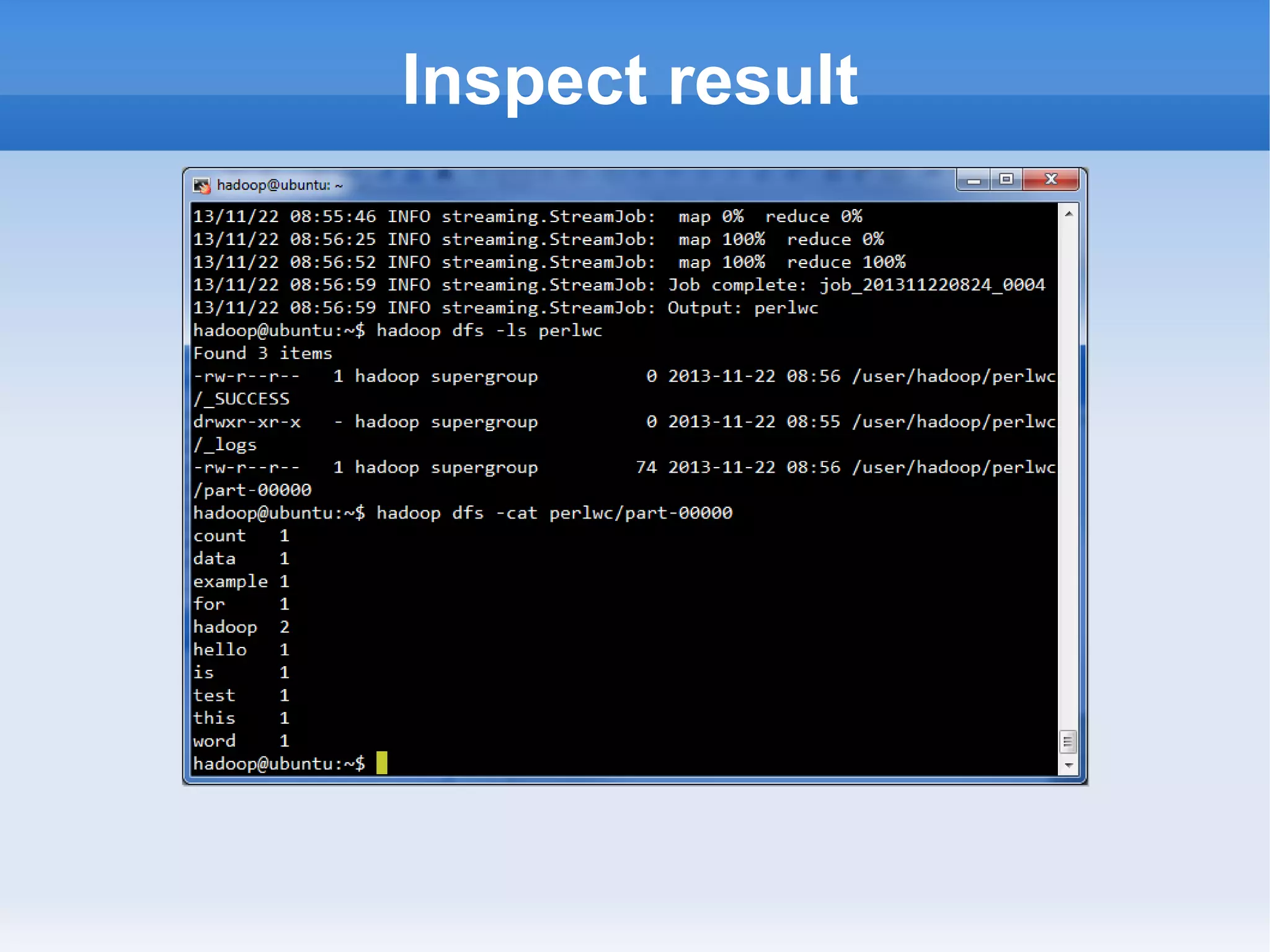

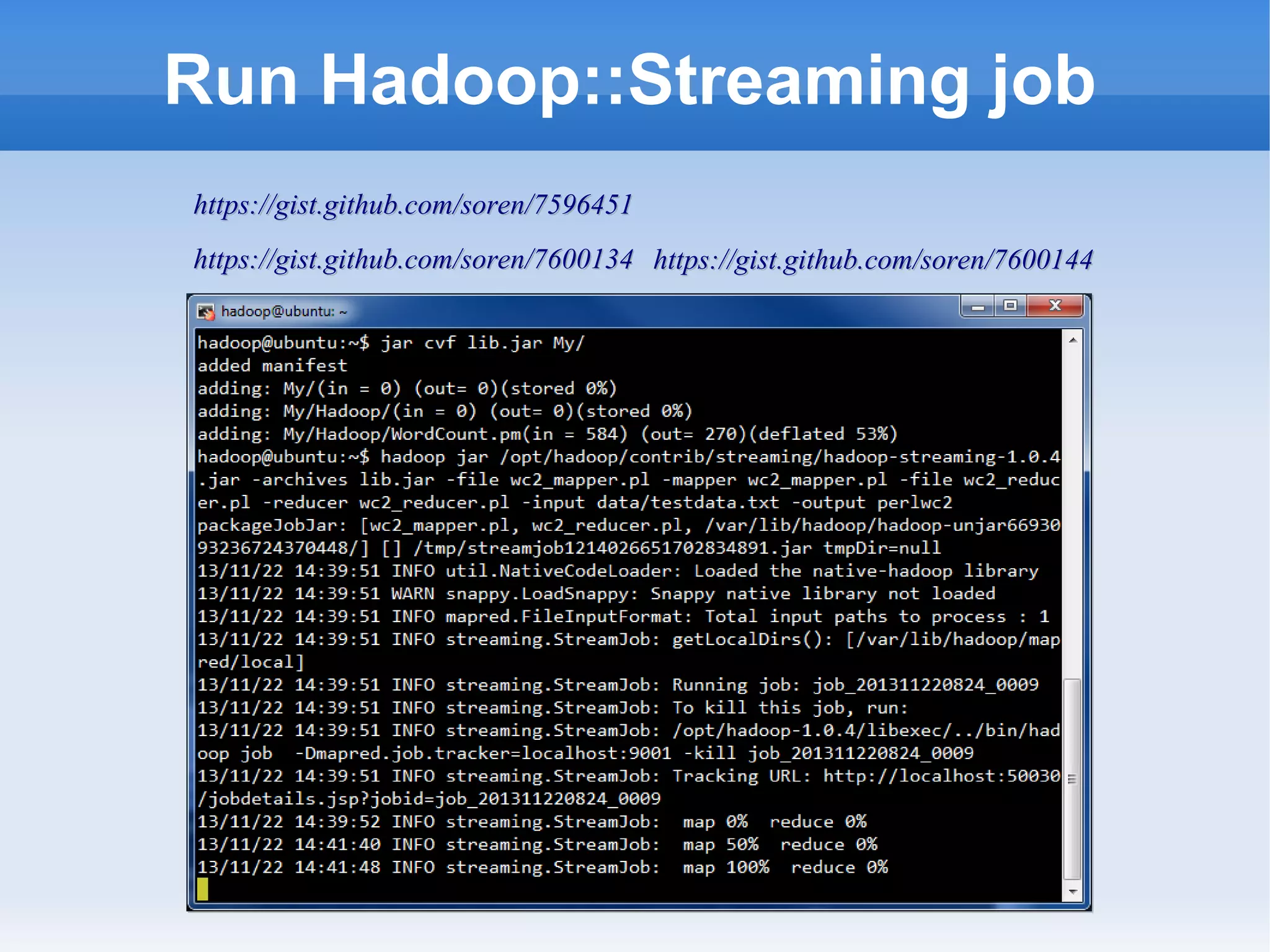

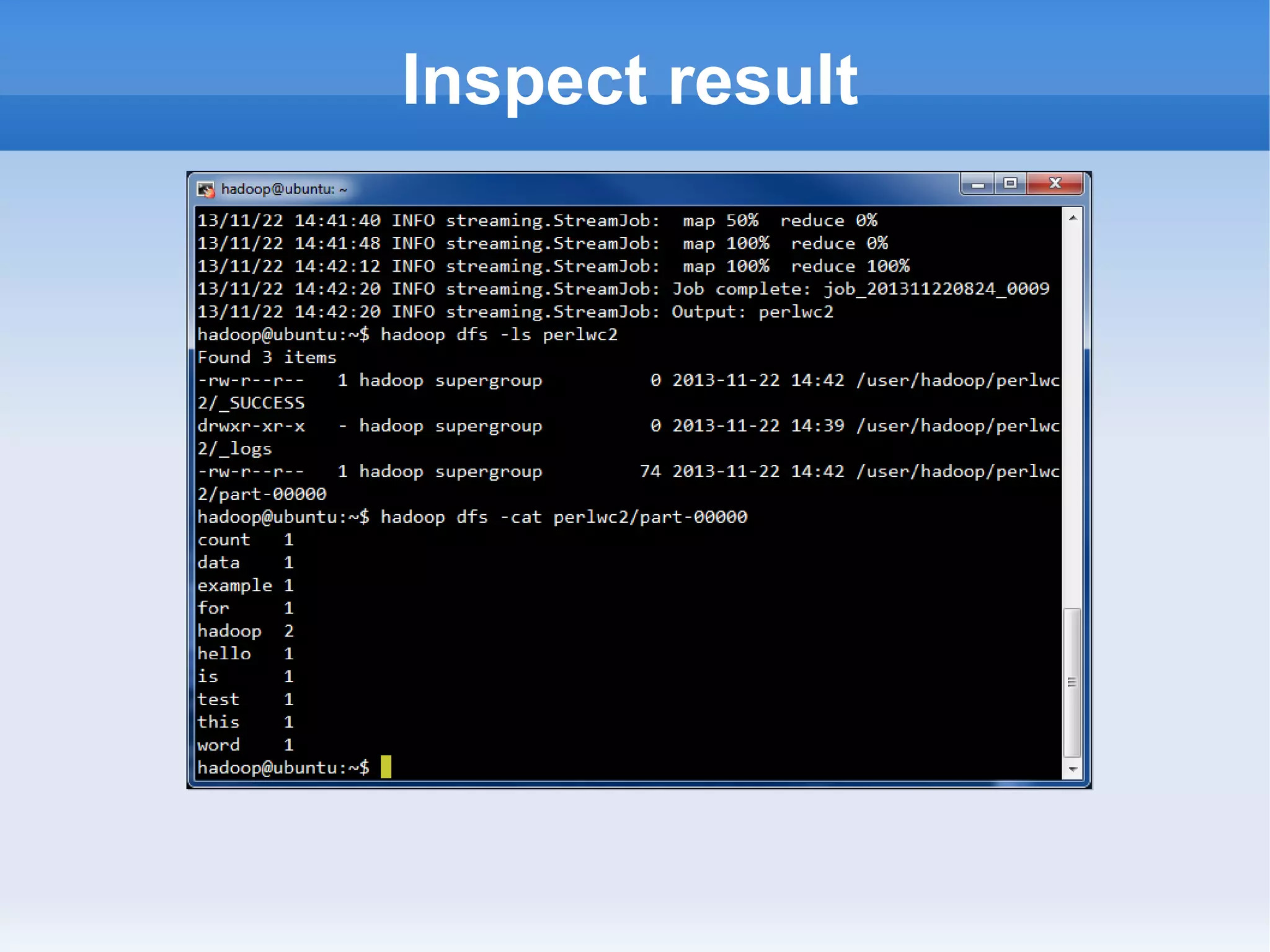

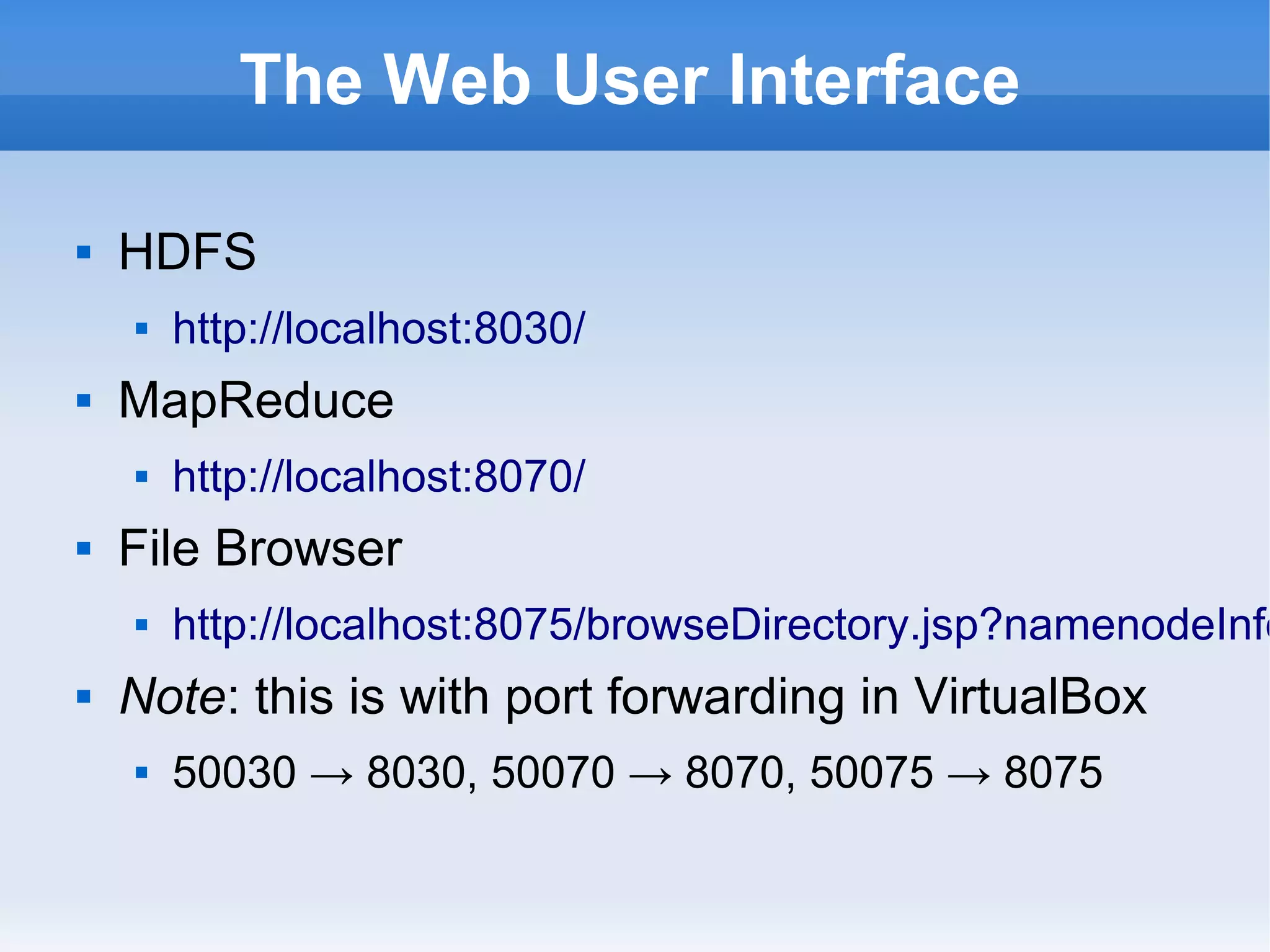

This document is a presentation on Hadoop given by Søren Lund. It opens with disclaimers, noting that the speaker has no production experience with Hadoop. It then gives an overview of Hadoop, explains how it addresses the problem of scaling to large amounts of data, and describes its core components. The presentation demonstrates how to install and run Hadoop on a single machine, walks through word count jobs run both locally and on Hadoop, and discusses the related tools Hive and Pig. It concludes with notes on the Hadoop user interface, joins, running Hadoop in the cloud, and other Hadoop distributions.
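The slides' own word count code is not reproduced in this summary, so the following is only a minimal sketch of what a local word count in the map/reduce style might look like. The function names `mapper`, `reducer`, and `word_count` are hypothetical, and the in-memory sort is an assumed stand-in for the shuffle step that Hadoop performs between the map and reduce phases.

```python
import re
from itertools import groupby


def mapper(lines):
    """Emit a (word, 1) pair for every word in the input lines."""
    for line in lines:
        for word in re.findall(r"[a-z0-9']+", line.lower()):
            yield word, 1


def reducer(pairs):
    """Sum the counts for each word.

    Sorting the pairs groups identical words together; this is an
    in-memory stand-in (assumption) for Hadoop's shuffle-and-sort phase.
    """
    for word, group in groupby(sorted(pairs), key=lambda kv: kv[0]):
        yield word, sum(count for _, count in group)


def word_count(lines):
    """Run the map and reduce steps locally and return a dict of counts."""
    return dict(reducer(mapper(lines)))


if __name__ == "__main__":
    sample = ["the quick brown fox", "the lazy dog", "The fox"]
    for word, count in sorted(word_count(sample).items()):
        print(f"{word}\t{count}")
```

Splitting the logic into a separate map step and reduce step mirrors the structure a Hadoop job would use, which is why the sketch avoids a simpler one-line counter.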