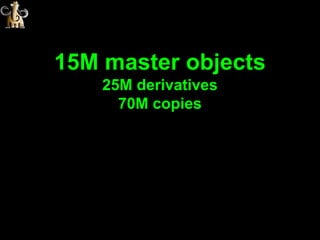

This document summarizes how a media company stores and processes more than 15 million assets, totaling 3 petabytes of data, using PostgreSQL instead of traditional file systems. The PostgreSQL database holds the metadata, file hashes, and derivatives that support ingestion, processing, distribution, and analysis, and it handles over 200 GB of core data plus 500 GB of XML processing. The company built a custom solution on PostgreSQL data types, hashing functions, and asynchronous actions to meet its large-scale storage and processing needs.
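
The summary does not show the schema or code, so the following is only a minimal sketch of the general idea: key each asset by a content hash, keep its metadata in a database row, and let a separate step pick up pending work. It uses Python's built-in `sqlite3` as a stand-in for PostgreSQL so that it runs without a server. The table name `asset`, its columns, and the functions `content_hash`, `open_catalog`, `ingest`, and `process_pending` are all hypothetical, as is the choice of SHA-256.

```python
import hashlib
import sqlite3


def content_hash(data: bytes) -> str:
    """Return the SHA-256 hex digest of the asset bytes (hash choice is an assumption)."""
    return hashlib.sha256(data).hexdigest()


def open_catalog() -> sqlite3.Connection:
    """Create an in-memory catalog; sqlite3 stands in for PostgreSQL here."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        """
        CREATE TABLE asset (
            sha256     TEXT PRIMARY KEY,   -- content hash identifies the asset
            filename   TEXT NOT NULL,
            size_bytes INTEGER NOT NULL,
            status     TEXT NOT NULL DEFAULT 'pending'
        )
        """
    )
    return conn


def ingest(conn: sqlite3.Connection, filename: str, data: bytes) -> bool:
    """Record an asset's metadata; return False if the same content was already ingested."""
    cur = conn.execute(
        "INSERT OR IGNORE INTO asset (sha256, filename, size_bytes) VALUES (?, ?, ?)",
        (content_hash(data), filename, len(data)),
    )
    conn.commit()
    return cur.rowcount == 1


def process_pending(conn: sqlite3.Connection) -> int:
    """Mark every pending asset as processed; a placeholder for a deferred worker step."""
    cur = conn.execute(
        "UPDATE asset SET status = 'processed' WHERE status = 'pending'"
    )
    conn.commit()
    return cur.rowcount
```

Because the hash is the primary key, ingesting the same bytes under a second filename is a no-op, which is one way a hash column can deduplicate assets. In this sketch `process_pending` runs synchronously; in a real PostgreSQL deployment the deferred step would more likely be a separate worker process, and how the presentation's "asynchronous actions" are actually implemented is not stated in the summary.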