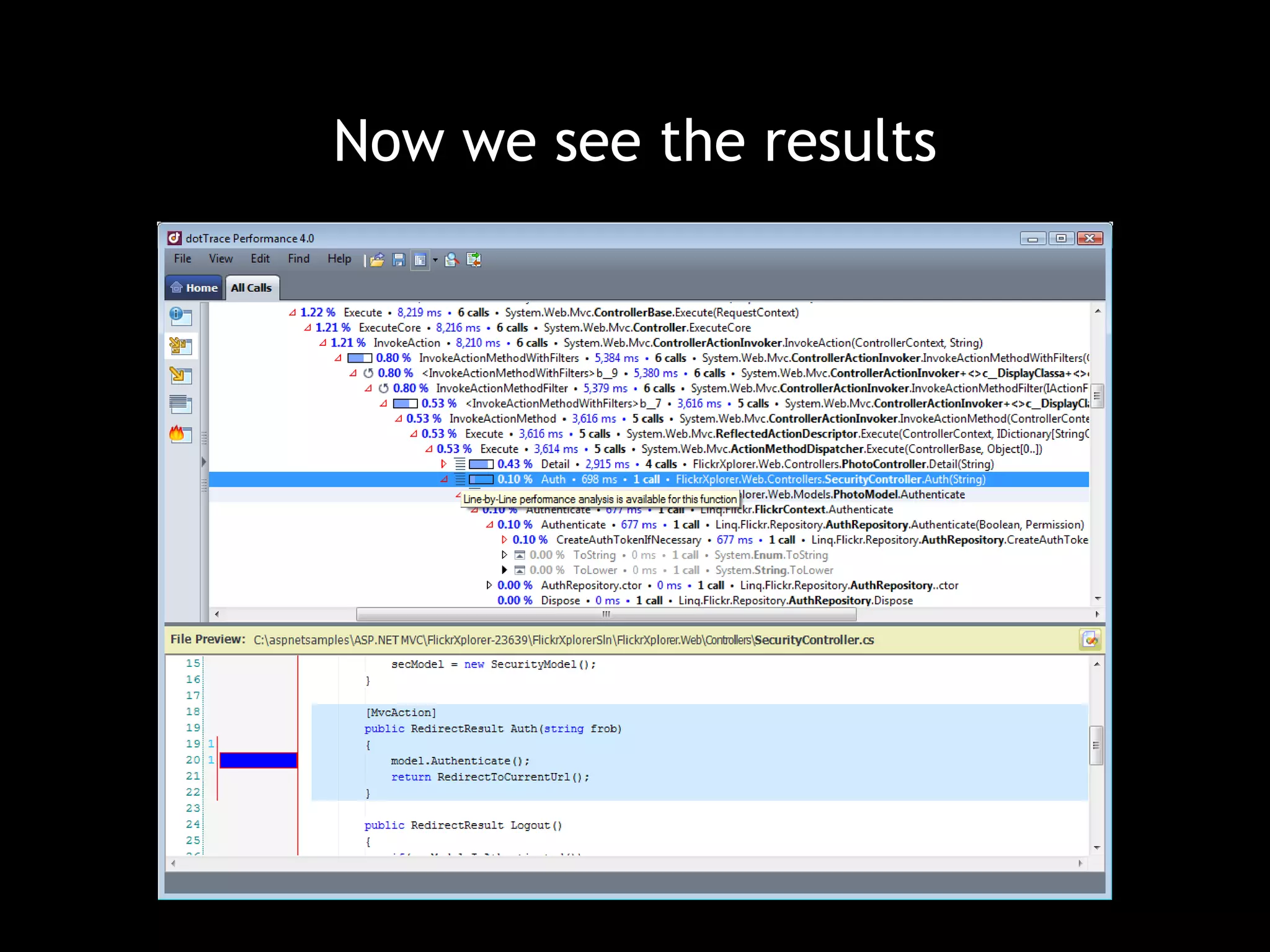

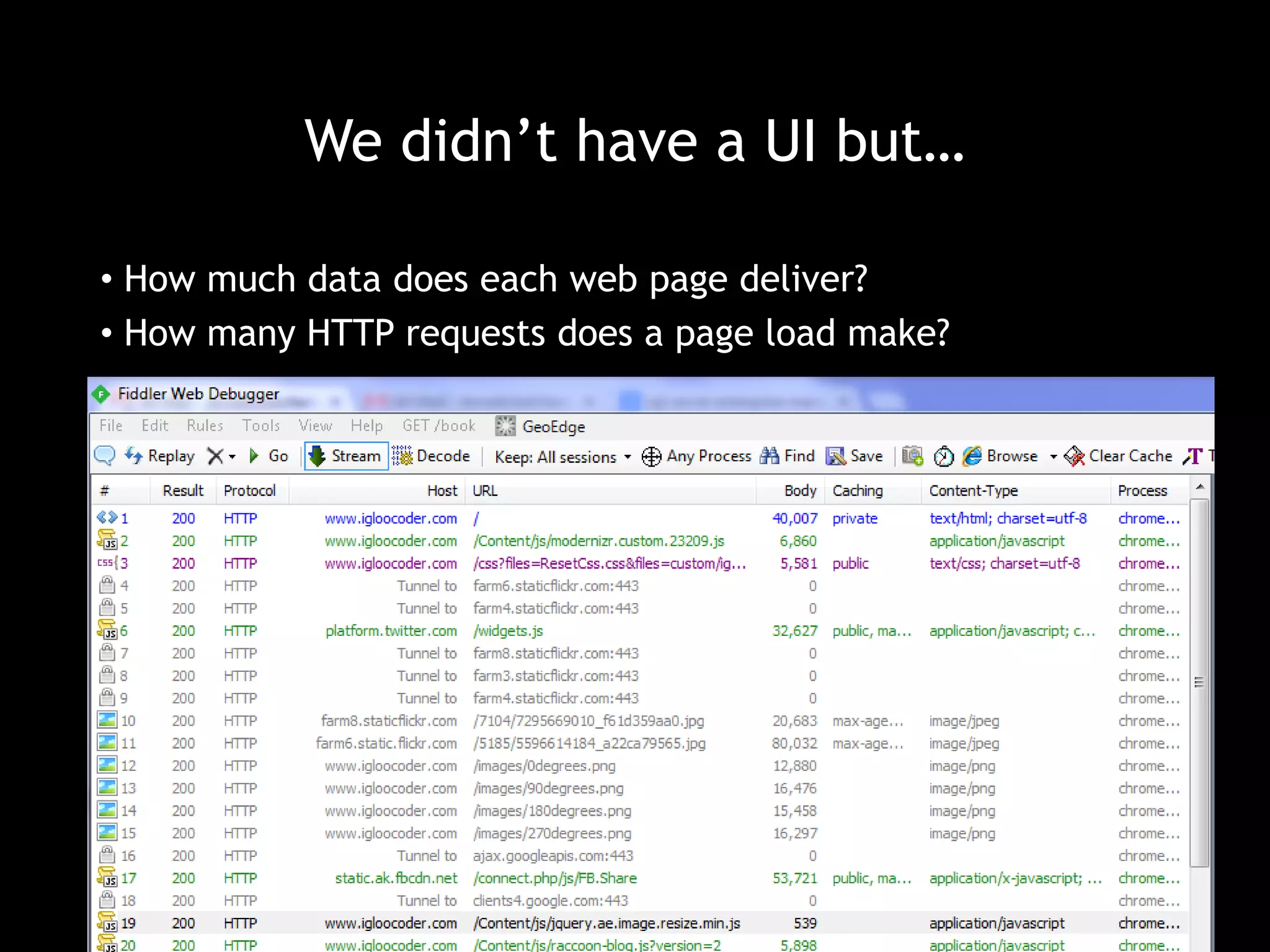

The document discusses the importance of performance testing for software systems. It notes that performance testing is a time-consuming process that requires planning the infrastructure, building test data scenarios, running the tests, analyzing the results, and evaluating the code and architecture. Specific, quantifiable performance metrics are needed to decide whether a system passes or fails. The document recommends starting performance testing early in the development process and dedicating adequate time and isolated test environments to it.
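To illustrate what "specific and quantifiable" can mean in practice, here is a minimal Python sketch of an automated pass/fail check. It assumes the criteria are a 95th-percentile response time and a maximum error rate. The `Criteria` class, the `percentile` and `evaluate` functions, and the threshold values are hypothetical illustrations, not taken from the document. Real criteria would come from your own requirements.

```python
import math
from dataclasses import dataclass


@dataclass
class Criteria:
    """Hypothetical pass/fail thresholds; real values come from your requirements."""
    max_p95_ms: float      # 95th-percentile response-time limit, in milliseconds
    max_error_rate: float  # allowed fraction of failed requests (0.0 to 1.0)


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list of samples."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    # Nearest-rank method: the smallest value with at least pct% of samples at or below it.
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def evaluate(latencies_ms: list[float], errors: int, criteria: Criteria) -> tuple[bool, dict]:
    """Return (passed, report) for one test run.

    Assumes one latency sample was recorded per request, including failed ones,
    so len(latencies_ms) is the total request count.
    """
    total = len(latencies_ms)
    if not 0 <= errors <= total:
        raise ValueError("errors must be between 0 and the number of requests")
    p95 = percentile(latencies_ms, 95)
    error_rate = errors / total
    passed = p95 <= criteria.max_p95_ms and error_rate <= criteria.max_error_rate
    return passed, {"p95_ms": p95, "error_rate": error_rate}


if __name__ == "__main__":
    # Hypothetical run: 19 fast requests and one slow one, no failures.
    limits = Criteria(max_p95_ms=250, max_error_rate=0.01)
    print(evaluate([100] * 19 + [400], errors=0, criteria=limits))
```

Under these assumptions, a run in which one request out of twenty is slow still passes, because that request falls inside the 5% tail that a p95 criterion ignores. This is why the percentile, the threshold, and the unit all need to be stated explicitly before testing starts.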