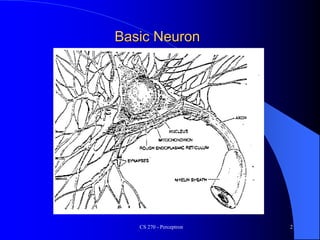

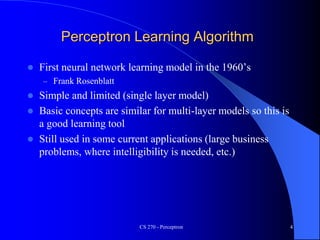

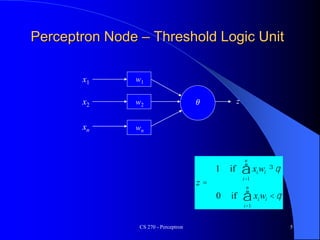

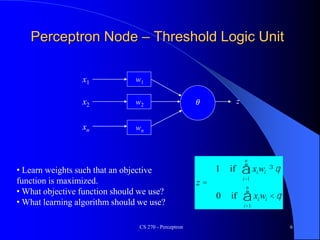

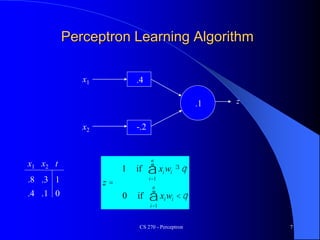

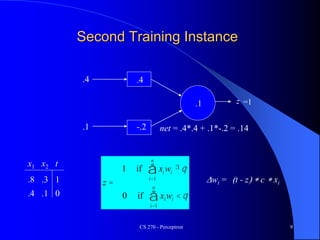

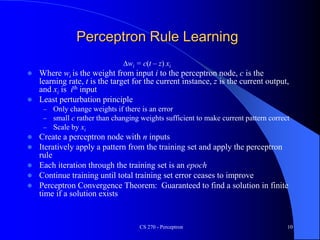

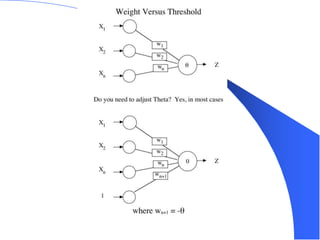

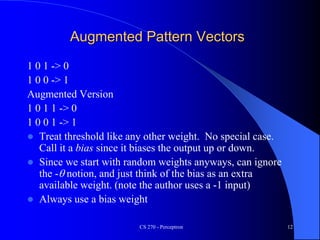

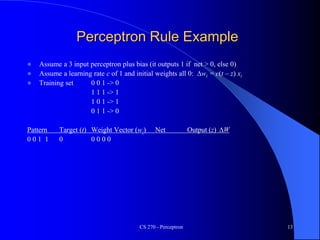

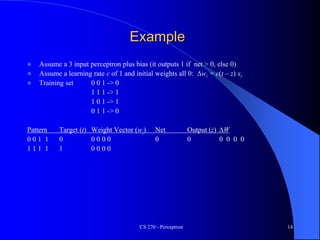

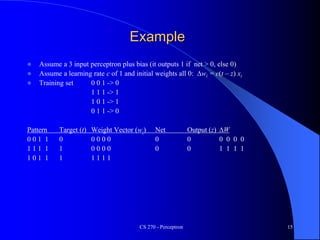

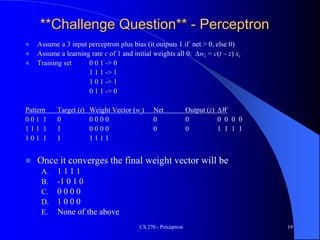

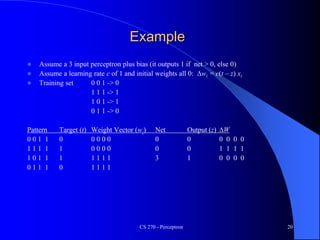

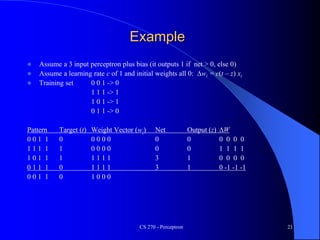

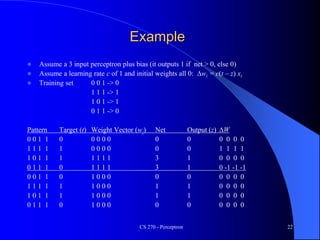

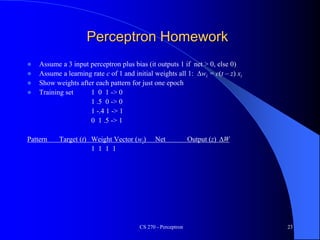

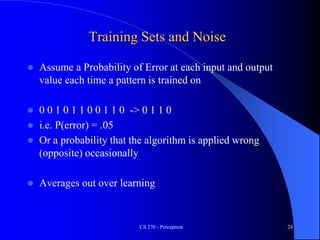

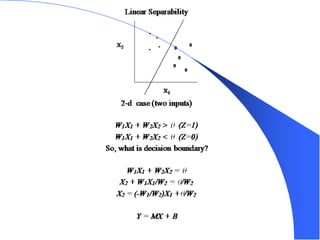

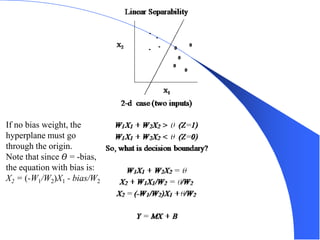

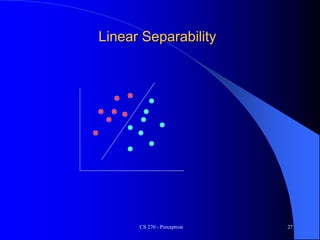

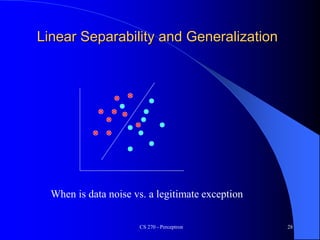

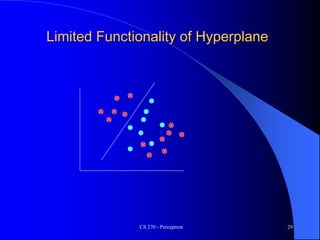

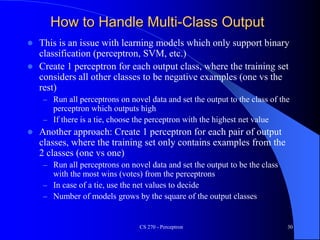

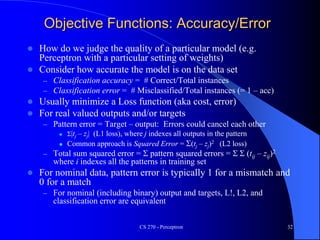

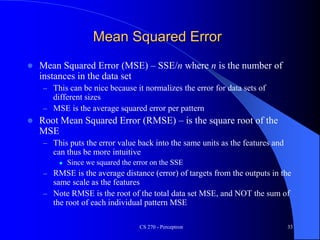

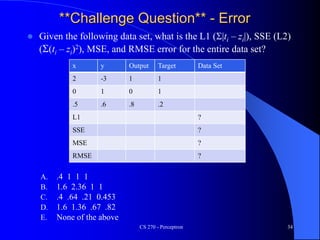

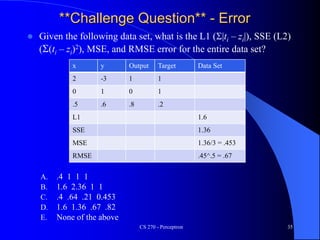

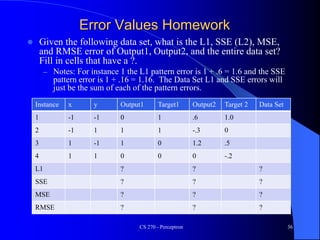

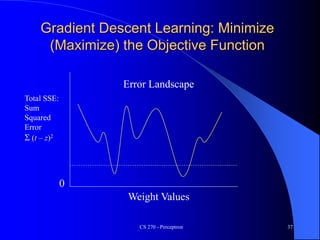

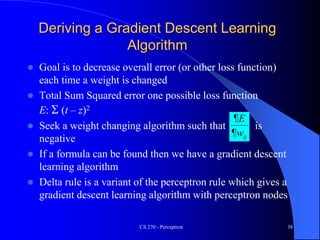

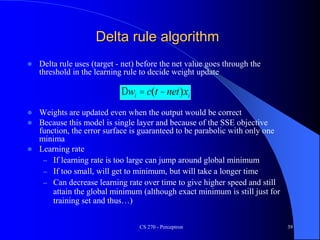

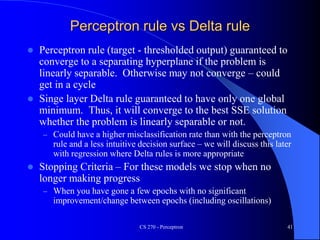

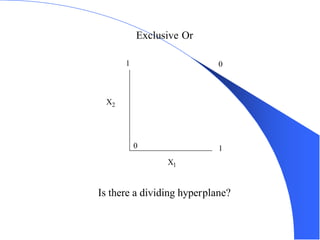

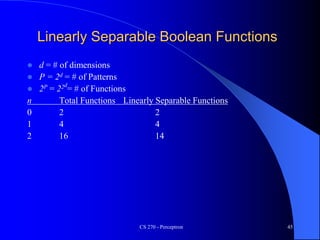

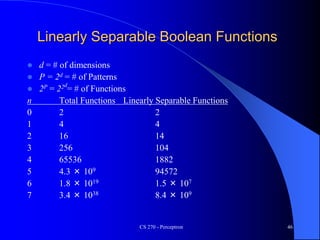

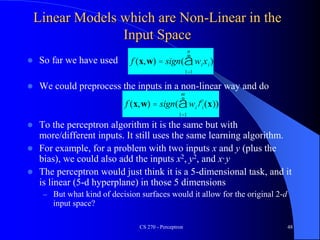

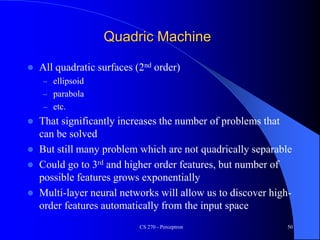

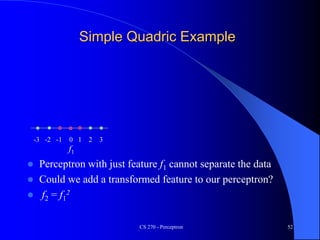

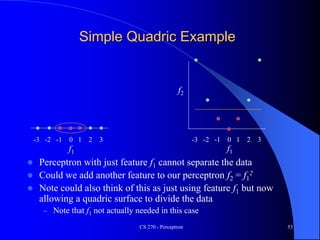

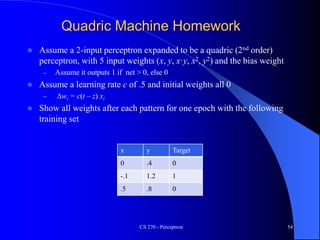

The document provides an overview of the perceptron, one of the earliest neural network learning models, introduced by Frank Rosenblatt in the late 1950s and developed further in the 1960s. It covers the perceptron learning algorithm: how the weights are learned, how the threshold logic unit turns a weighted sum of inputs into an output, and how the bias can be treated as an additional input. It also discusses how to handle multi-class outputs. Finally, it emphasizes active learning through peer instruction to improve understanding and retention of the material.
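
The learning algorithm summarized above can be illustrated with a minimal sketch. It assumes the common textbook form of the perceptron rule, in which each weight changes by `lr * (target - output) * input` after a misclassified example, and it assumes the bias is handled by appending a constant input of 1 to every example. The function names (`predict`, `train_perceptron`), the learning rate, the zero initial weights, and the AND dataset are illustrative choices, not taken from the slides.

```python
def predict(weights, x, threshold=0.0):
    """Threshold logic unit: output 1 if the weighted sum exceeds the threshold."""
    net = sum(w * xi for w, xi in zip(weights, x))
    return 1 if net > threshold else 0


def train_perceptron(samples, targets, lr=1.0, max_epochs=100):
    """Learn weights with the perceptron rule (assumed form, see lead-in)."""
    # Append a constant 1.0 to each input so the last weight acts as the bias.
    augmented = [list(x) + [1.0] for x in samples]
    weights = [0.0] * len(augmented[0])

    for _ in range(max_epochs):
        errors = 0
        for x, t in zip(augmented, targets):
            z = predict(weights, x)
            if z != t:
                errors += 1
                # Update only on mistakes: w_i <- w_i + lr * (t - z) * x_i
                weights = [w + lr * (t - z) * xi for w, xi in zip(weights, x)]
        if errors == 0:
            # A full pass with no mistakes: the training set is separated.
            break
    return weights


# Illustrative, linearly separable task: logical AND.
and_inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
and_targets = [0, 0, 0, 1]

w = train_perceptron(and_inputs, and_targets)
predictions = [predict(w, list(x) + [1.0]) for x in and_inputs]
print("weights (last entry is the bias):", w)
print("predictions:", predictions)
```

Because AND is linearly separable, the loop stops once an epoch finishes with no misclassifications. On data that is not linearly separable (XOR, for example), this sketch would simply run until `max_epochs` without reaching zero errors.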