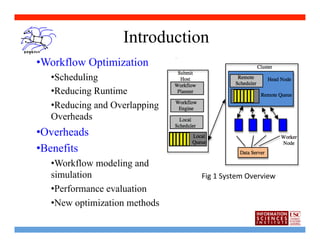

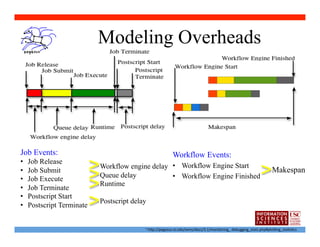

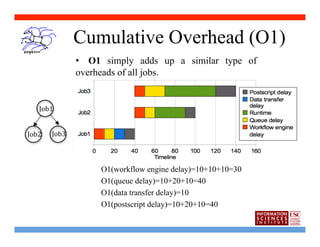

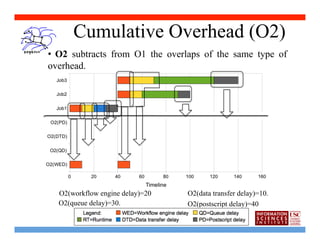

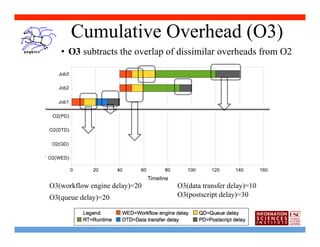

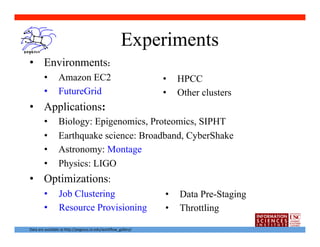

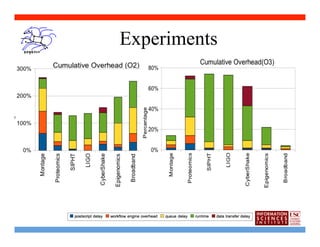

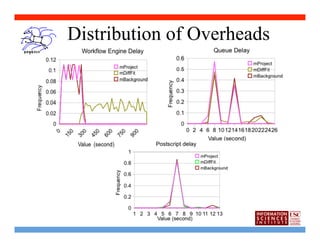

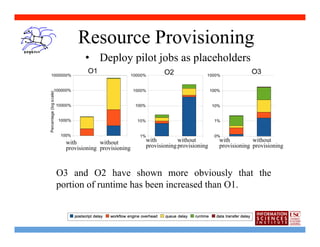

The document presents methods for modeling and analyzing workflow overhead in distributed systems and introduces approaches for calculating cumulative overhead. It also reports on experiments that apply optimization techniques such as job clustering and resource provisioning, evaluating how well they reduce overhead and improve workflow performance across different computational environments and applications.