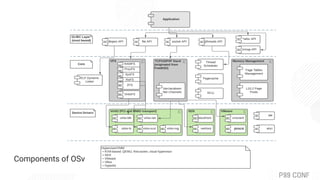

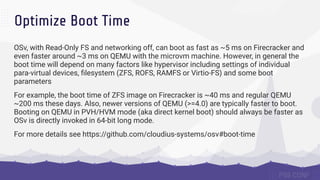

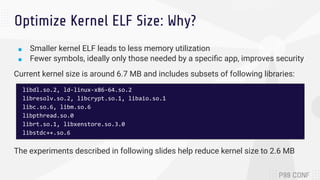

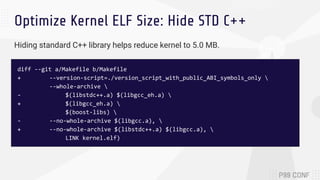

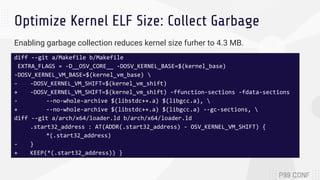

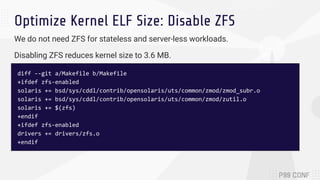

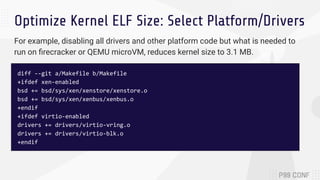

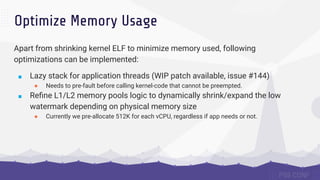

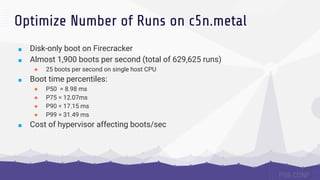

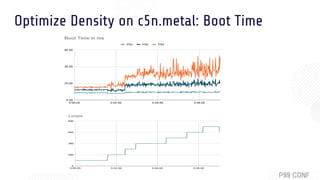

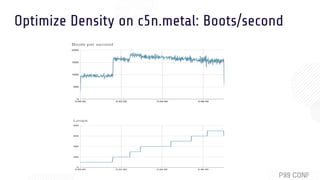

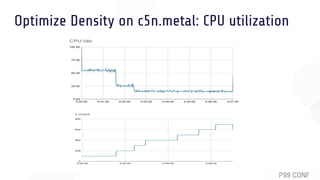

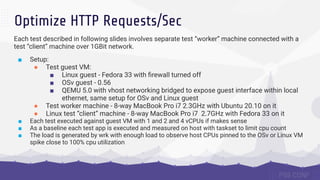

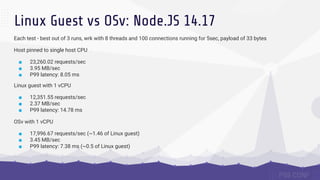

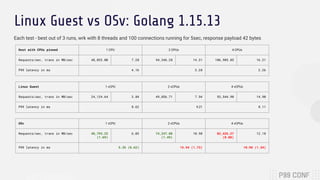

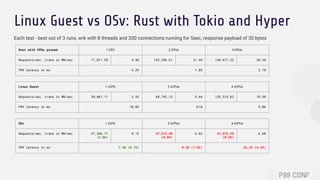

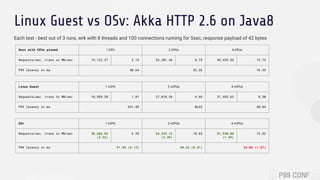

The document discusses OSv, an open-source unikernel designed to run a single unmodified Linux application securely as a microVM. It highlights OSv's strengths for stateless and serverless workloads, including fast boot times, low memory usage, and optimized networking, and describes techniques for minimizing kernel size to improve performance. It also presents experimental results comparing OSv with traditional Linux VMs across various applications and workloads, showing superior request handling and lower latencies for OSv.