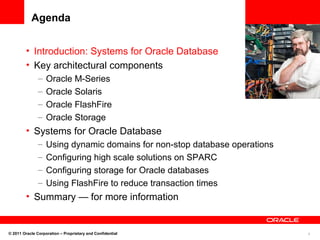

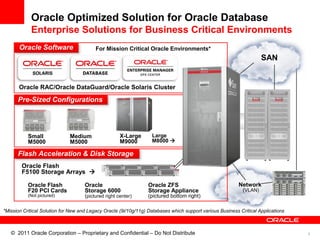

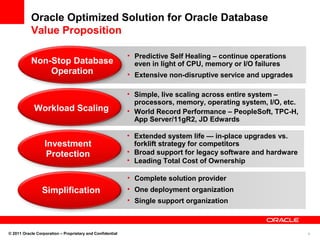

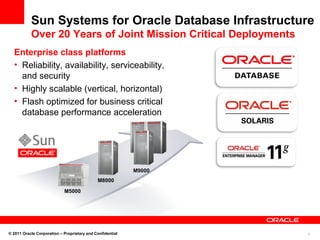

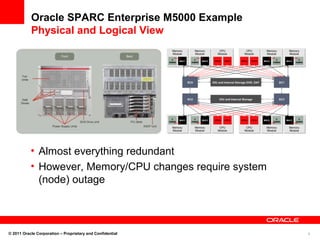

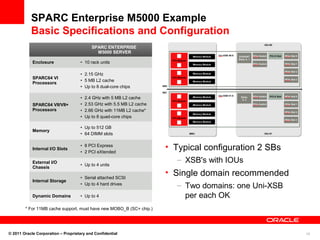

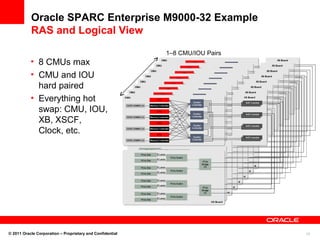

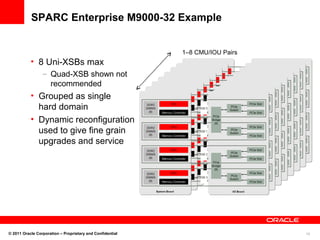

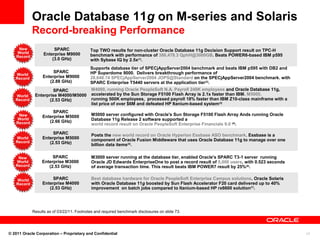

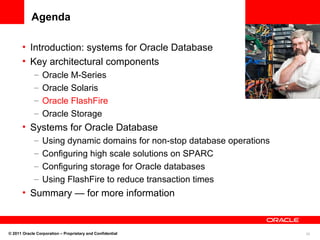

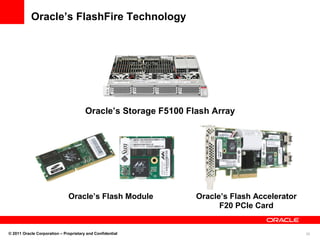

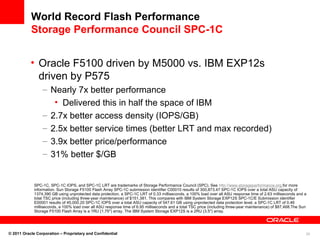

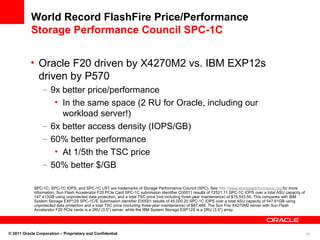

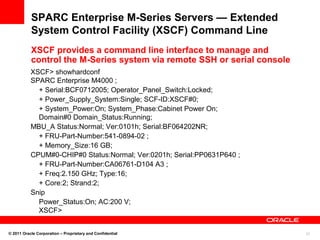

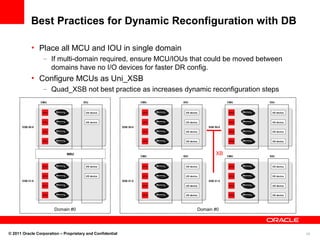

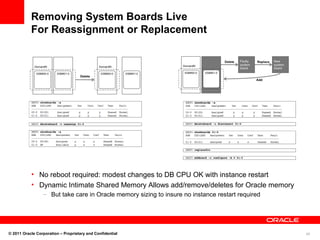

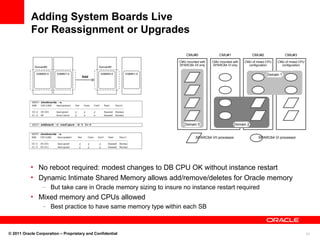

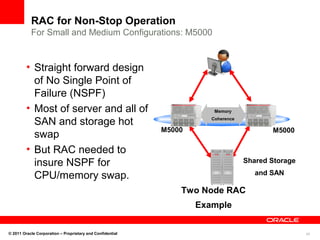

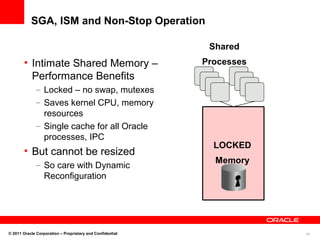

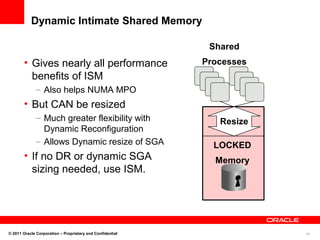

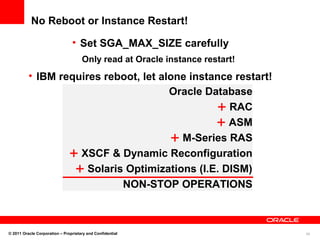

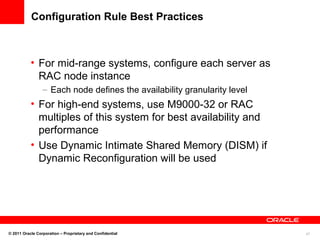

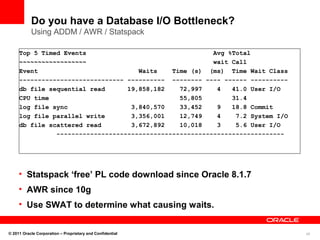

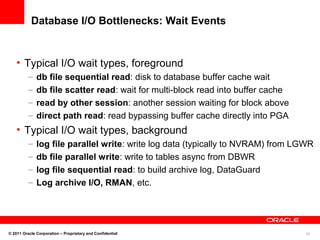

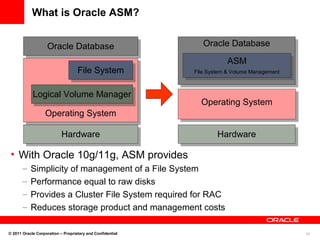

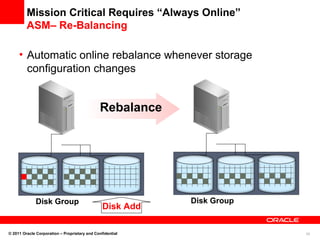

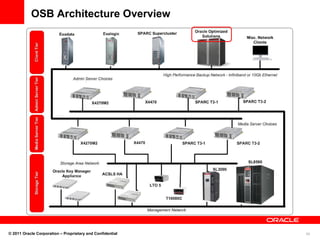

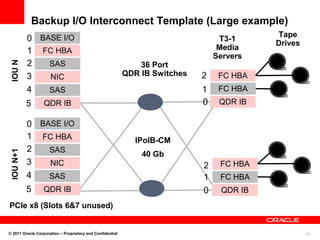

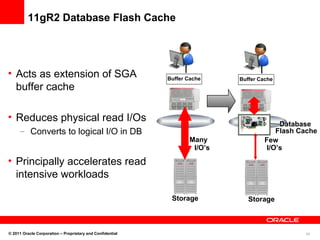

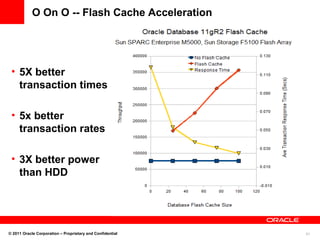

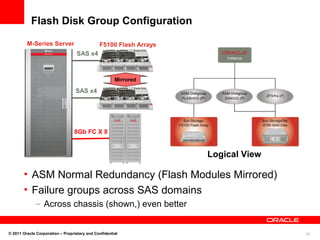

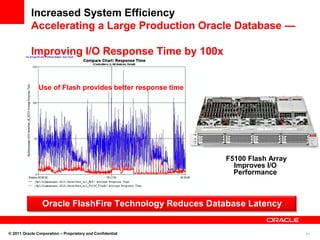

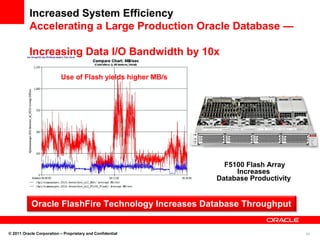

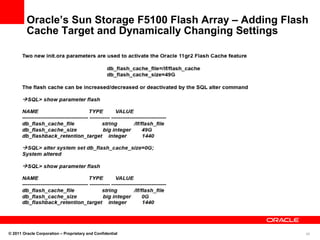

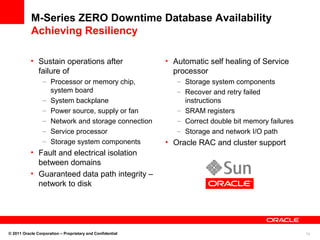

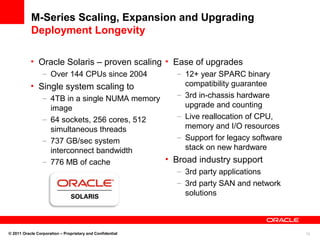

The document outlines Oracle's optimized solution for mission-critical database environments, detailing its technical architecture and key components, including the Oracle M-series servers and flash storage technologies. It highlights capabilities such as live scaling, predictive self-healing, and non-disruptive upgrades, and argues for Oracle's leadership in UNIX system scaling and database performance. The presentation is aimed at decision-makers and covers Oracle's product direction and competitive advantages in database solutions.