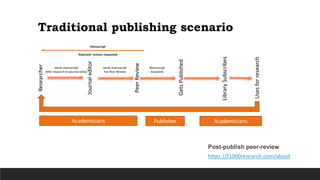

This document discusses open access and institutional repositories. It begins by outlining the traditional scholarly publishing process and some of the motivations scholars have for publishing their work. It then defines open access as free online access to scholarly works, together with the legal right to share and reuse them. It describes the two main routes to open access: green open access, through institutional repositories, and gold open access, through fully open access journals. It also discusses publication metrics used to assess the quality of journals, individual articles, and researchers, such as the journal impact factor, h-index, and g-index. Overall, the document provides an overview of open access models and of the debates around common bibliometric indicators.
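To make two of the researcher-level metrics named above concrete, here is a minimal sketch of how the h-index and g-index could be computed from a list of per-paper citation counts. It uses the commonly cited definitions (h is the largest number such that h papers each have at least h citations; g is the largest number such that the top g papers together have at least g² citations, capped here at the number of papers). The function names and the sample citation counts are illustrative assumptions, not taken from the document.

```python
def h_index(citations):
    """Largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank
        else:
            break  # counts only decrease from here, so no larger h is possible
    return h


def g_index(citations):
    """Largest g such that the top g papers total at least g**2 citations.

    This variant caps g at the number of papers in the list.
    """
    ranked = sorted(citations, reverse=True)
    total = 0
    g = 0
    for rank, count in enumerate(ranked, start=1):
        total += count  # cumulative citations of the top `rank` papers
        if total >= rank * rank:
            g = rank
    return g


# Hypothetical citation counts for one researcher's five papers.
sample = [10, 8, 5, 4, 3]
print("h-index:", h_index(sample))  # 4
print("g-index:", g_index(sample))  # 5
```

In this sample the g-index is higher than the h-index because the g-index gives credit for the extra citations of the most-cited papers, which the h-index ignores once a paper passes the threshold.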