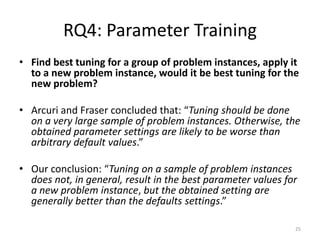

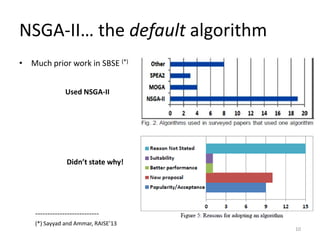

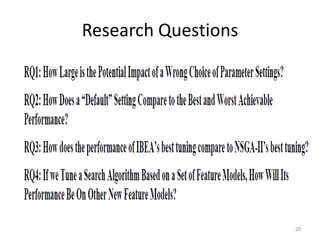

The document summarizes a study that replicated an earlier empirical study on parameter tuning in search-based software engineering. The original study found that different parameter settings can significantly impact performance and that default parameter settings do not always perform optimally. The replication confirmed these findings, and also found that default settings generally performed poorly compared to best tuned settings. The replication also found that IBEA's best tuned performance was generally better than NSGA-II's best tuned performance. Additionally, parameter tuning on a sample of problems did not necessarily lead to the best settings for a new problem, but was generally better than default settings.

![Survival of the fittest

(according to NSGA-II [Deb et al. 2002])

Boolean dominance (x Dominates y, or does not):

- In no objective is x worse than y

- In at least one objective, x is better than y

Crowd

pruning

8](https://image.slidesharecdn.com/sayyadslidesreser13-131014171904-phpapp02/85/On-Parameter-Tuning-in-Search-Based-Software-Engineering-A-Replicated-Empirical-Study-8-320.jpg)

![Feature–oriented domain analysis [Kang 1990]

• Feature models = a

lightweight method for

defining a space of options

• De facto standard for

modeling variability, e.g.

Software Product Lines

Cross-Tree Constraints

Cross-Tree Constraints

15](https://image.slidesharecdn.com/sayyadslidesreser13-131014171904-phpapp02/85/On-Parameter-Tuning-in-Search-Based-Software-Engineering-A-Replicated-Empirical-Study-15-320.jpg)

![What are “default settings”?

• Population size = 100

• Crossover rate = 80%

– 60% < Crossover rate < 90%

• [A. E. Eiben and J. E. Smith, Introduction to Evolutionary

Computing.: Springer, 2003.]

• Mutation rate = 1/Features

• [one bit out of the whole string]

19](https://image.slidesharecdn.com/sayyadslidesreser13-131014171904-phpapp02/85/On-Parameter-Tuning-in-Search-Based-Software-Engineering-A-Replicated-Empirical-Study-19-320.jpg)

![Results [10 sec / algorithm / FM]

21](https://image.slidesharecdn.com/sayyadslidesreser13-131014171904-phpapp02/85/On-Parameter-Tuning-in-Search-Based-Software-Engineering-A-Replicated-Empirical-Study-21-320.jpg)