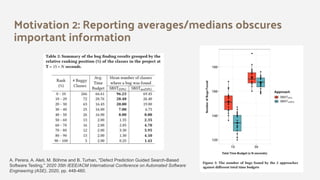

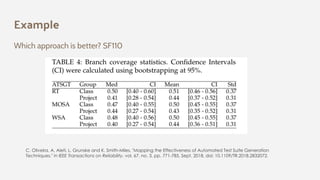

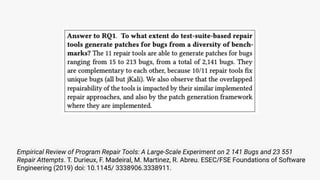

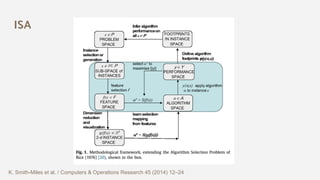

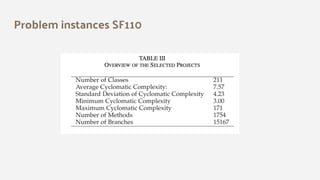

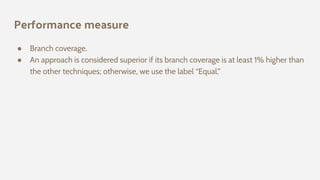

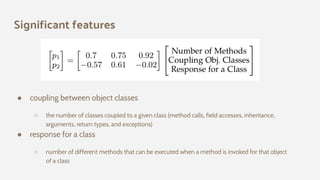

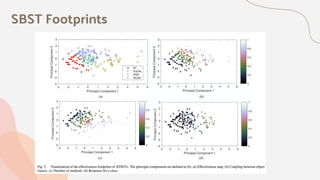

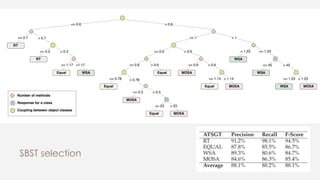

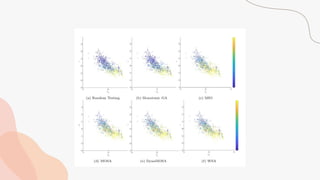

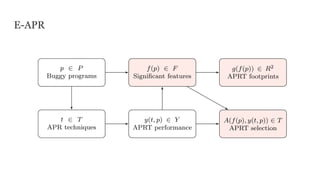

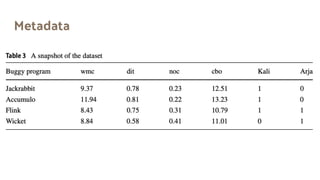

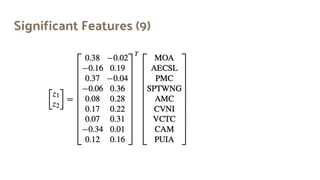

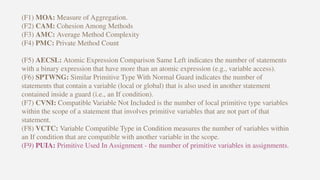

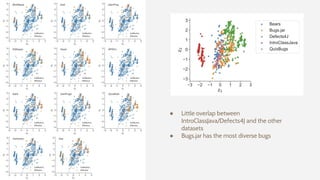

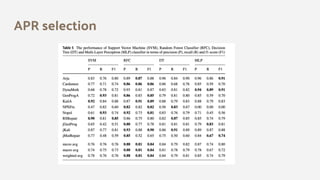

The document examines the effectiveness of search-based software engineering (SBSE) techniques through instance space analysis, emphasizing that fair comparisons depend on well-chosen benchmark problems. It highlights the need to understand the strengths and weaknesses of different SBSE approaches across diverse problem instances and their features. It raises open questions about how SBSE techniques should be assessed, what their performance depends on, and which techniques suit which problem characteristics.