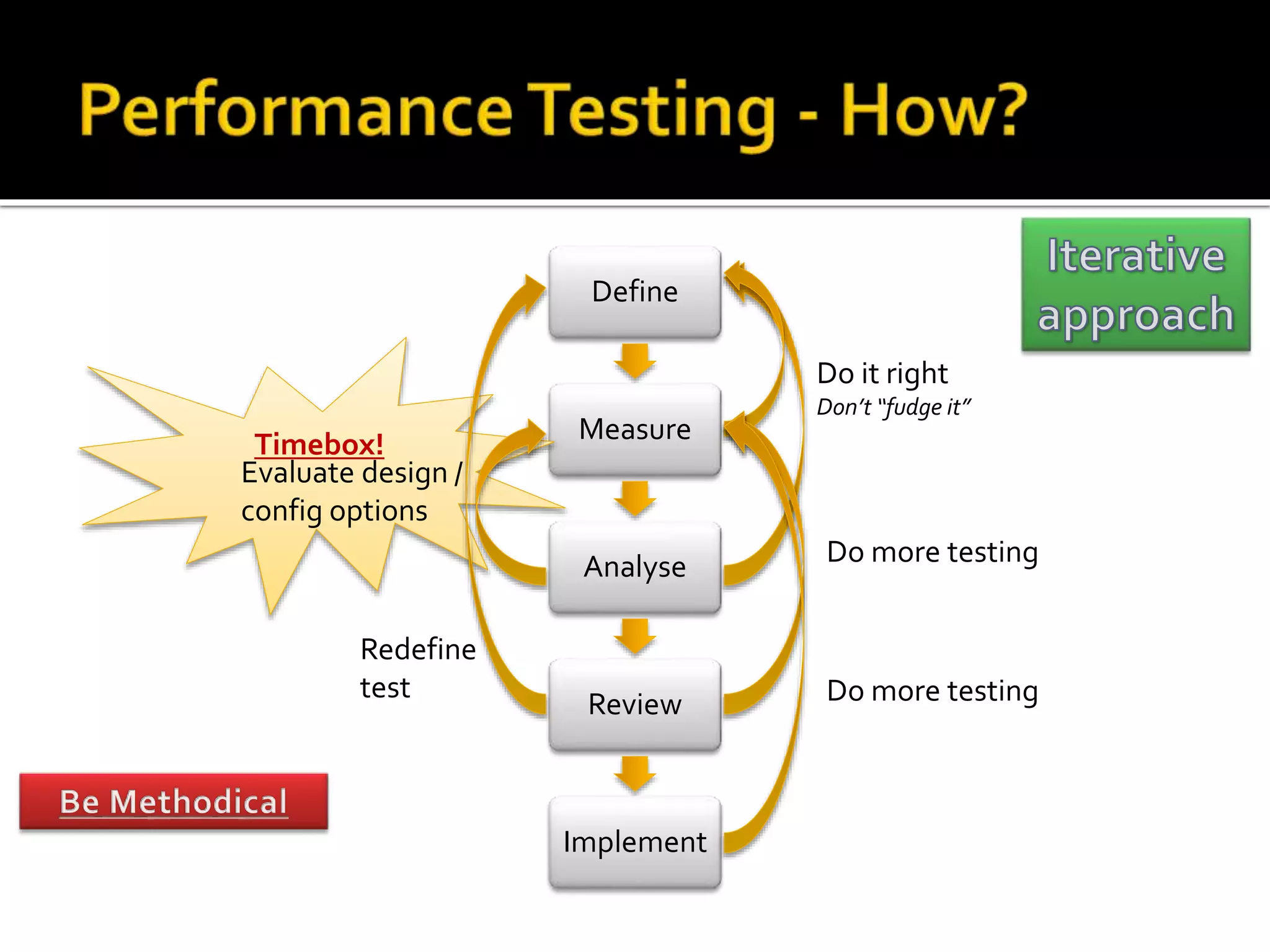

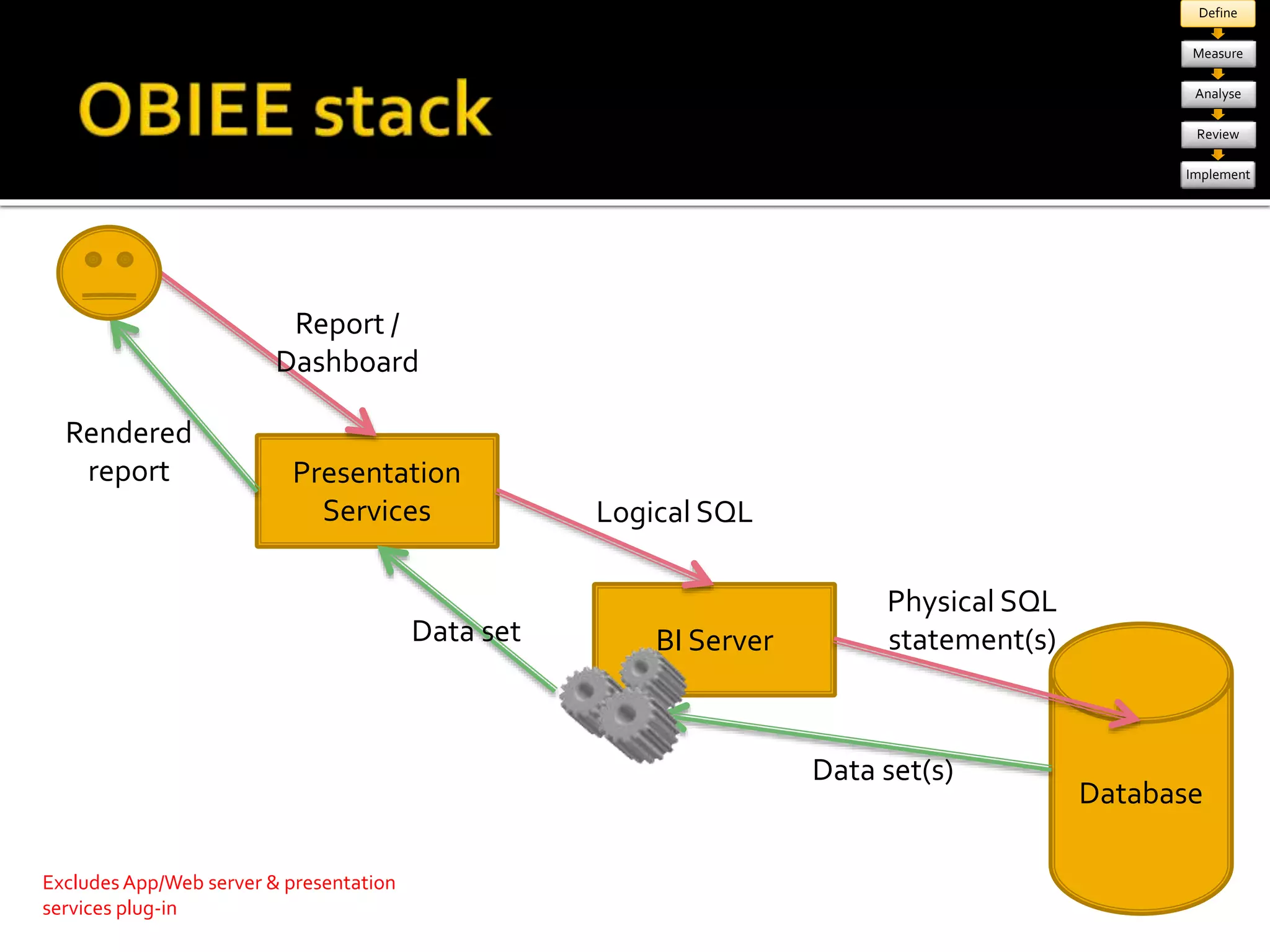

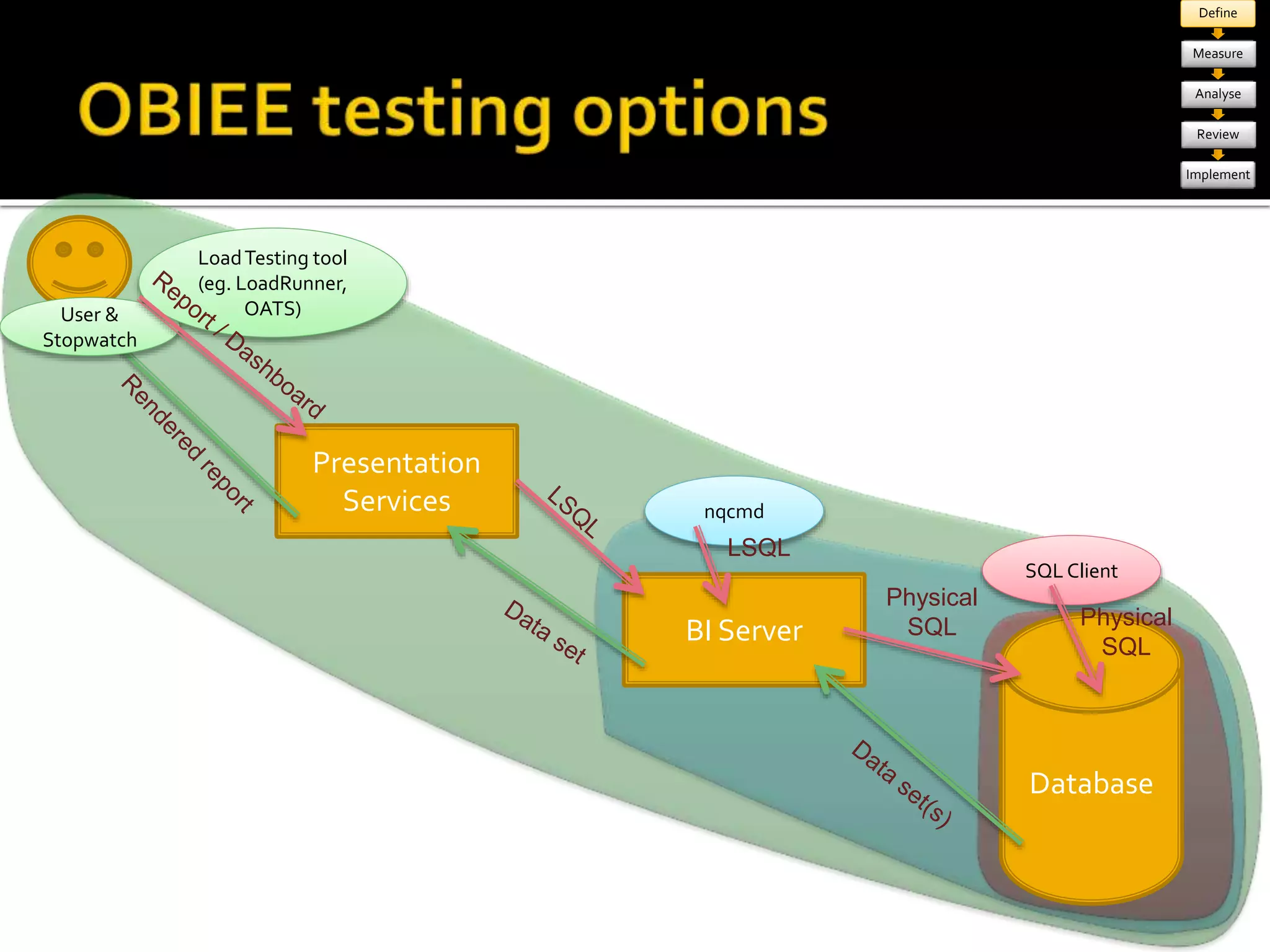

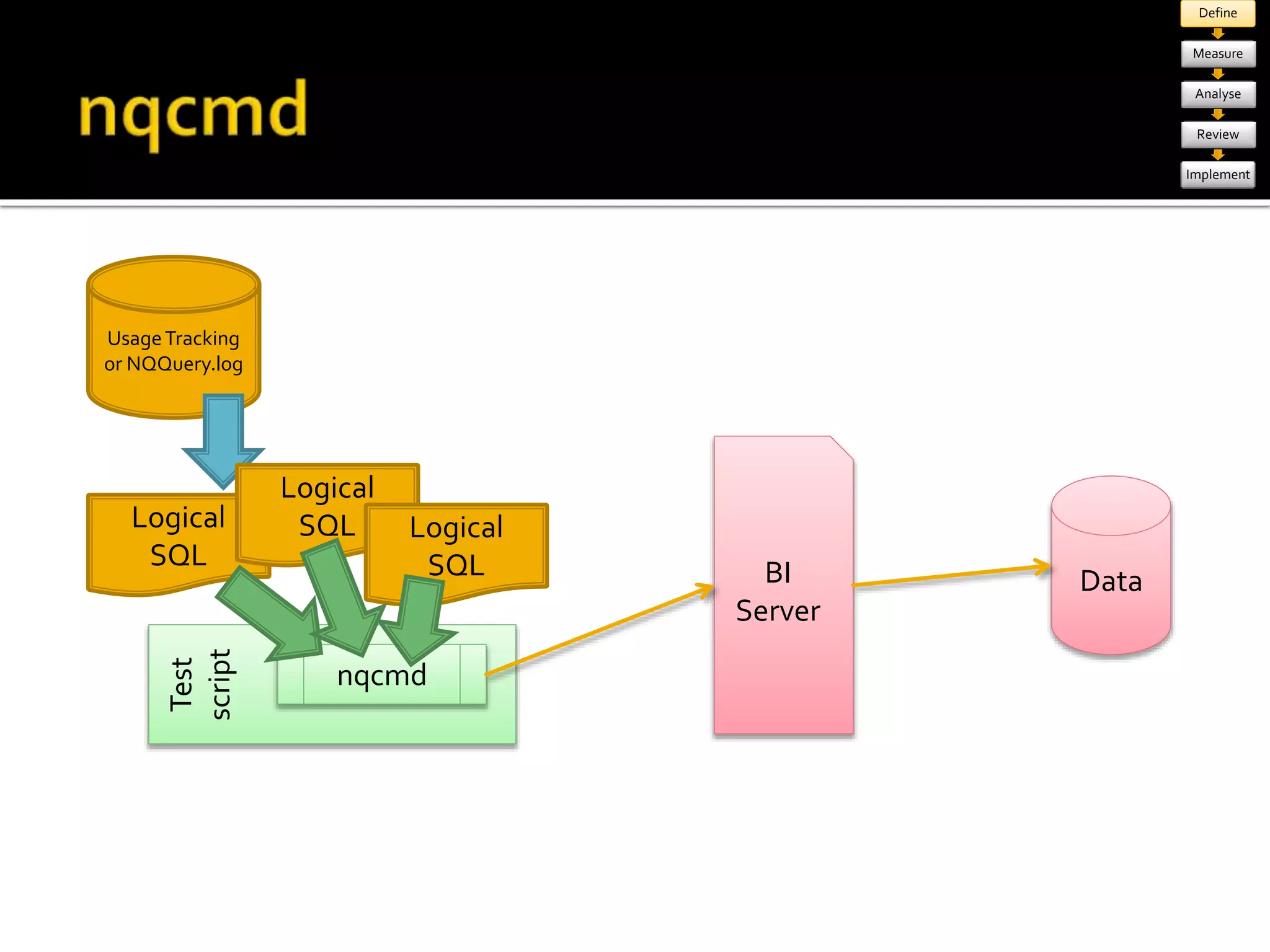

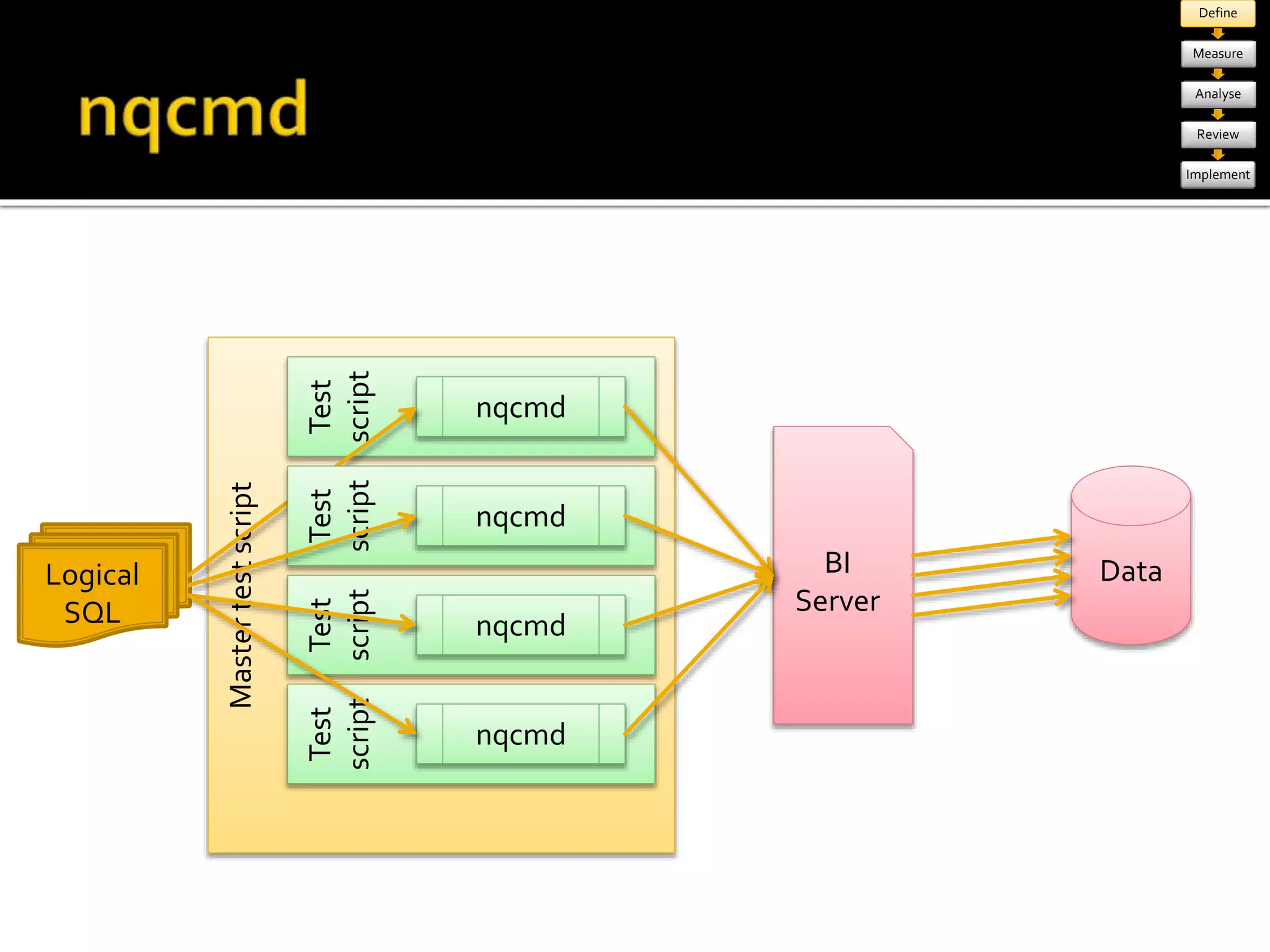

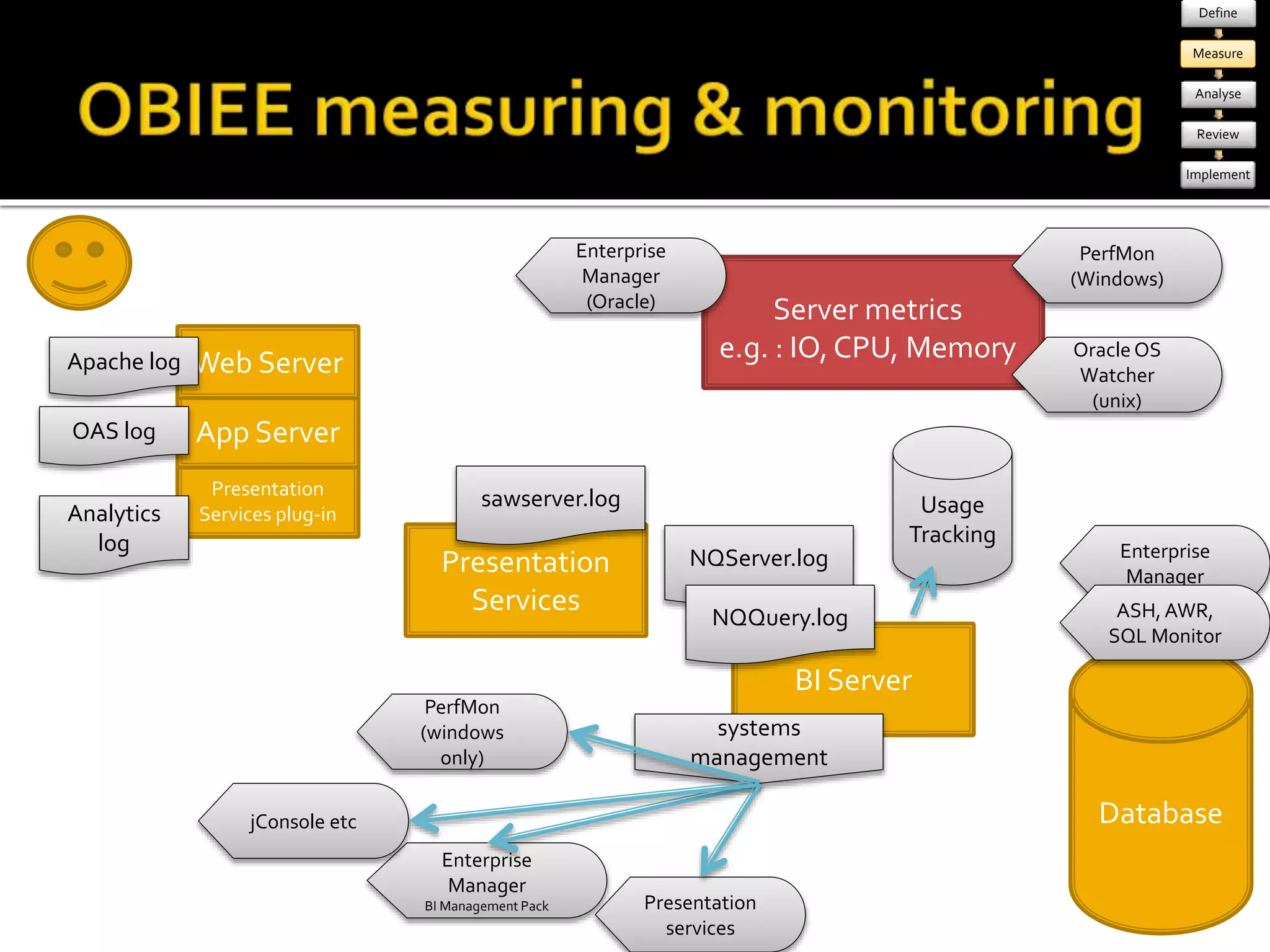

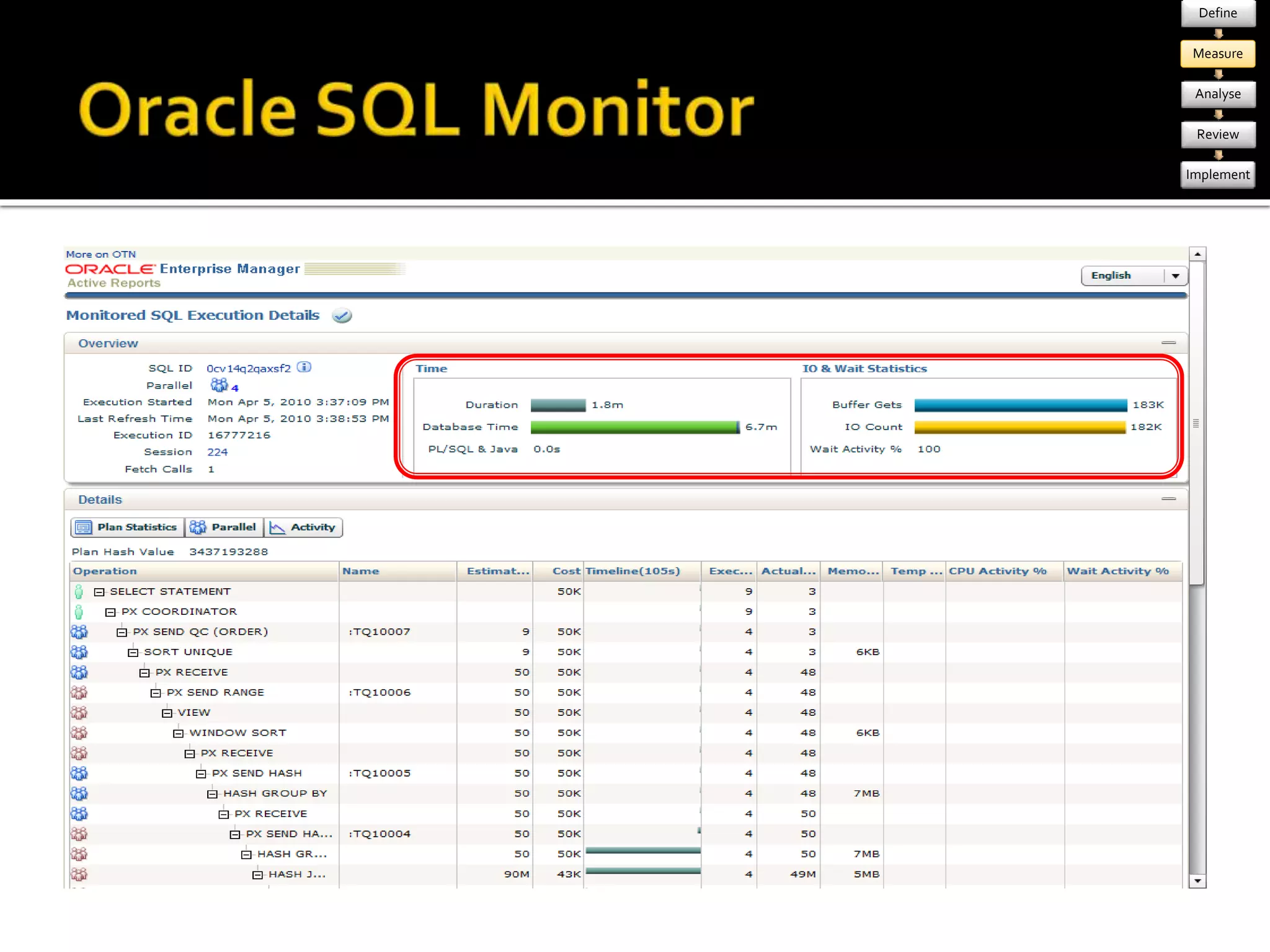

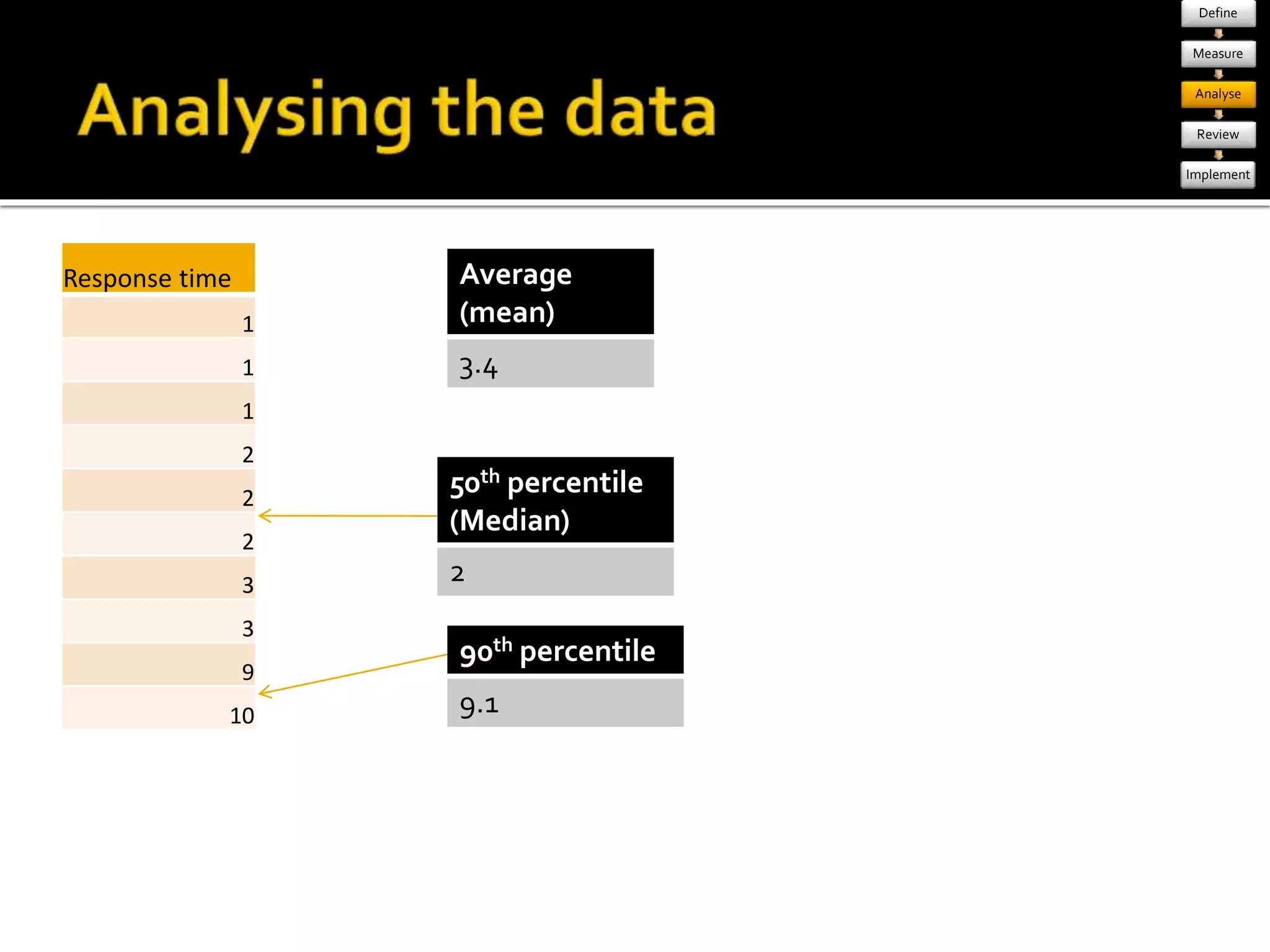

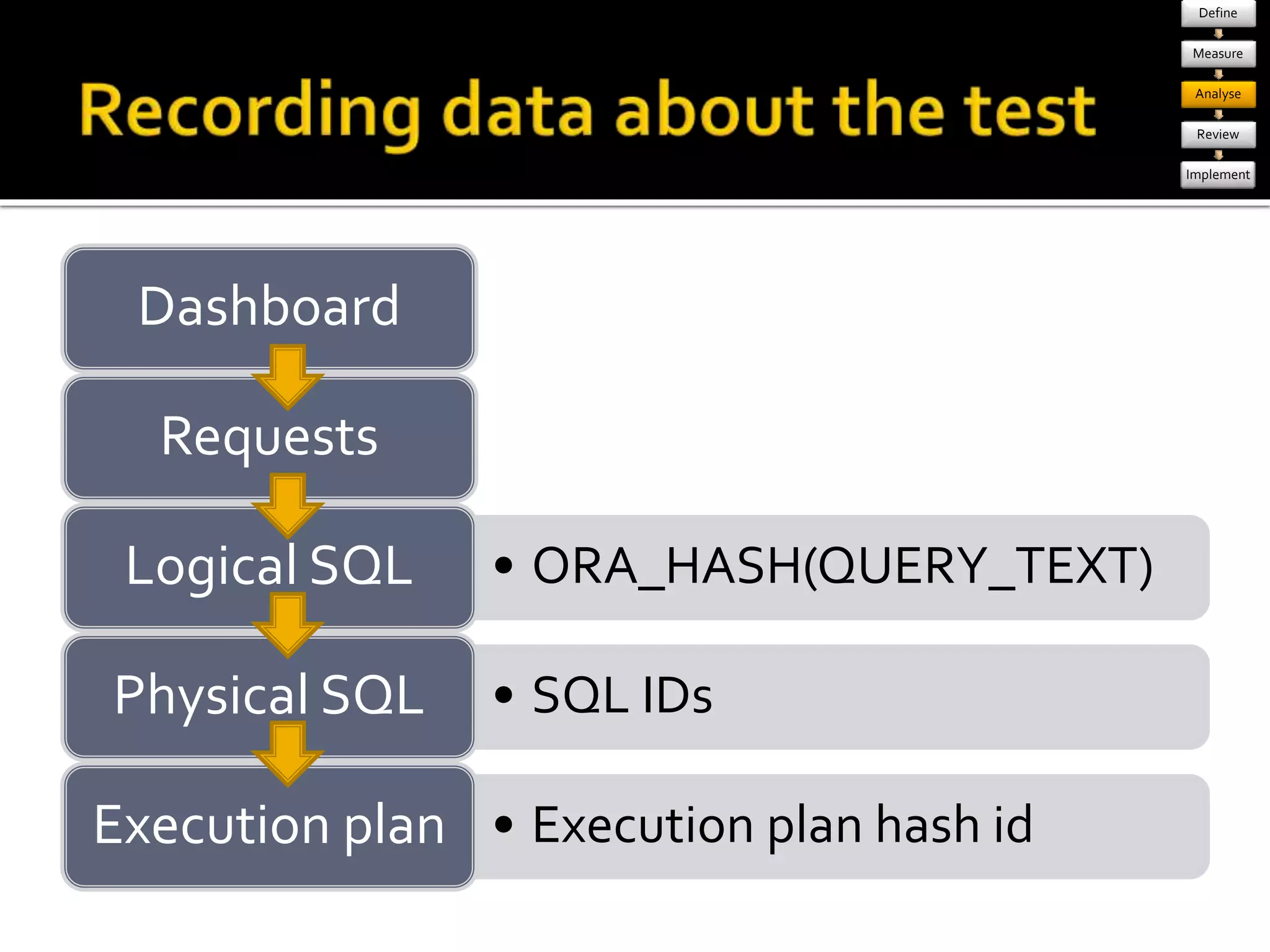

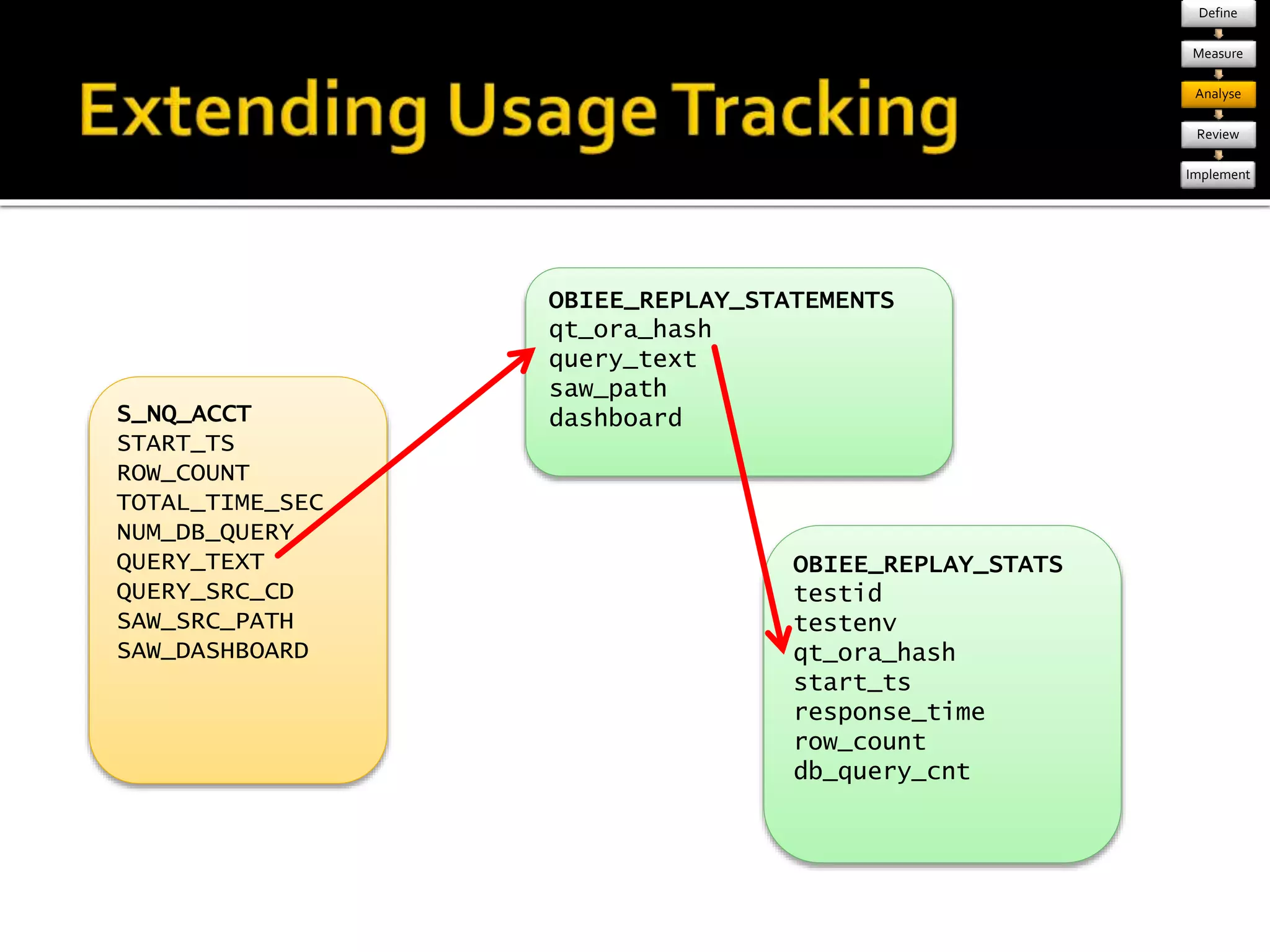

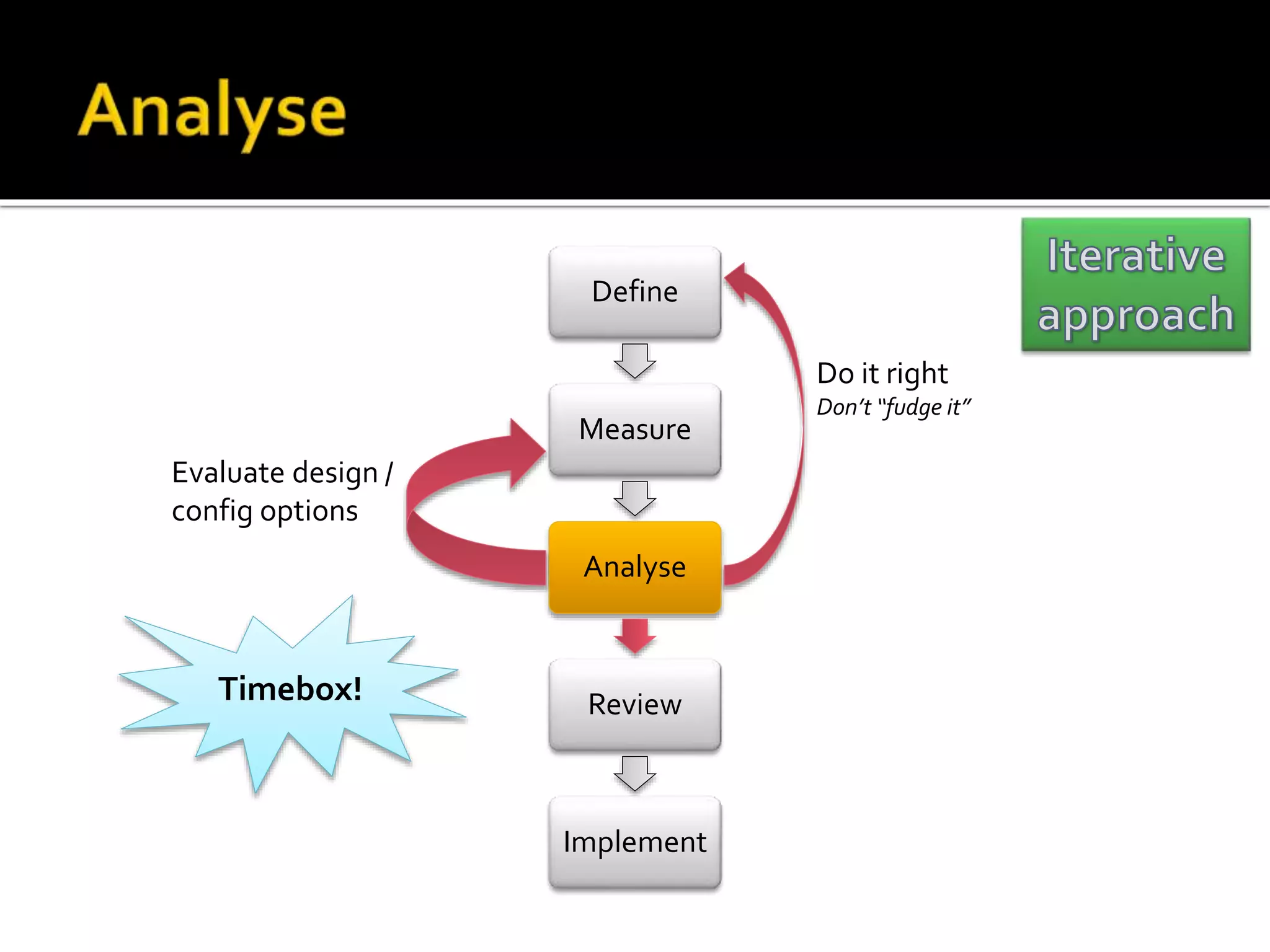

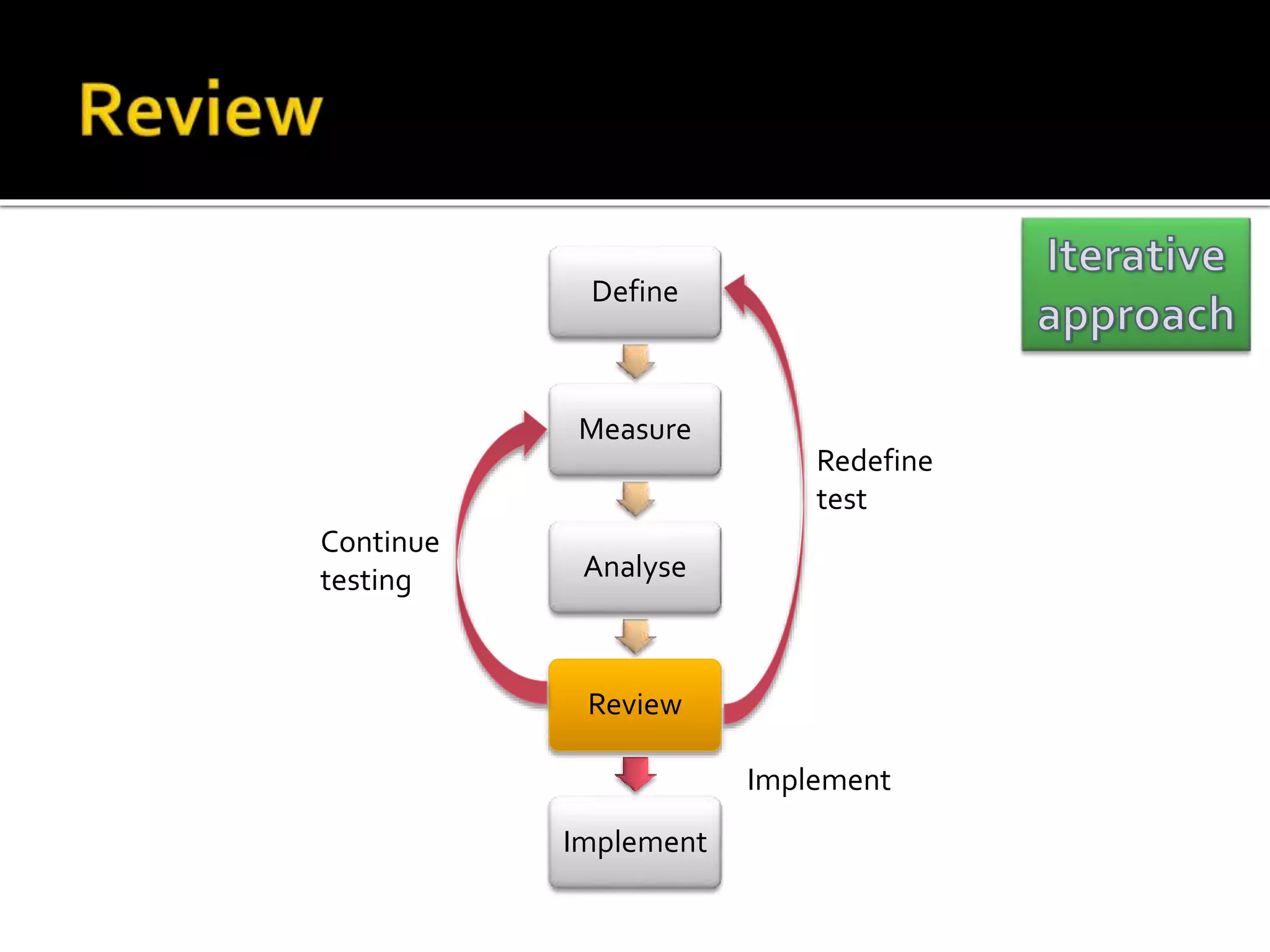

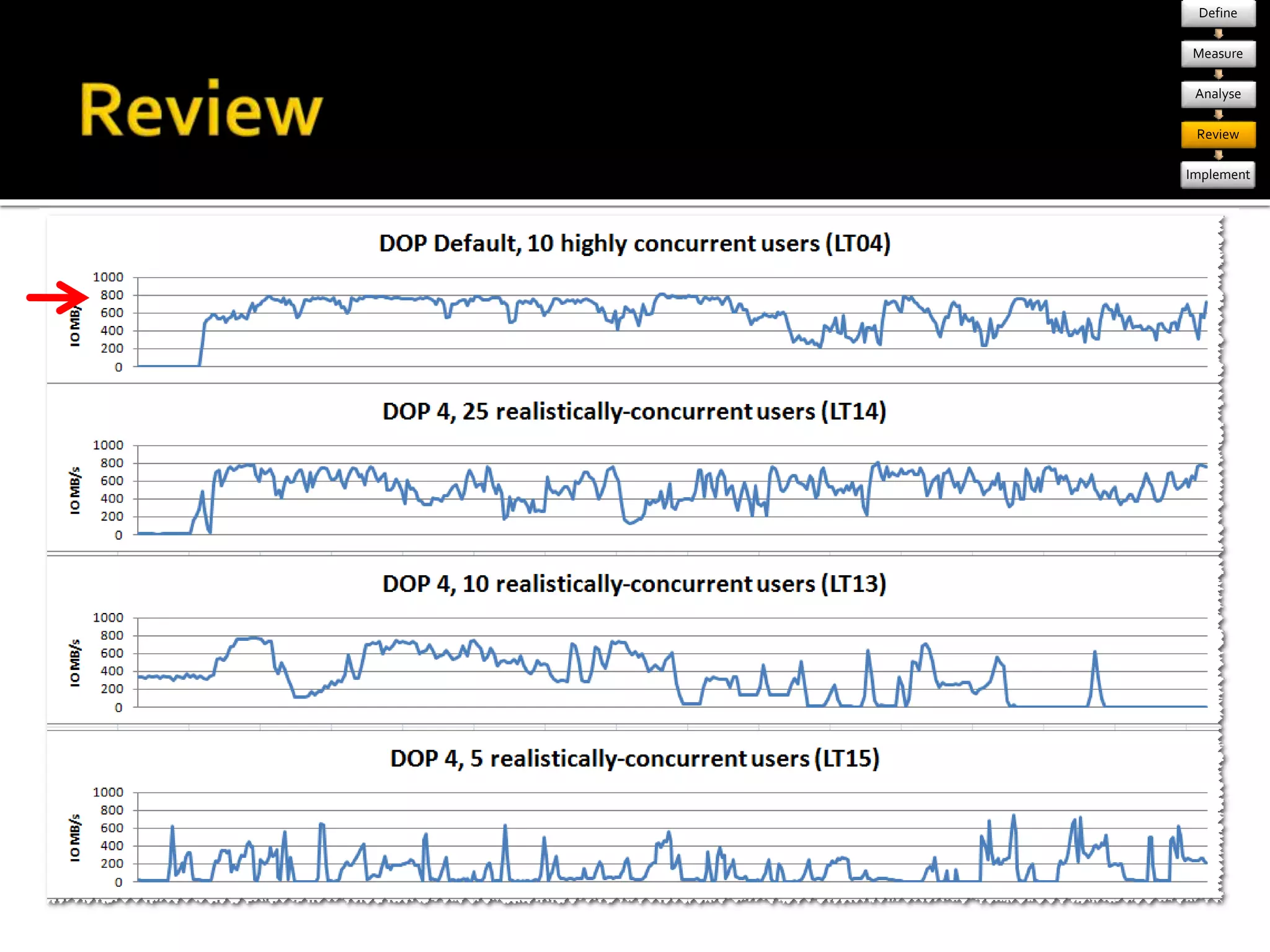

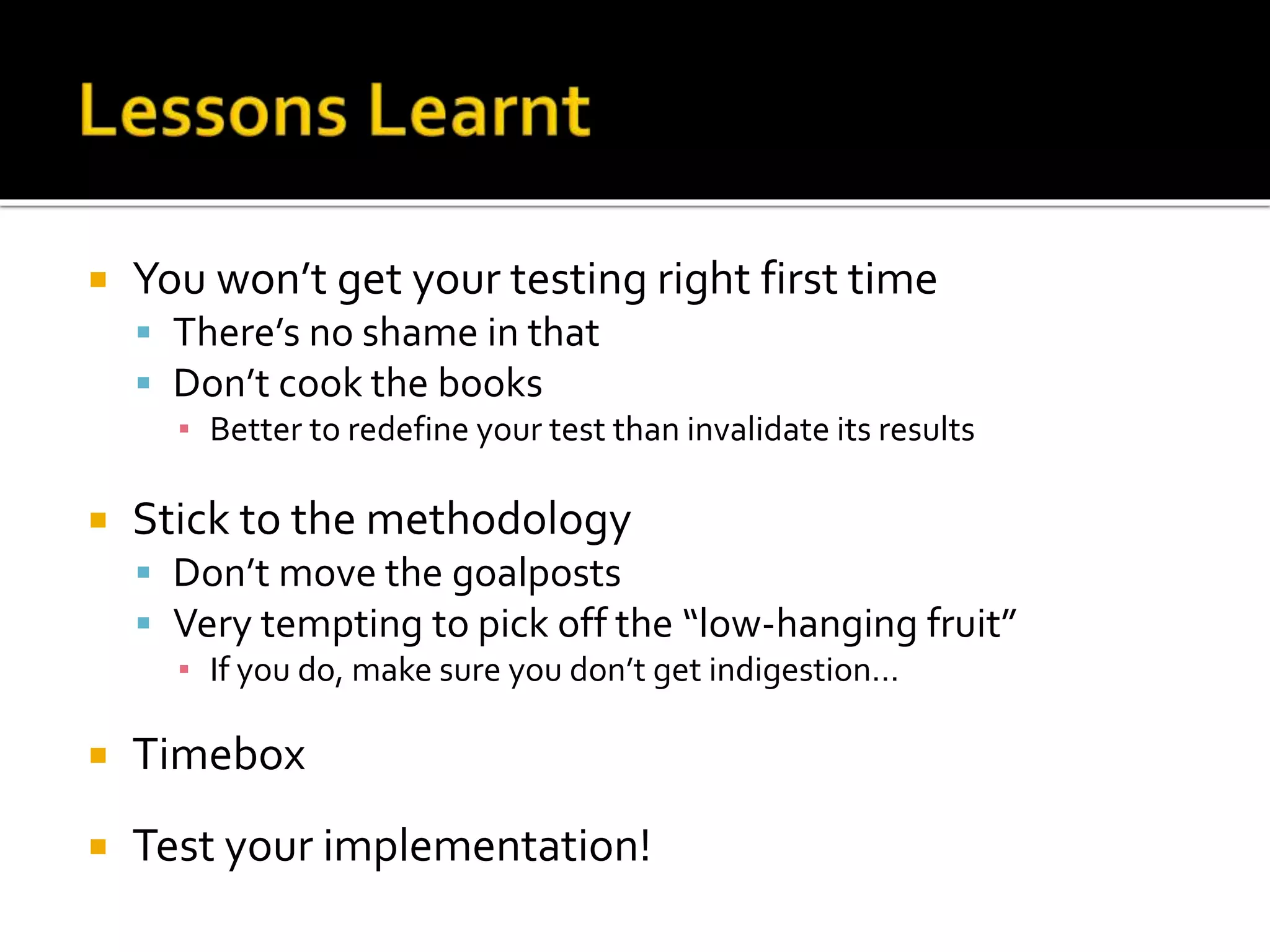

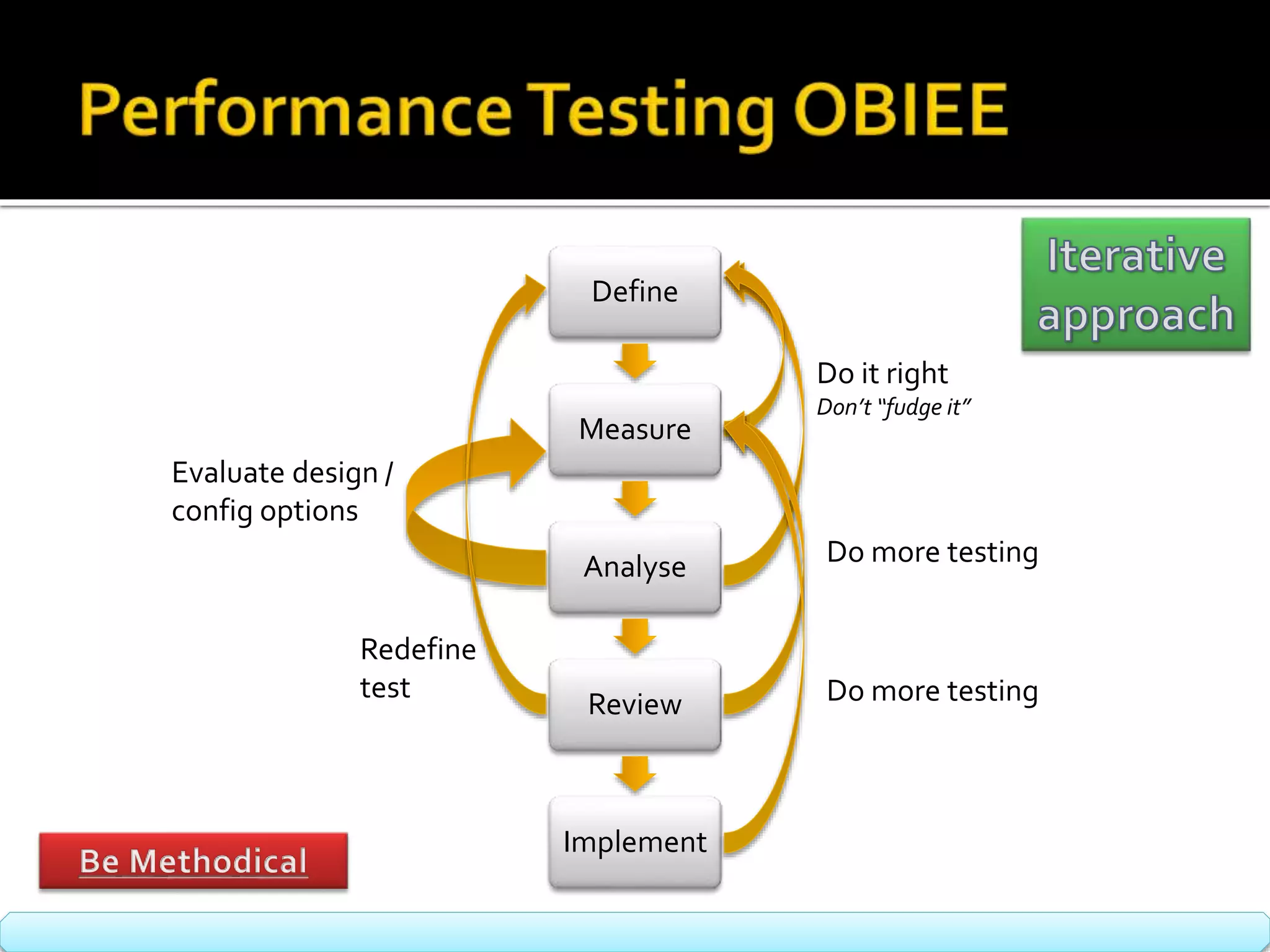

The document outlines a performance testing methodology for Oracle BI systems, emphasizing thorough testing and tuning to ensure user satisfaction and system scalability. It stresses the importance of establishing baselines, validating designs, and applying effective diagnostic techniques to ongoing issues. The guide also recommends how to define test parameters, covers tools for measuring performance, and calls for documentation throughout the testing process.