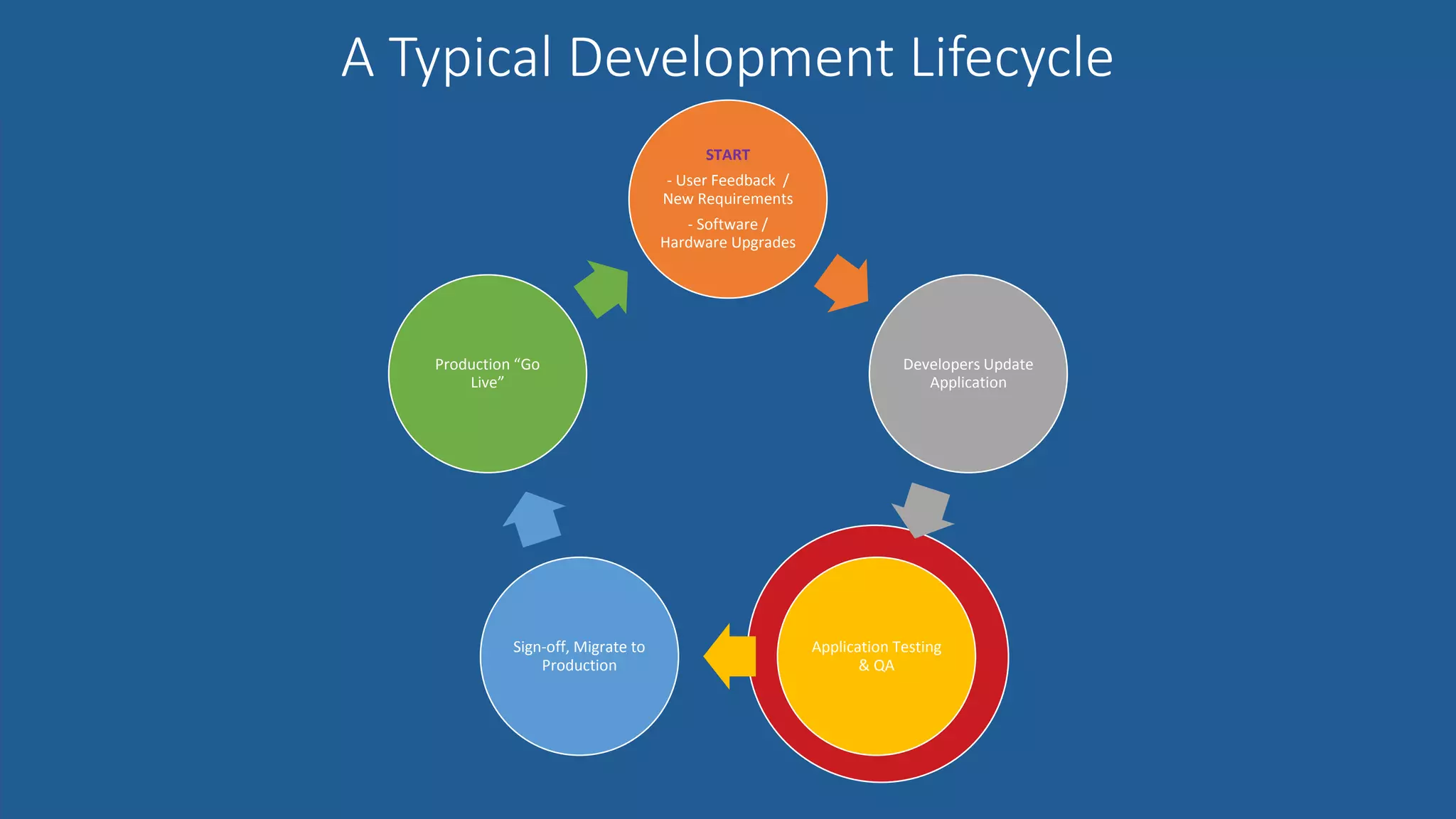

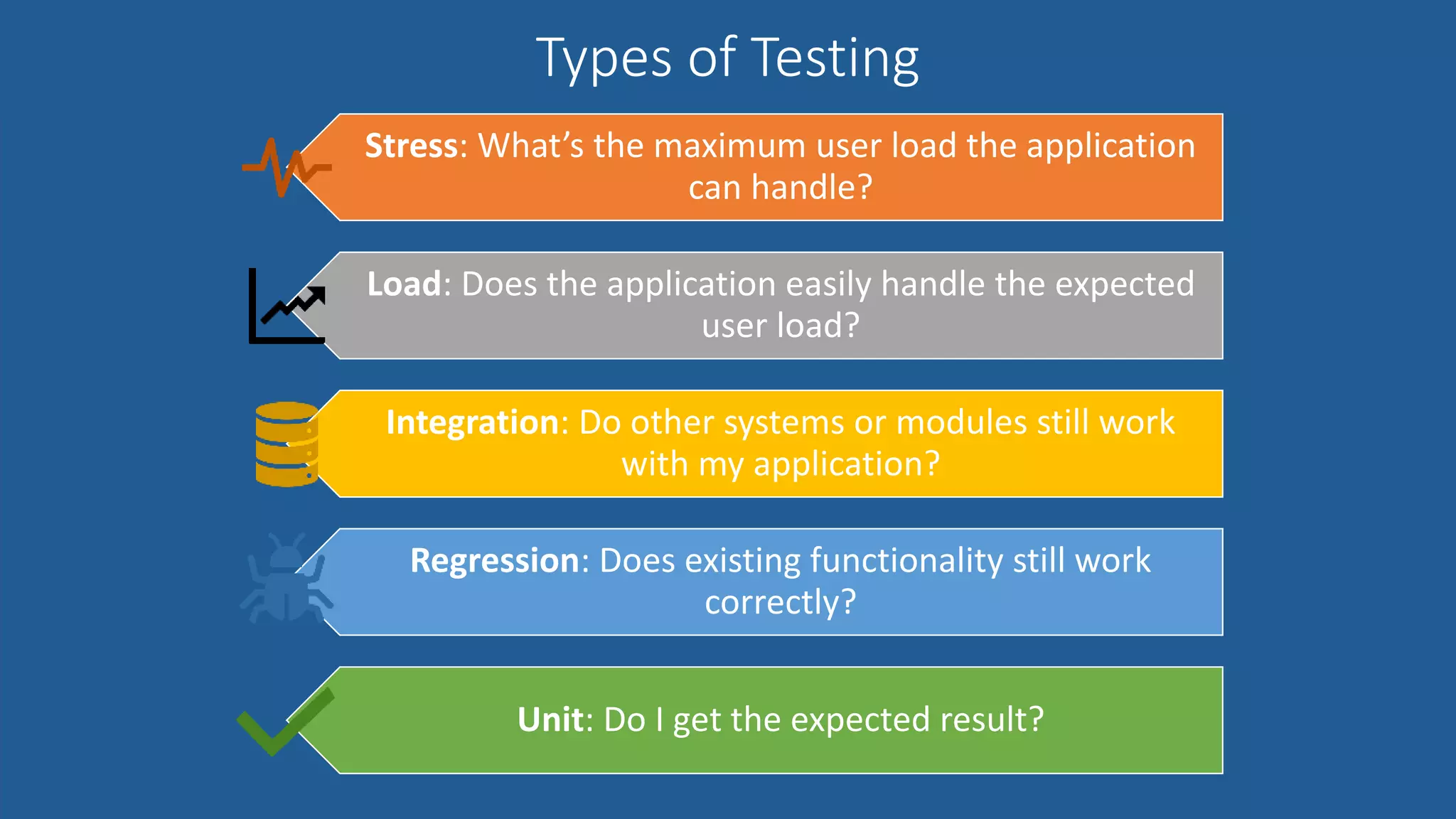

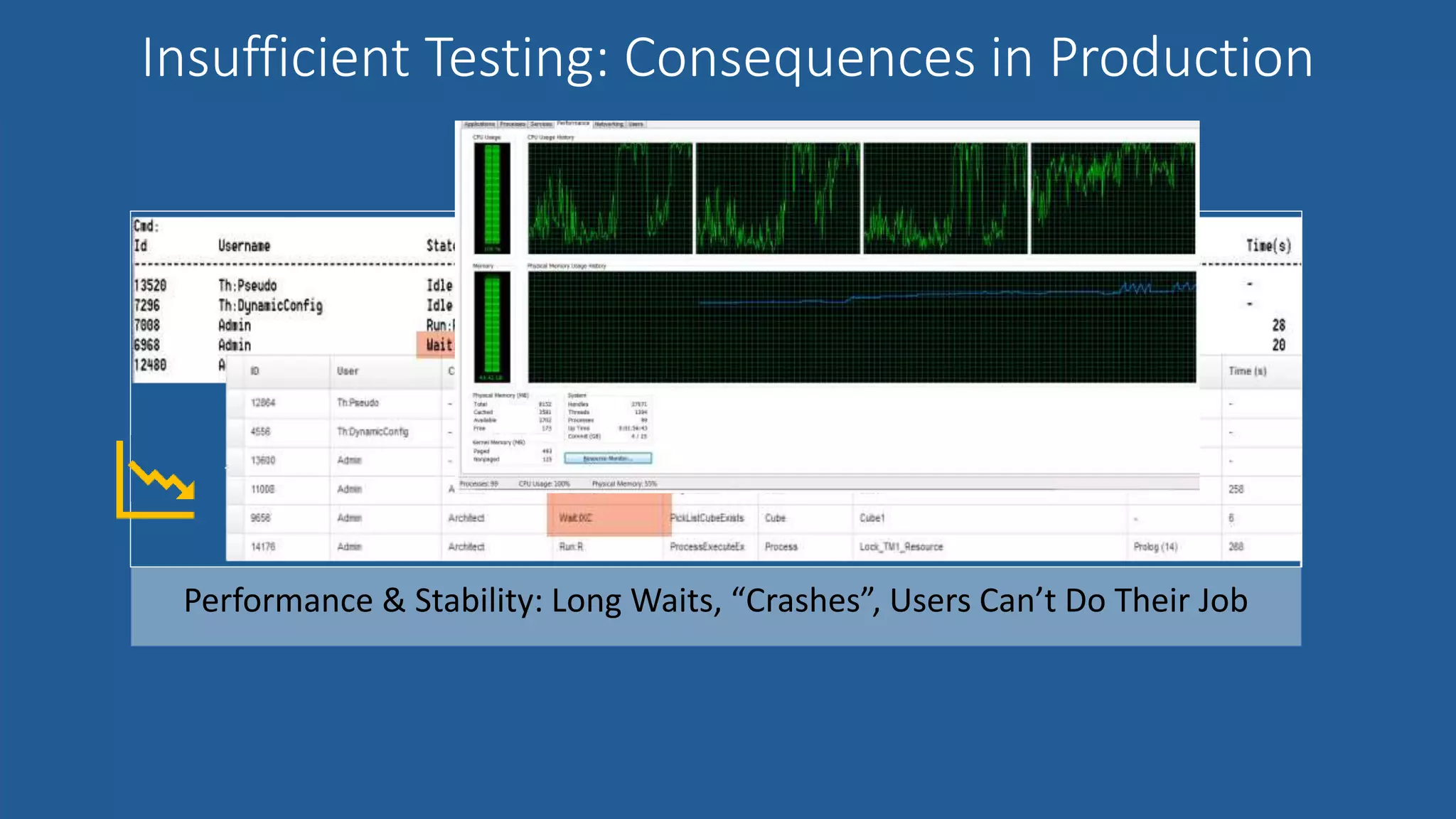

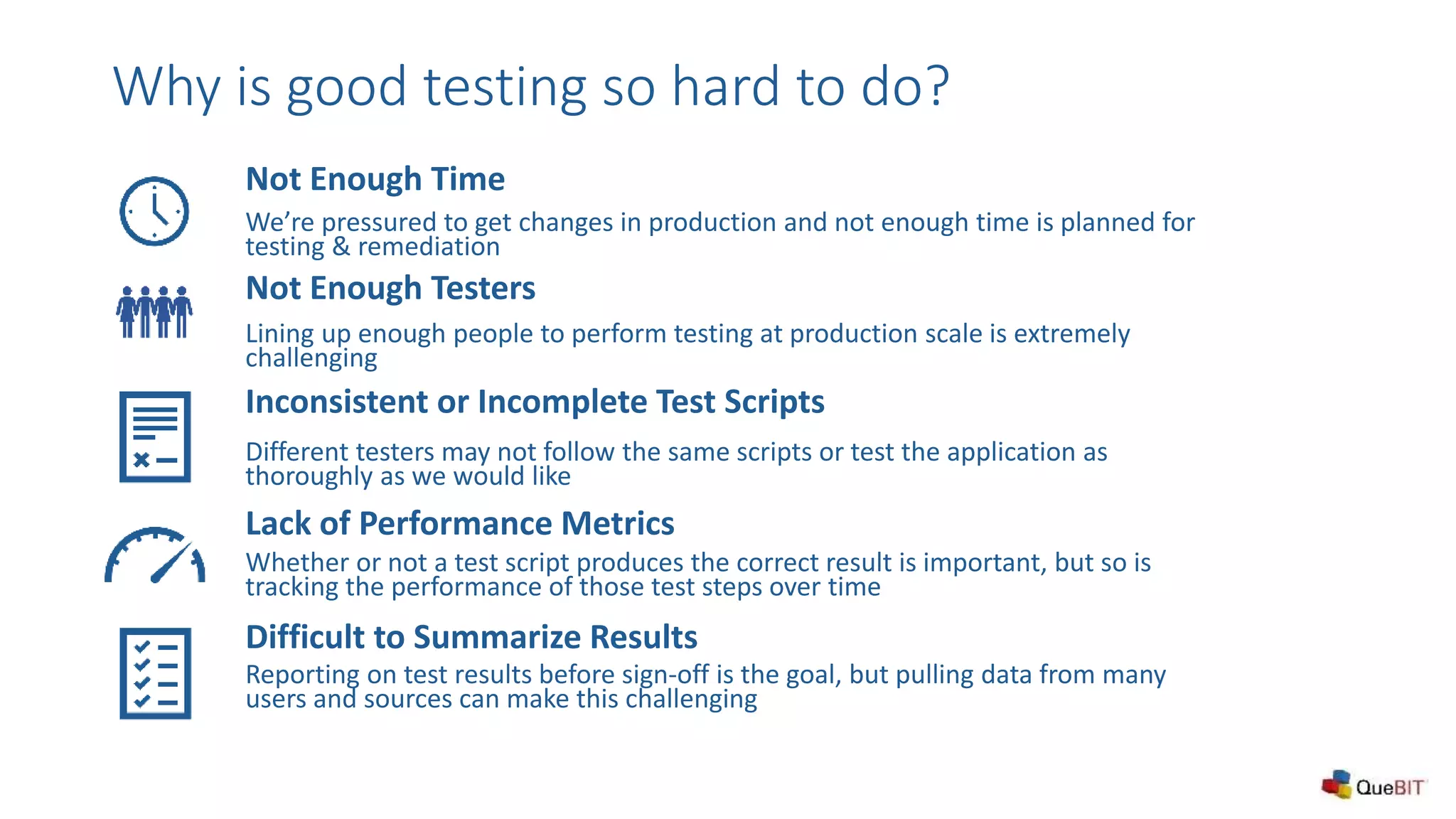

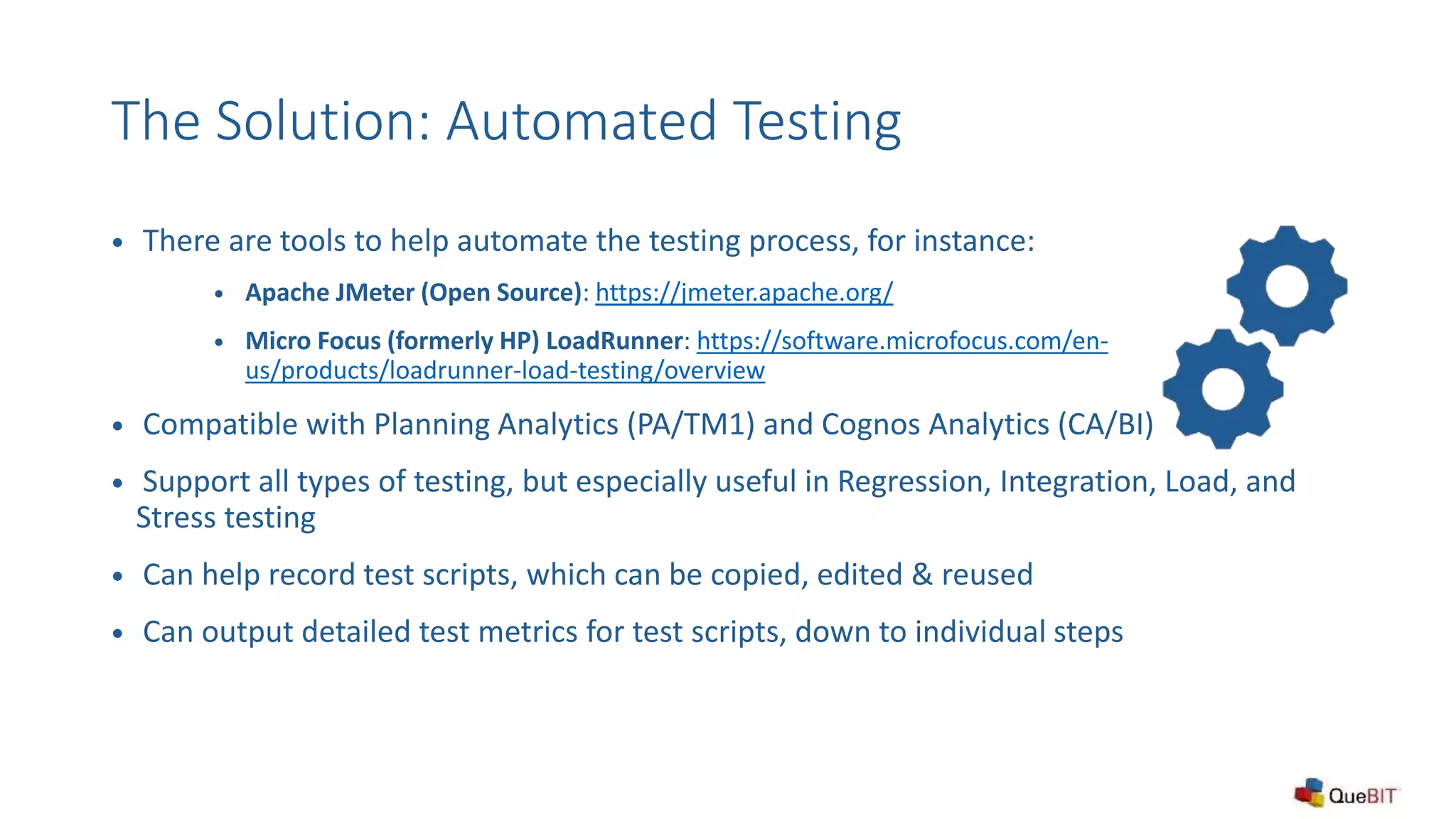

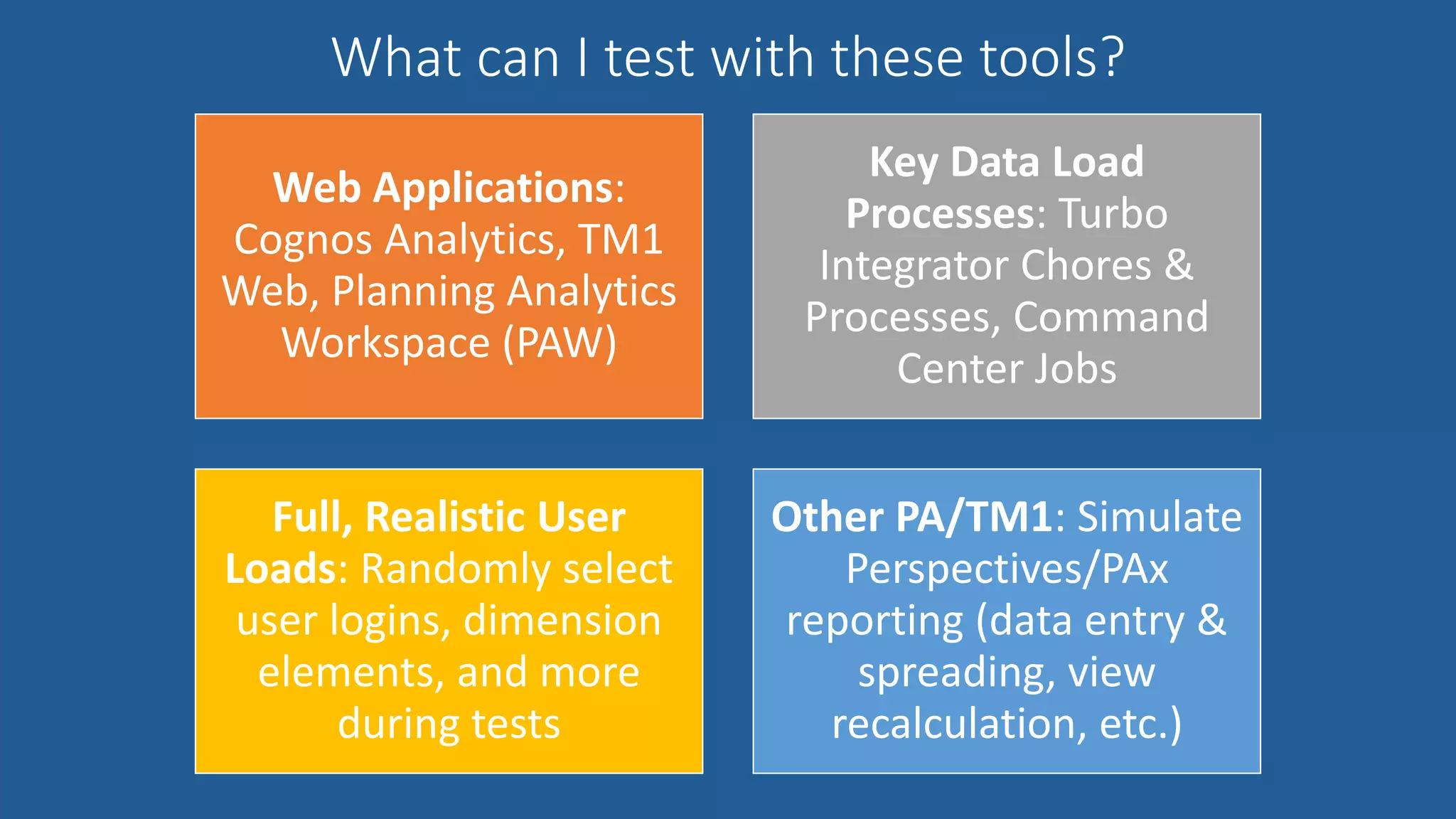

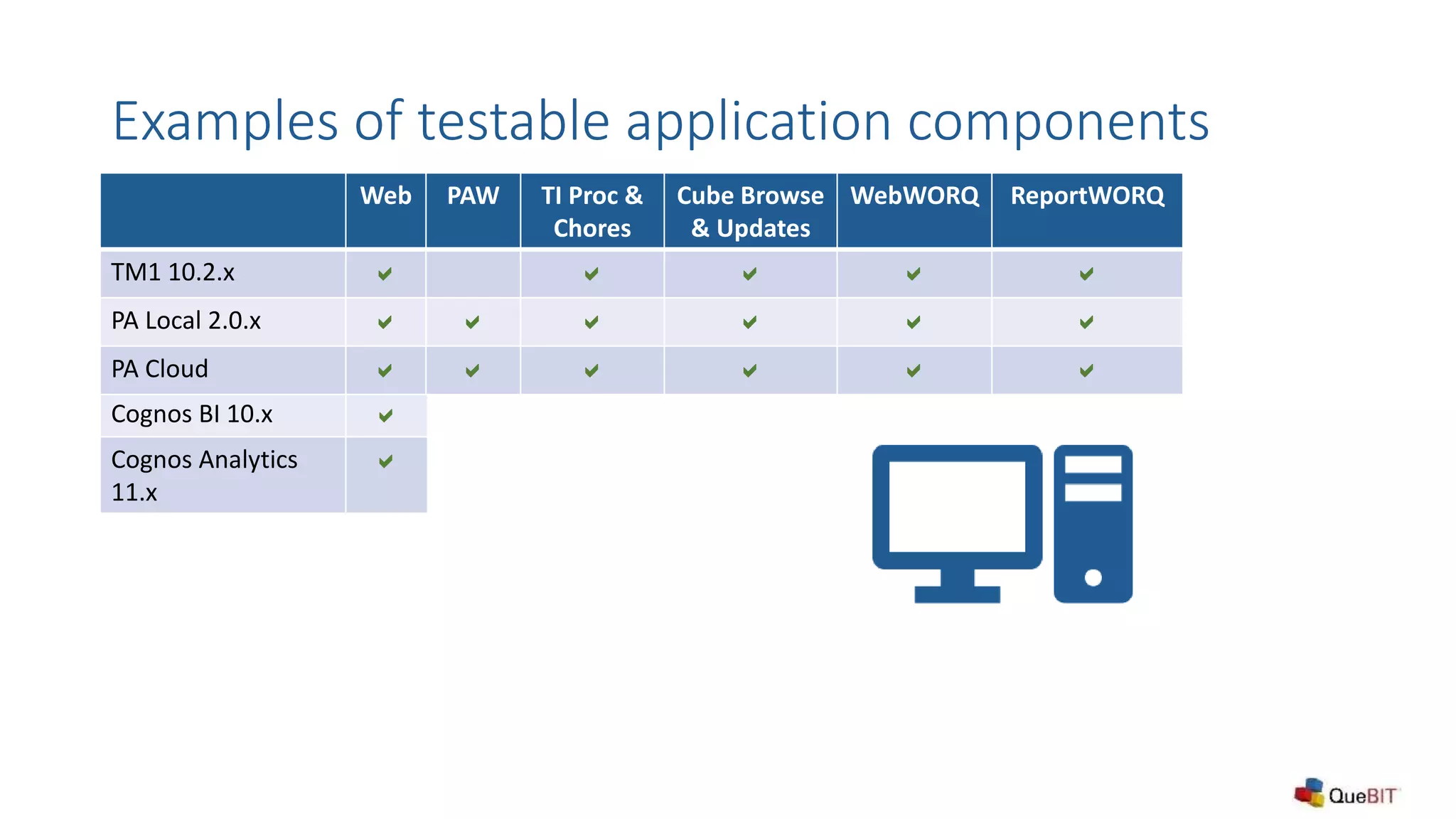

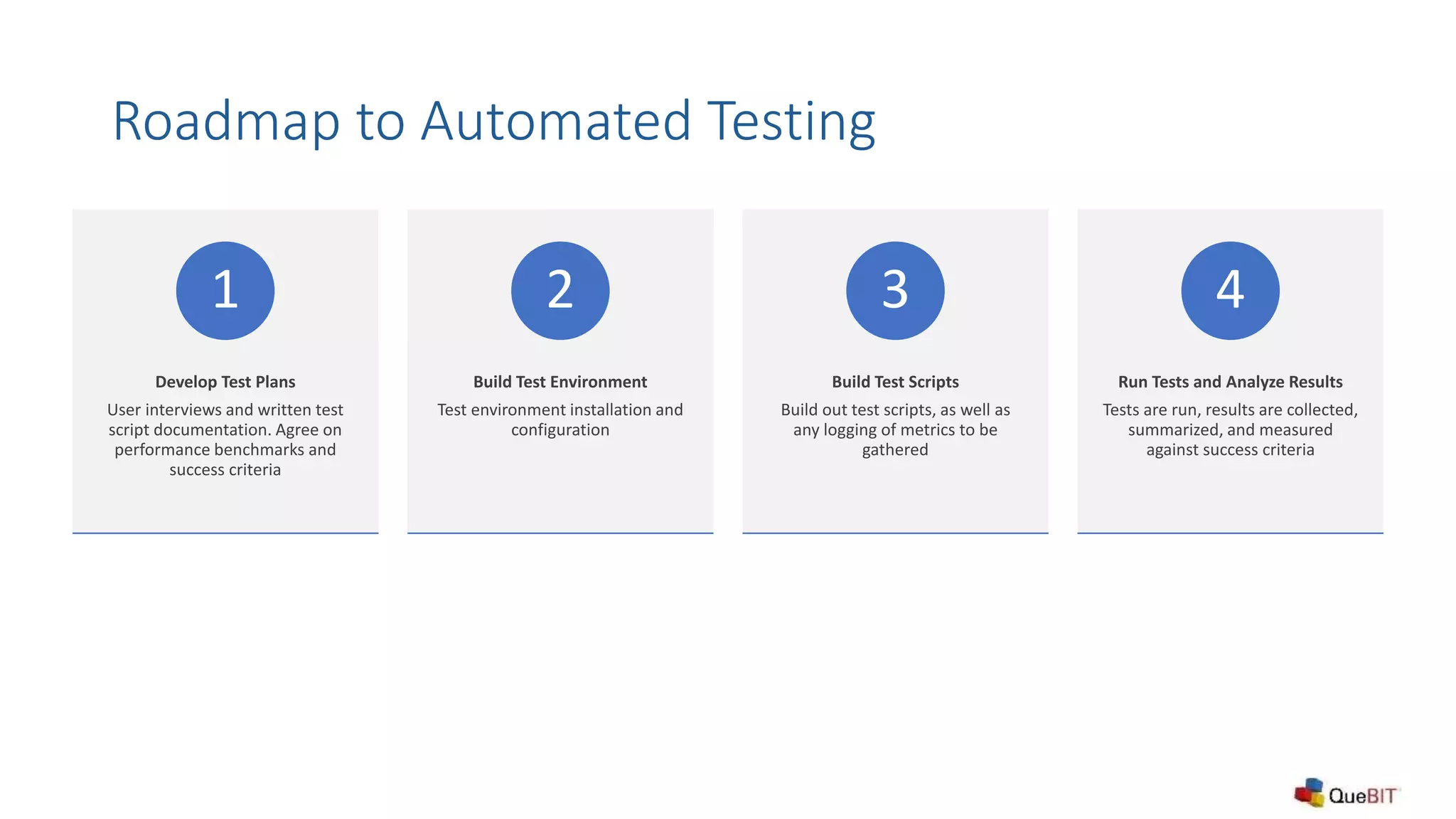

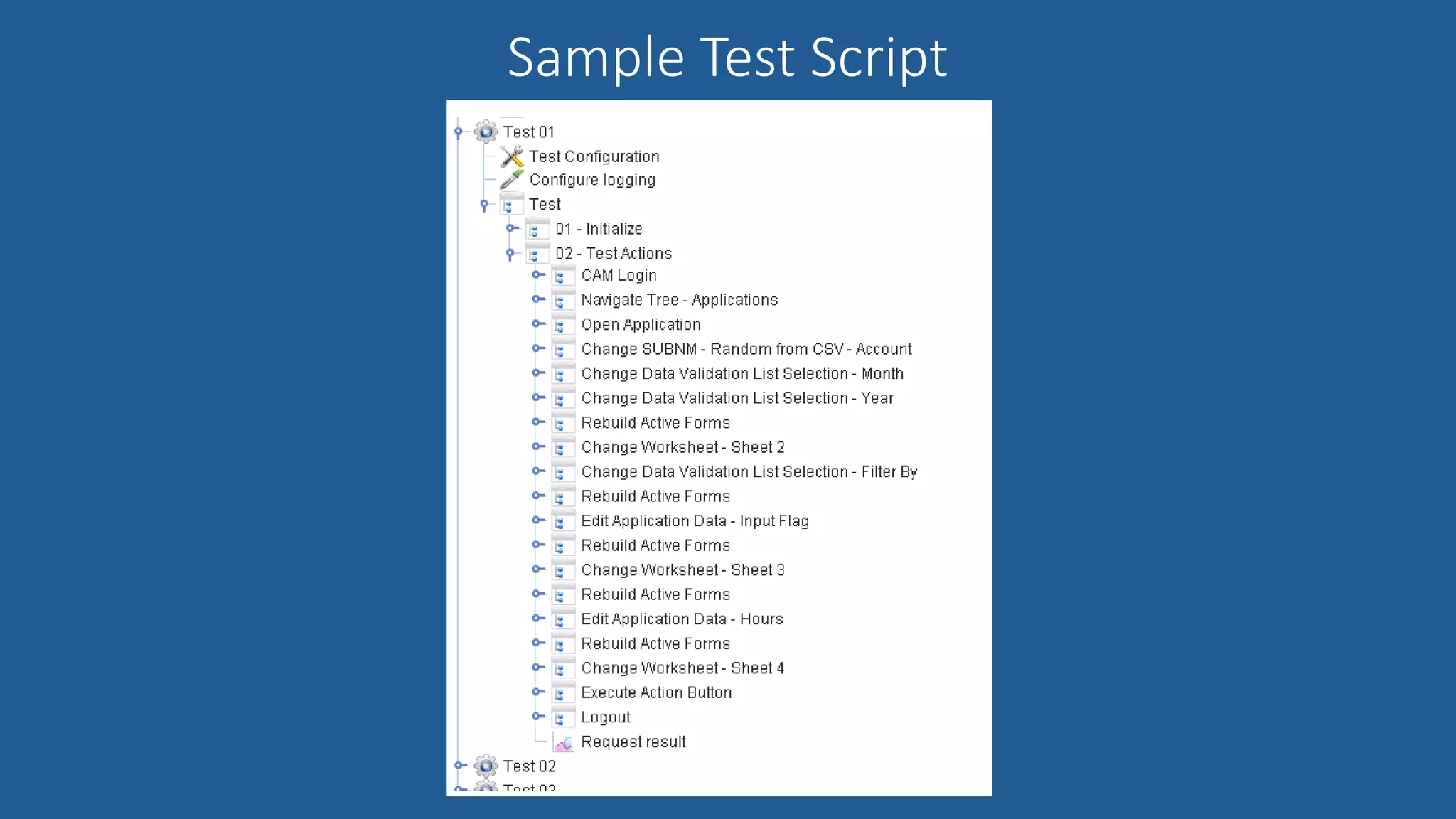

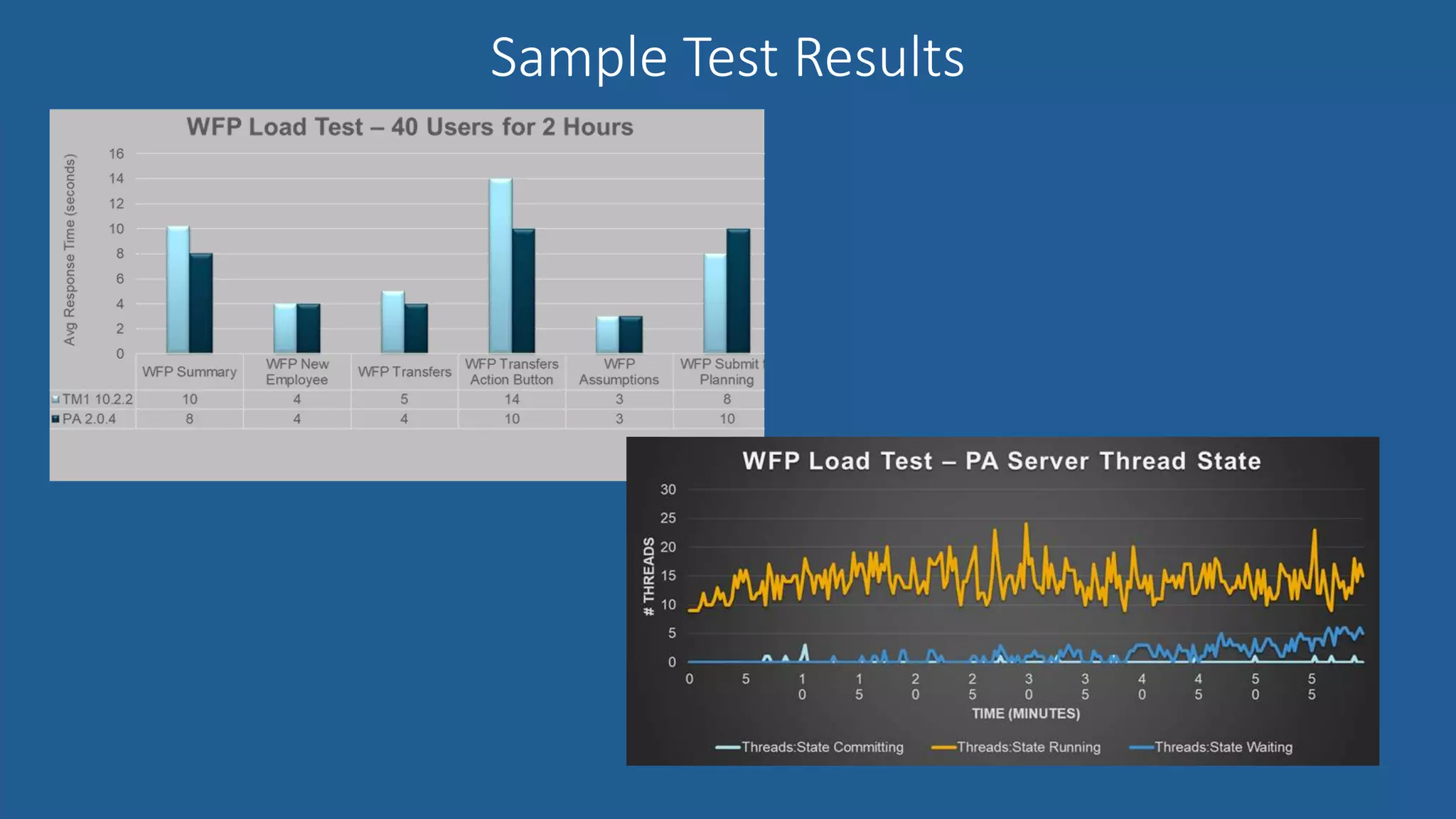

The webinar explains why performance testing matters in software development: without effective testing, applications risk problems such as crashes and data errors. It identifies time constraints and a lack of performance metrics as the main obstacles to adequate testing, and stresses the need for automated testing tools such as Apache JMeter and Micro Focus LoadRunner. The session also outlines a roadmap for establishing an automated testing process: developing test plans, building test environments, and analyzing results.
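The result-analysis step of the roadmap can be illustrated with a small sketch. It is not taken from the webinar: it assumes results were exported as CSV with `elapsed` (response time in milliseconds) and `success` columns, as in JMeter's default CSV result format, and the `summarize` helper and sample rows are hypothetical.

```python
import csv
import io
import math


def summarize(csv_text):
    """Summarize load-test samples from CSV text (assumed JMeter-style columns)."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    if not rows:
        raise ValueError("no samples to summarize")

    # Response times in milliseconds, sorted for the percentile lookup.
    elapsed = sorted(int(r["elapsed"]) for r in rows)
    failures = sum(1 for r in rows if r["success"].strip().lower() != "true")

    # Nearest-rank 95th percentile: smallest value with at least 95% of samples at or below it.
    rank = max(1, math.ceil(0.95 * len(elapsed)))

    return {
        "samples": len(elapsed),
        "error_rate": failures / len(elapsed),
        "mean_ms": sum(elapsed) / len(elapsed),
        "p95_ms": elapsed[rank - 1],
    }


# Hypothetical sample data, for illustration only.
SAMPLE = """timeStamp,elapsed,label,responseCode,success
1700000000000,120,home,200,true
1700000000100,180,home,200,true
1700000000200,950,checkout,500,false
1700000000300,210,checkout,200,true
"""

if __name__ == "__main__":
    print(summarize(SAMPLE))
```

Reporting a high percentile alongside the mean is a common choice because a few slow requests can hide behind an acceptable average.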