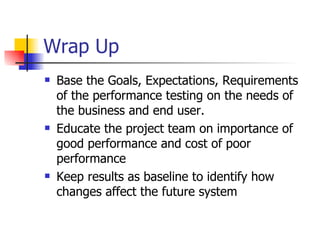

The presentation outlines a four-step process for setting performance test requirements and expectations:

1) Administering a performance testing questionnaire to understand how the system is used and what customers expect of it.

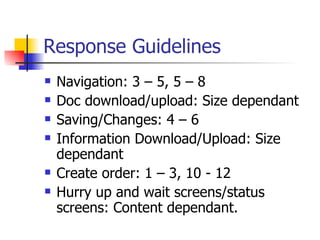

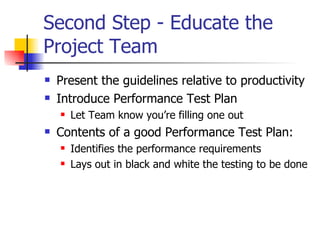

2) Educating the project team on guidelines for response times and the importance of performance testing.

3) Setting pass/fail performance criteria and documenting them in a test plan to obtain sign-off.

4) Running iterative performance tests, comparing results to expectations, and addressing any issues found.
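Steps 3 and 4 amount to writing down measurable thresholds and then checking each test run against them. The following is a minimal sketch under stated assumptions: each criterion is taken to be a maximum response time at a given percentile for one transaction, and percentiles use the nearest-rank method (one of several common definitions). The `Criterion` class, the `evaluate` and `nearest_rank_percentile` functions, the transaction names, and all numbers are hypothetical illustrations, not taken from the presentation.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    """One pass/fail line from a test plan (hypothetical structure)."""
    transaction: str    # hypothetical transaction name, e.g. "login"
    percentile: float   # percentile the threshold applies to, e.g. 90
    max_seconds: float  # agreed maximum response time at that percentile


def nearest_rank_percentile(samples, pct):
    """Return the pct-th percentile of samples using the nearest-rank method."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    # Multiply before dividing to avoid floating-point drift in the rank.
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def evaluate(criteria, results):
    """Compare measured response times (seconds) against each criterion.

    Returns a dict mapping transaction -> (measured value, passed?).
    """
    report = {}
    for c in criteria:
        measured = nearest_rank_percentile(results[c.transaction], c.percentile)
        report[c.transaction] = (measured, measured <= c.max_seconds)
    return report


if __name__ == "__main__":
    # Hypothetical thresholds and sample data, not from the presentation.
    plan = [
        Criterion("login", 90, 2.0),
        Criterion("search", 90, 3.0),
    ]
    run = {
        "login": [0.8, 1.1, 1.4, 1.9, 2.5, 1.0, 1.2, 1.3, 1.5, 1.6],
        "search": [2.1, 2.8, 3.4, 3.9, 2.2, 2.5, 3.1, 4.2, 2.9, 3.0],
    }
    for name, (value, ok) in evaluate(plan, run).items():
        print(f"{name}: p90={value:.2f}s -> {'PASS' if ok else 'FAIL'}")
```

With the sample data, "login" passes (90th percentile 1.9 s against a 2.0 s limit) and "search" fails (3.9 s against 3.0 s). In an iterative cycle, a failure like this is the point at which the issue is investigated, fixed, and the test rerun against the same signed-off criteria.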

![Questions? Contact me via email: [email_address]. I will send a copy of the performance testing questionnaires for creating a performance test plan.](https://image.slidesharecdn.com/septembersquadpresentation1-12610940510187-phpapp02/85/September_08-SQuAd-Presentation-27-320.jpg)