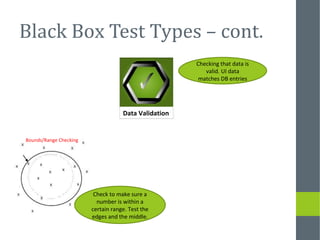

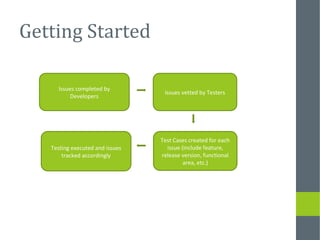

This document gives testers guidance on testing software effectively. It outlines three approaches: black-box, white-box, and grey-box testing. It encourages testers to think like a user and to actively try to break the software. It also covers test documentation, regression testing, and tips for testing across browsers and operating systems.