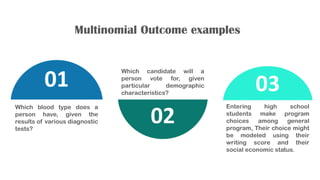

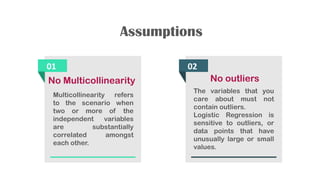

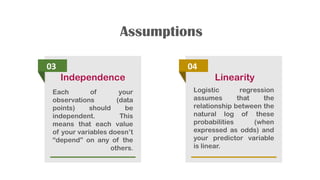

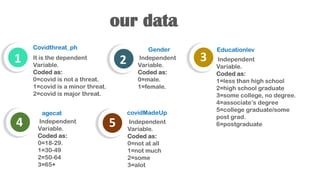

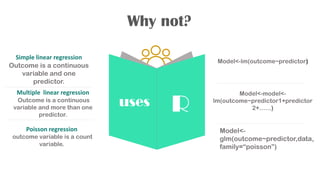

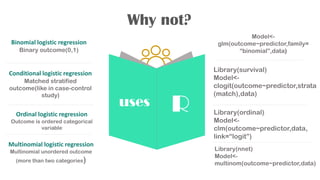

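Multinomial logistic regression extends binary logistic regression to outcomes with more than two unordered categories. It models the probability of each category as a function of the predictors, with coefficients estimated by maximum likelihood. Examples of multinomial outcomes include blood type and candidate choice in an election. The assumptions include no multicollinearity among the predictors, no influential outliers, independence of observations, and a linear relationship between continuous predictors and the log-odds of the outcome. For an outcome with k categories, the fit yields k-1 logistic regression equations, each comparing one category with a chosen reference category. Interpretation relies on pseudo R-square as a measure of overall fit, p-values for the significance of individual coefficients, and odds ratios (the exponentiated coefficients) for the size of each effect. Resources for practicing on different datasets were also provided.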
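The pieces described above can be sketched in a few lines of NumPy. This is an illustrative sketch, not the method used in the slides. The data are synthetic, the helper names (`category_probabilities`, `fit_multinomial_logit`) are hypothetical, and plain gradient ascent stands in for the Newton-type optimizers that statistical packages normally use. McFadden's variant is assumed for the pseudo R-square, since the summary does not say which one is meant.

```python
import numpy as np

def category_probabilities(X, B):
    """Return an (n, k) matrix of category probabilities.

    X is an (n, p) design matrix whose first column is the intercept.
    B is a (p, k-1) coefficient matrix, one column per non-reference
    category. Column 0 of the result is the reference category.
    """
    # The reference category has its linear predictor fixed at 0.
    eta = np.hstack([np.zeros((X.shape[0], 1)), X @ B])
    eta -= eta.max(axis=1, keepdims=True)  # numerical stability
    exp_eta = np.exp(eta)
    return exp_eta / exp_eta.sum(axis=1, keepdims=True)

def fit_multinomial_logit(X, y, k, lr=0.5, n_iter=5000):
    """Maximum likelihood fit by gradient ascent (illustrative only)."""
    n, p = X.shape
    Y = np.eye(k)[y]                 # one-hot encoding of the outcome
    B = np.zeros((p, k - 1))
    for _ in range(n_iter):
        P = category_probabilities(X, B)
        # Gradient of the mean log-likelihood with respect to B.
        B += lr * X.T @ (Y[:, 1:] - P[:, 1:]) / n
    return B

# Synthetic data: one predictor, k = 3 categories (assumed values).
rng = np.random.default_rng(0)
n, k = 600, 3
x = rng.normal(size=n)
X = np.column_stack([np.ones(n), x])
B_true = np.array([[0.5, -0.5],     # intercepts
                   [1.0, -1.0]])    # slopes
P_true = category_probabilities(X, B_true)
y = np.array([rng.choice(k, p=row) for row in P_true])

B_hat = fit_multinomial_logit(X, y, k)

# Odds ratios: exponentiated slopes, each relative to category 0.
odds_ratios = np.exp(B_hat[1:])

# McFadden's pseudo R-square: 1 - LL(model) / LL(intercept-only).
P_hat = category_probabilities(X, B_hat)
ll_model = np.log(P_hat[np.arange(n), y]).sum()
class_share = np.bincount(y, minlength=k) / n
ll_null = np.log(class_share[y]).sum()
pseudo_r2 = 1.0 - ll_model / ll_null

print("coefficients (k-1 columns):\n", B_hat)
print("odds ratios:", odds_ratios)
print("McFadden pseudo R-square:", pseudo_r2)
```

Each column of `B_hat` is one of the k-1 equations, so an odds ratio above 1 means that a one-unit increase in the predictor raises the odds of that category relative to the reference category, and a value below 1 means it lowers them.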