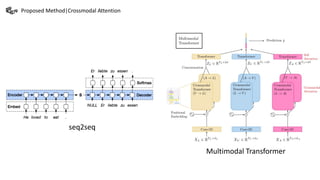

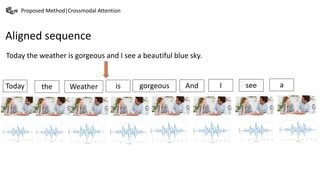

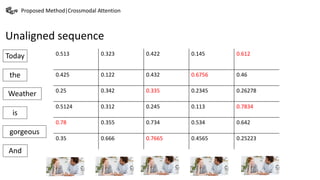

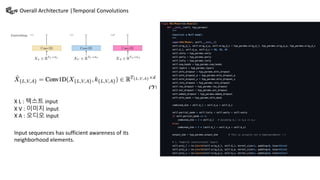

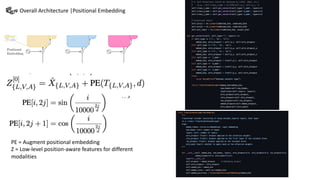

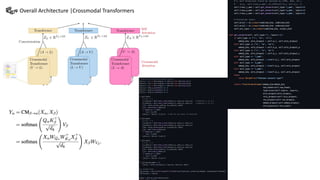

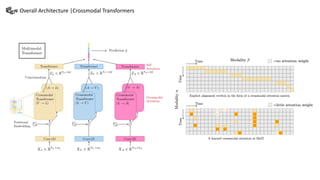

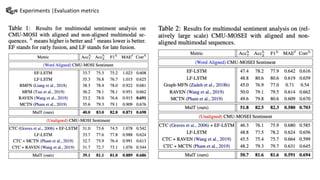

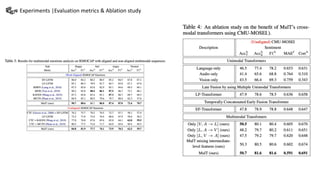

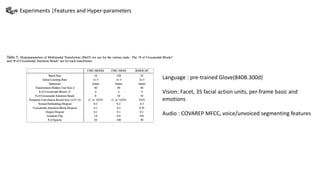

This document summarizes a presentation on multimodal language analysis with multimodal transformers applied to unaligned multimodal language sequences. It explains how a multimodal transformer processes text, vision, and audio inputs jointly through crossmodal attention, in which one modality attends directly to another. The proposed method produces representations that capture the relationships between modalities without requiring the input sequences to be strictly aligned in time. The model is evaluated experimentally with several metrics, and ablation studies examine the contribution of its components.
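Crossmodal attention can be sketched as scaled dot-product attention in which one modality (the target) supplies the queries and another (the source) supplies the keys and values. The block below is a minimal NumPy sketch under that assumption, not the presented model's implementation. The function name `crossmodal_attention`, the random projection matrices, and the feature sizes are hypothetical. Because the attention weights form a (target length × source length) matrix, the 5 text steps and 12 audio frames in the example never need a one-to-one alignment.

```python
import numpy as np

def crossmodal_attention(x_target, x_source, w_q, w_k, w_v):
    """Target modality attends to source modality (sketch, assumed formulation).

    x_target: (T_target, d_target) features, e.g. text
    x_source: (T_source, d_source) features, e.g. audio
    T_target and T_source may differ; no alignment between them is needed.
    """
    q = x_target @ w_q                      # queries from target: (T_target, d_k)
    k = x_source @ w_k                      # keys from source:    (T_source, d_k)
    v = x_source @ w_v                      # values from source:  (T_source, d_v)
    scores = q @ k.T / np.sqrt(k.shape[-1]) # (T_target, T_source)
    scores -= scores.max(axis=-1, keepdims=True)    # subtract row max for numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)  # softmax over source time steps
    return weights @ v, weights             # (T_target, d_v), (T_target, T_source)

# Hypothetical inputs: random features for two unaligned sequences.
rng = np.random.default_rng(0)
text = rng.normal(size=(5, 8))    # 5 text steps, 8-dim features
audio = rng.normal(size=(12, 4))  # 12 audio frames, 4-dim features
d_k = d_v = 6
w_q = rng.normal(size=(8, d_k))
w_k = rng.normal(size=(4, d_k))
w_v = rng.normal(size=(4, d_v))

out, attn = crossmodal_attention(text, audio, w_q, w_k, w_v)
print(out.shape, attn.shape)  # (5, 6) (5, 12)
```

The output keeps the target's length (one vector per text step), each a weighted mix of all audio frames. How such blocks are stacked or combined across modality pairs is not described in this summary and is left out of the sketch.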