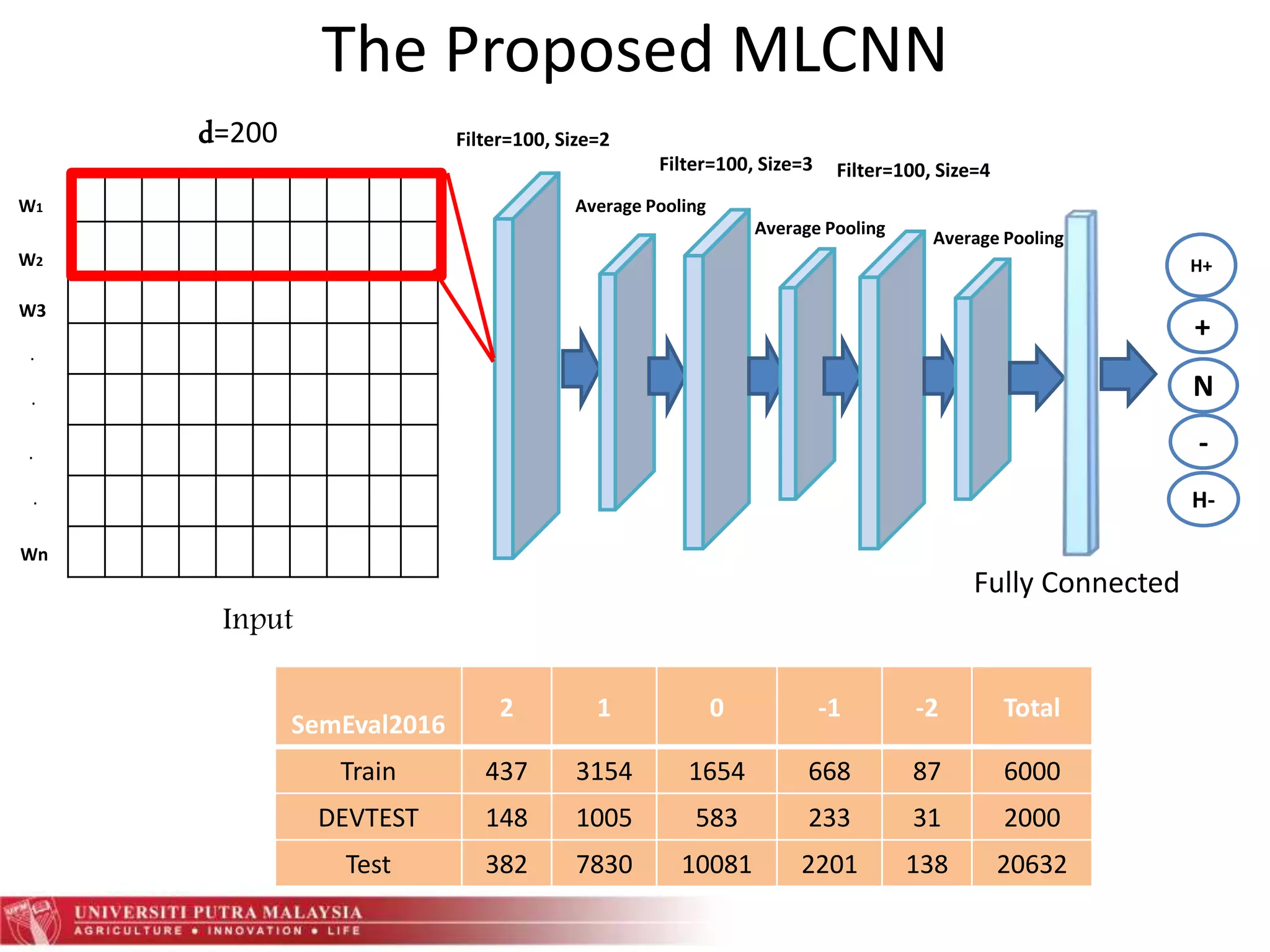

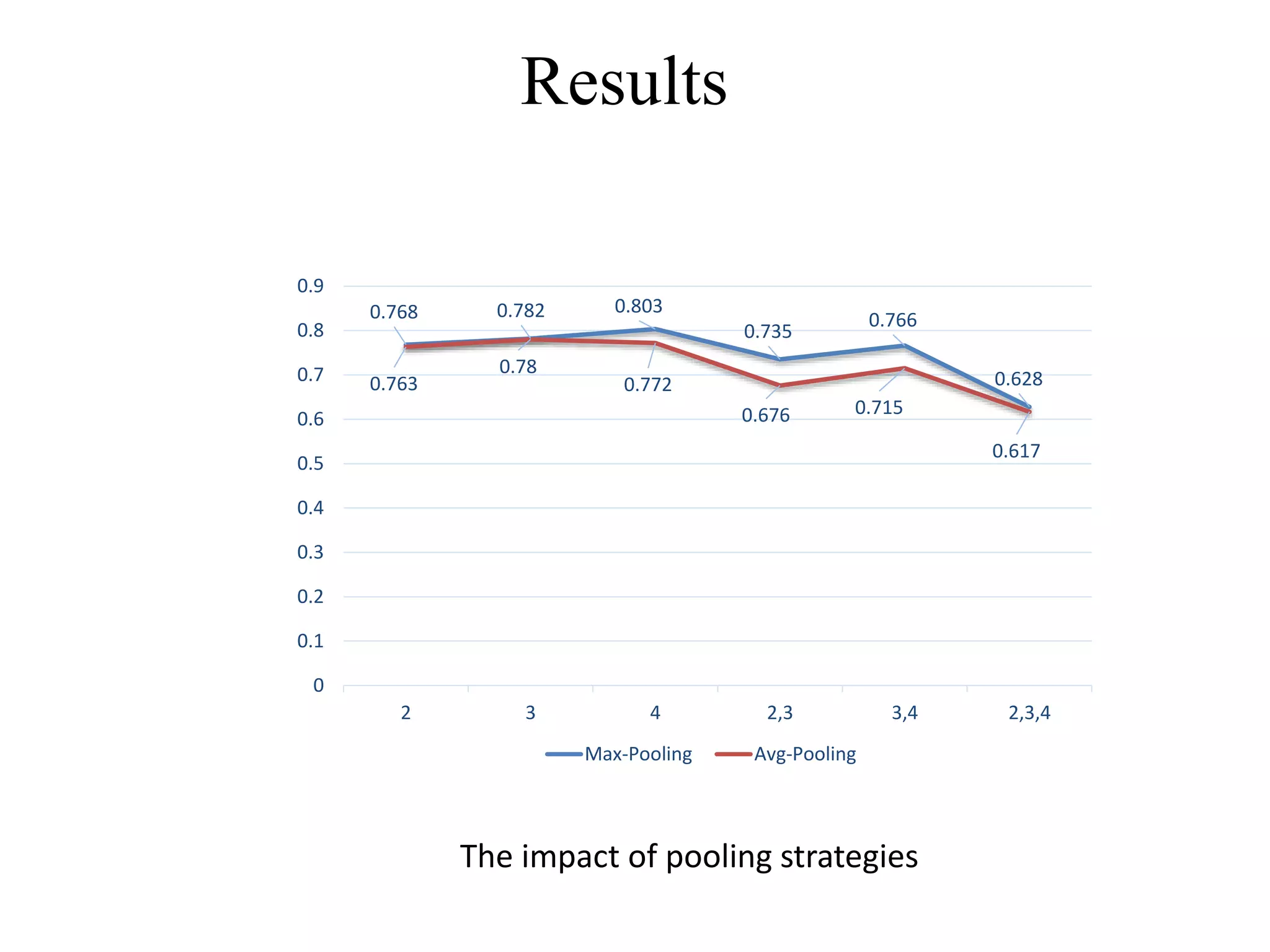

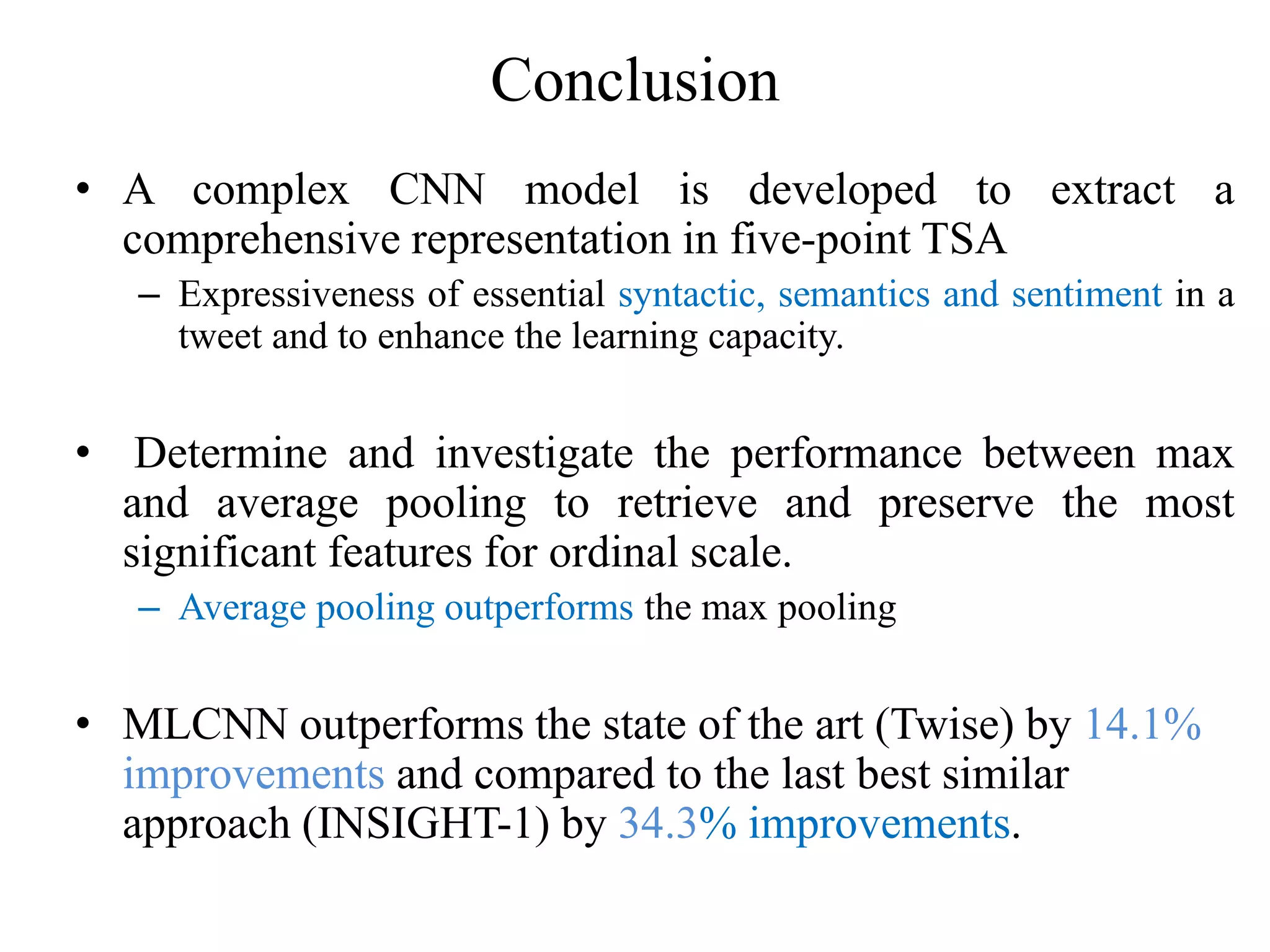

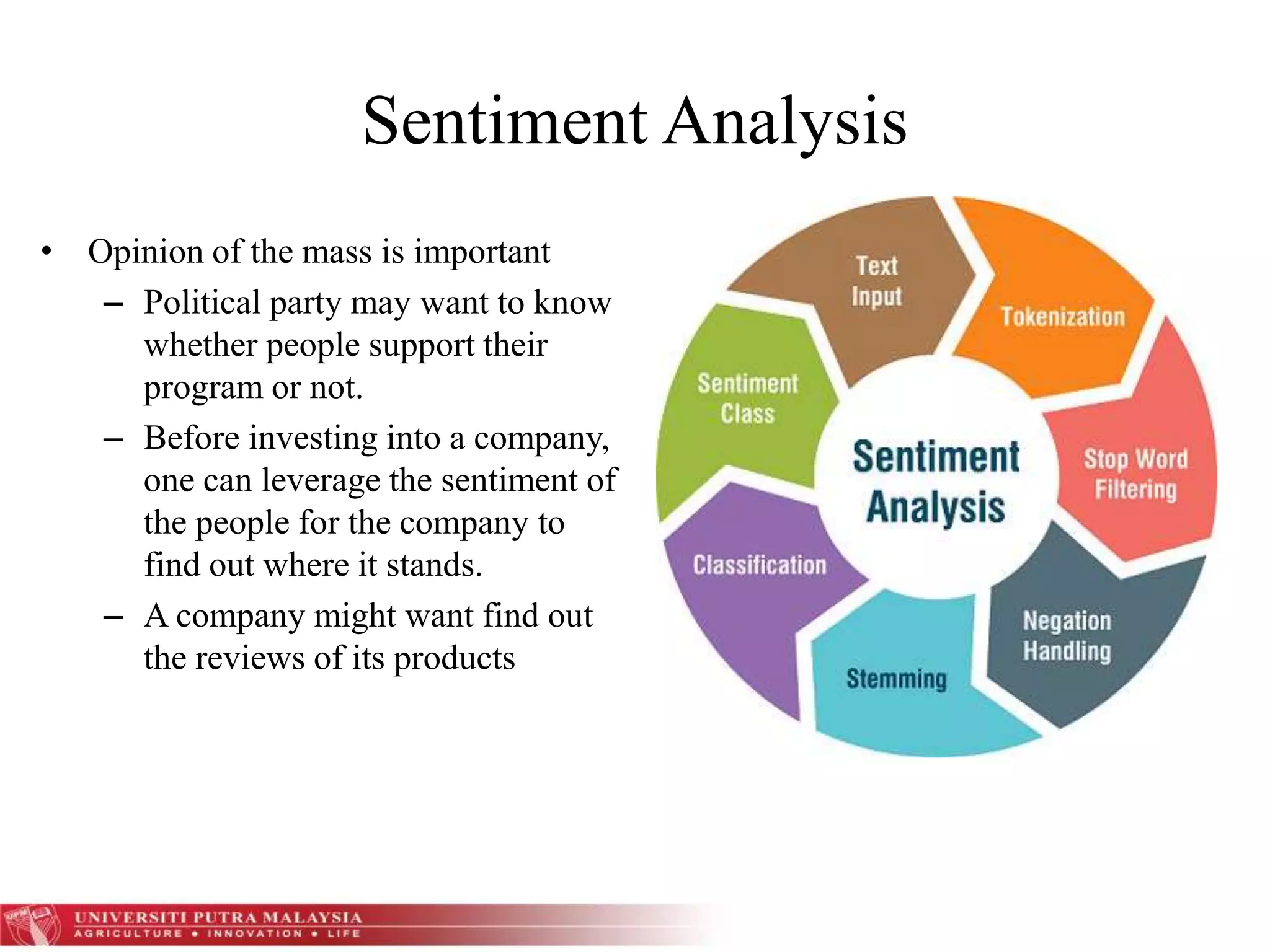

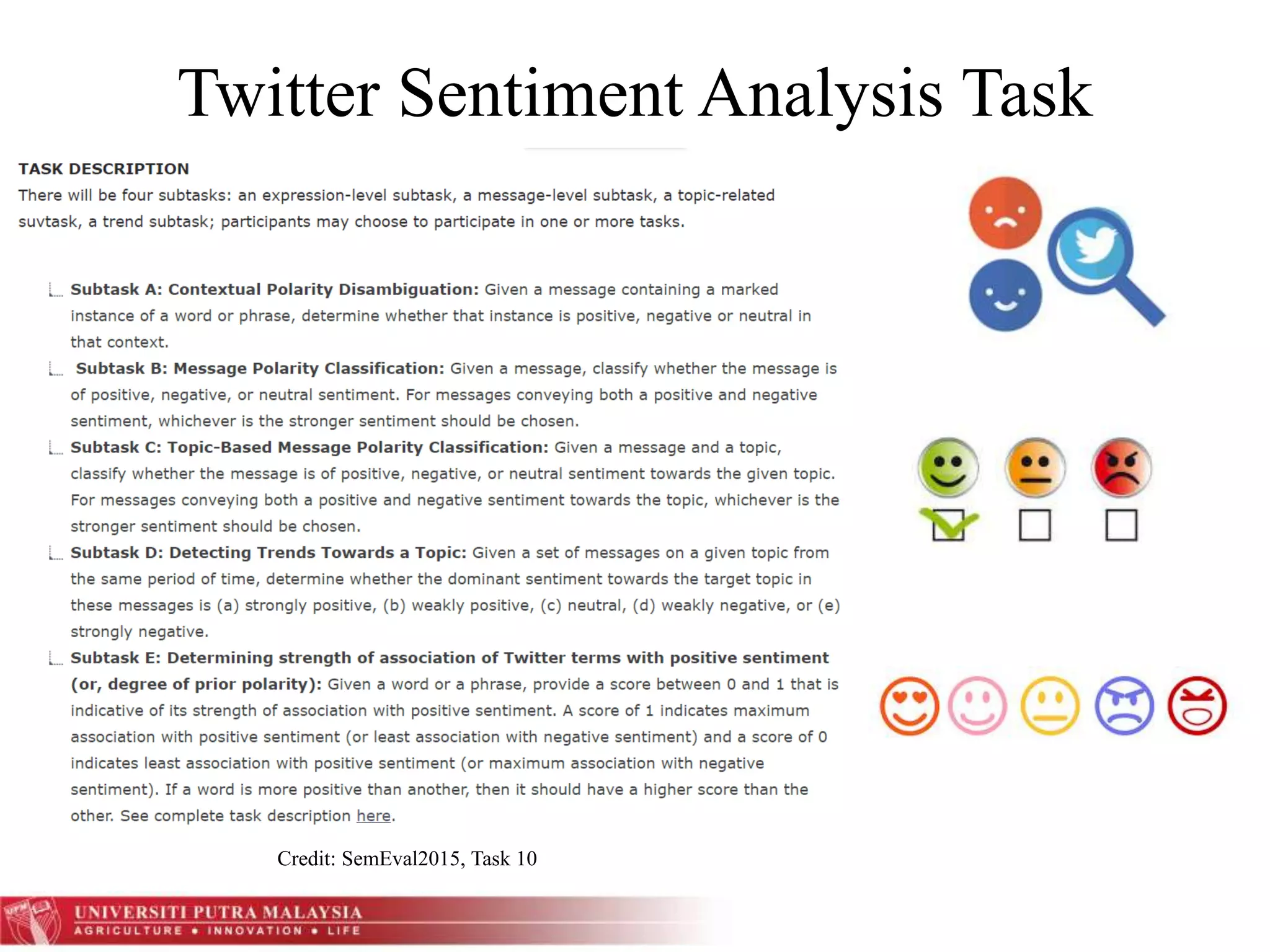

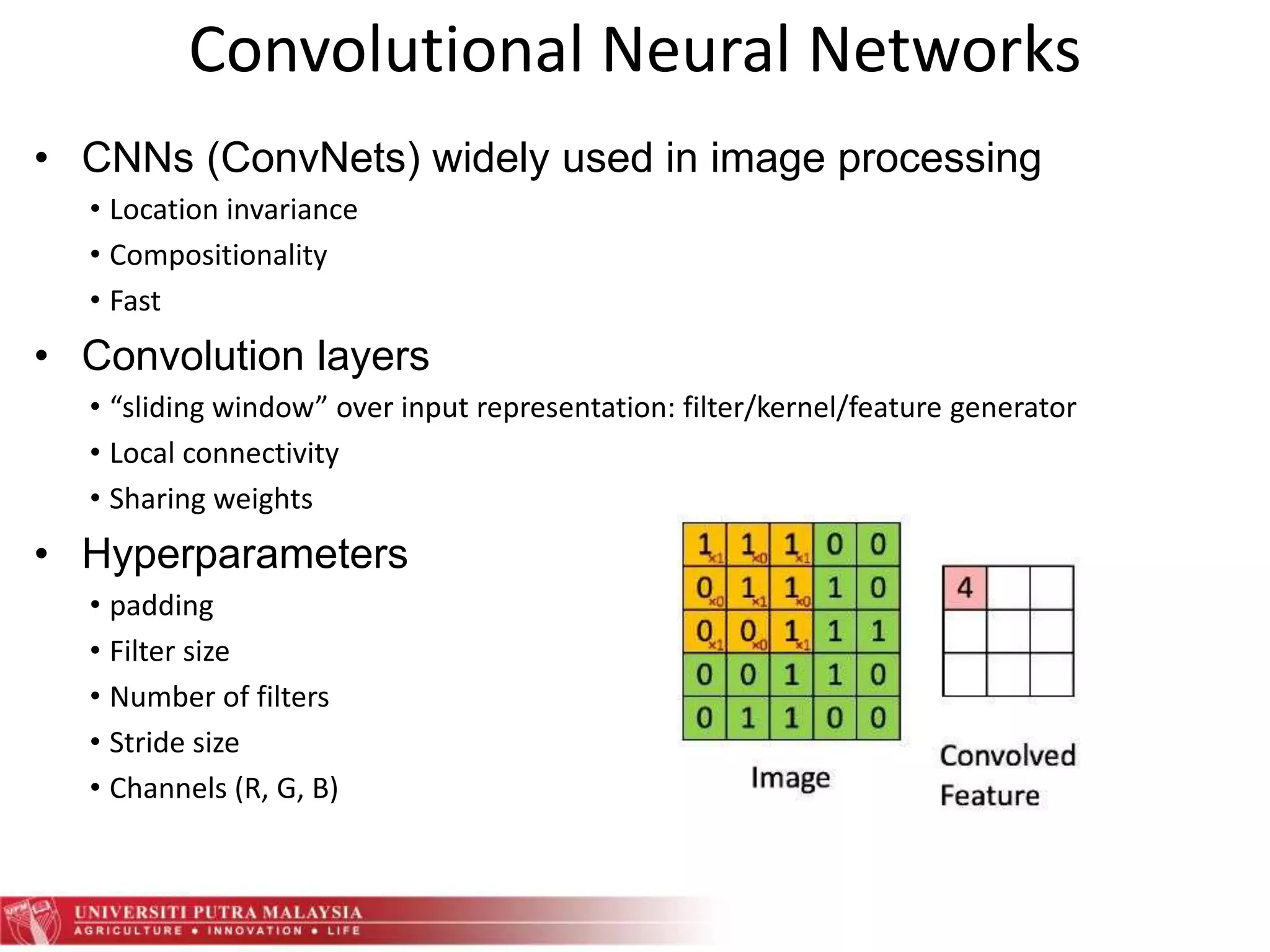

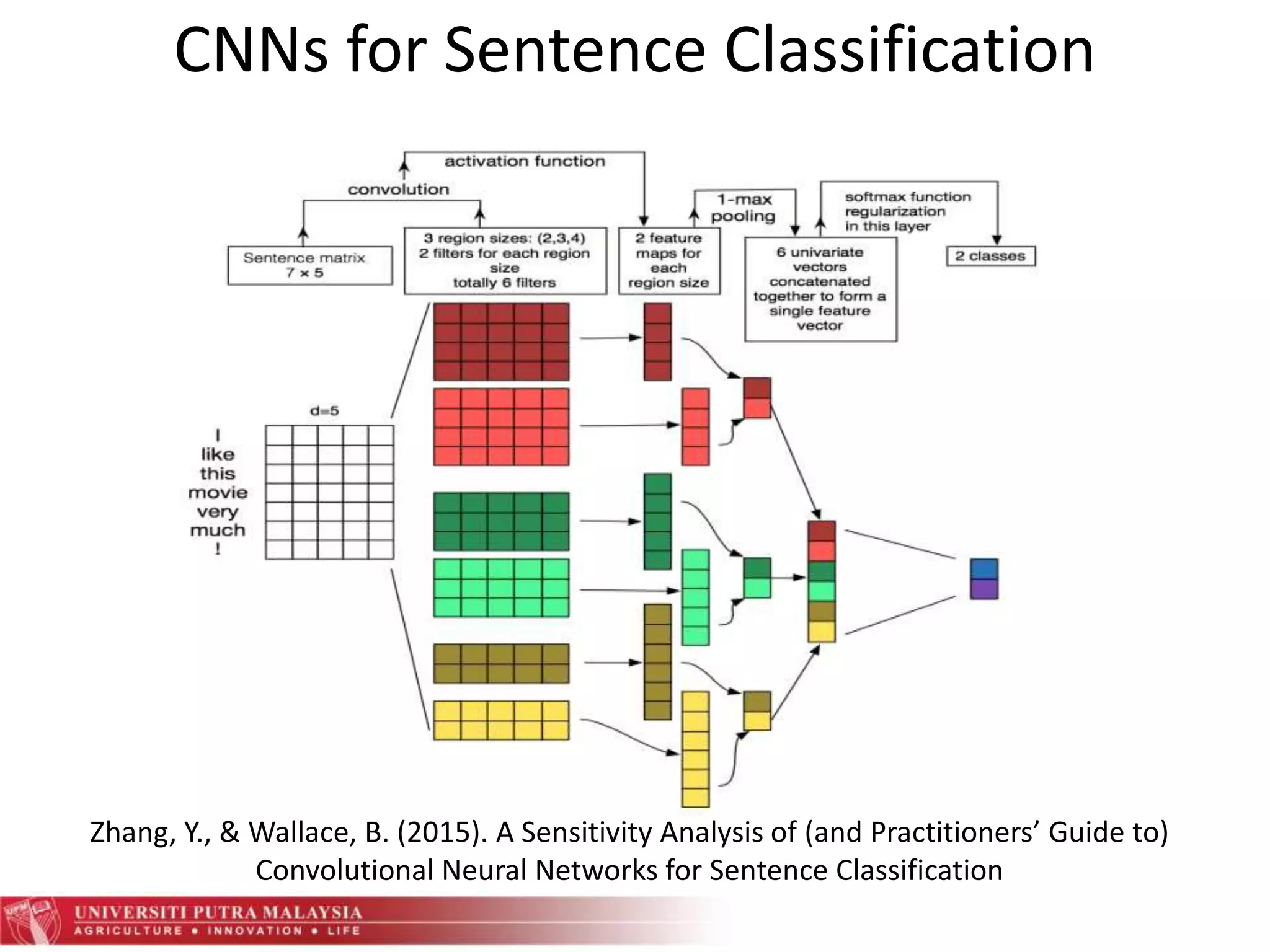

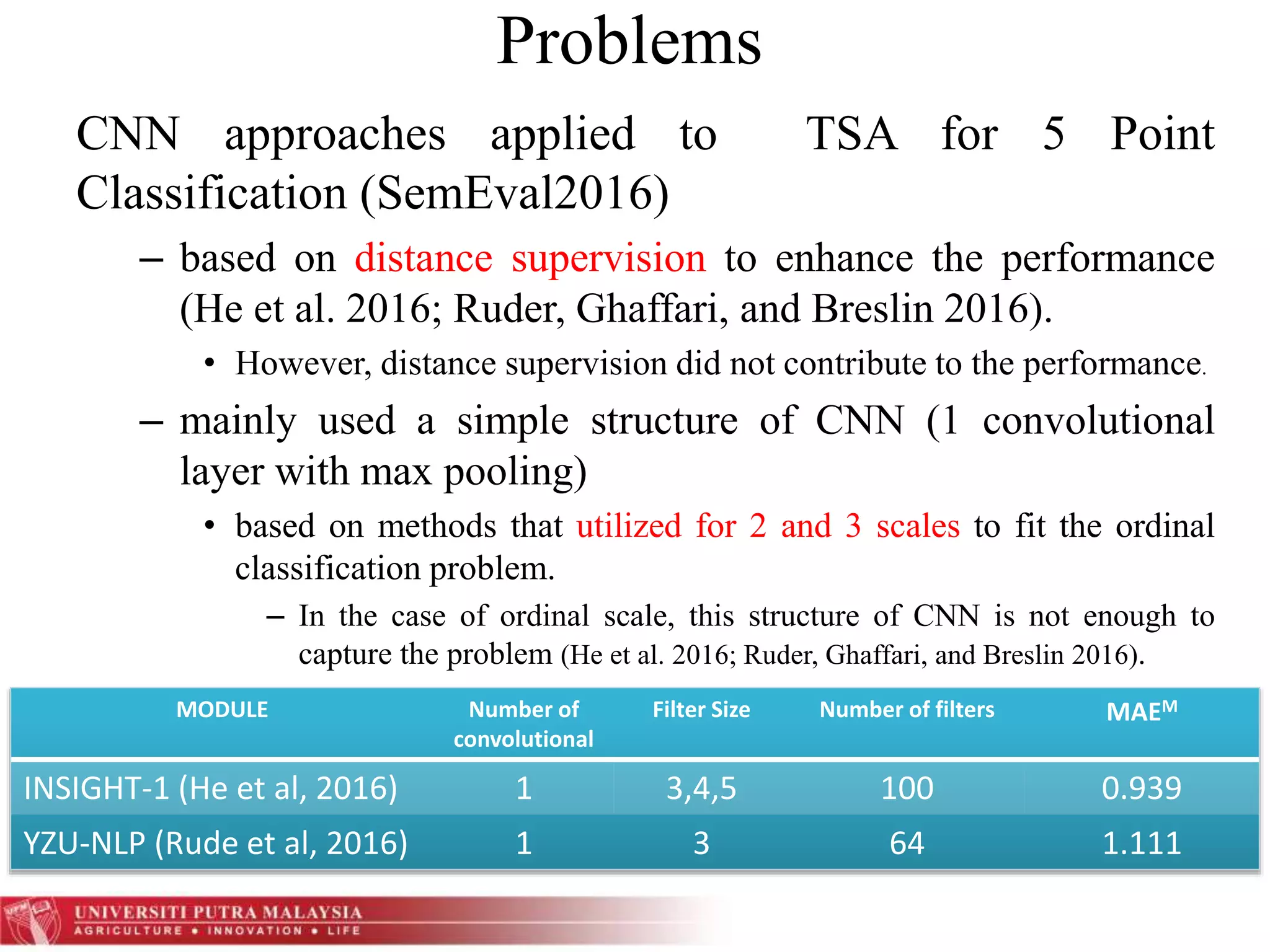

This document presents a multi-layer convolutional neural network (MLCNN) for classifying Twitter sentiment on a five-point ordinal scale (Highly Positive, Positive, Neutral, Negative, Highly Negative). The MLCNN combines several filter sizes with max or average pooling to capture the complexity of ordinal classification. On the SemEval 2016 Twitter sentiment dataset it outperforms previous state-of-the-art models, achieving a macro-averaged mean absolute error (MAE^M, where lower is better) of 0.617 with multiple filter sizes and average pooling. The model learns features and representations automatically from word embeddings, without extensive feature engineering.
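To make the evaluation measure concrete, here is a minimal sketch of macro-averaged MAE: the absolute error is averaged within each true class first, and those per-class averages are then averaged. The function name `macro_mae` and the integer encoding of the five labels as -2 to 2 are assumptions made for illustration. This is not the official SemEval scorer.

```python
from collections import defaultdict


def macro_mae(y_true, y_pred):
    """Macro-averaged MAE: mean over true classes of the per-class mean absolute error."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    errors_by_class = defaultdict(list)
    for truth, pred in zip(y_true, y_pred):
        errors_by_class[truth].append(abs(truth - pred))
    if not errors_by_class:
        raise ValueError("y_true is empty")
    per_class_mae = [sum(errs) / len(errs) for errs in errors_by_class.values()]
    return sum(per_class_mae) / len(per_class_mae)


# Assumed encoding: -2 = Highly Negative ... 2 = Highly Positive.
y_true = [-2, -1, 0, 0, 0, 0, 1, 2]
y_pred = [0, -1, 0, 0, 0, 0, 1, 0]  # always wrong on the two rare extreme classes

print(macro_mae(y_true, y_pred))  # 0.8
print(sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true))  # plain MAE: 0.5
```

Because every class contributes equally, a model that defaults to the frequent Neutral class is penalized more under the macro average (0.8) than under plain MAE (0.5) in this toy example.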

![The Proposed MLCNN](https://image.slidesharecdn.com/scdmcnntsapresentation-180207025245/75/Multi-layers-Convolutional-Neural-Network-for-Tweet-Sentiment-Classification-8-2048.jpg)

The Proposed MLCNN

- Dataset: provided by SemEval 2016 [8]; divided into training, development, development-test, and test sets.
  - Five-point scale (Highly Positive, Positive, Neutral, Negative, Highly Negative).
- MLCNN model
  - Trained on word embeddings: we used the publicly available GloVe embeddings [9], pre-trained on 2B tweets, to initialize our word embeddings with 200-dimensional GloVe vectors.
  - Maximum tweet length of 50; embedding size of 200.
  - Applied filter sizes 2, 3, and 4 separately and in combination, each with a different pooling technique (max and average).
  - Trained using the Adam optimizer for 10 epochs with a batch size of 100.
  - Implemented using the Keras library on a Theano backend.
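The filter-and-pooling step described above can be sketched in plain Python. This is an illustration of the technique, not the authors' Keras implementation: the function names are hypothetical, the toy tweet has 5 tokens with 2-dimensional embeddings (the slide uses 50 tokens and 200 dimensions), each width has a single filter with all weights set to 1.0, and the nonlinearity and classifier layers are omitted.

```python
def conv1d(embeddings, kernel):
    """Slide one filter of width len(kernel) over the token sequence (no padding)."""
    width = len(kernel)
    feature_map = []
    for start in range(len(embeddings) - width + 1):
        window = embeddings[start:start + width]
        # Dot product between the filter and the window of token vectors.
        feature_map.append(sum(
            w * x
            for kernel_row, token_vec in zip(kernel, window)
            for w, x in zip(kernel_row, token_vec)
        ))
    return feature_map


def max_pool(feature_map):
    """Max-over-time pooling: keep the strongest response."""
    return max(feature_map)


def avg_pool(feature_map):
    """Average-over-time pooling: keep the mean response."""
    return sum(feature_map) / len(feature_map)


def extract_features(embeddings, kernels, pool):
    """Apply each filter, pool its feature map, and concatenate the results."""
    return [pool(conv1d(embeddings, kernel)) for kernel in kernels]


# Toy input: 5 tokens, embedding size 2 (assumed values for illustration).
tweet = [[1.0, 0.0], [0.0, 1.0], [2.0, 1.0], [0.0, 0.0], [1.0, 1.0]]

# One hypothetical all-ones filter for each width 2, 3, and 4.
kernels = [[[1.0, 1.0]] * width for width in (2, 3, 4)]

print(extract_features(tweet, kernels, max_pool))  # [4.0, 5.0, 6.0]
print(extract_features(tweet, kernels, avg_pool))
```

Each filter width yields a feature map of a different length (4, 3, and 2 positions here), and pooling reduces each map to a single value so the outputs of all widths can be concatenated into one fixed-size vector.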