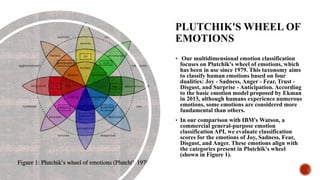

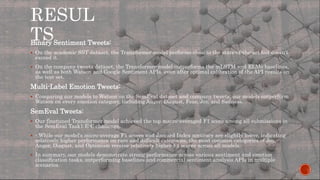

The document summarizes research on using large pre-trained language models for practical text classification tasks. The authors pre-trained Transformer and mLSTM language models on a large text dataset, then fine-tuned them for sentiment analysis and multi-dimensional emotion classification. The fine-tuned models achieved state-of-the-art or competitive performance on various datasets and outperformed commercial APIs. The analysis highlighted the importance of dataset size and context specificity, as well as the benefits of combining unsupervised pre-training with fine-tuning for challenging classification problems.