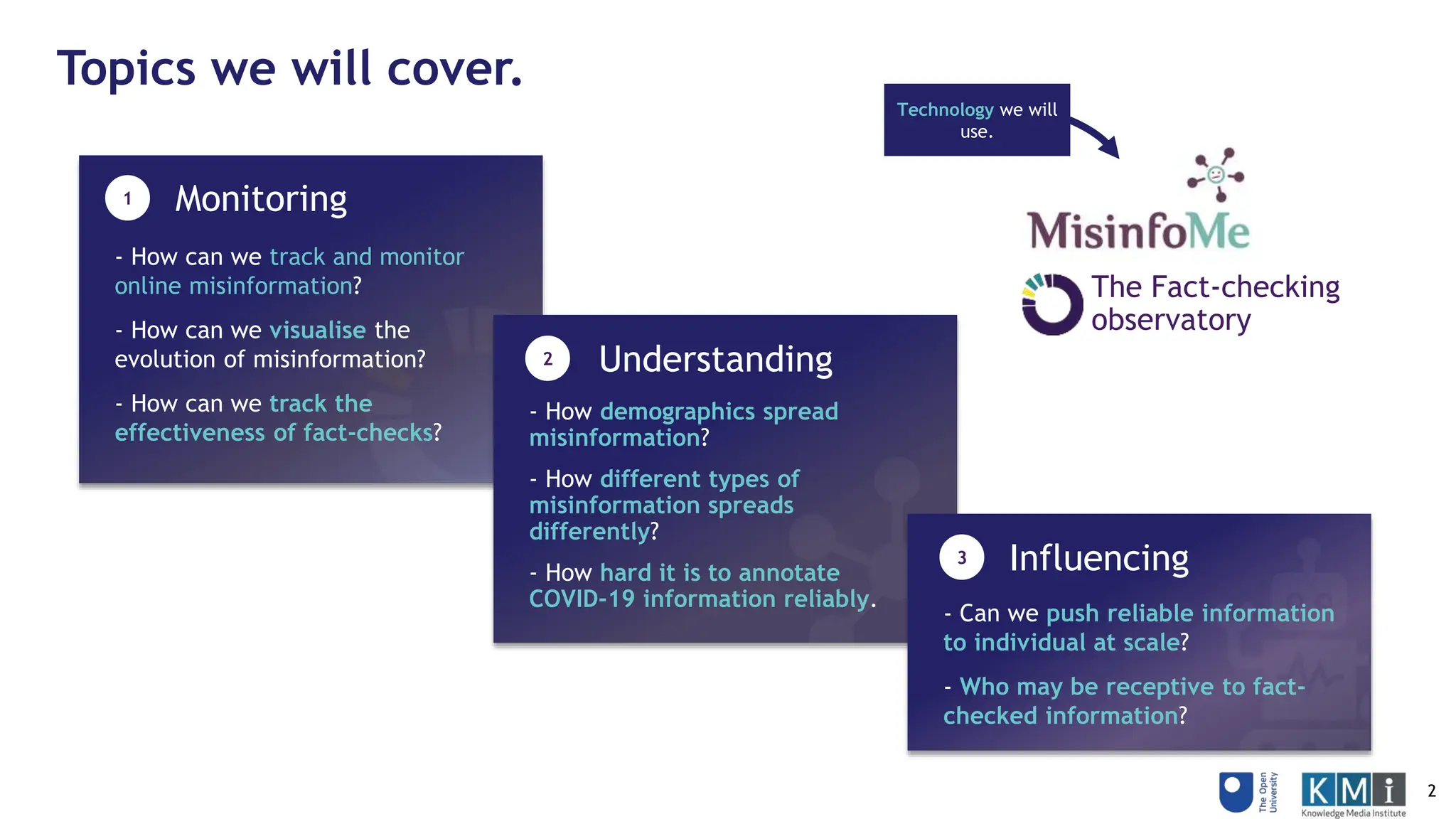

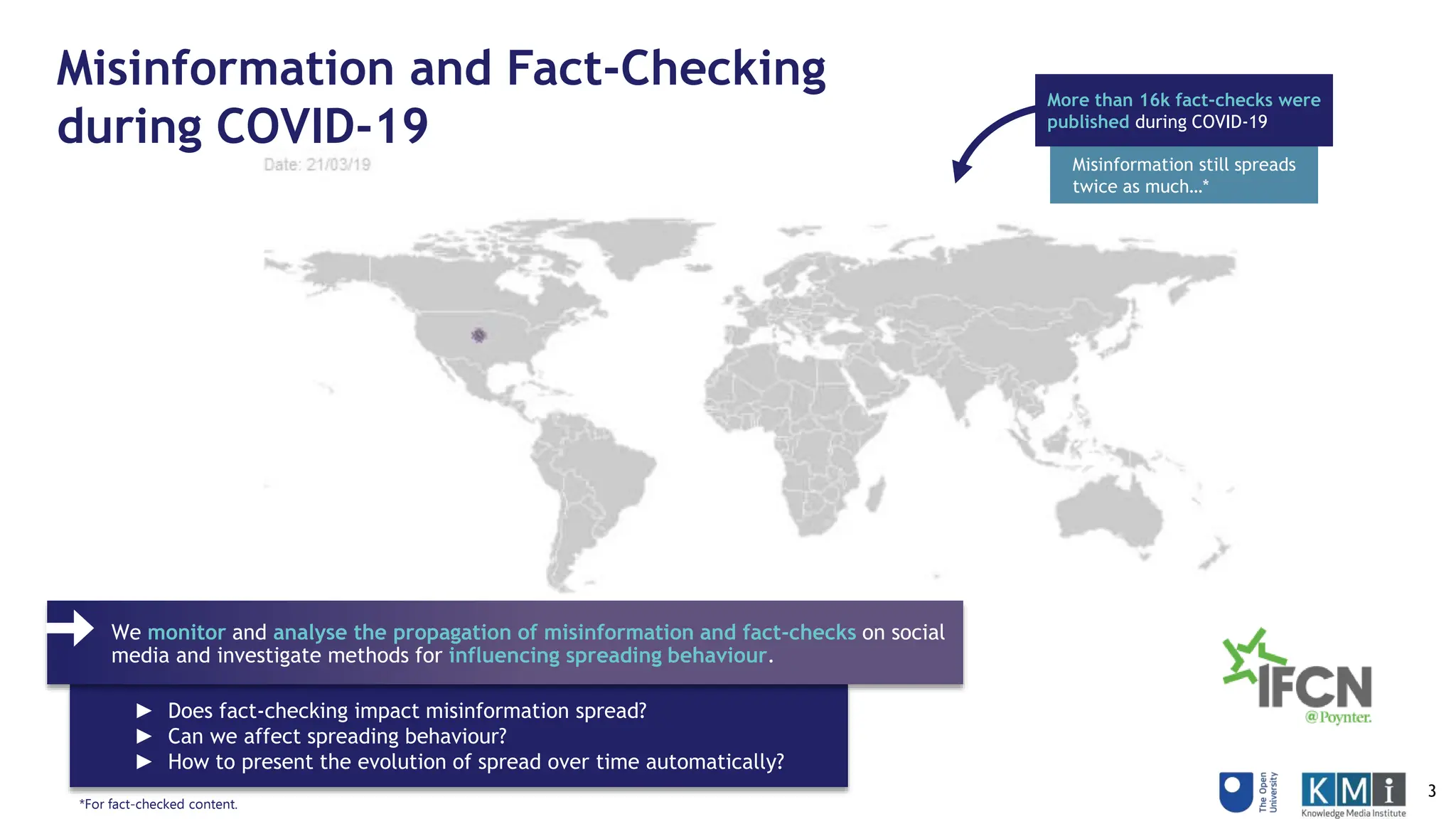

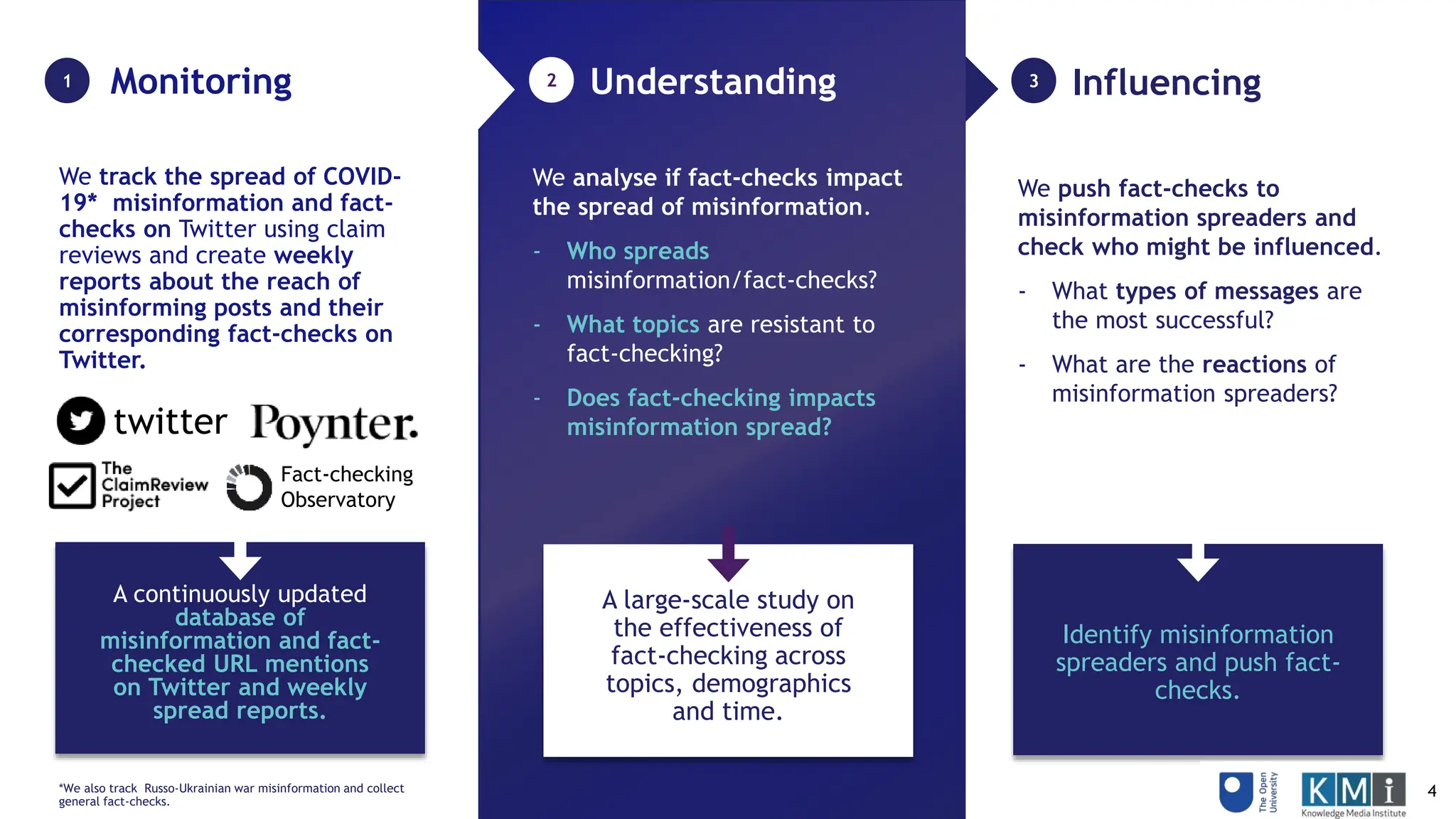

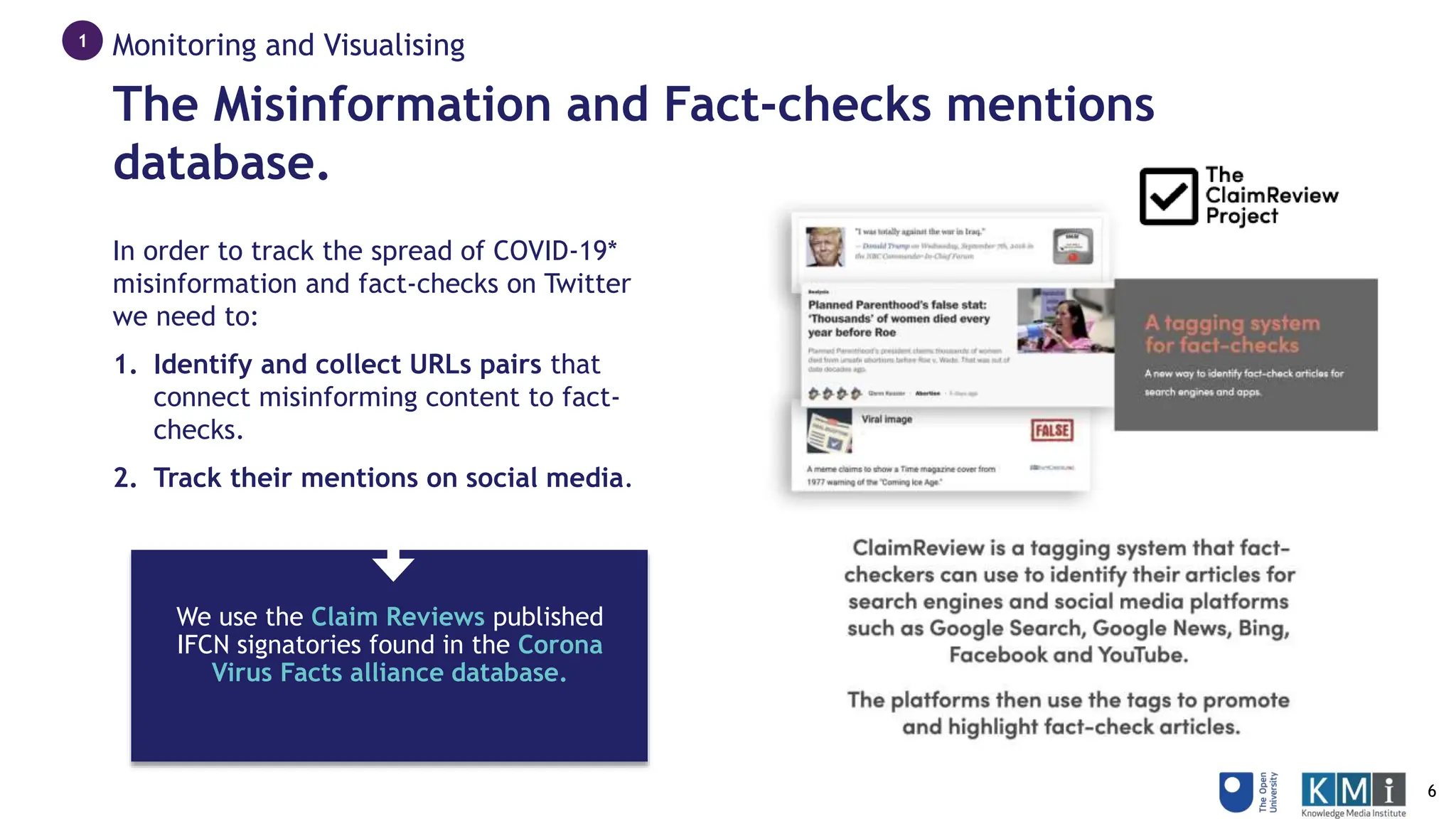

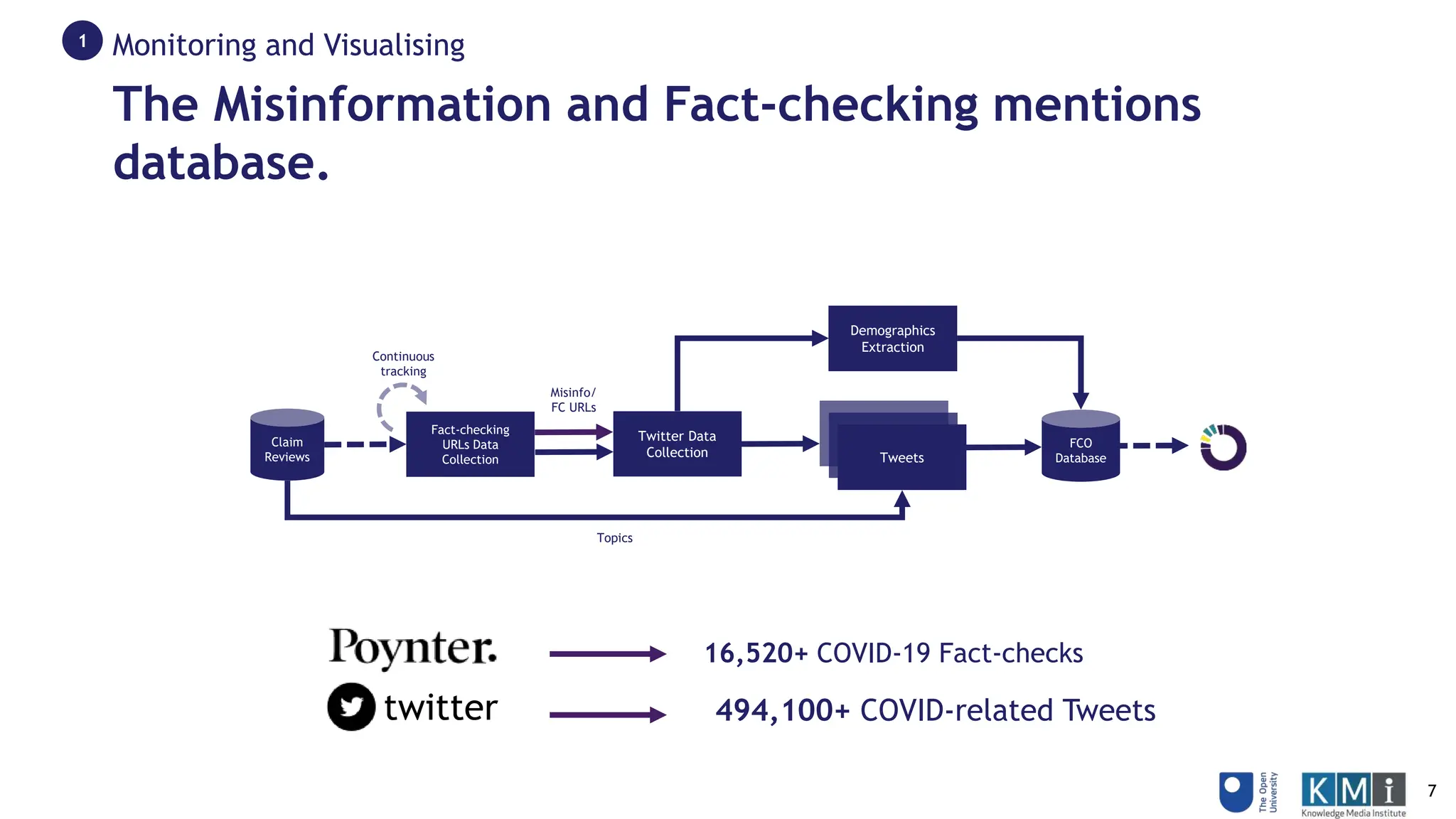

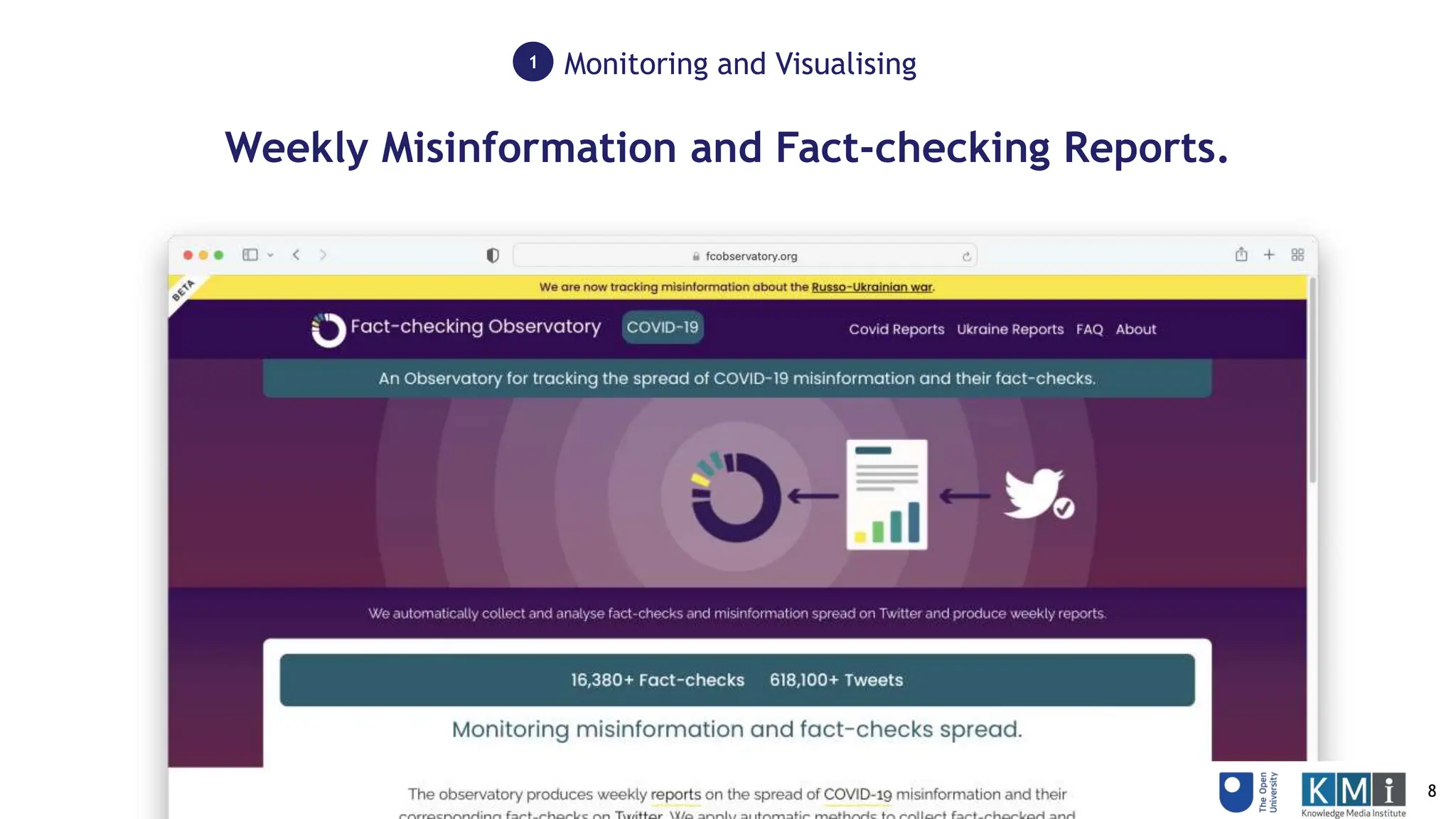

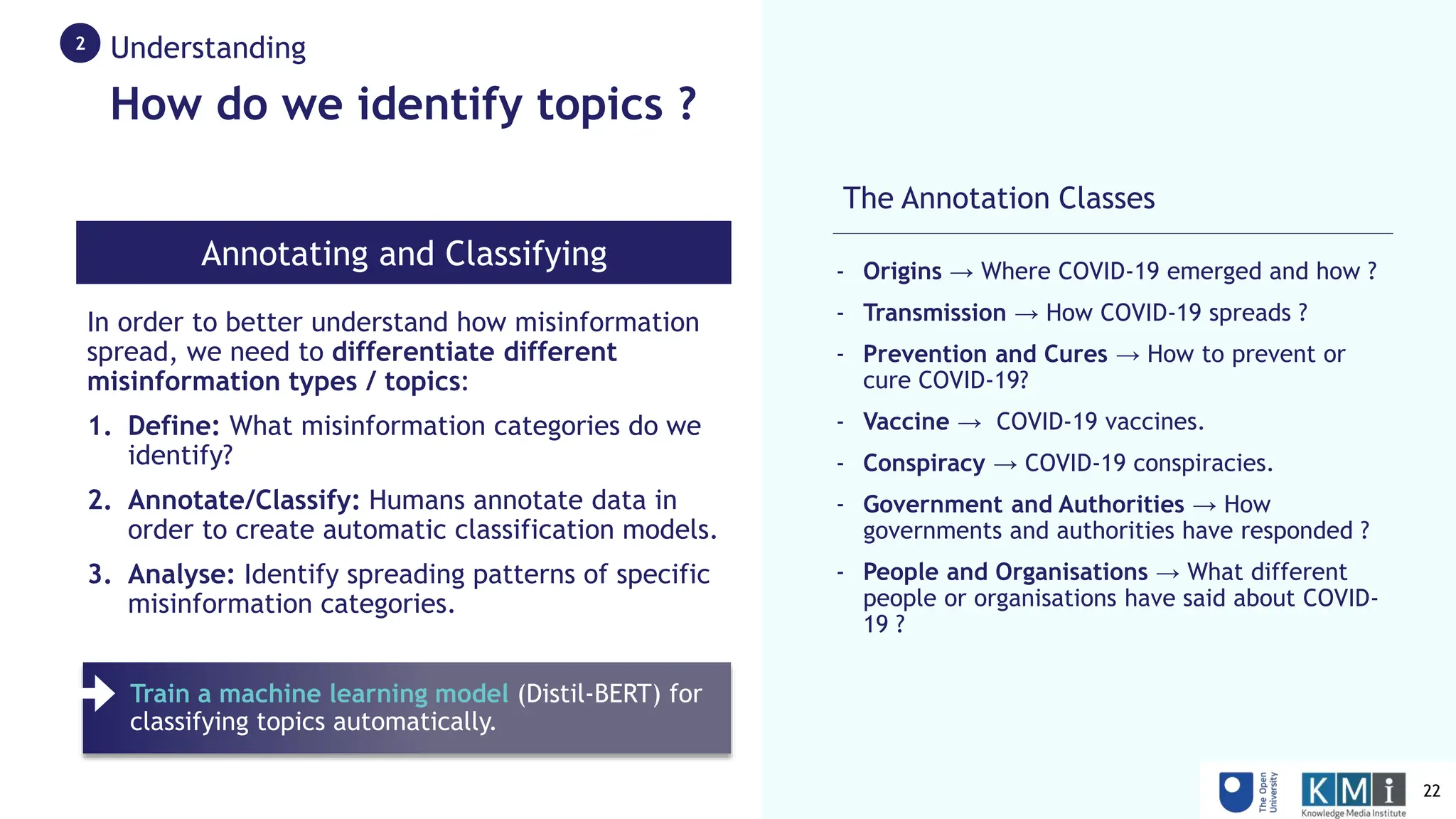

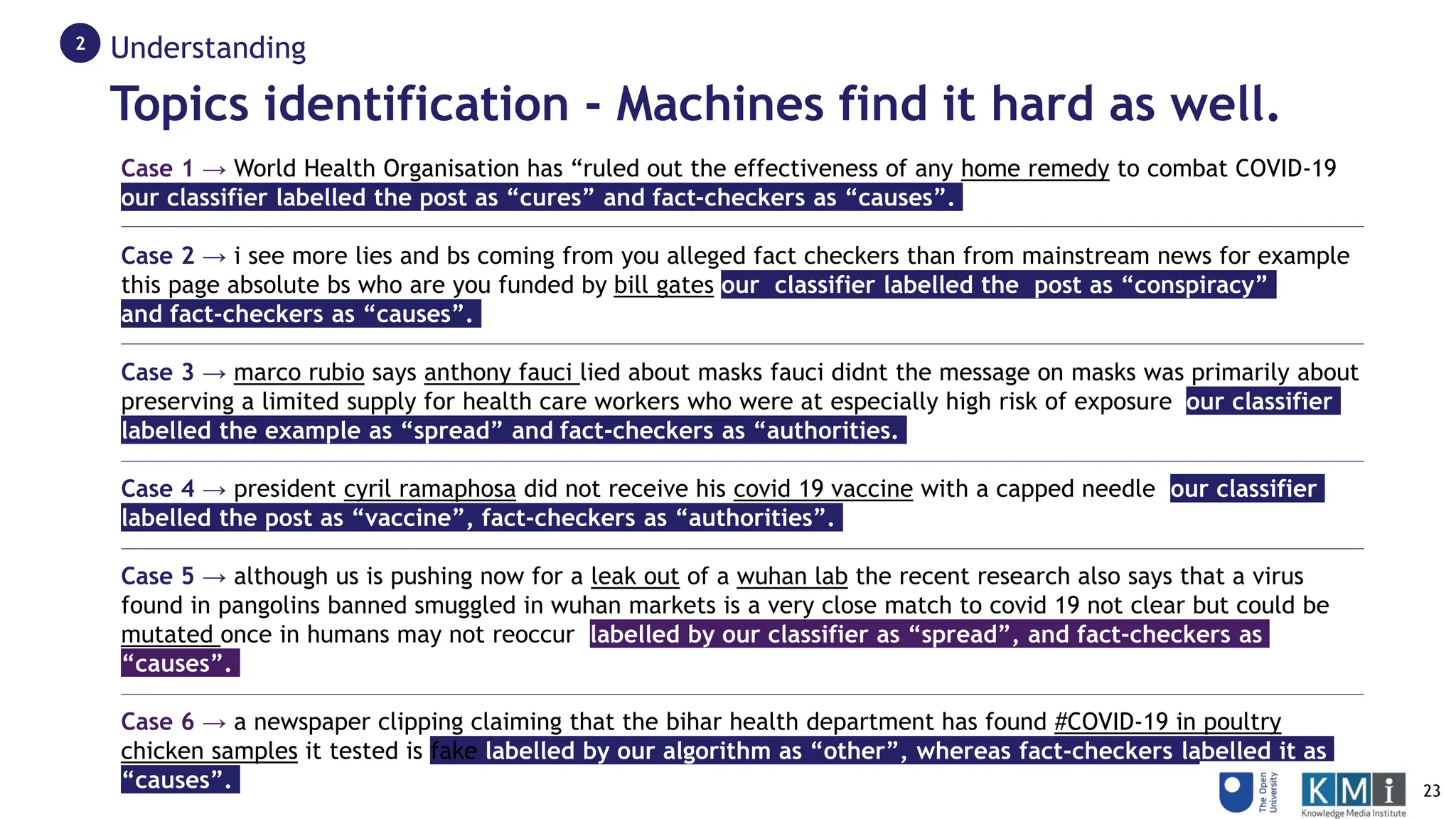

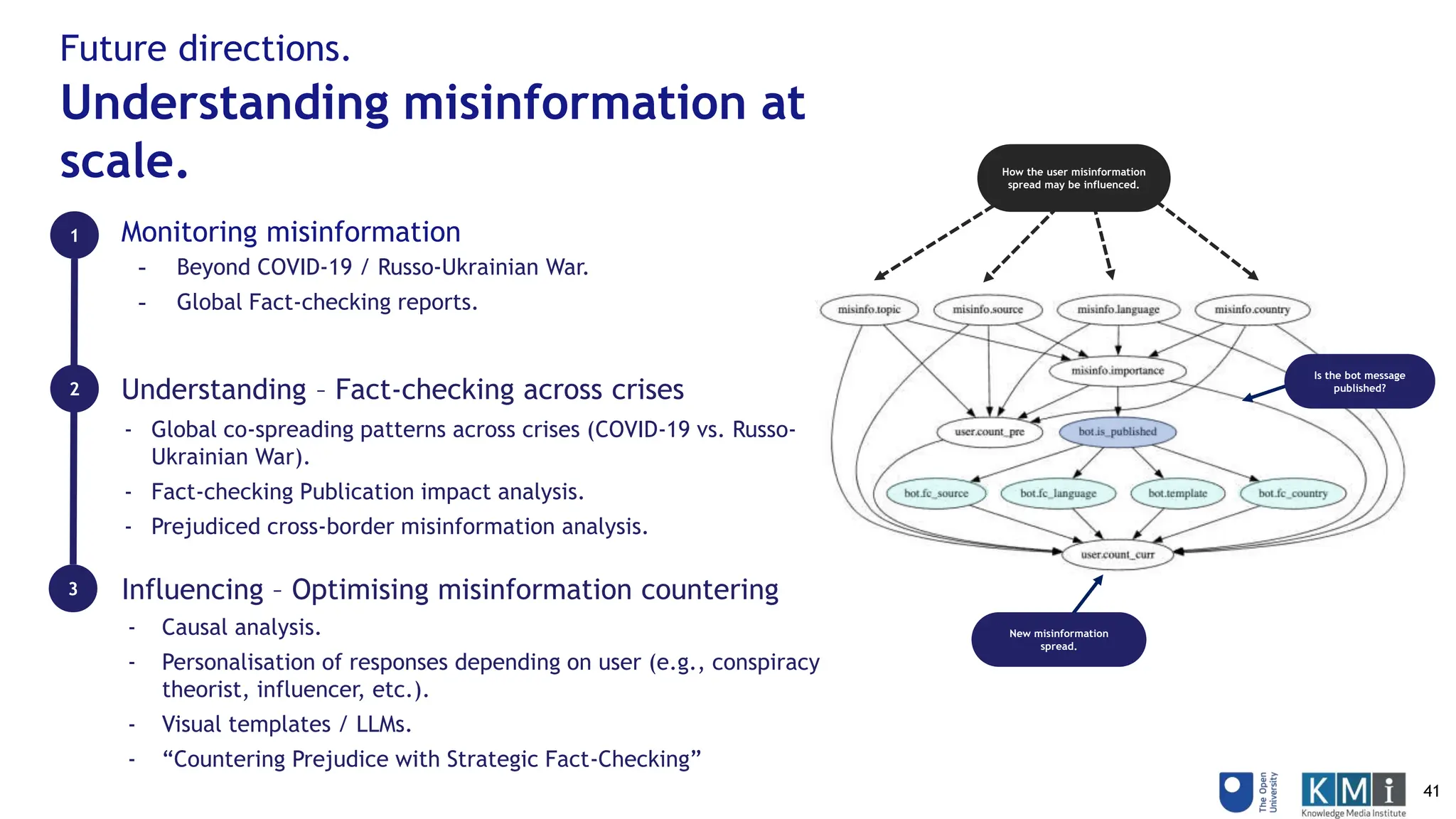

The document discusses monitoring, understanding, and influencing the spread of COVID-19 misinformation and fact-checks online. It describes:

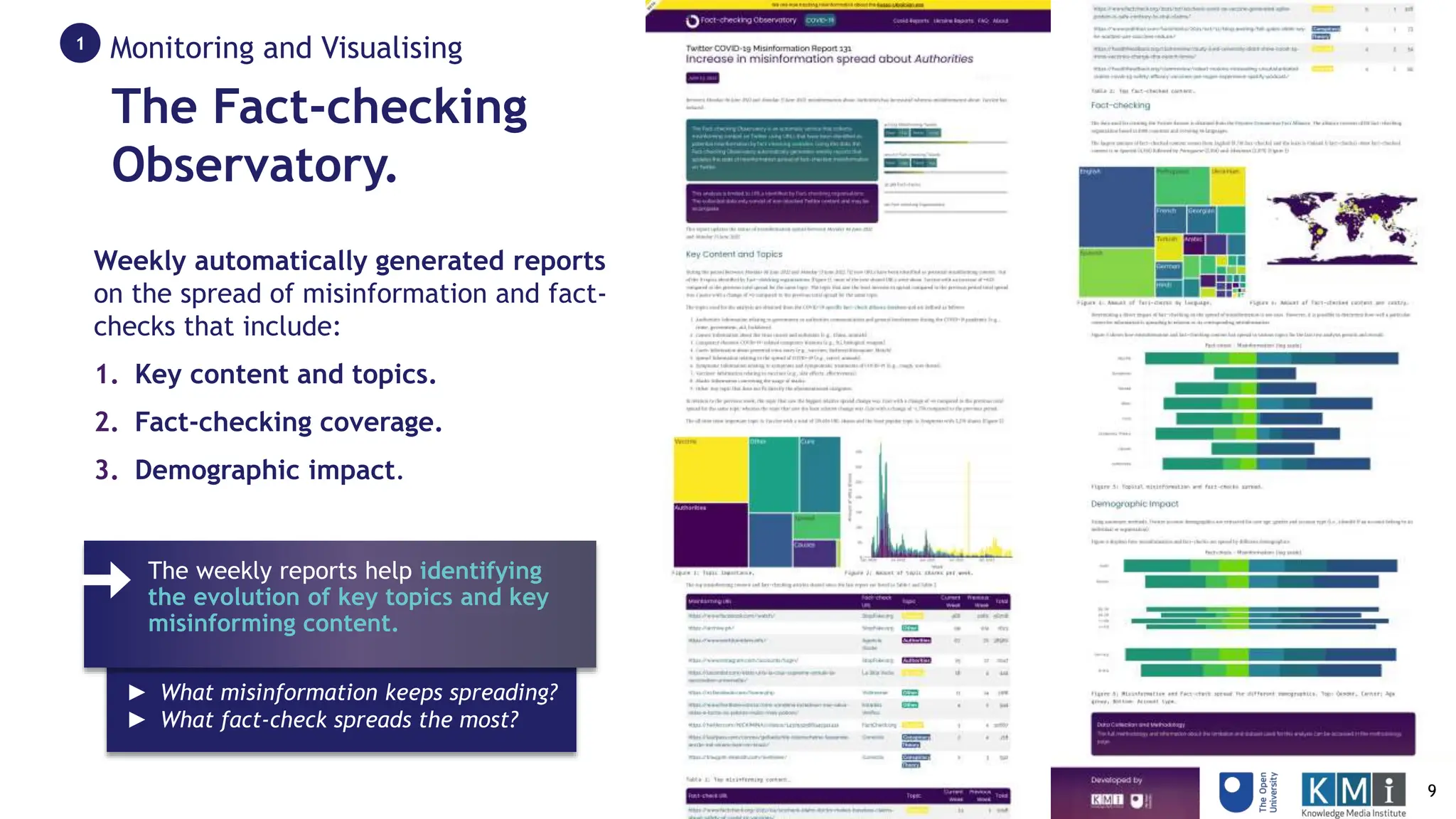

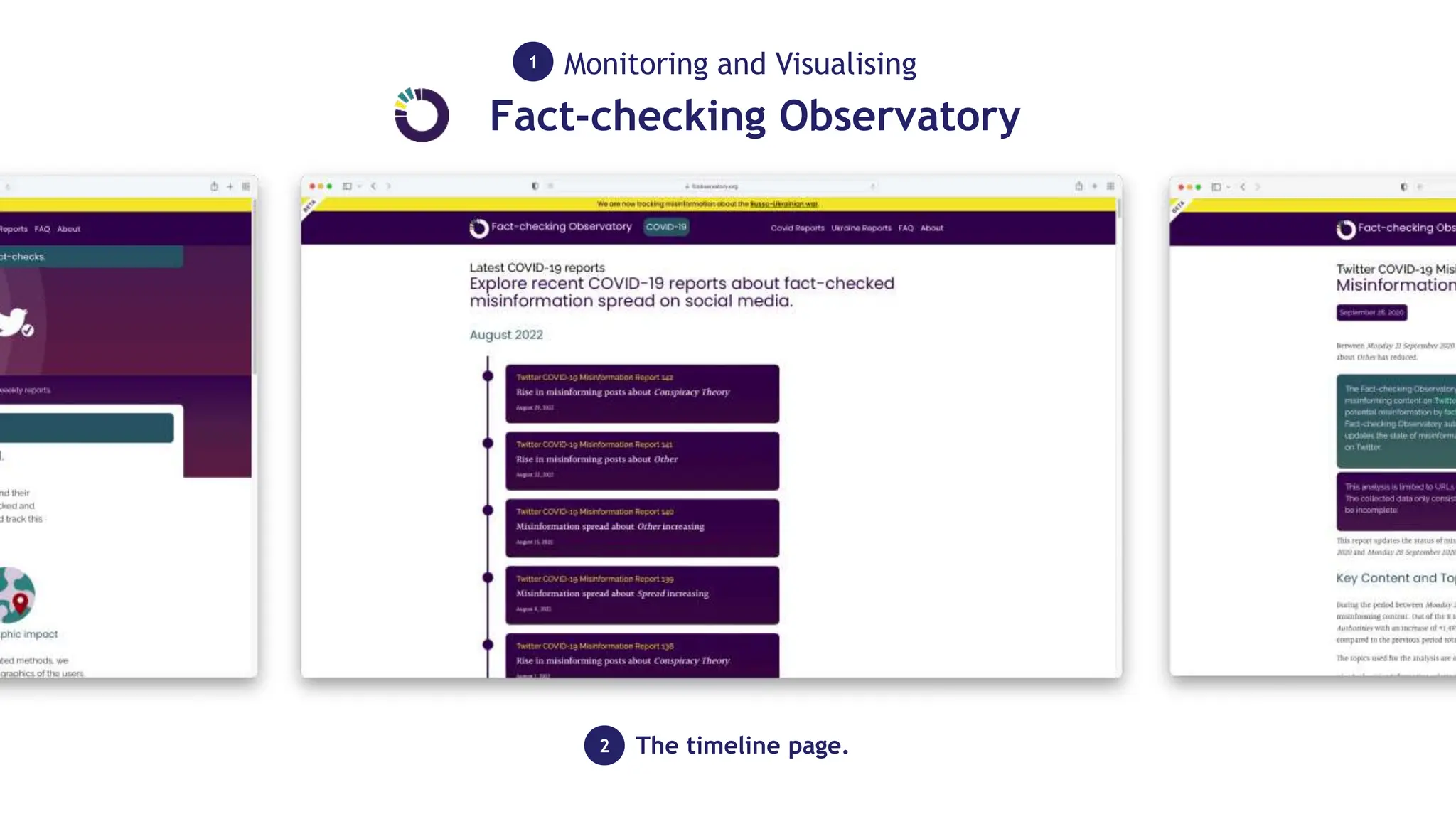

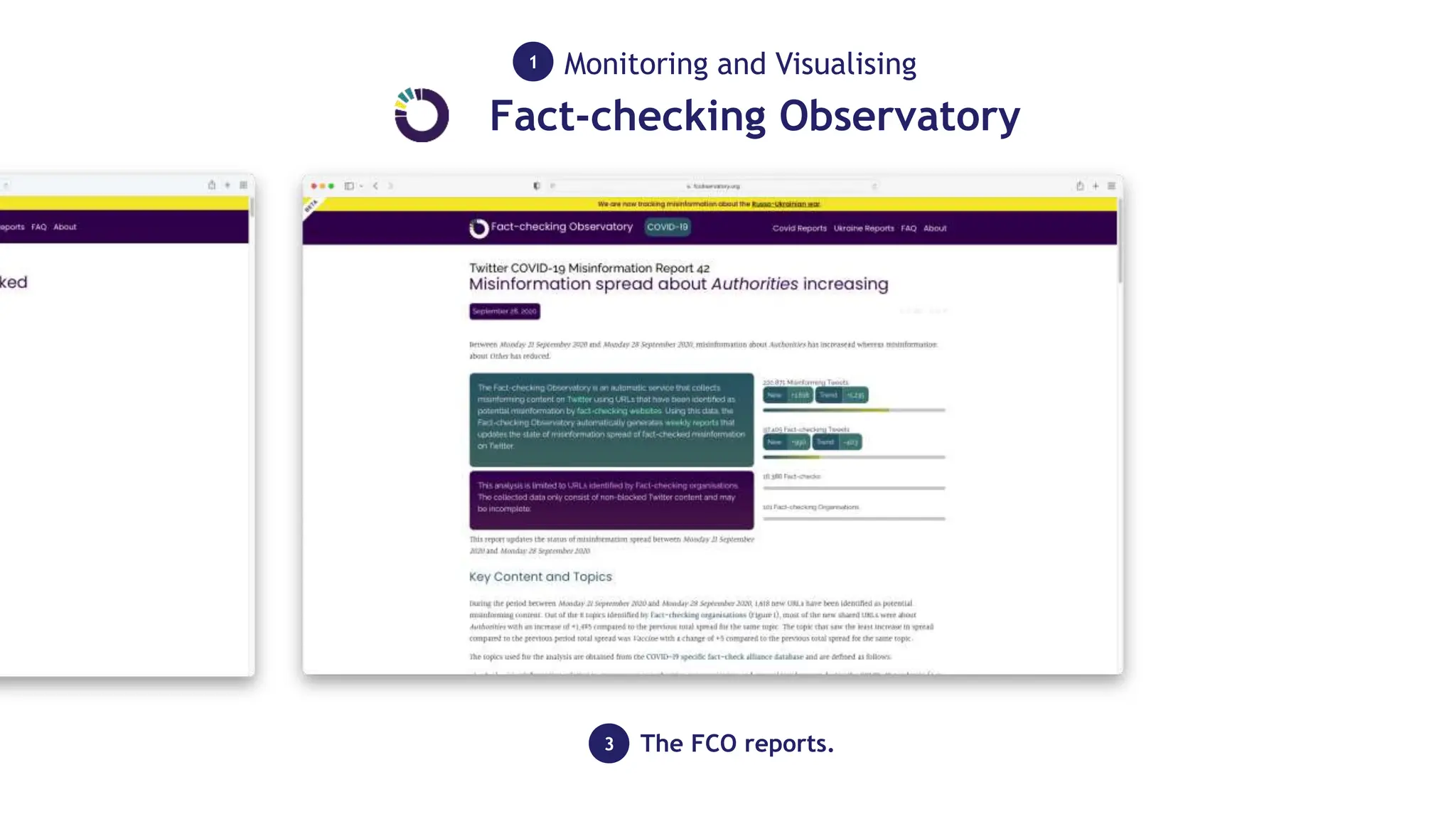

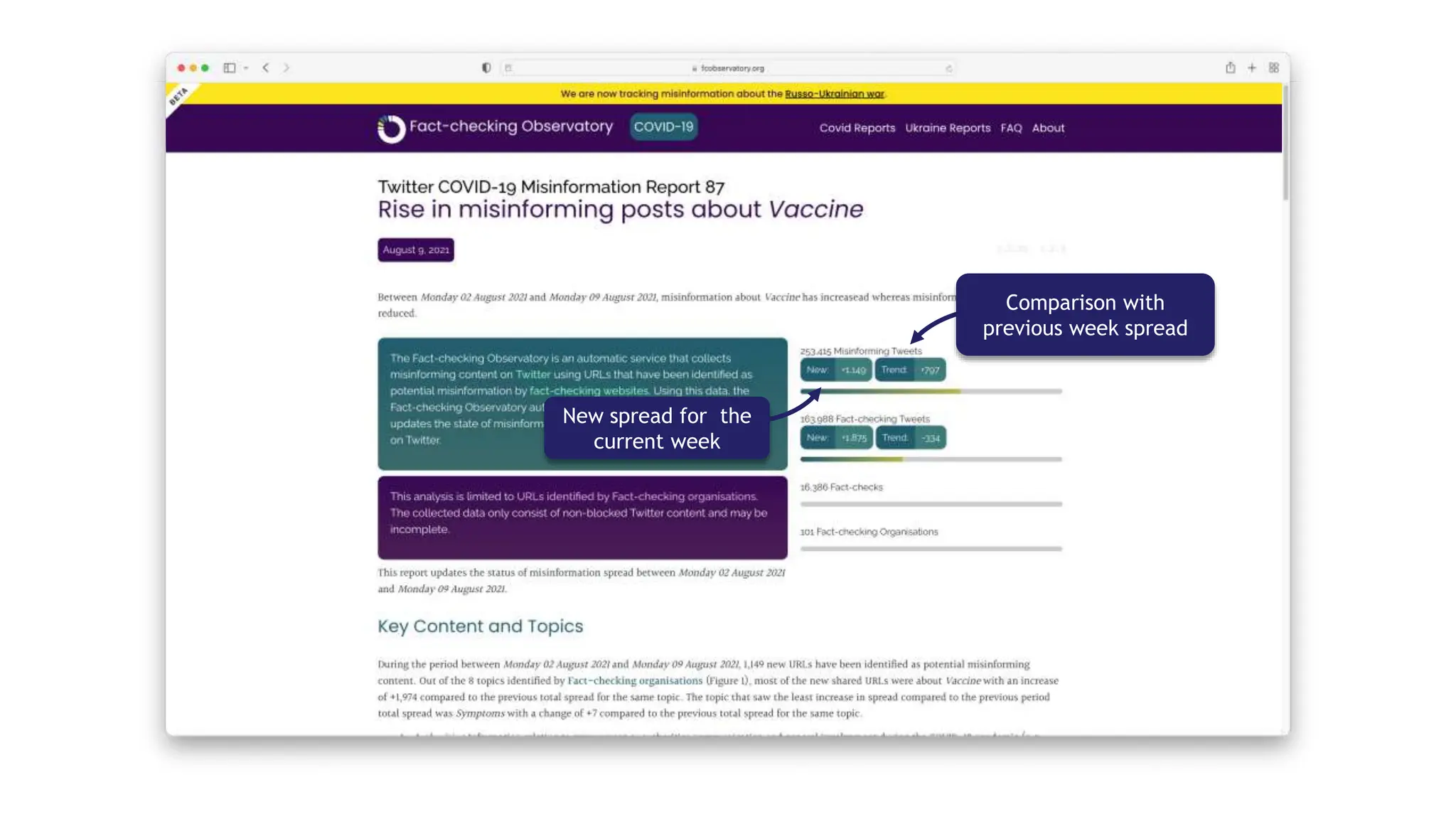

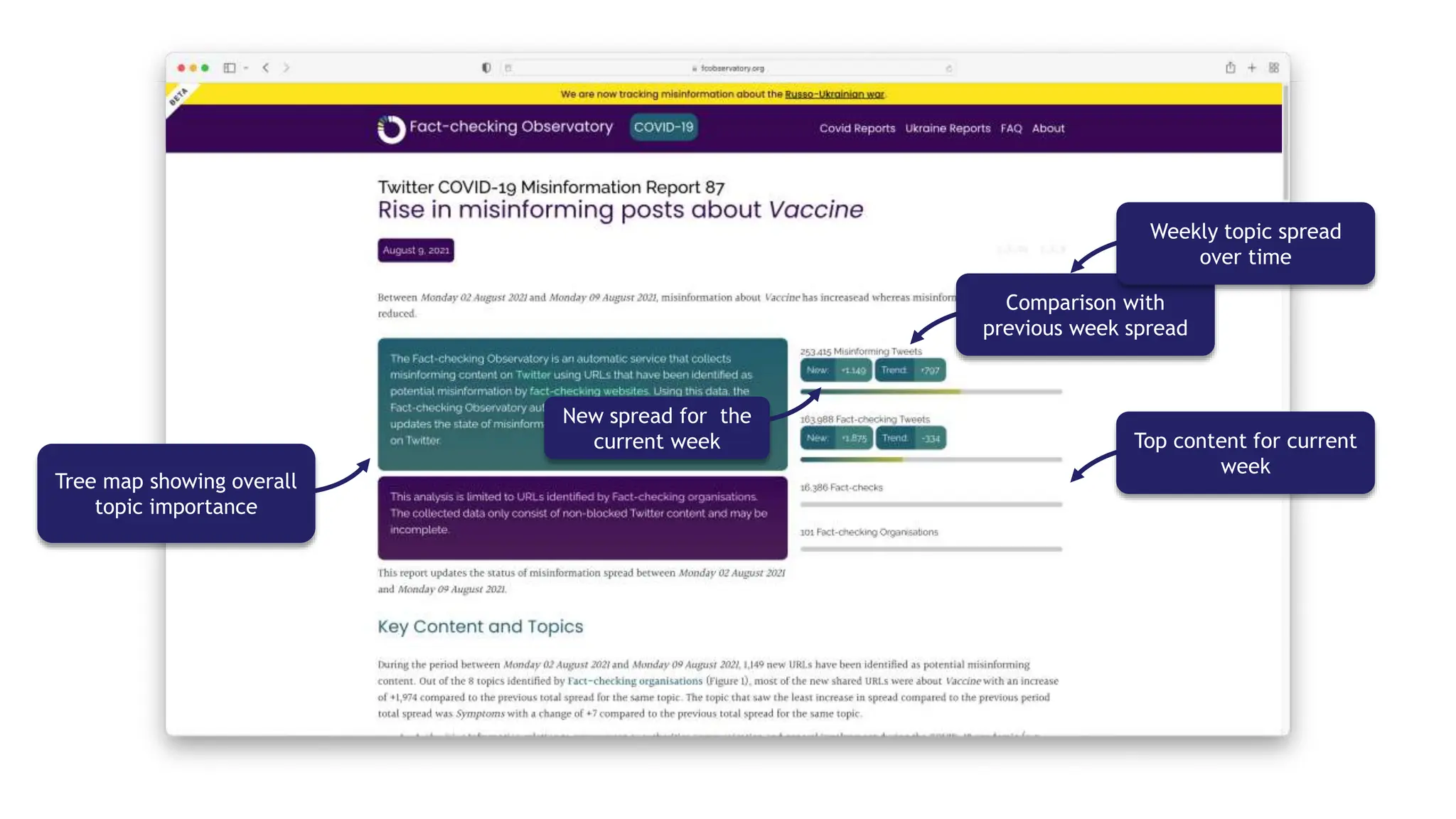

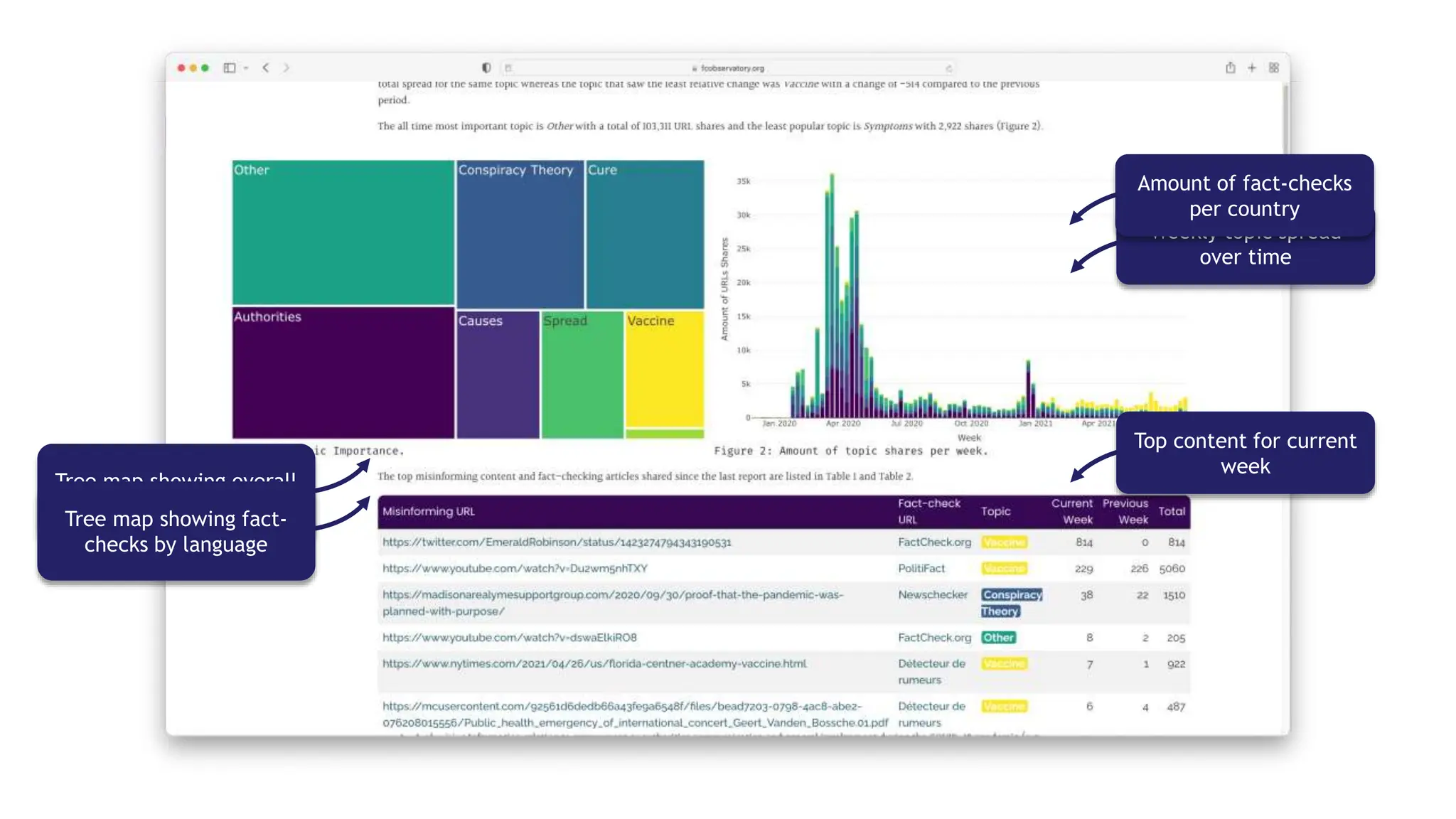

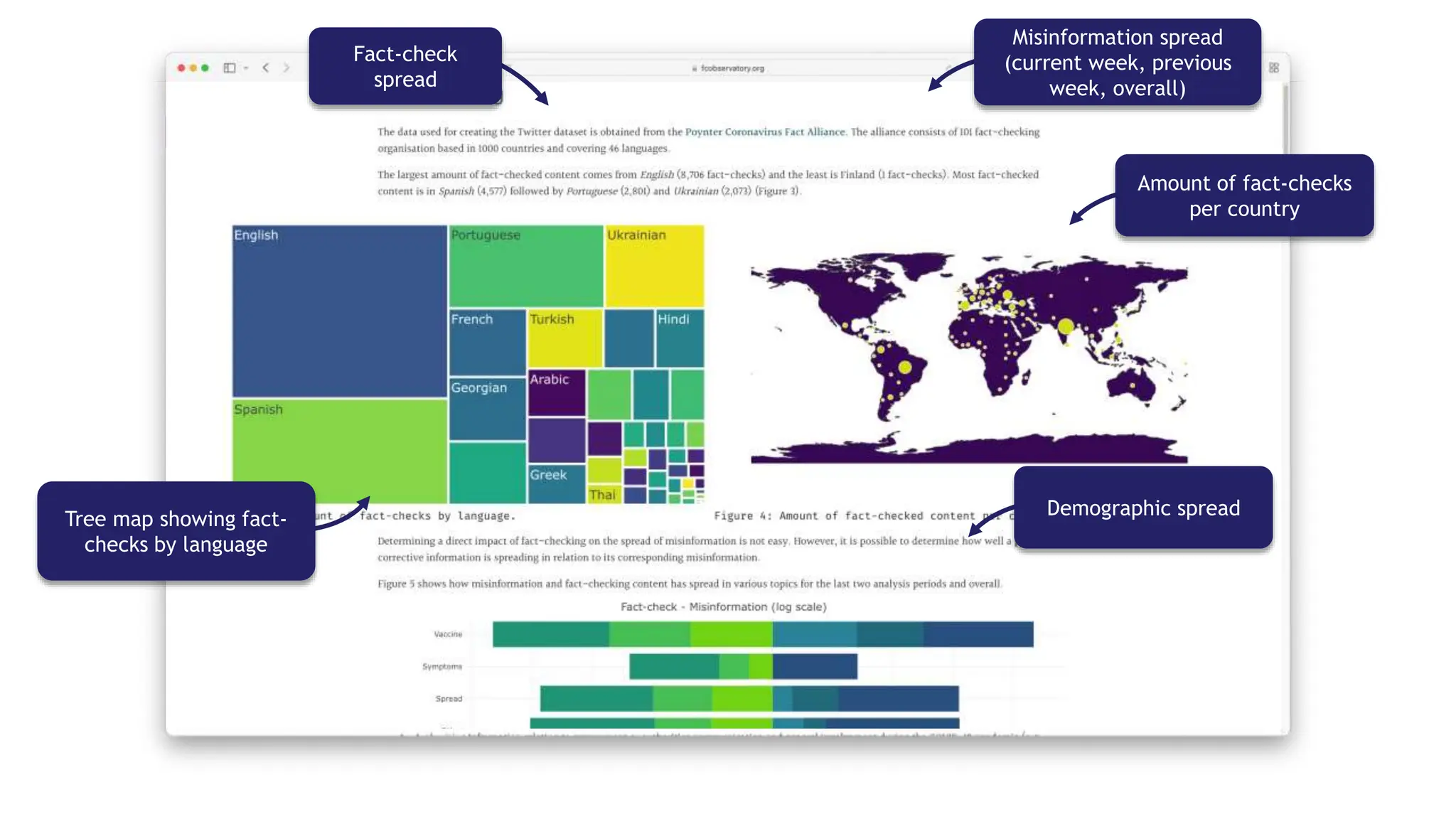

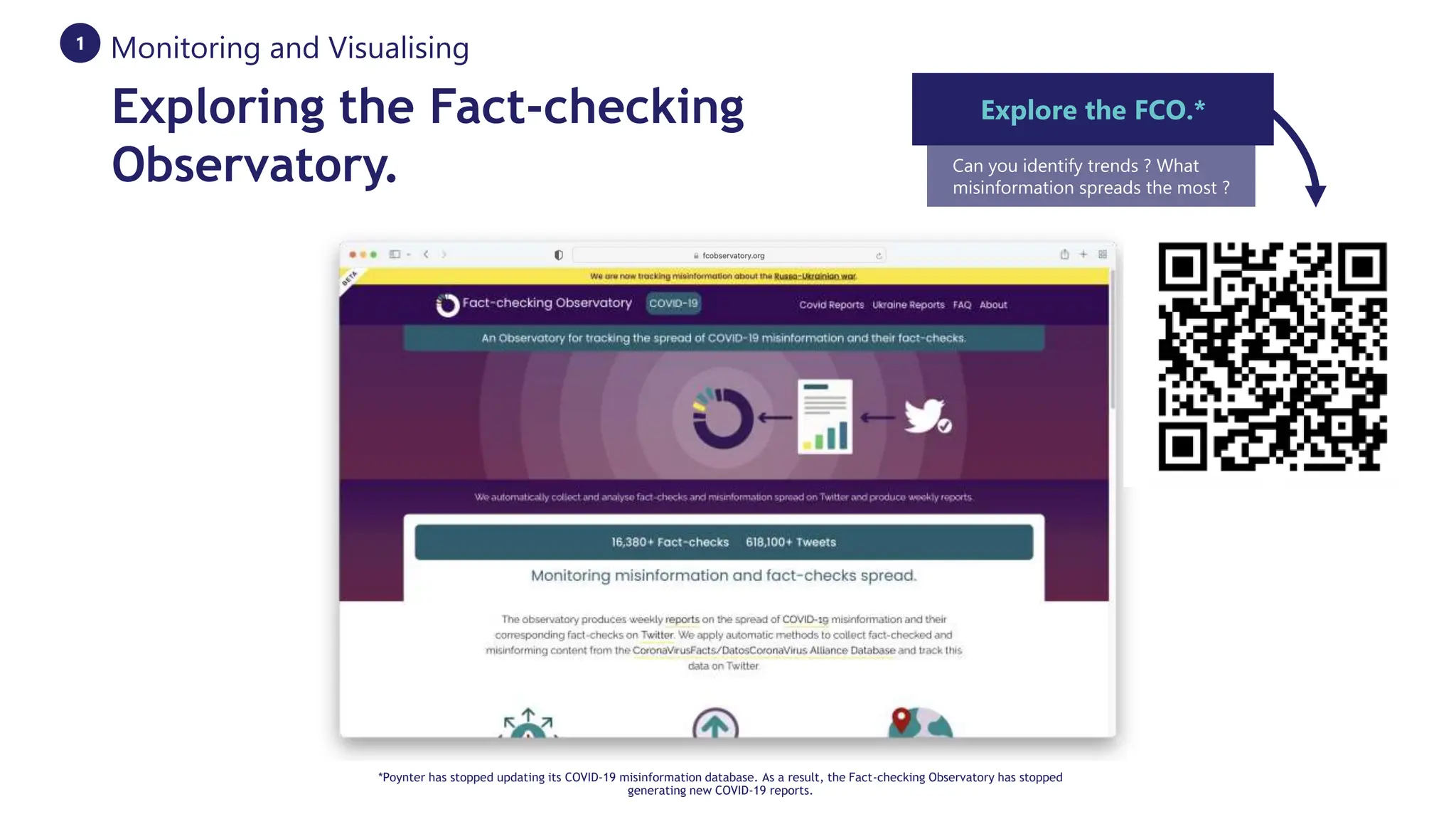

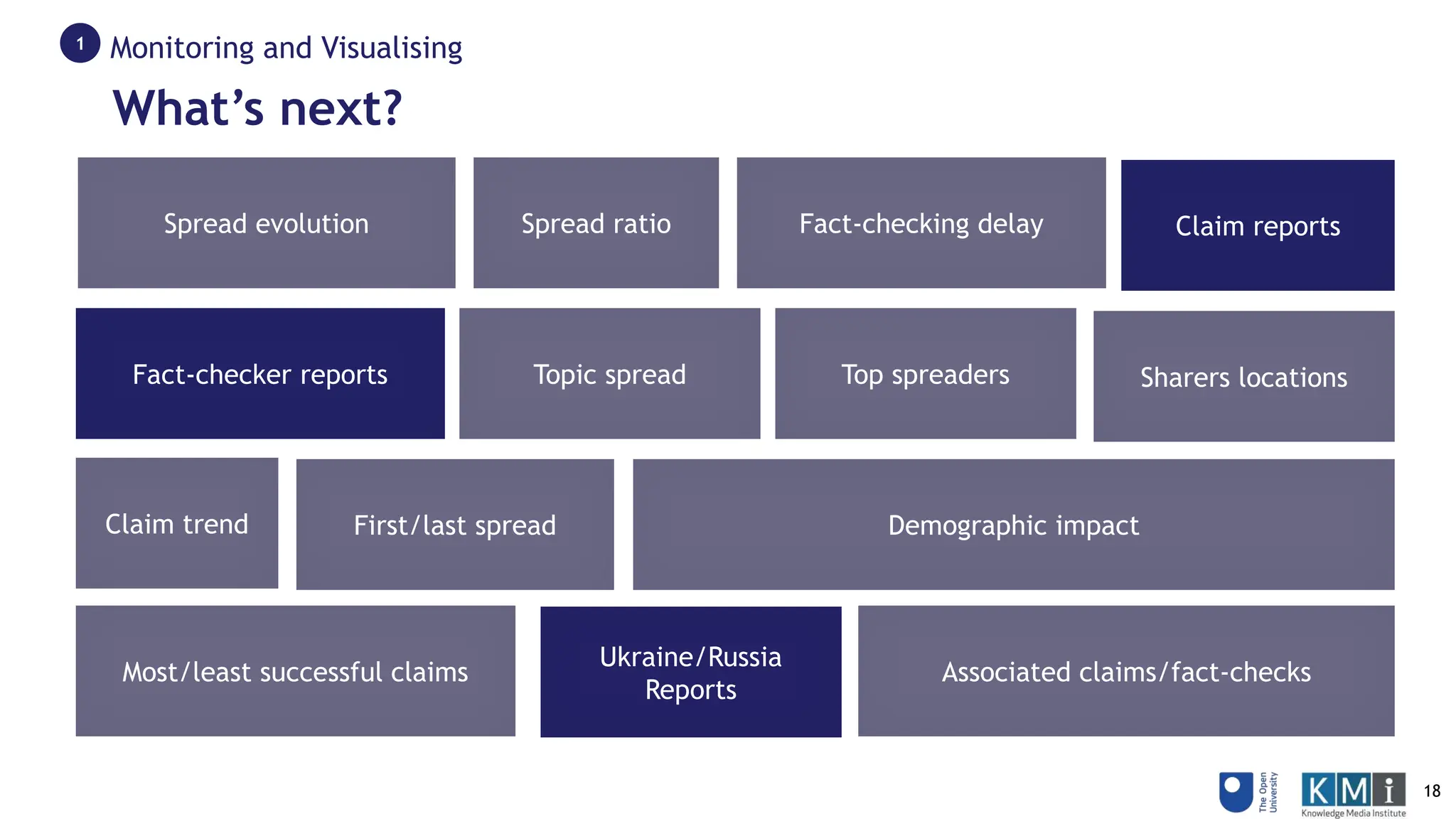

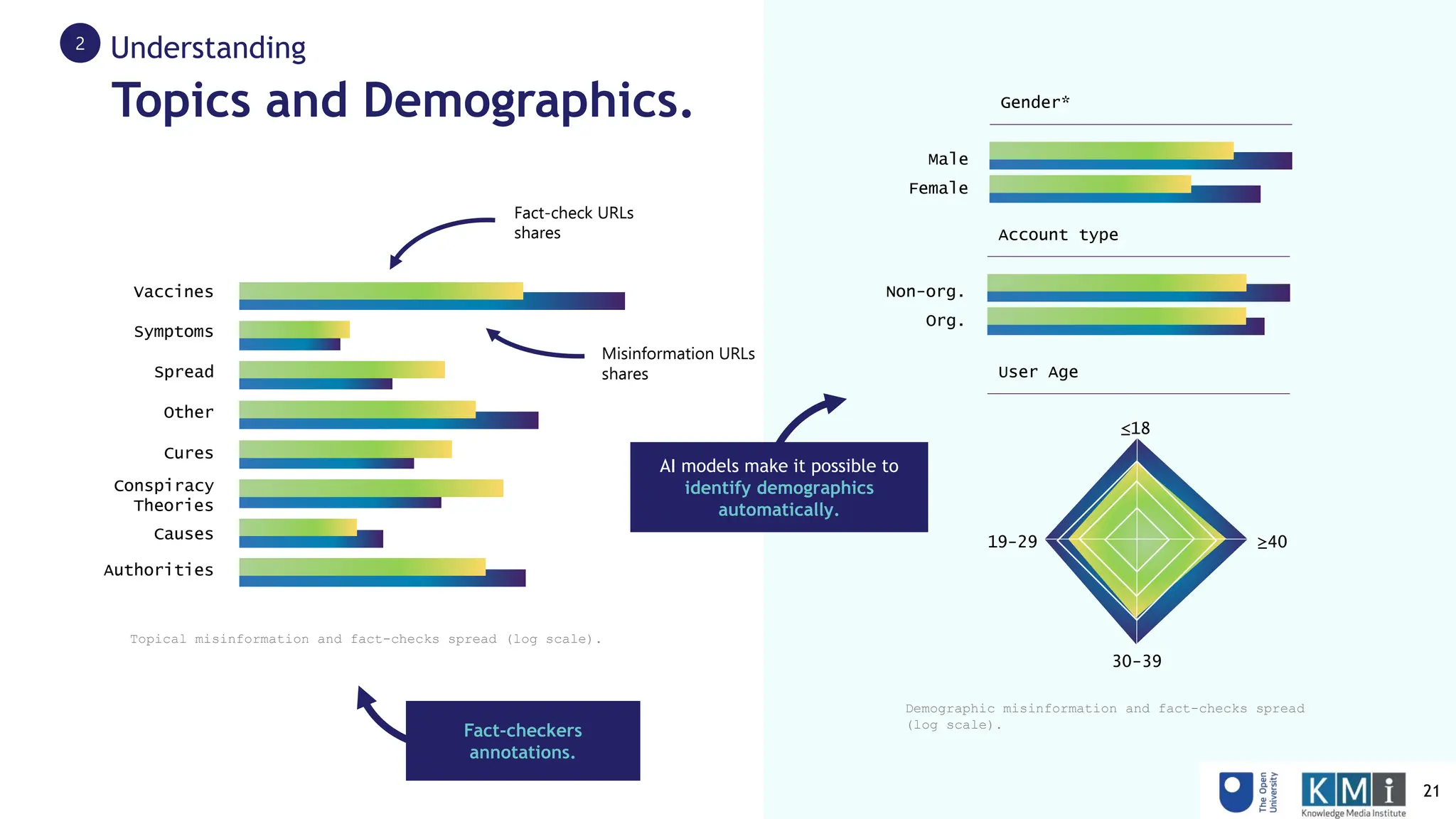

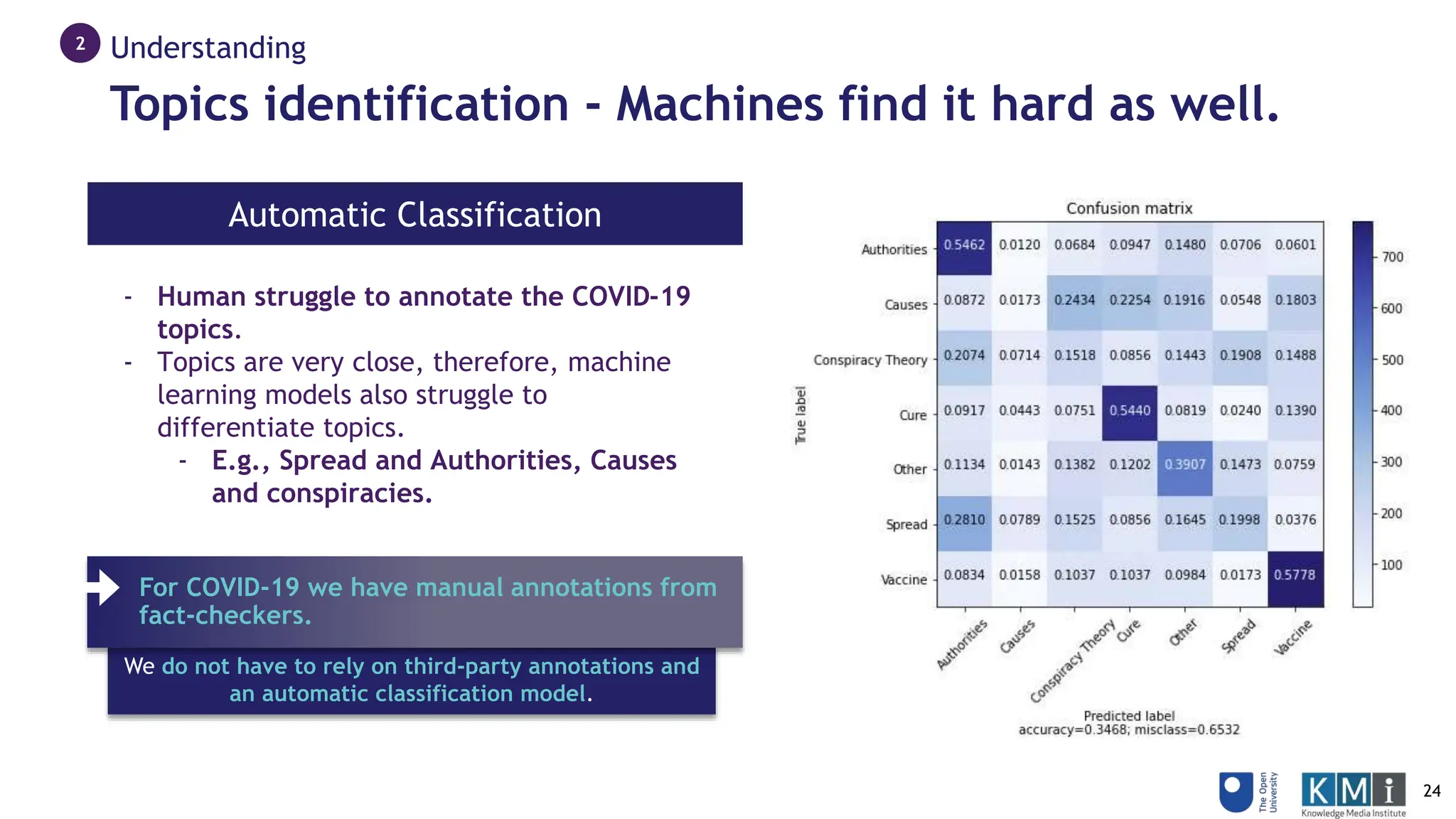

1) The Fact-checking Observatory, which tracks the spread of COVID-19 misinformation and fact-checks on Twitter, generates weekly reports on their topics and reach, and provides visualizations of trends over time.

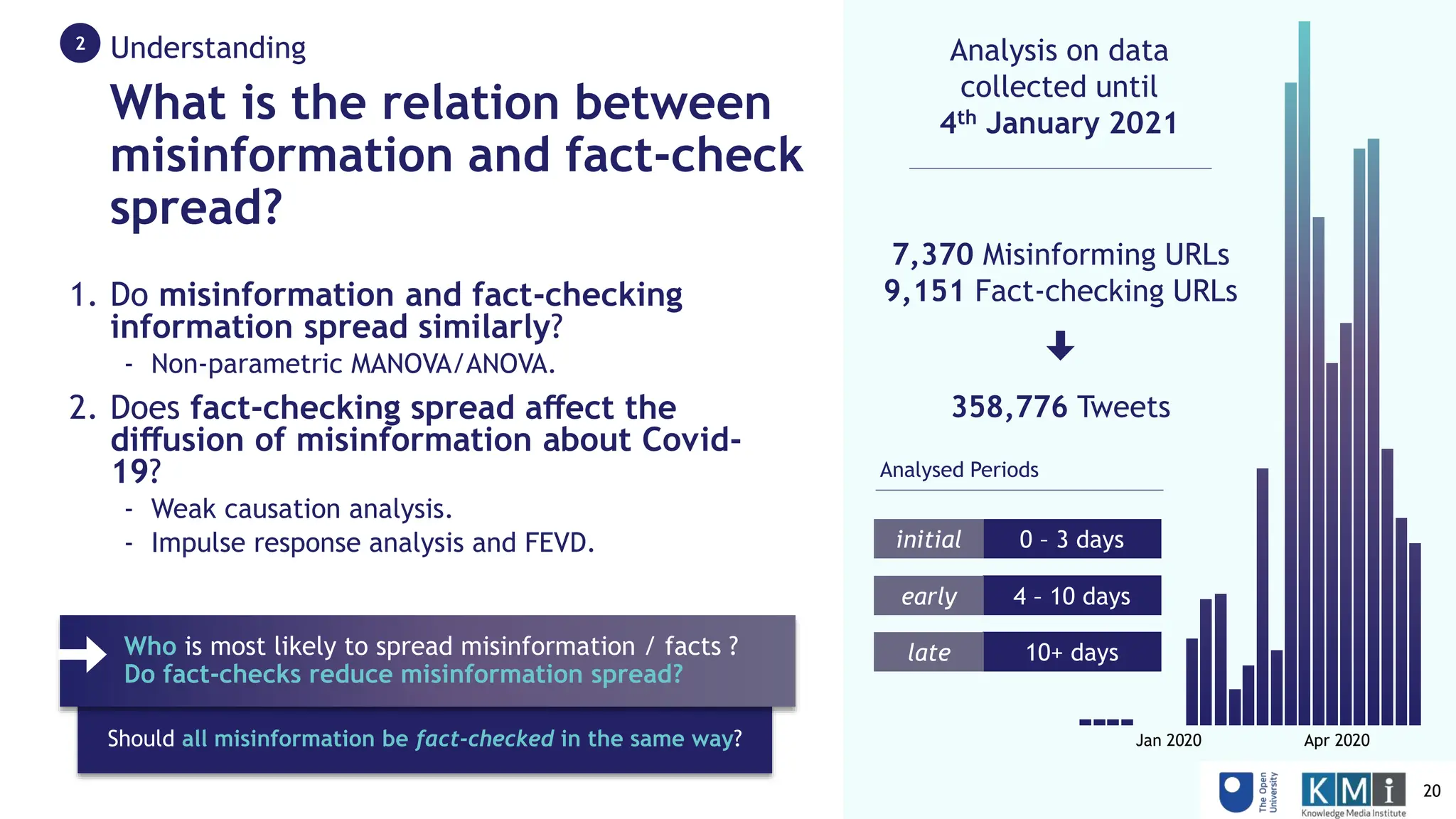

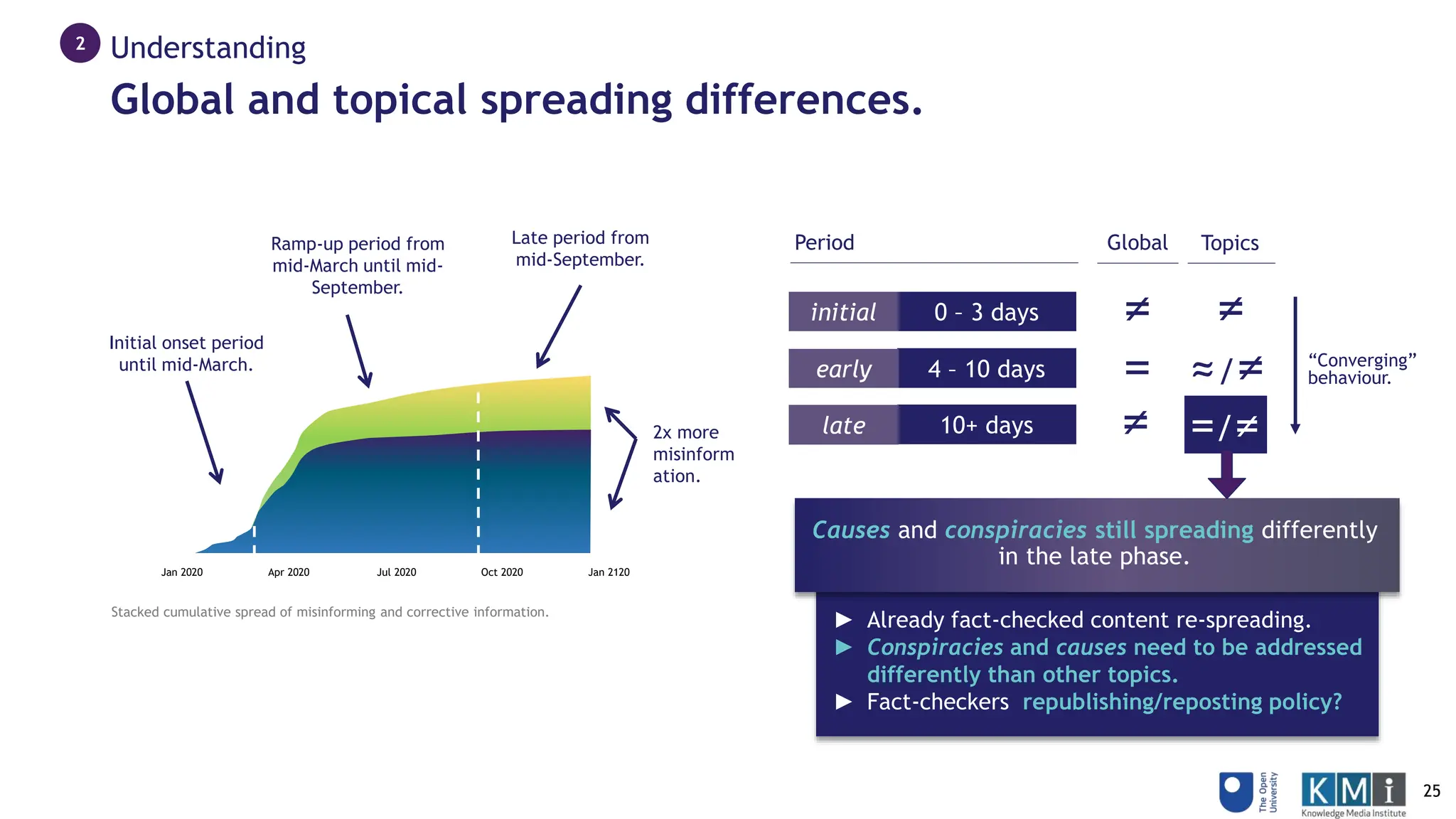

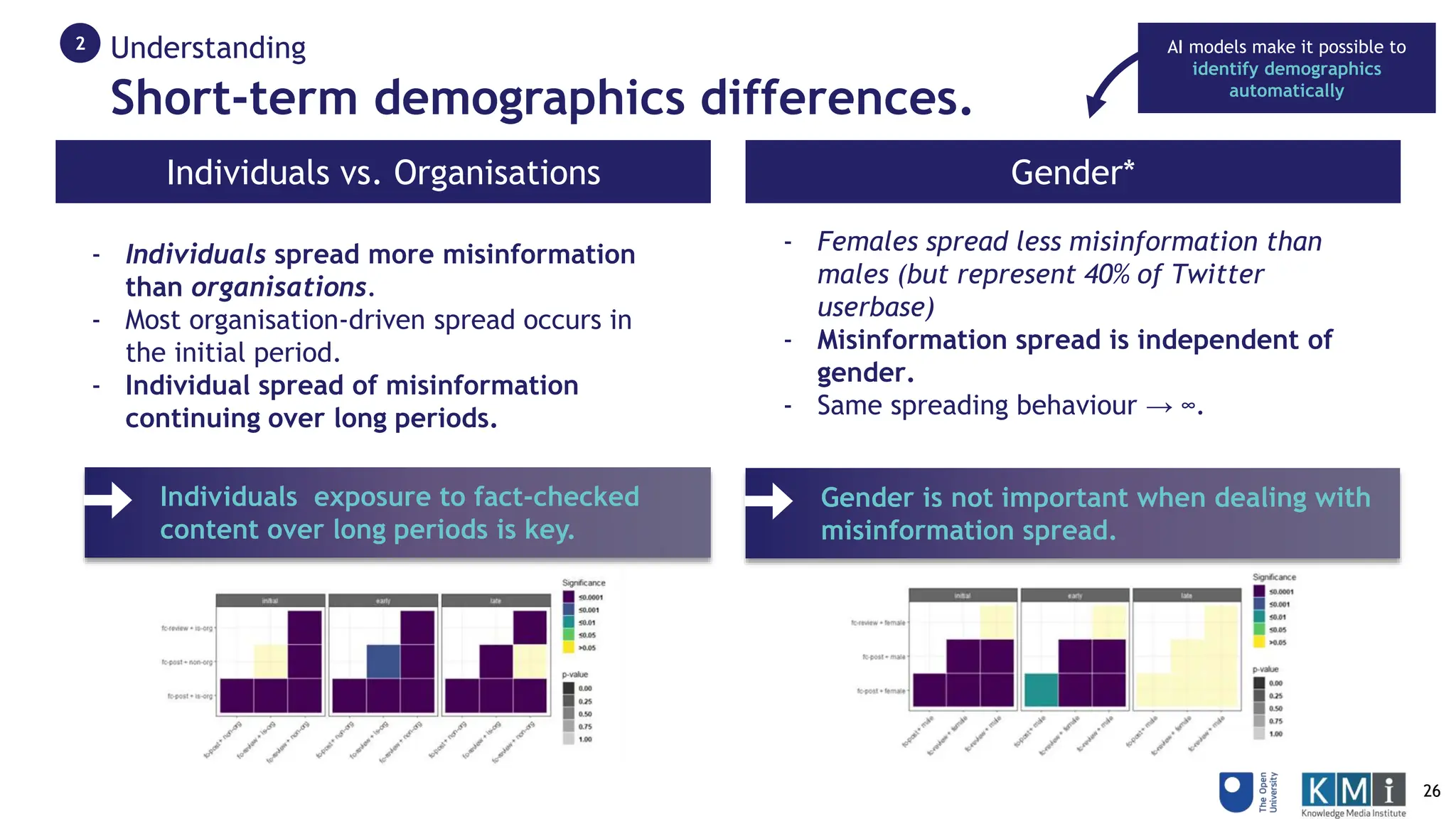

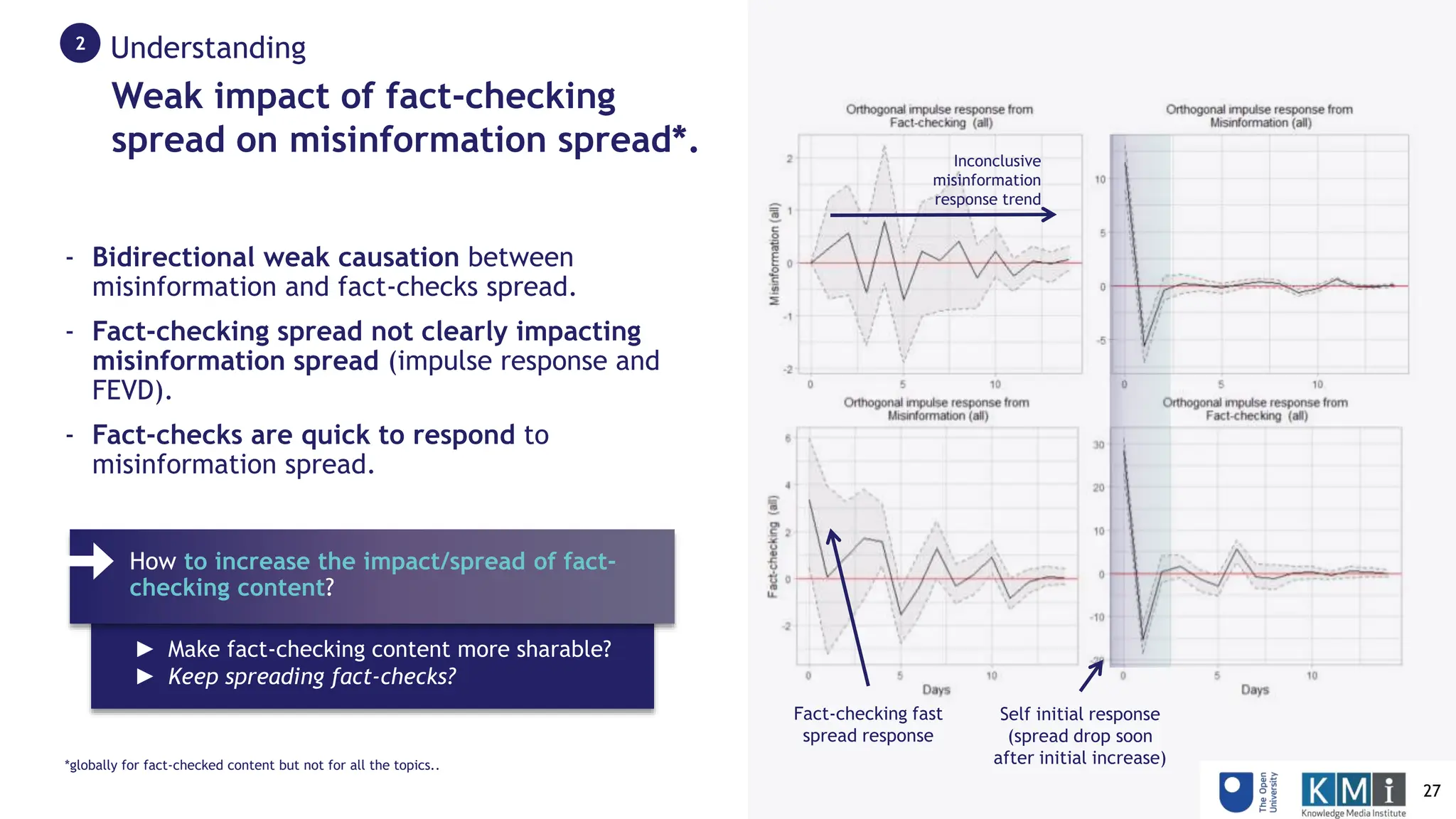

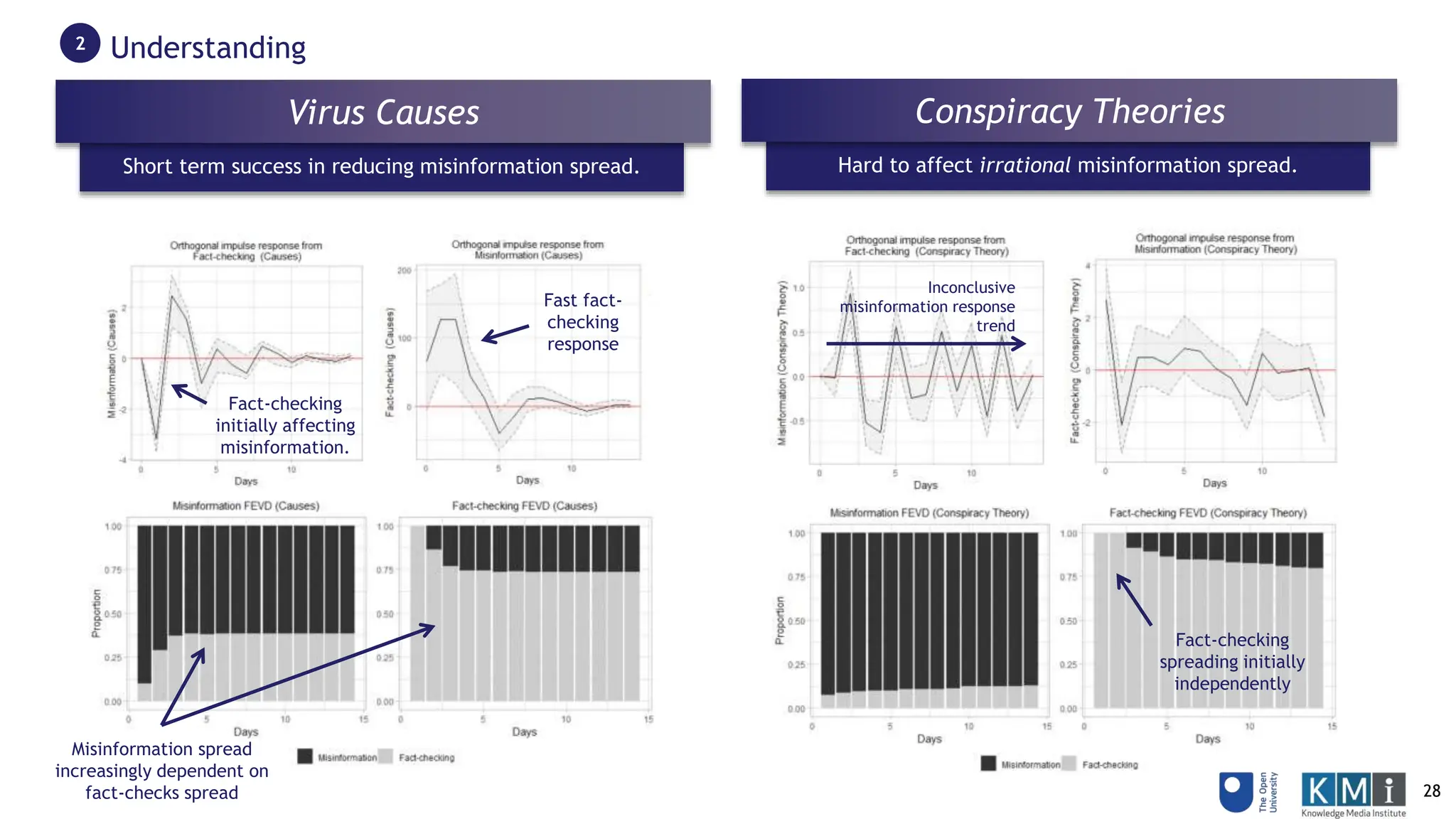

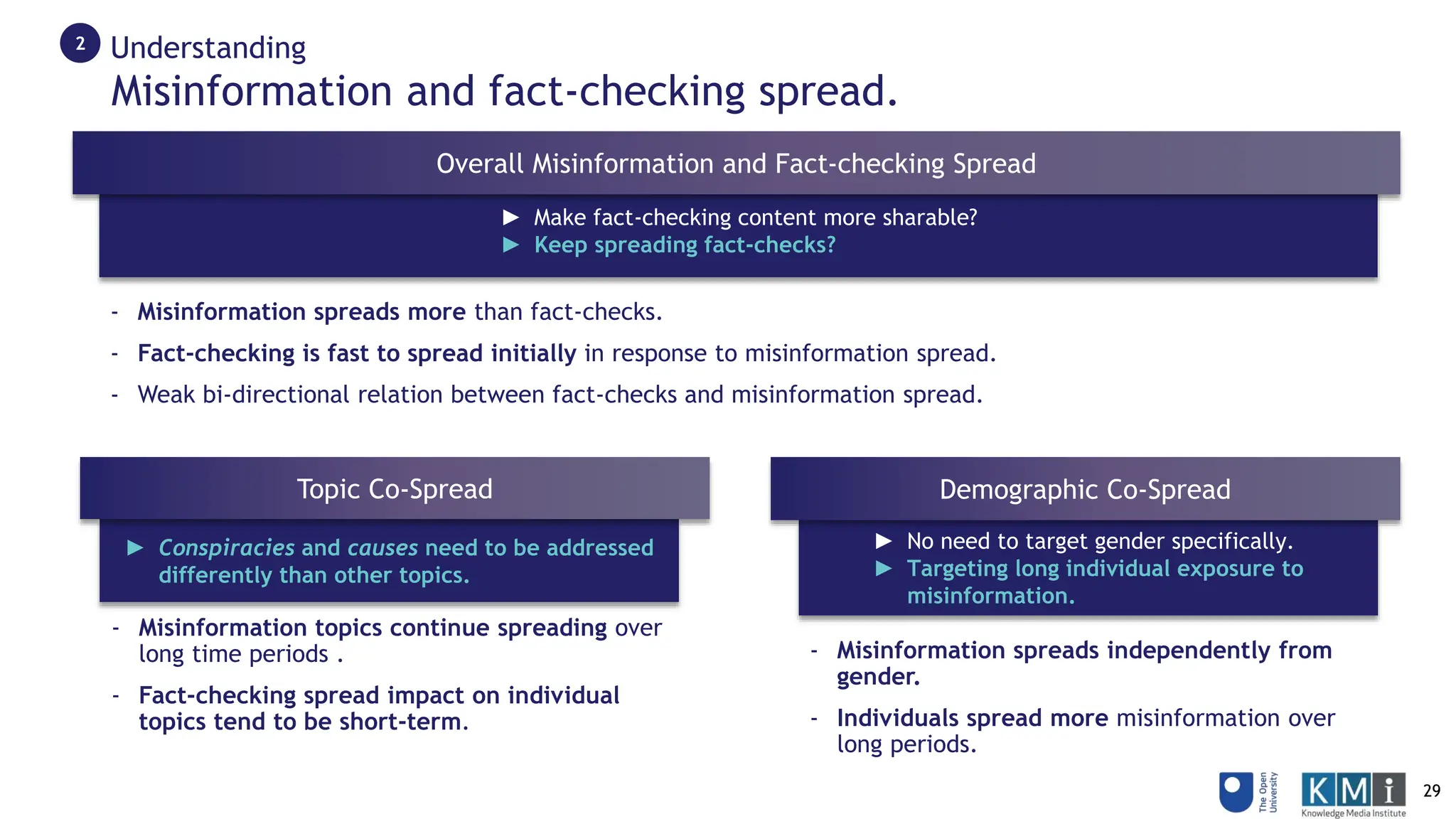

2) An analysis of the co-spread of misinformation and fact-checks. It found that fact-checking has only a weak impact on reducing the spread of misinformation globally, but a stronger short-term impact on specific topics such as causes and conspiracies. It also found that individuals spread more misinformation than organizations do.

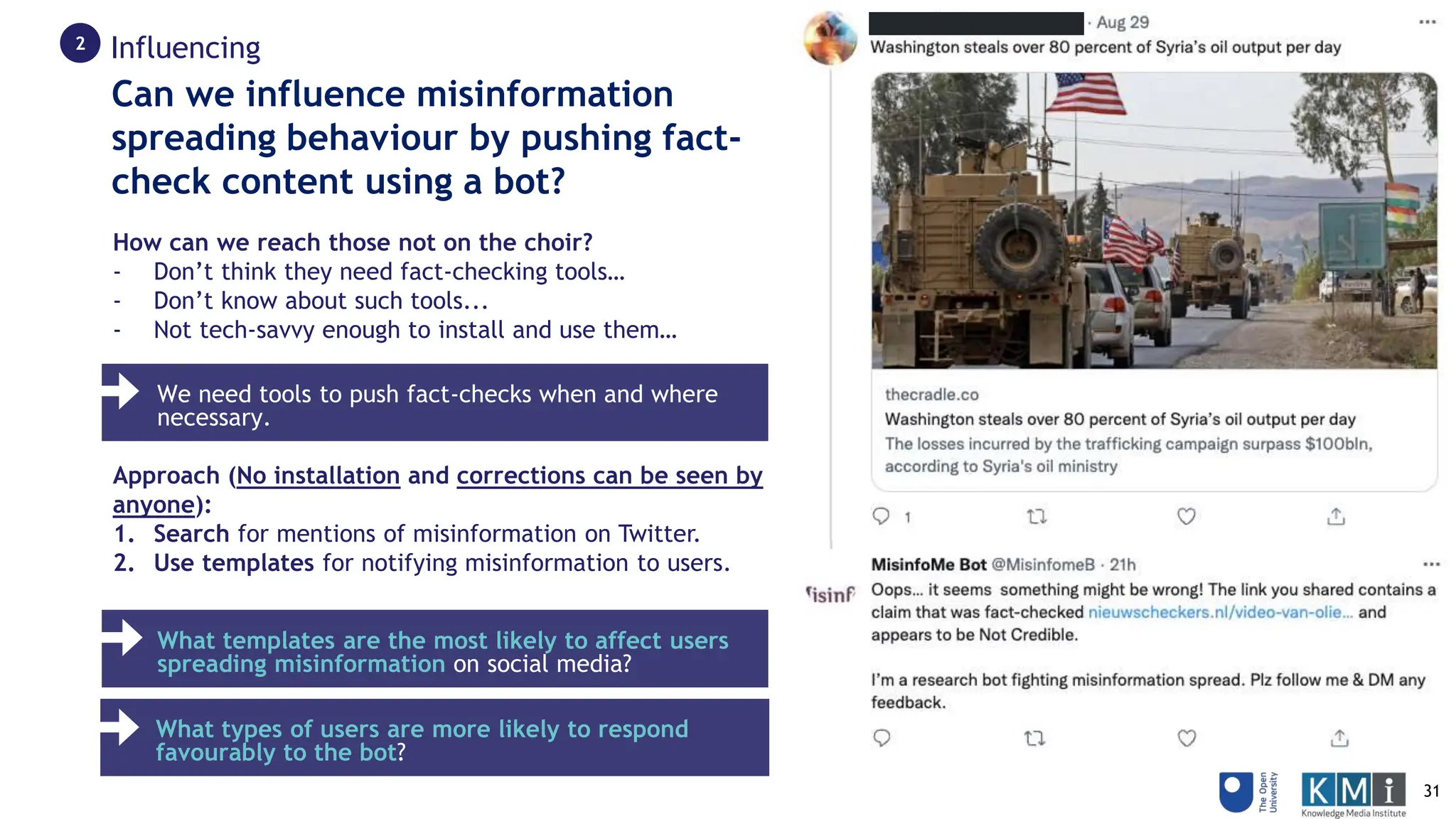

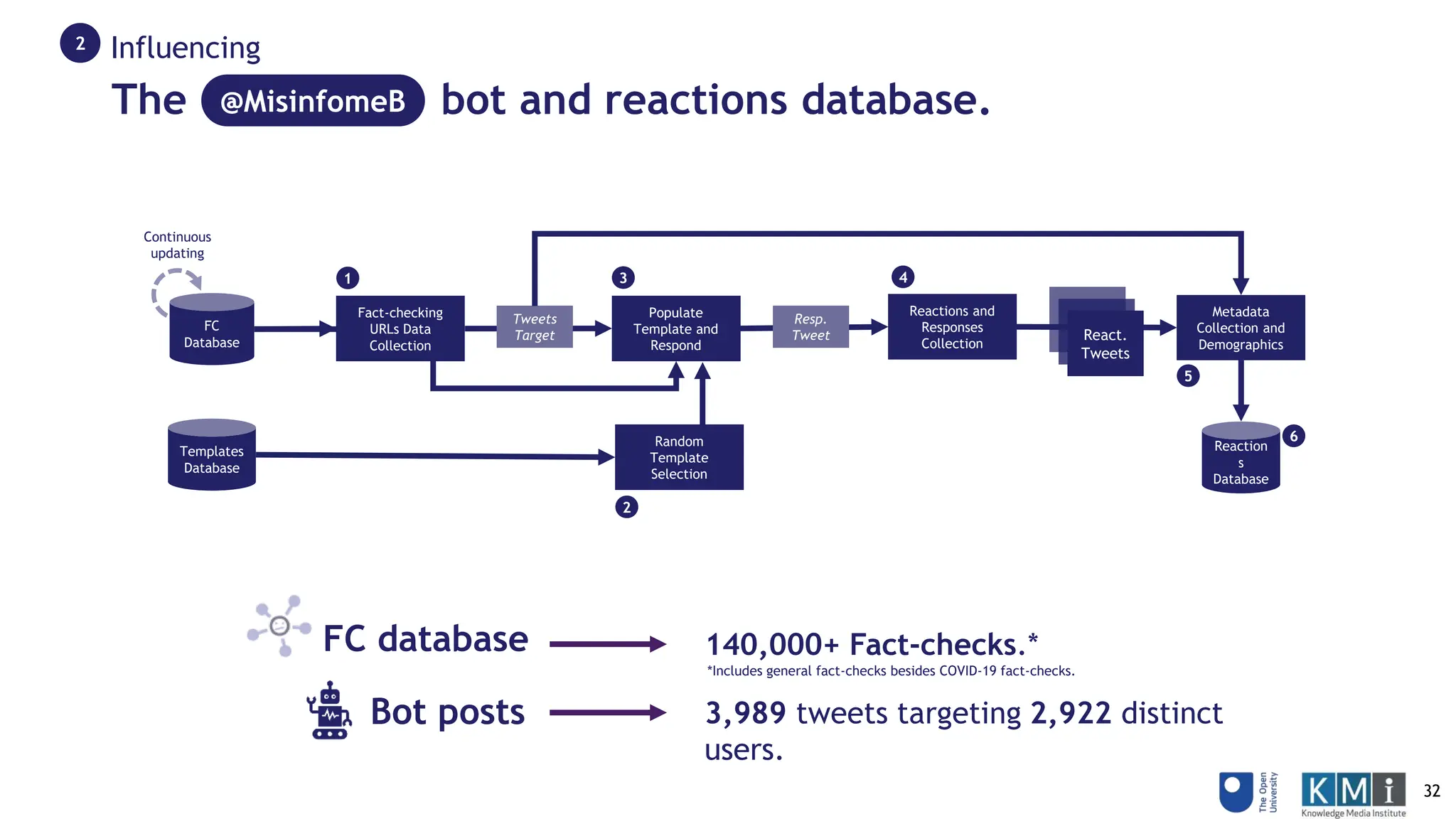

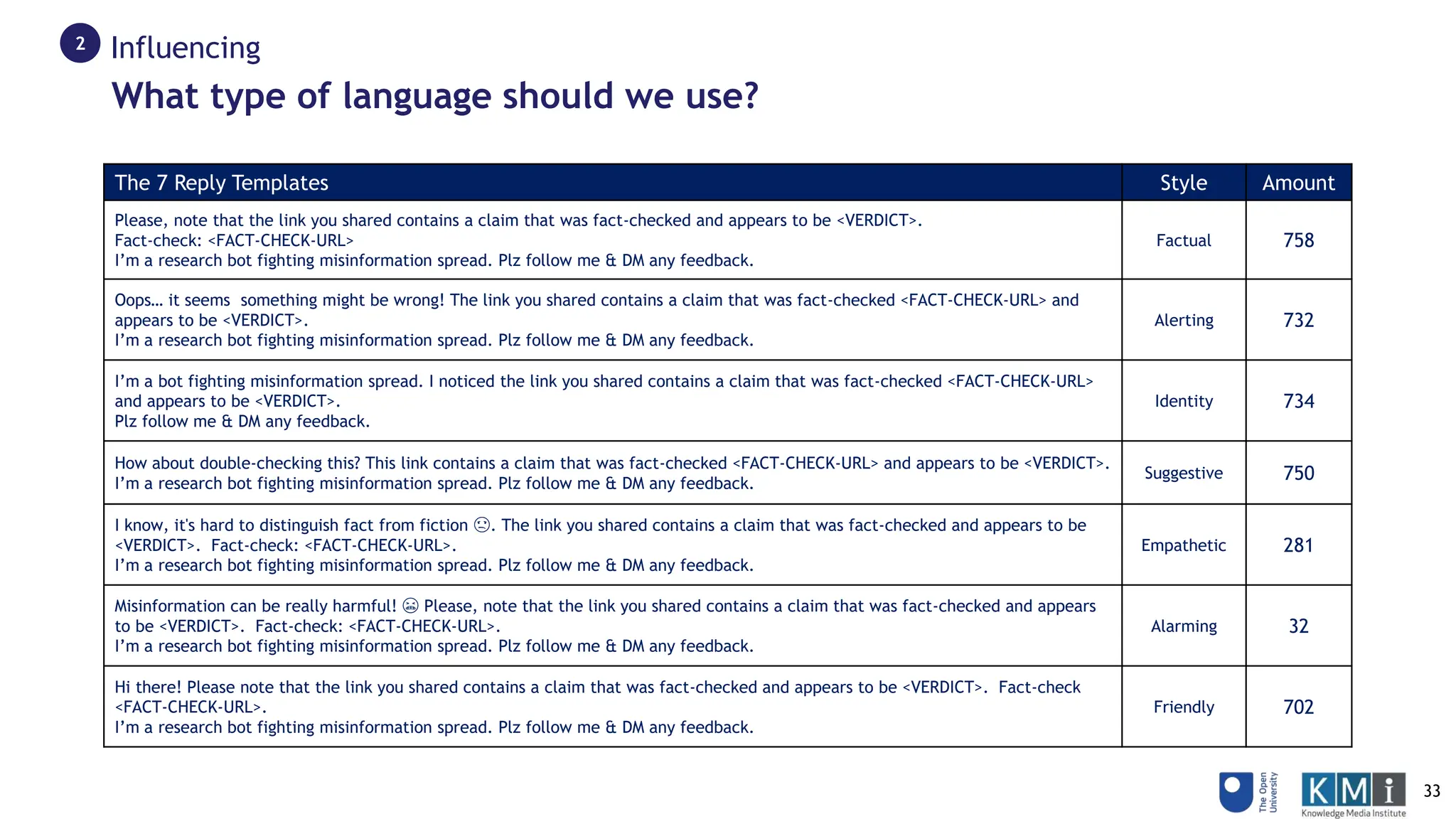

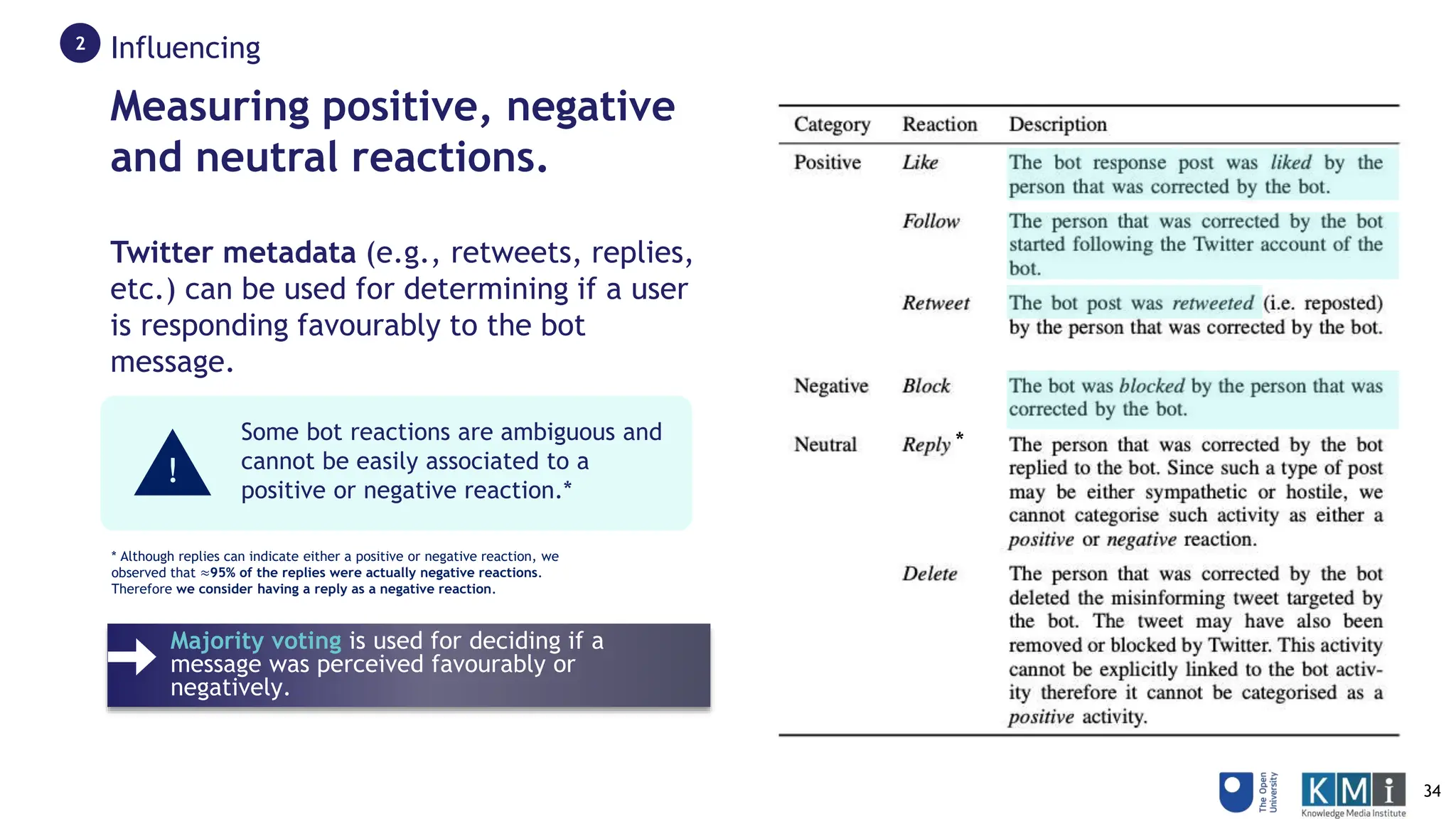

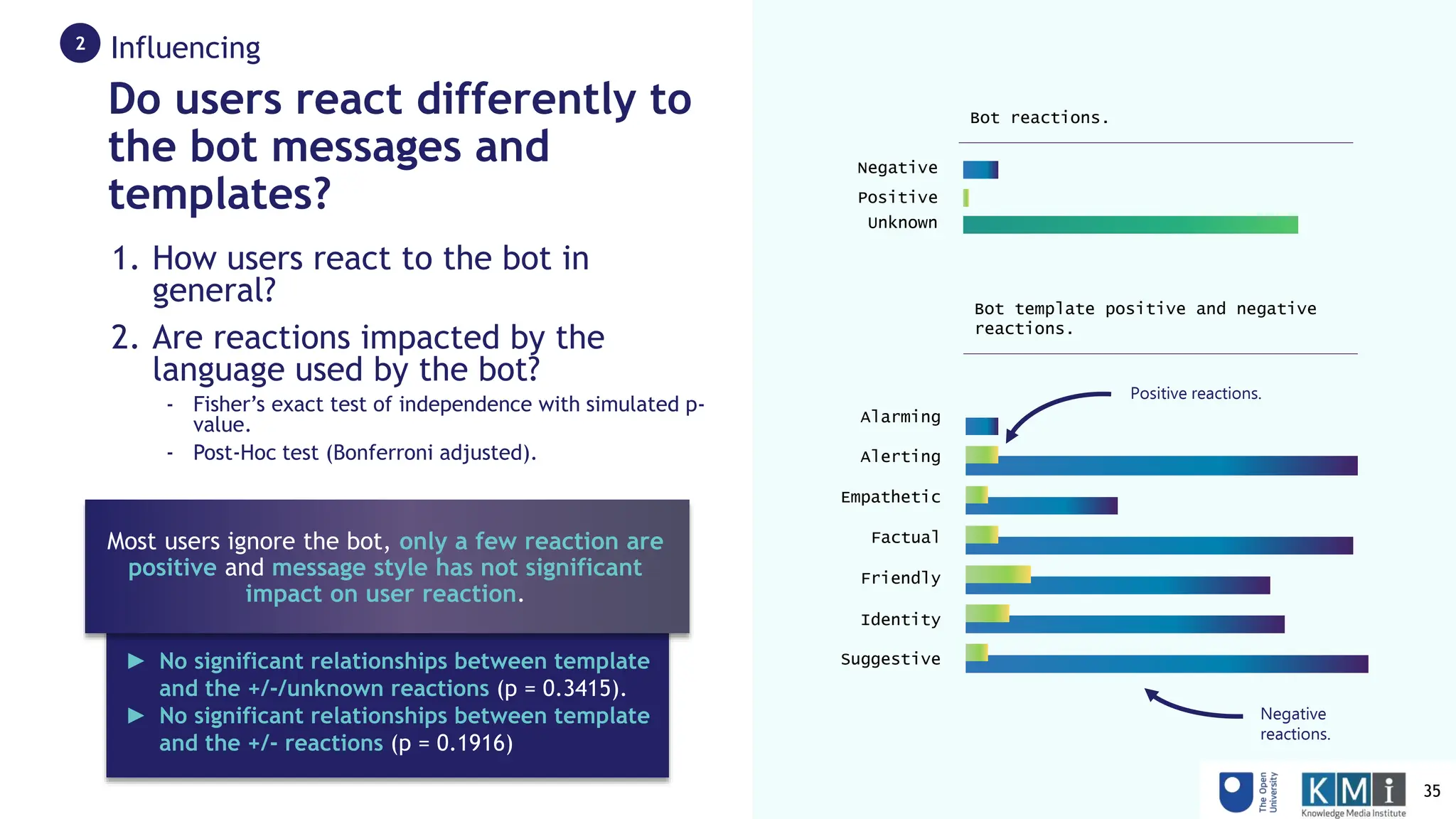

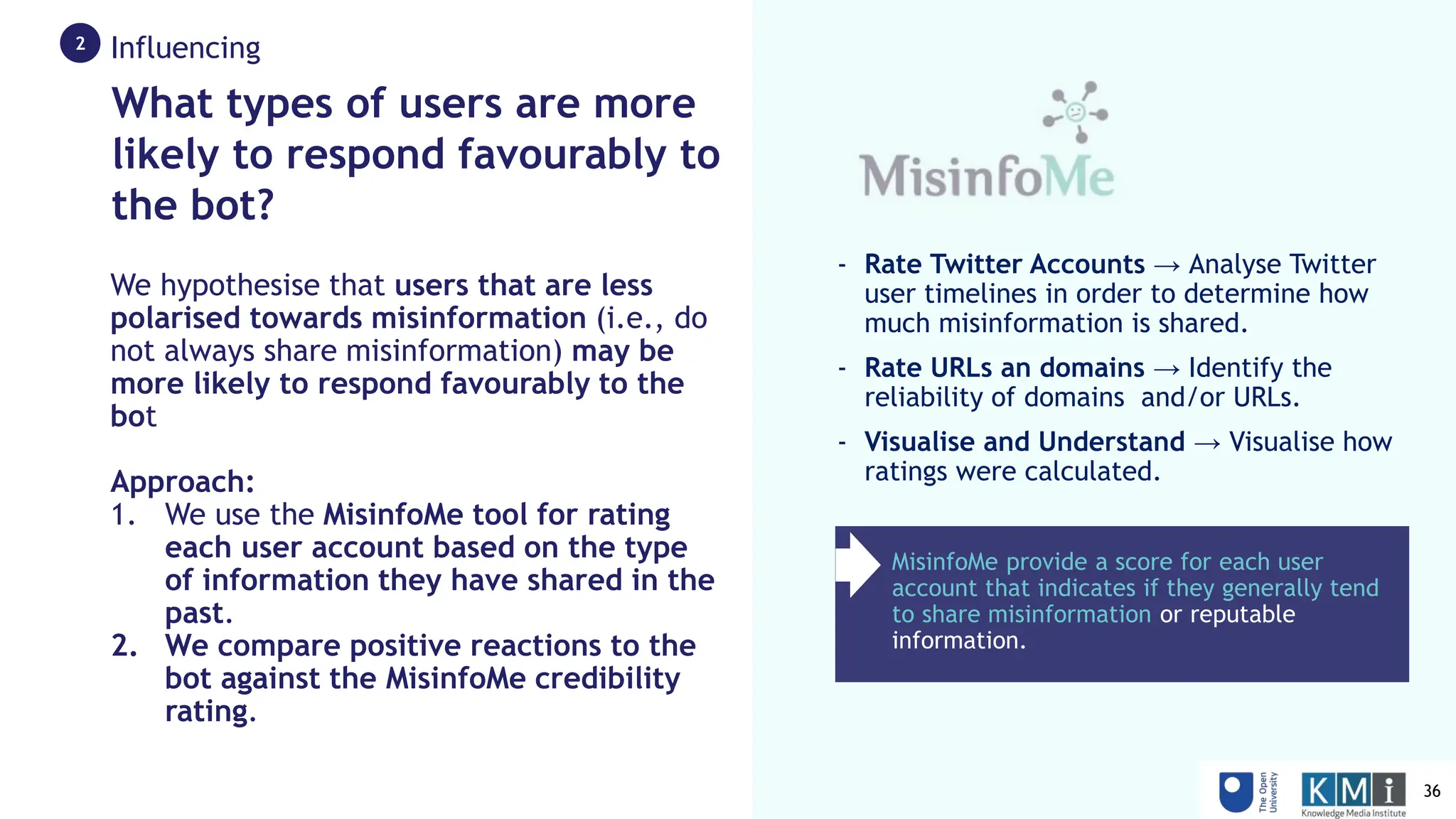

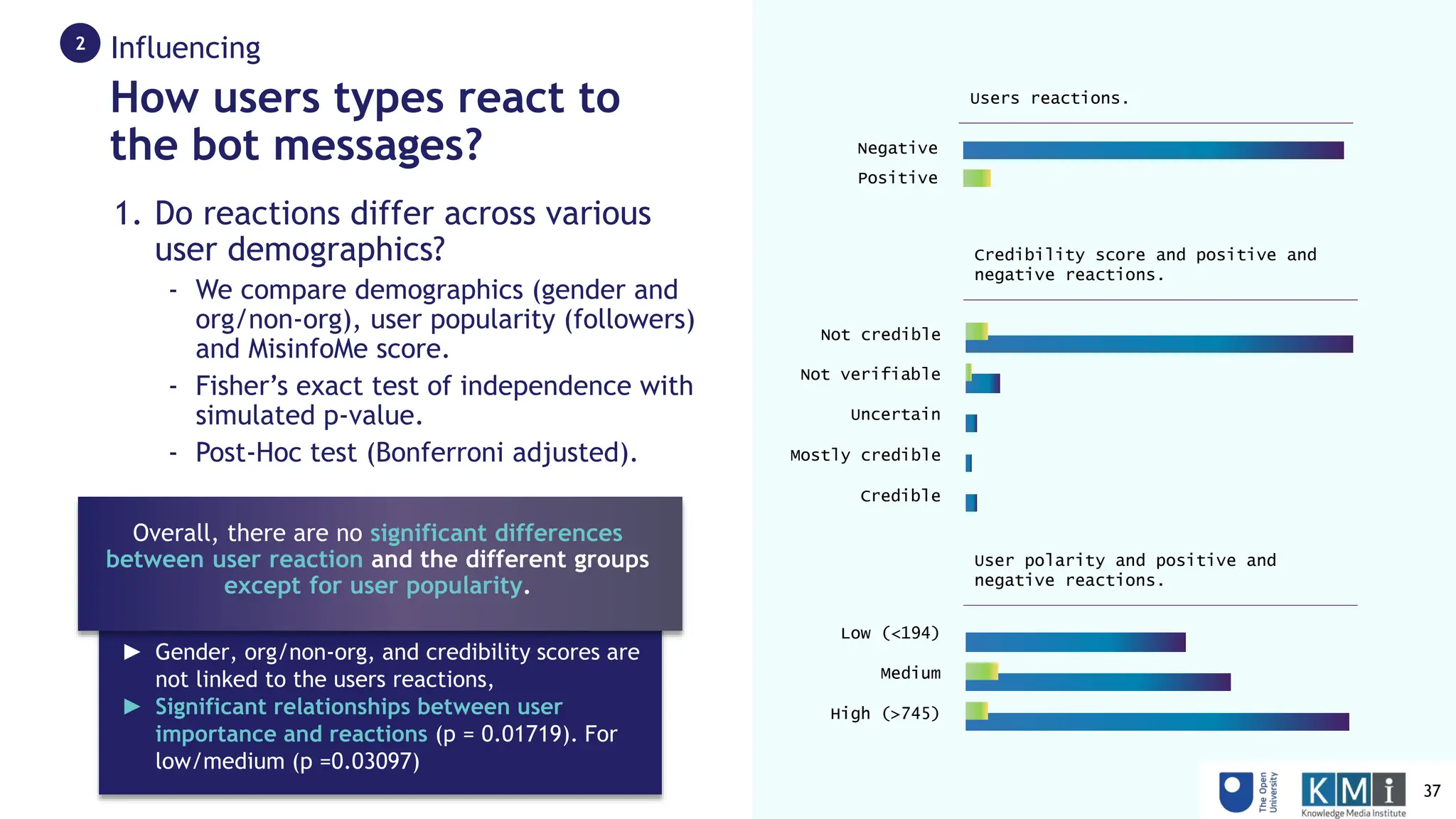

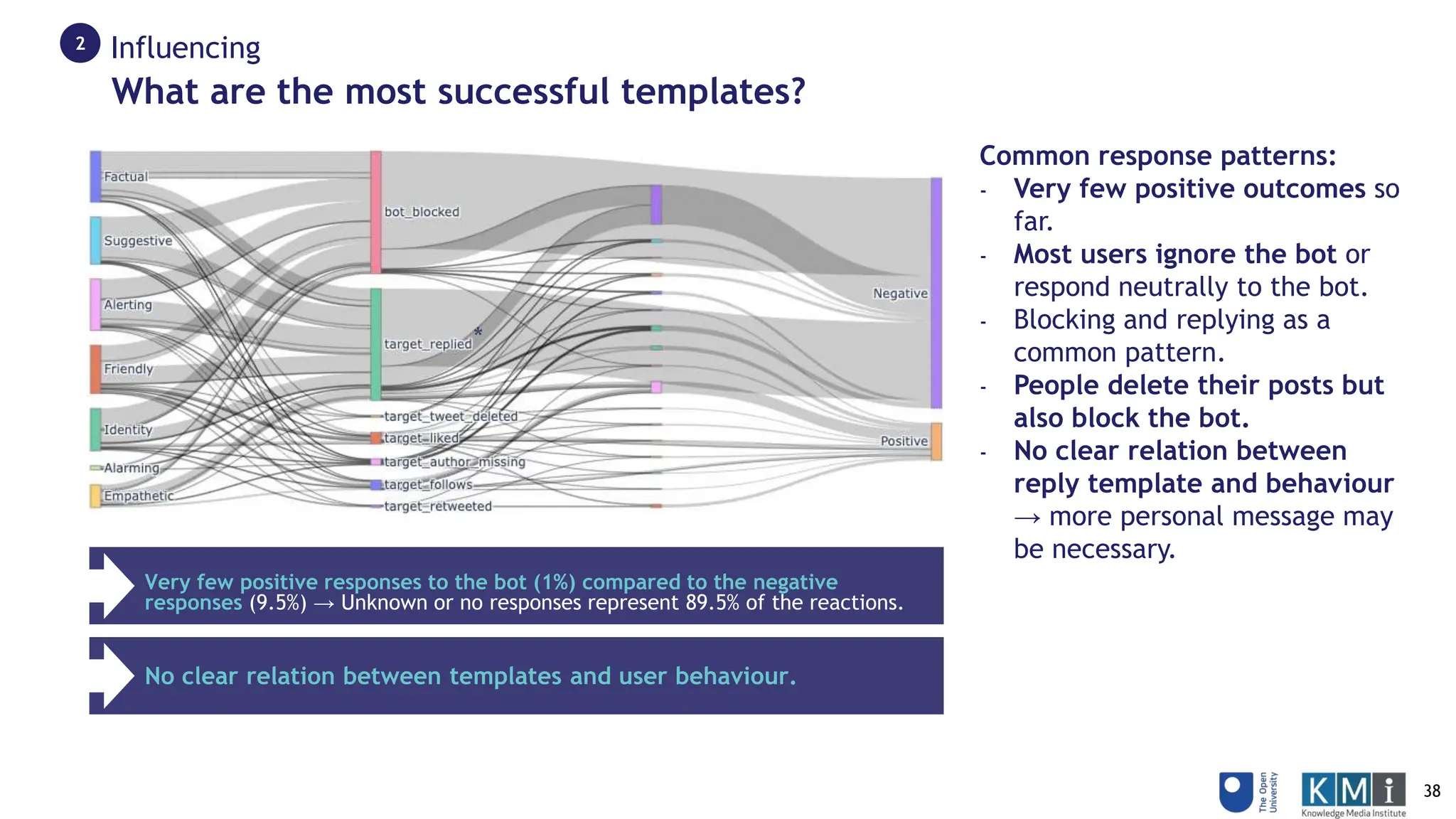

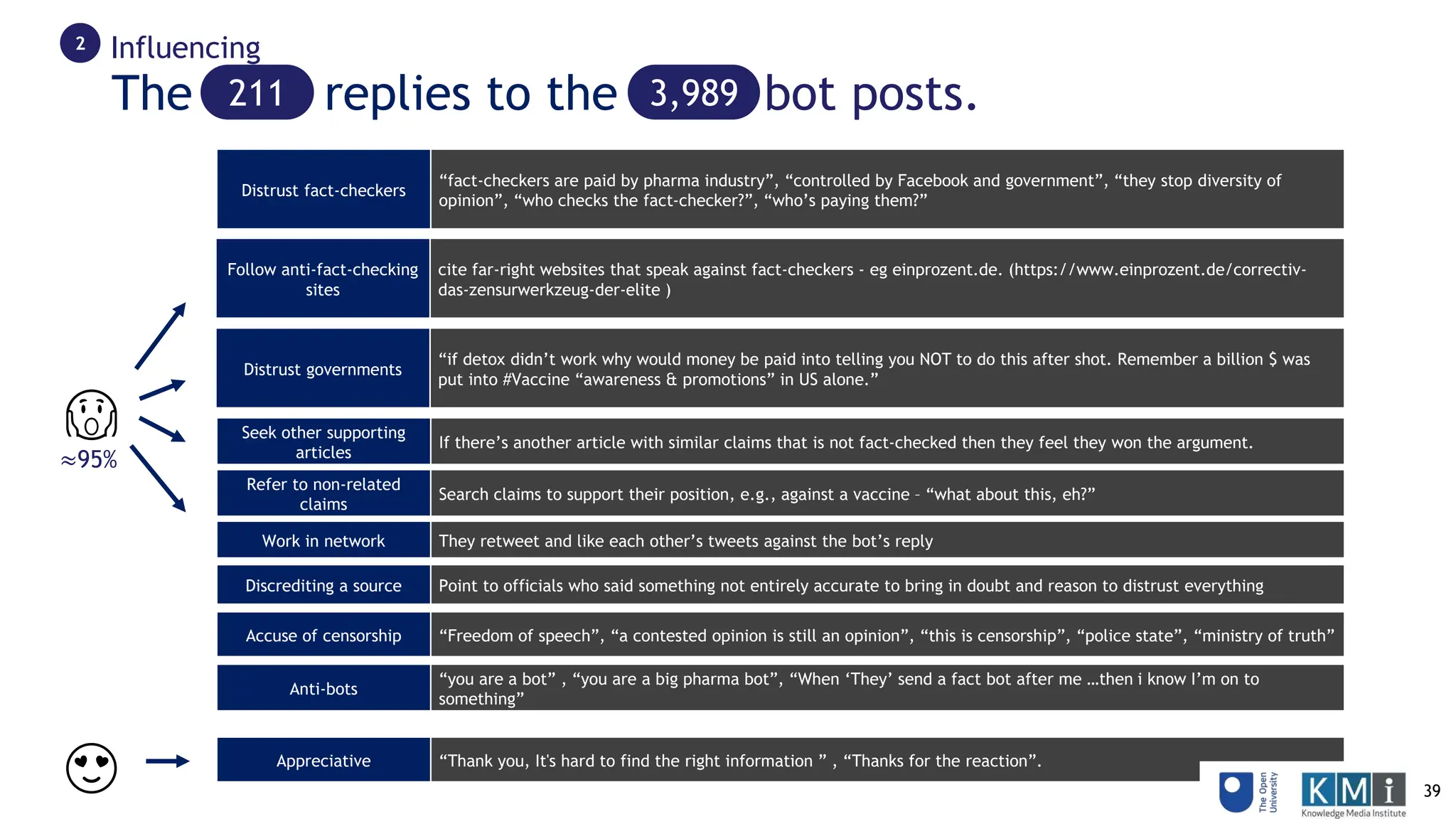

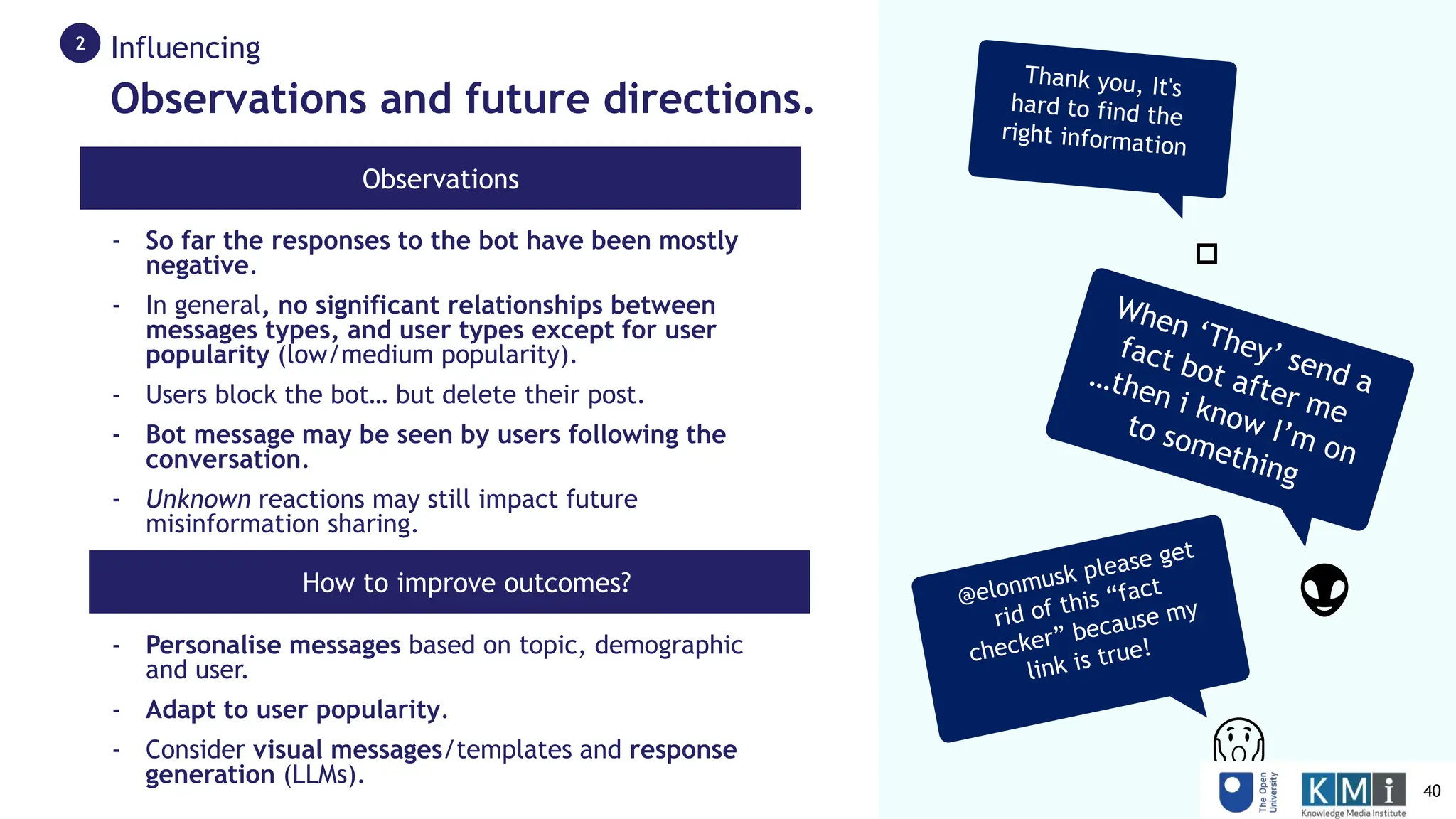

3) An experiment with an automated fact-checking bot that pushes fact-checks to people spreading