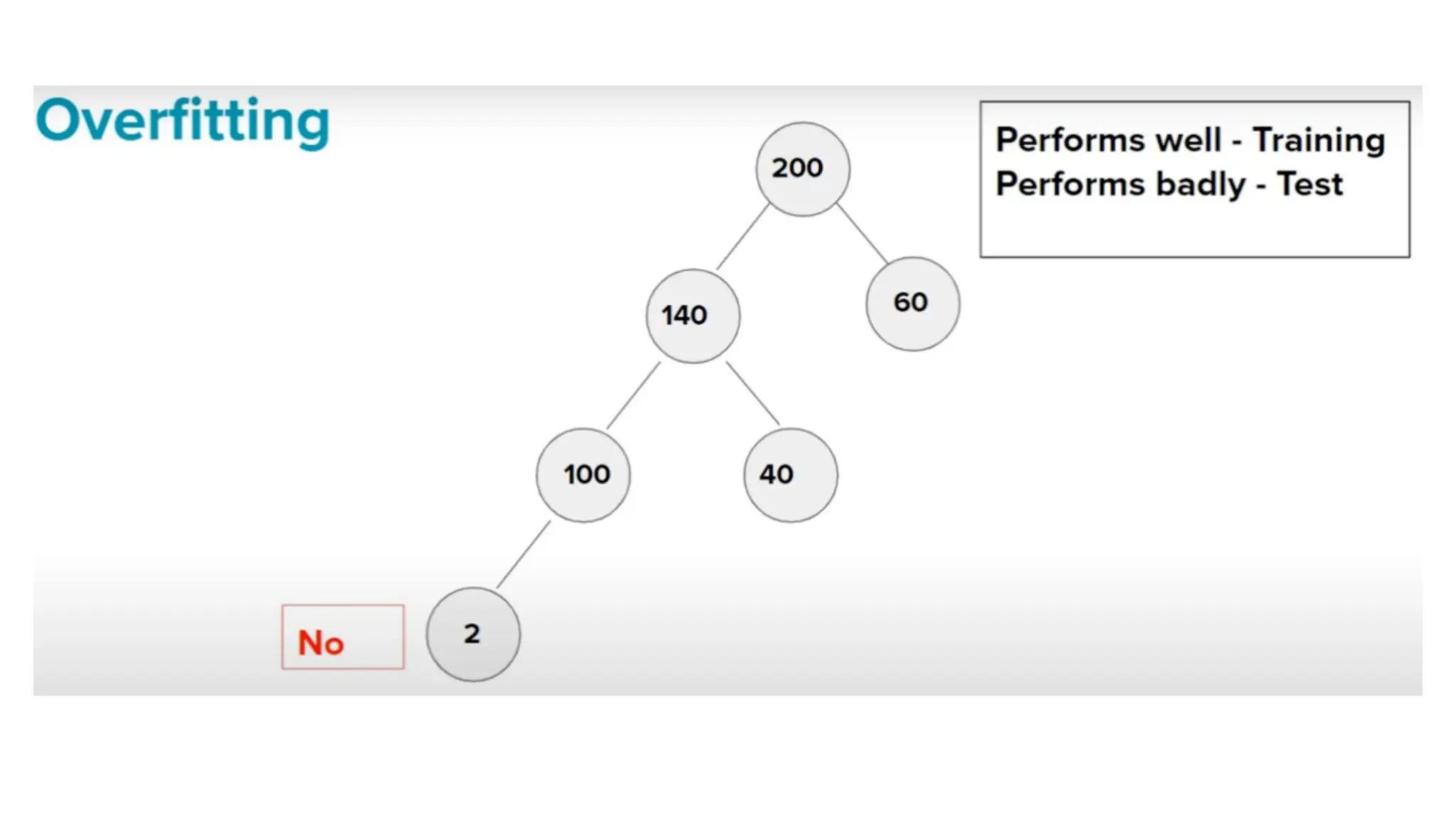

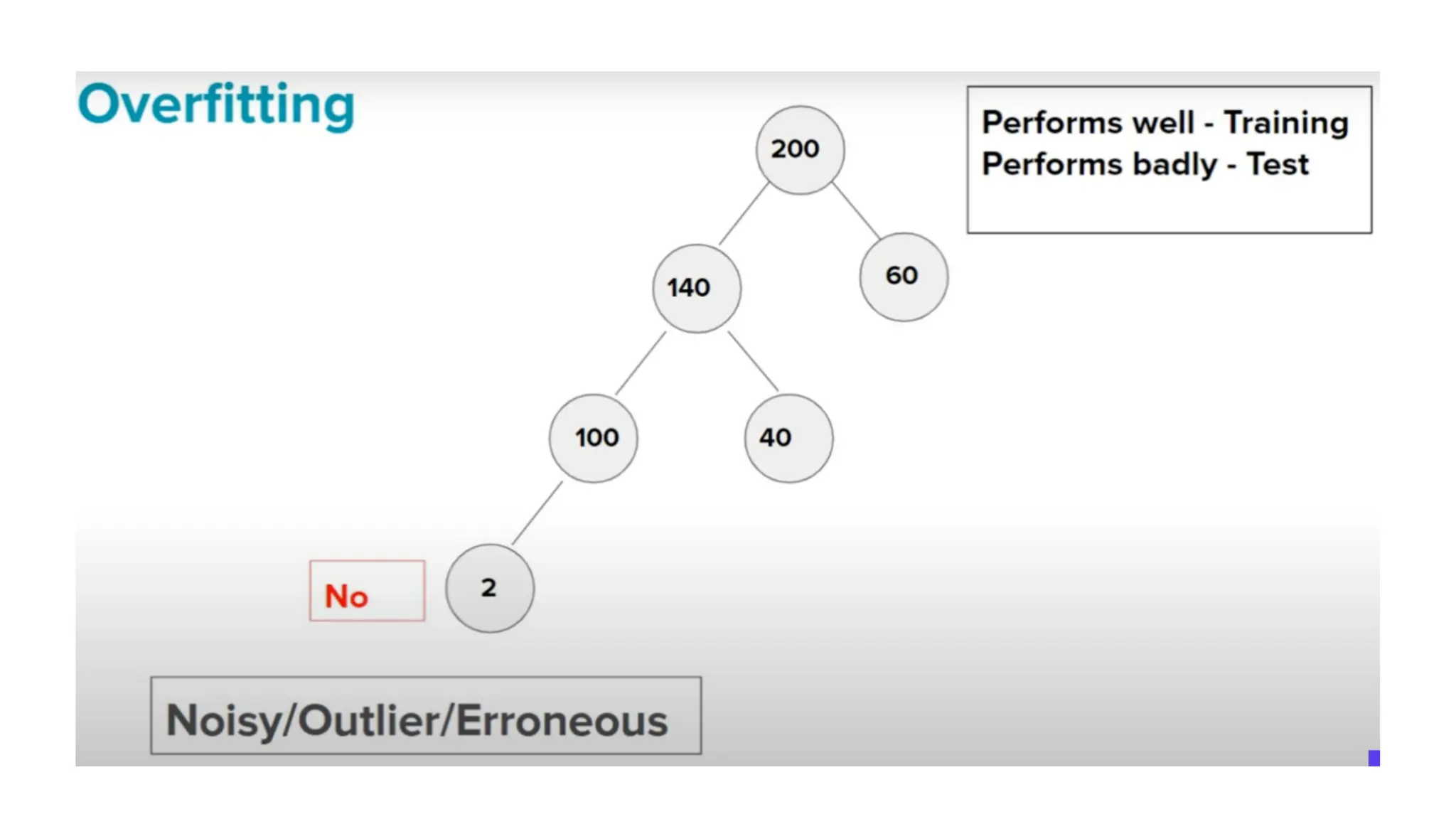

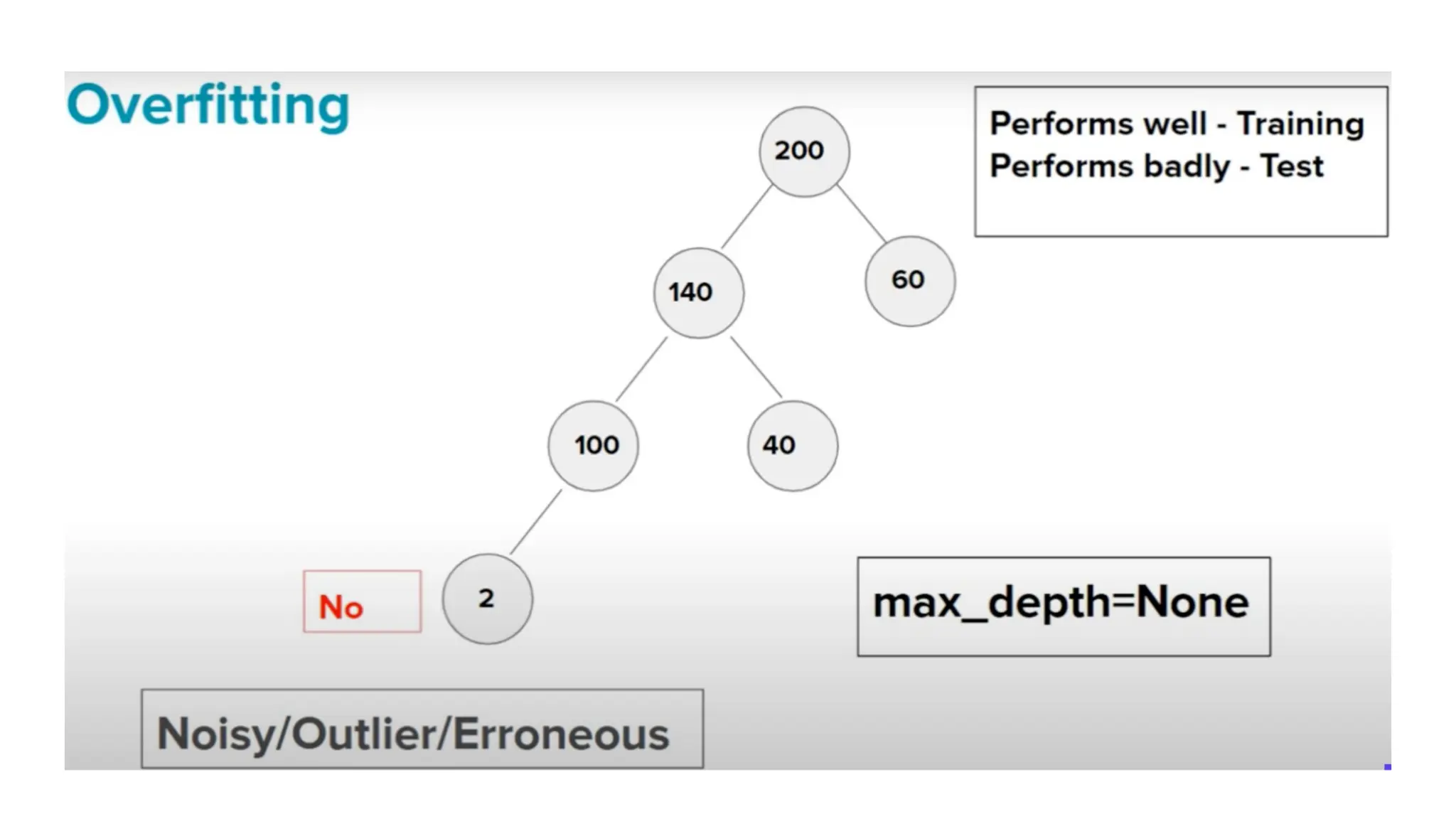

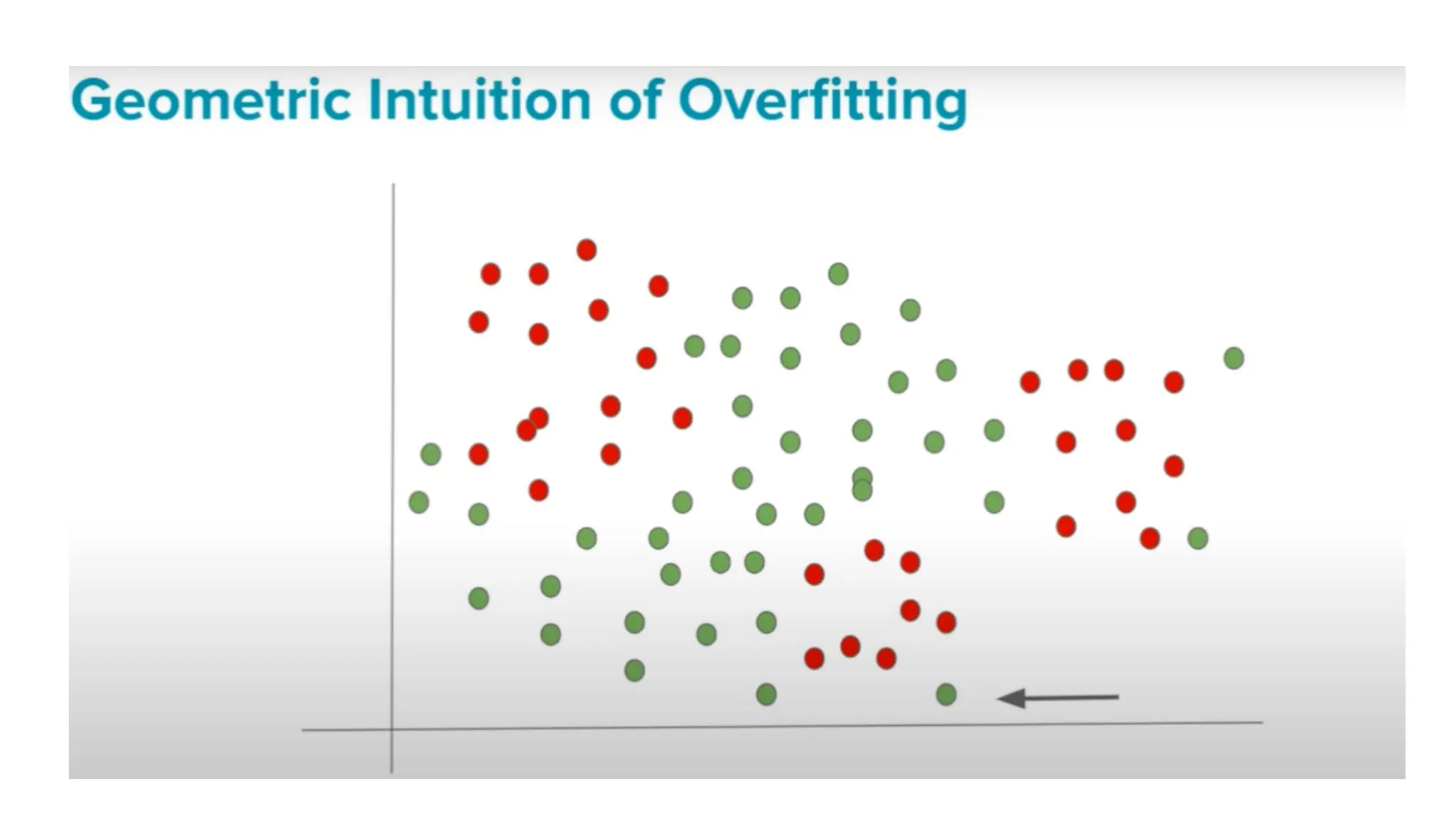

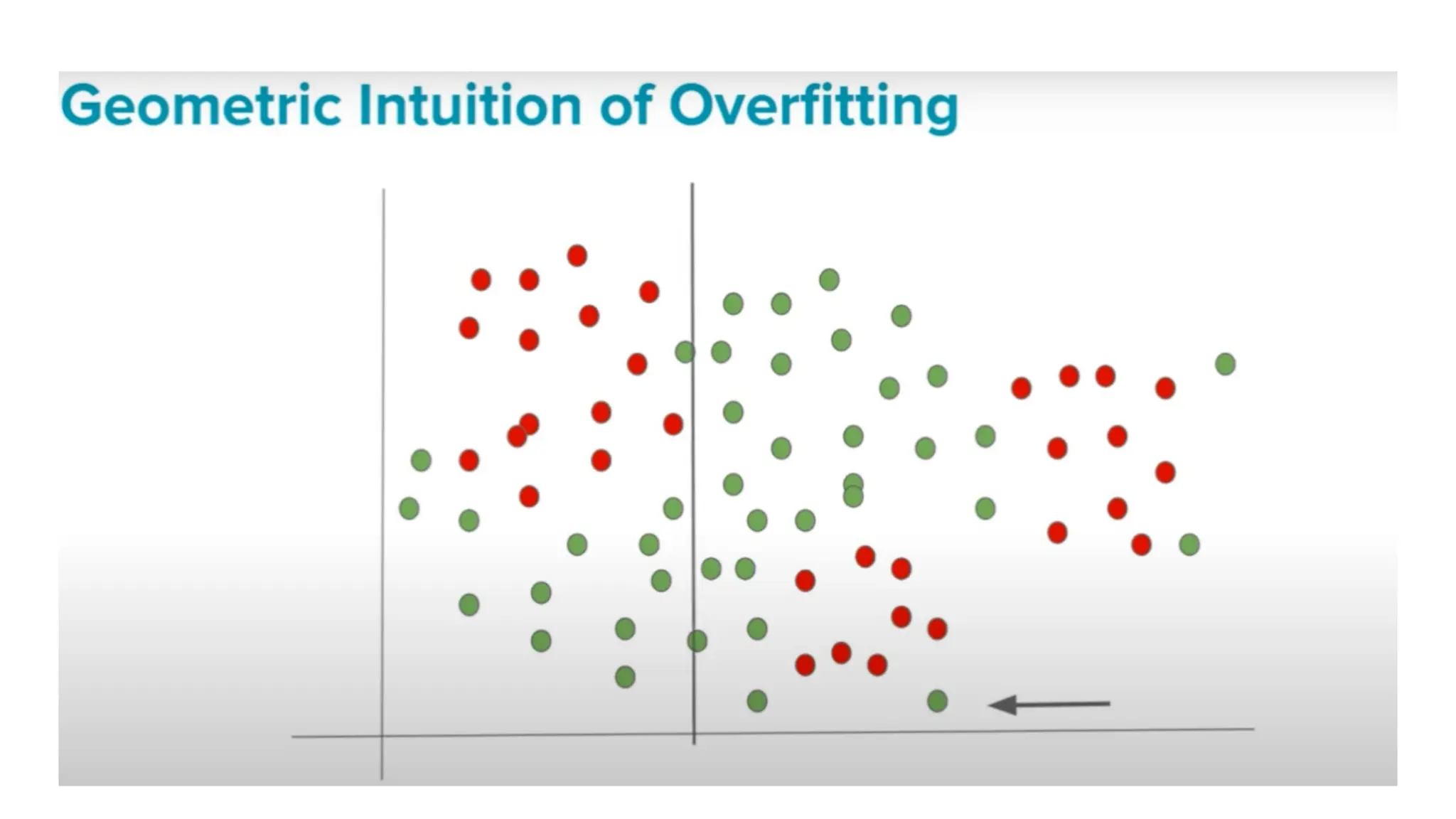

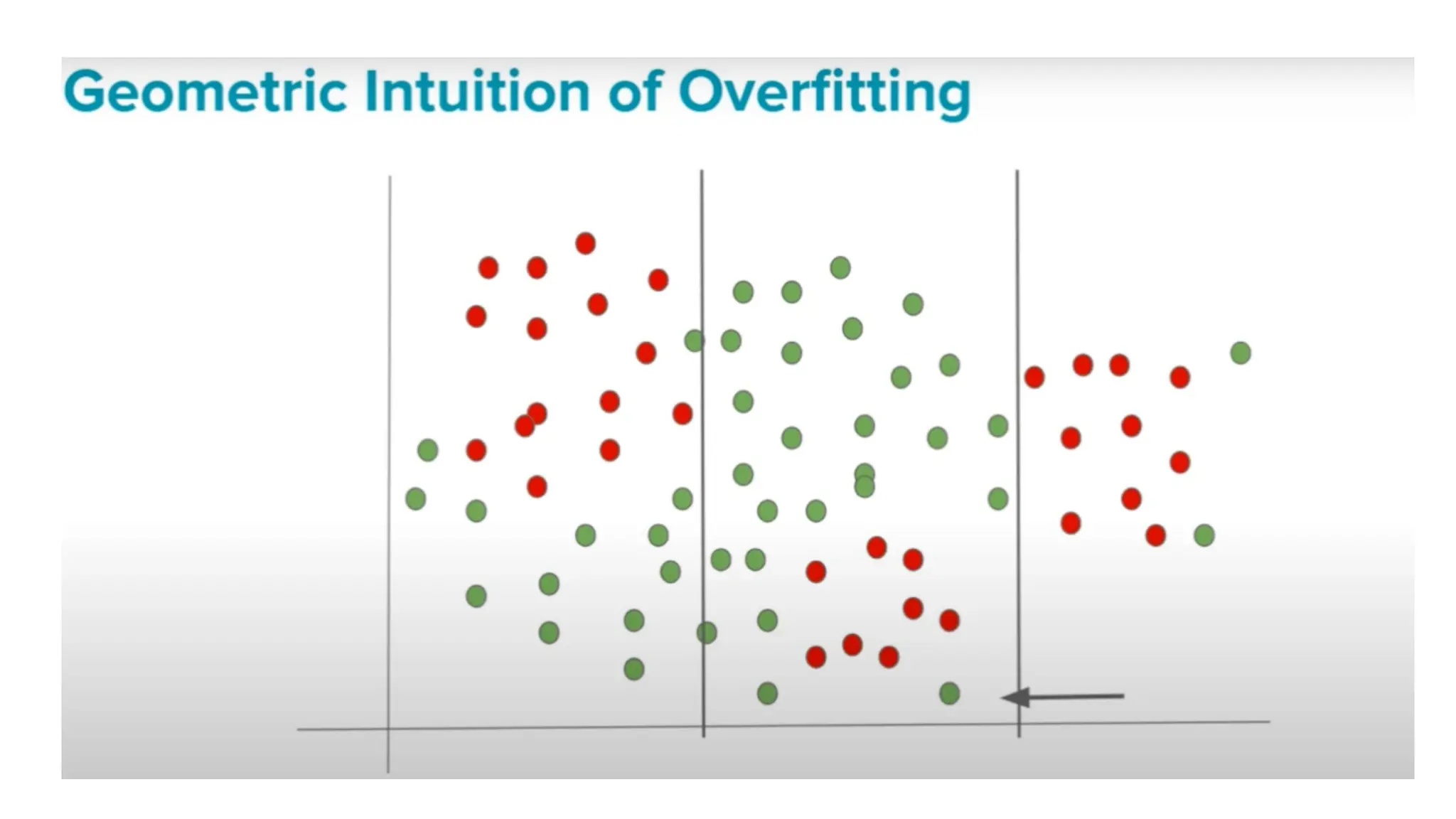

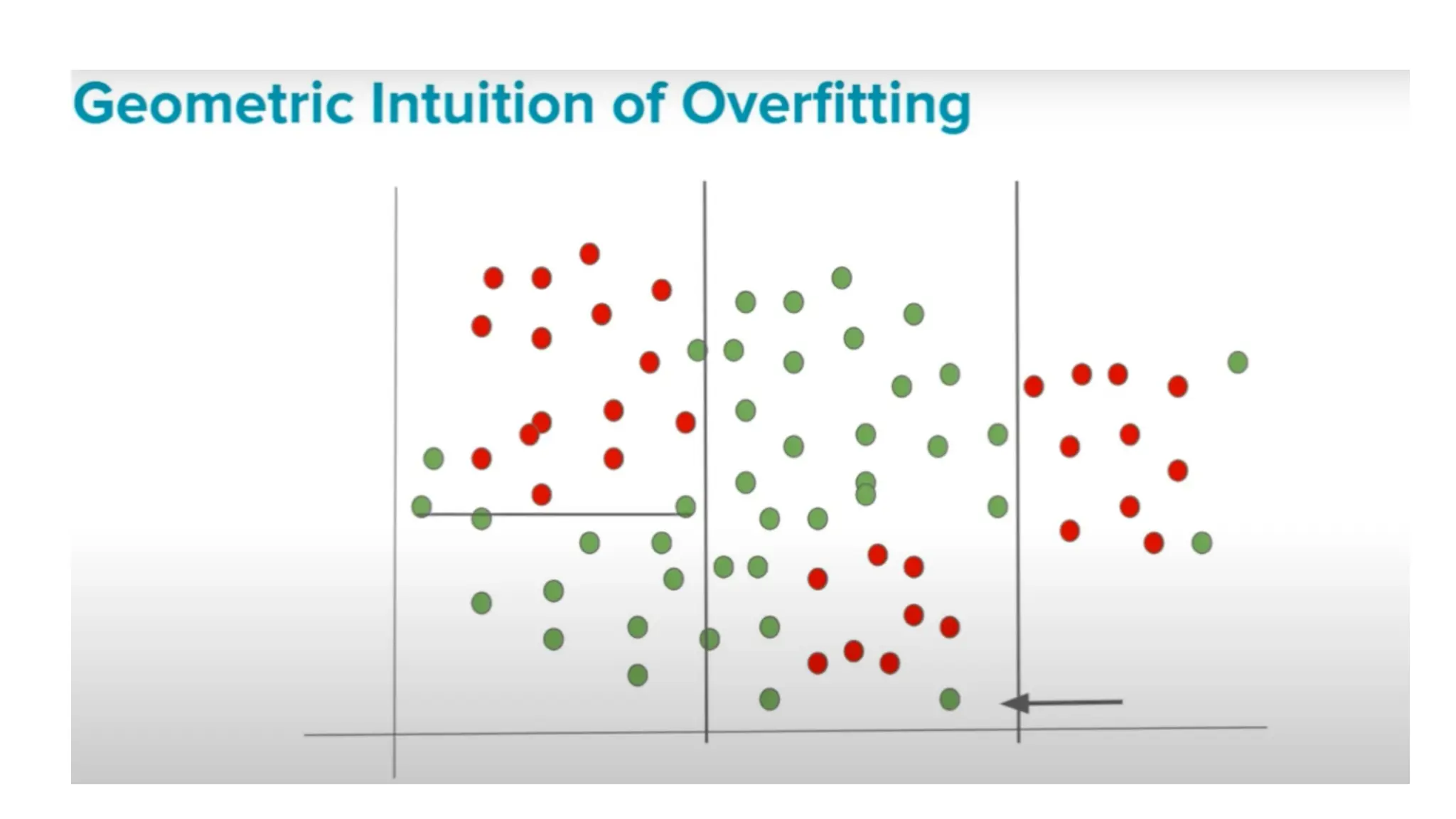

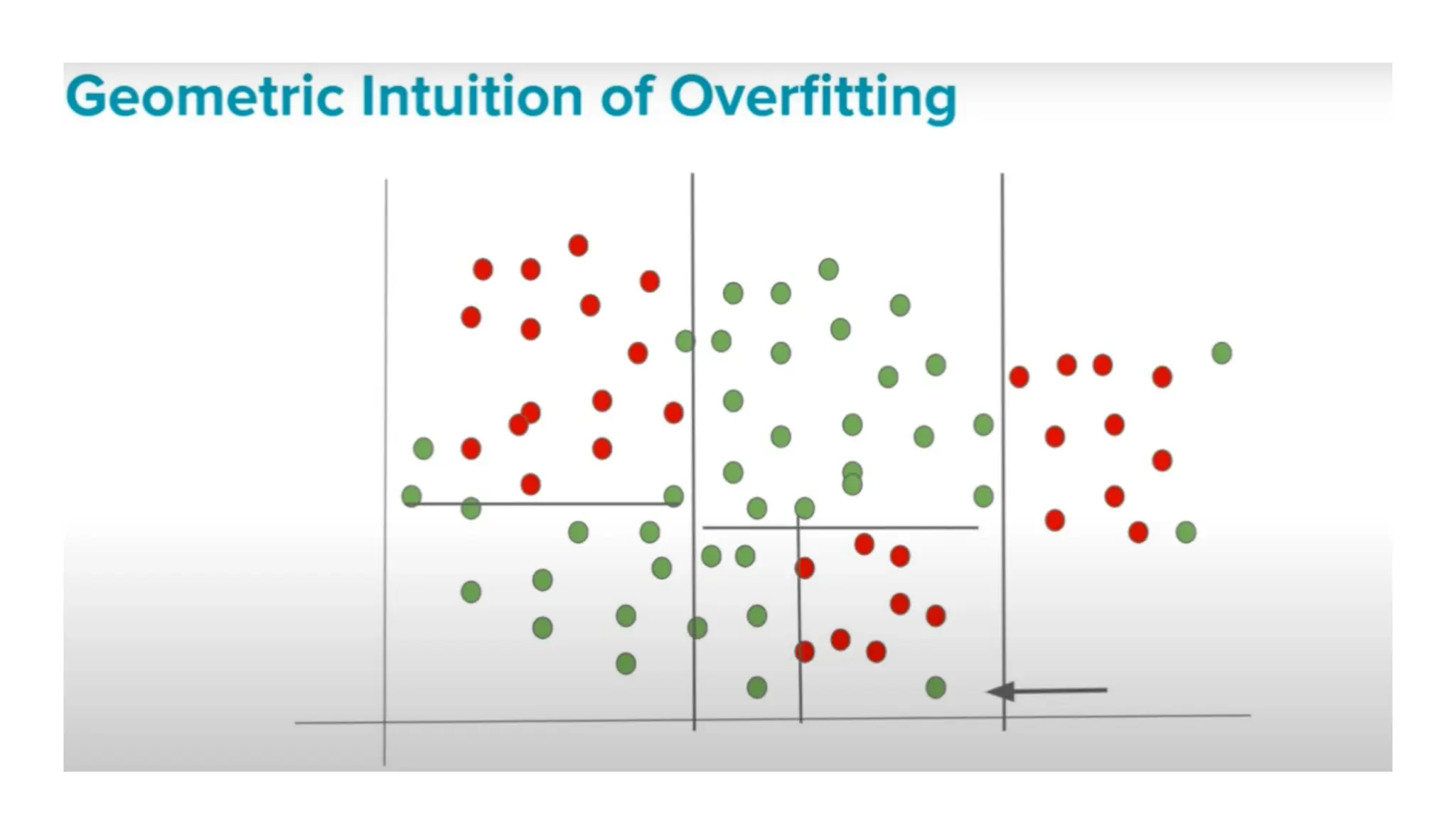

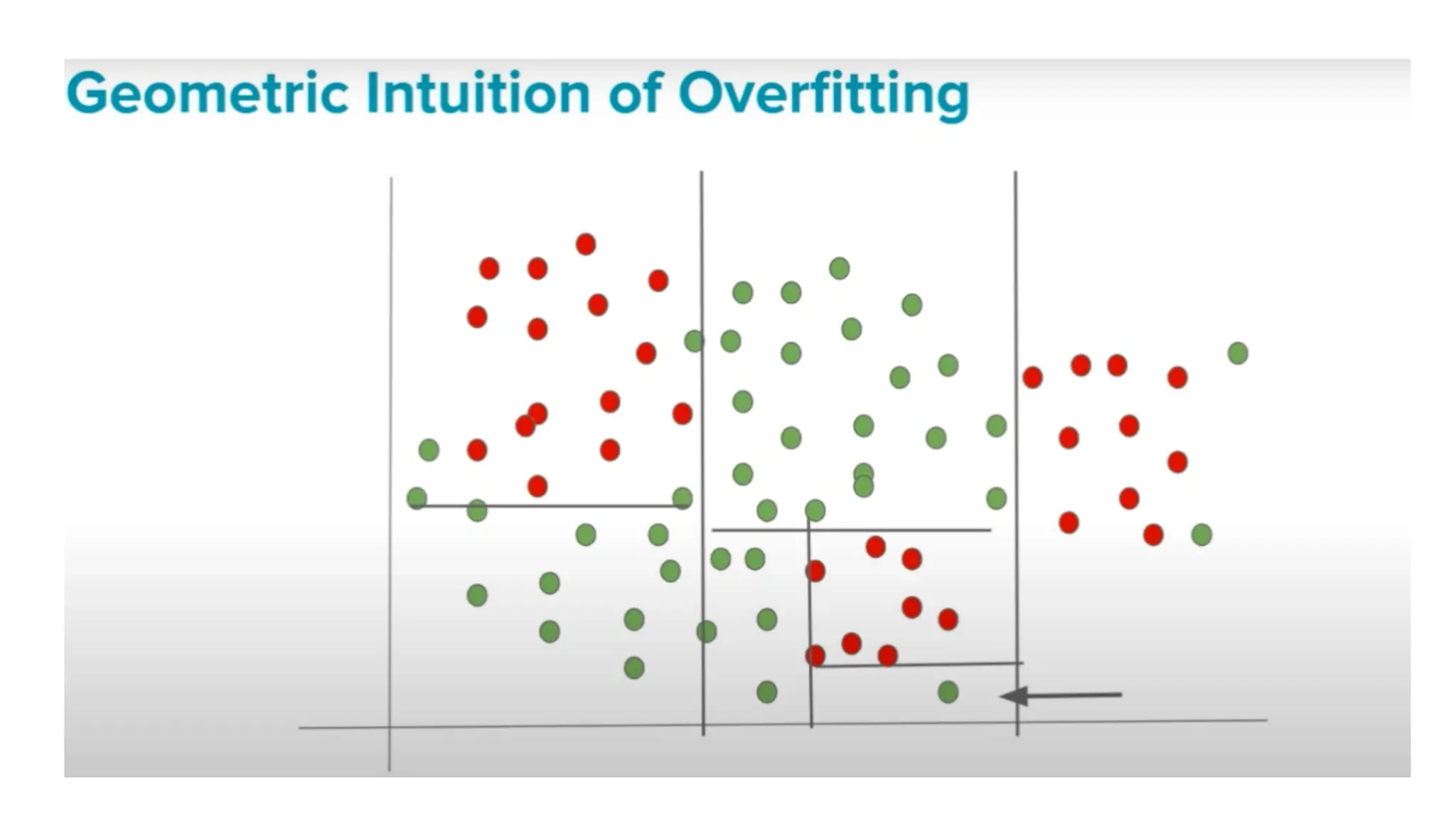

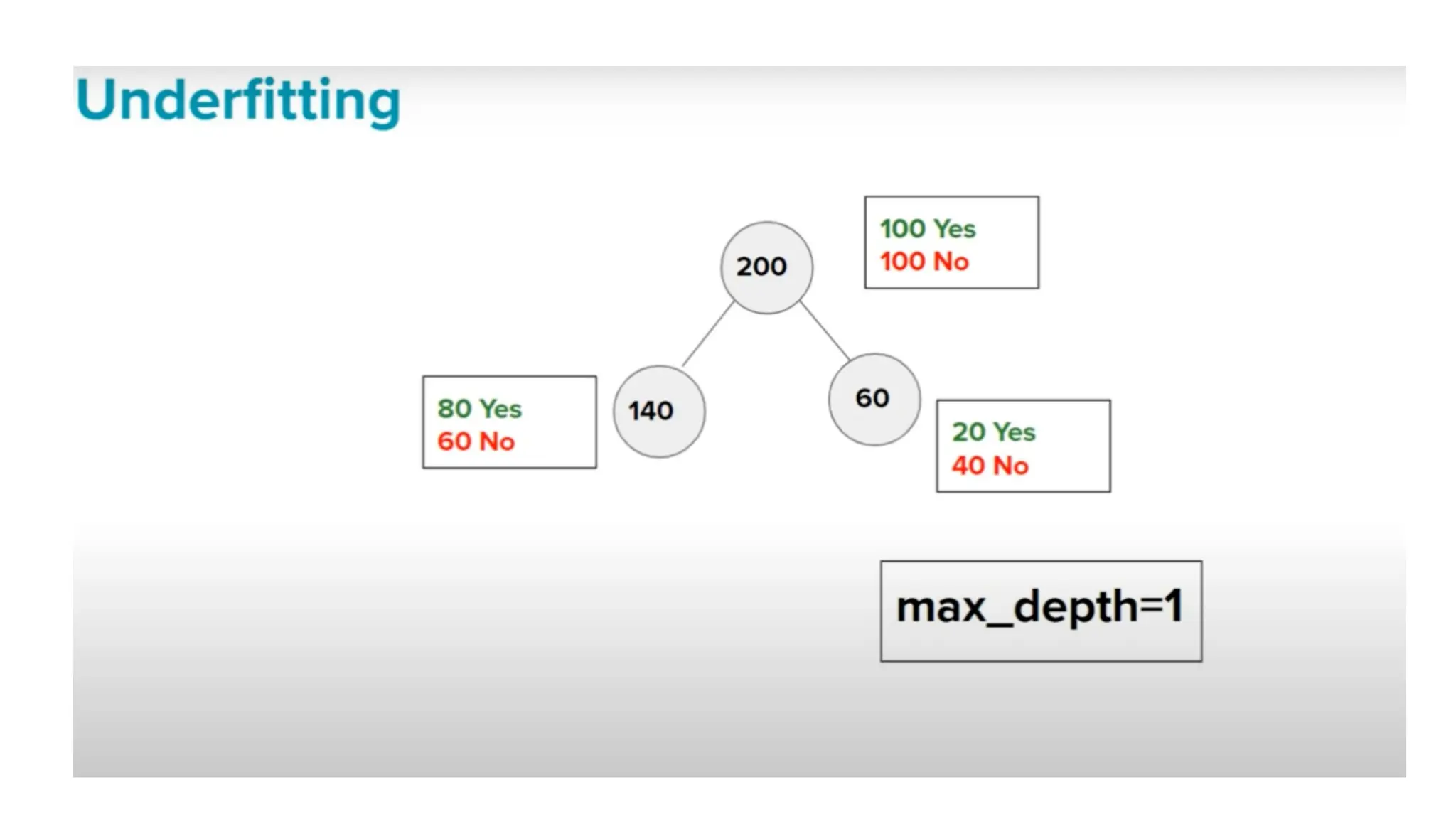

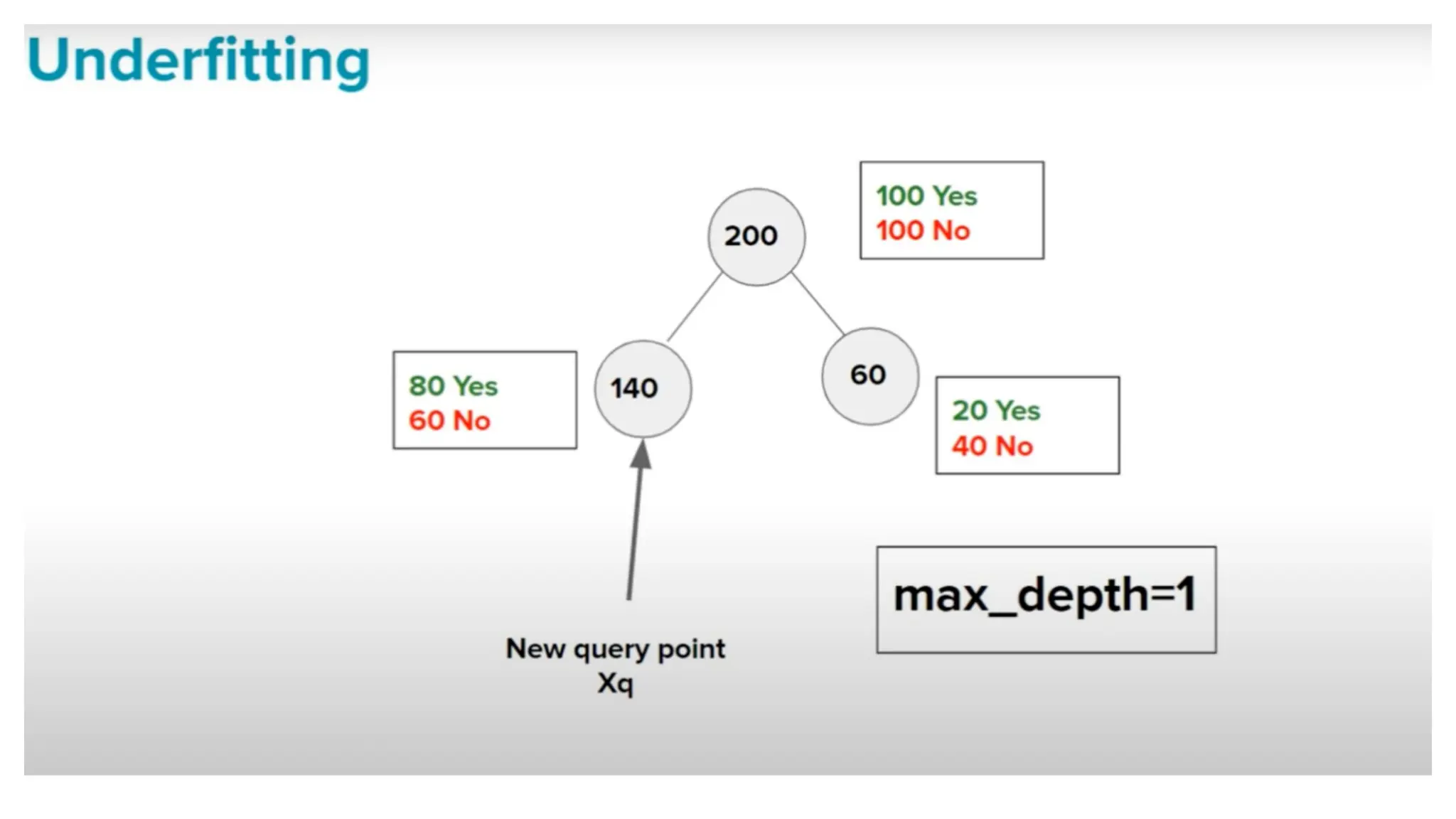

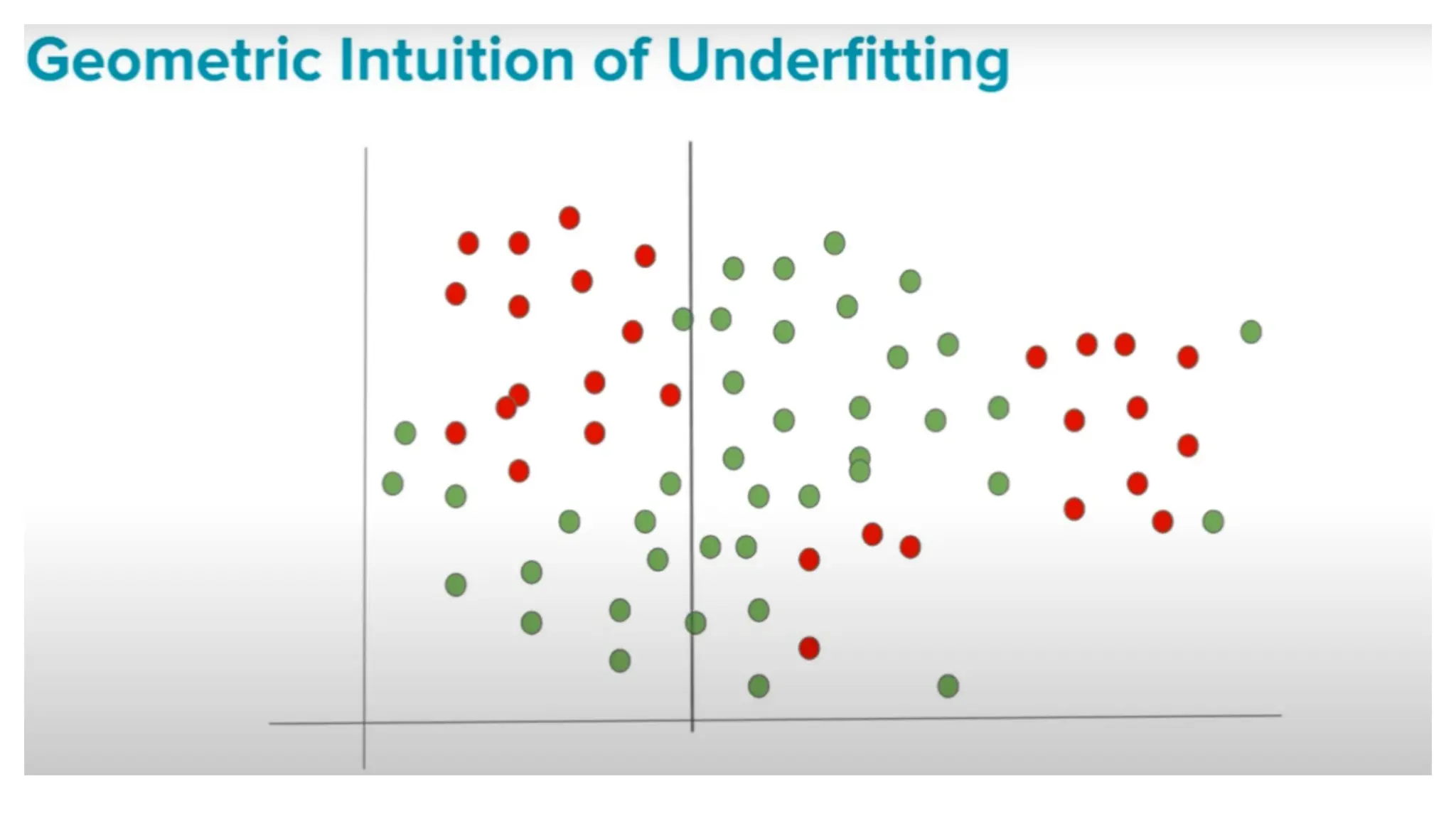

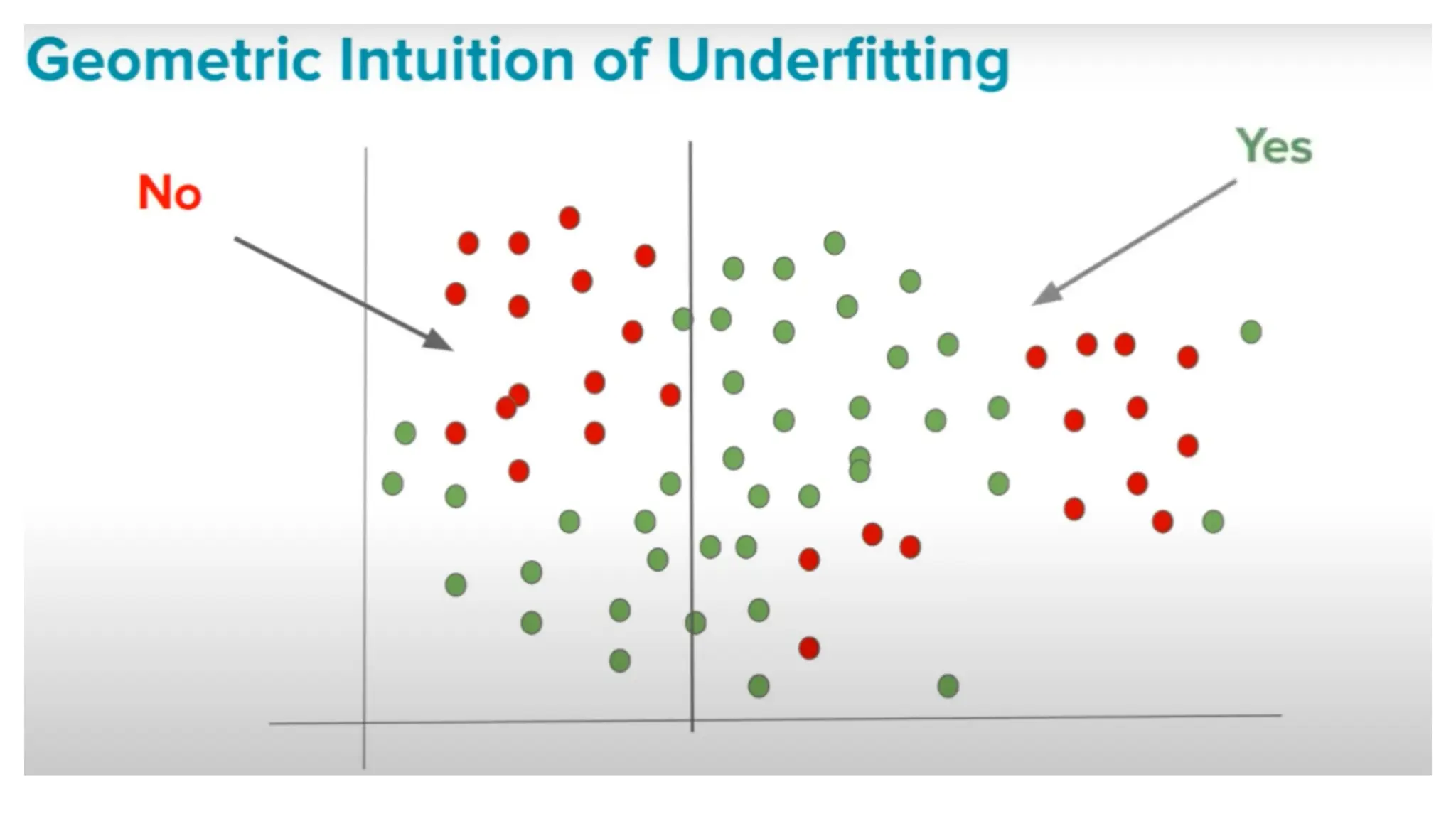

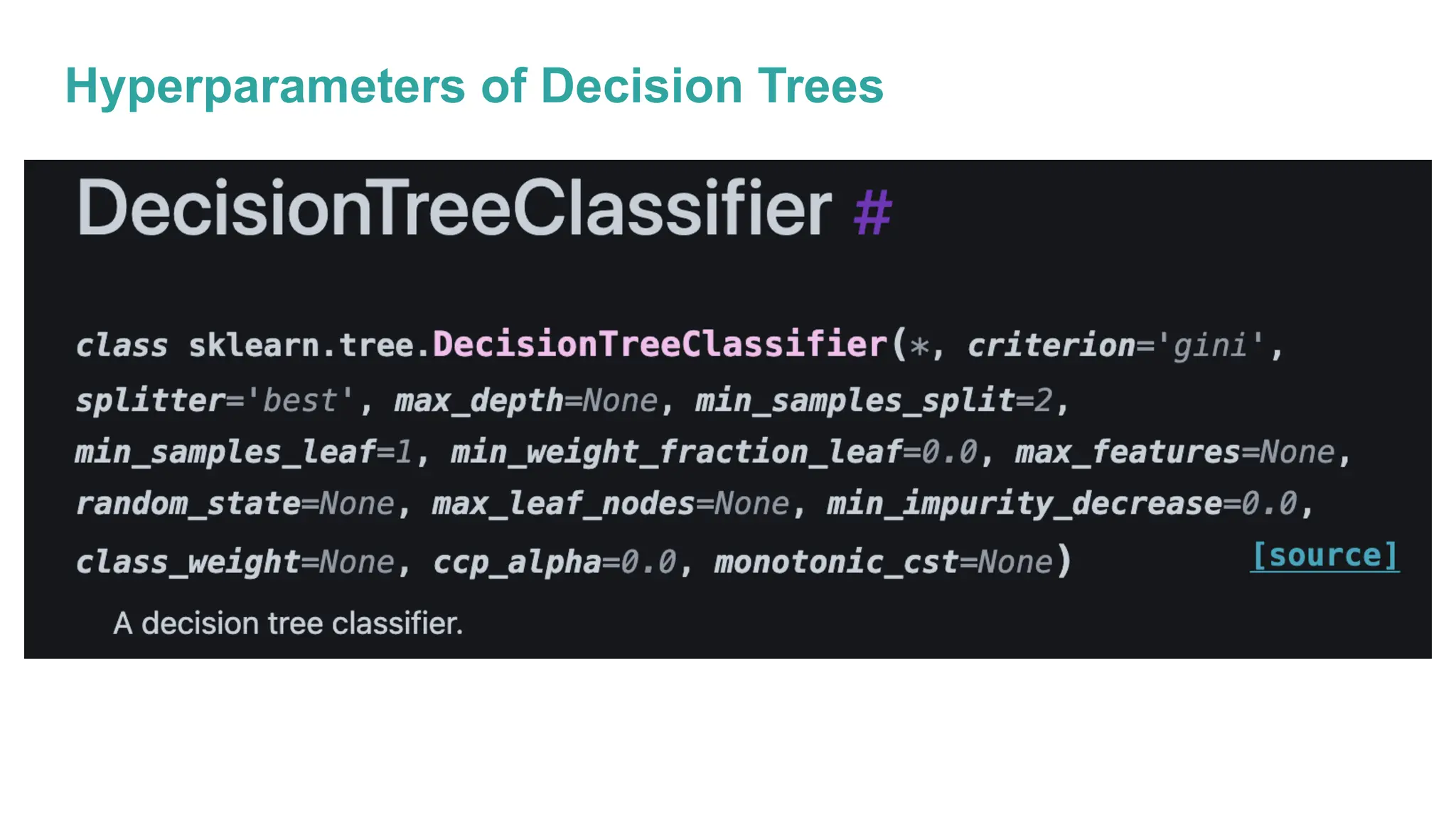

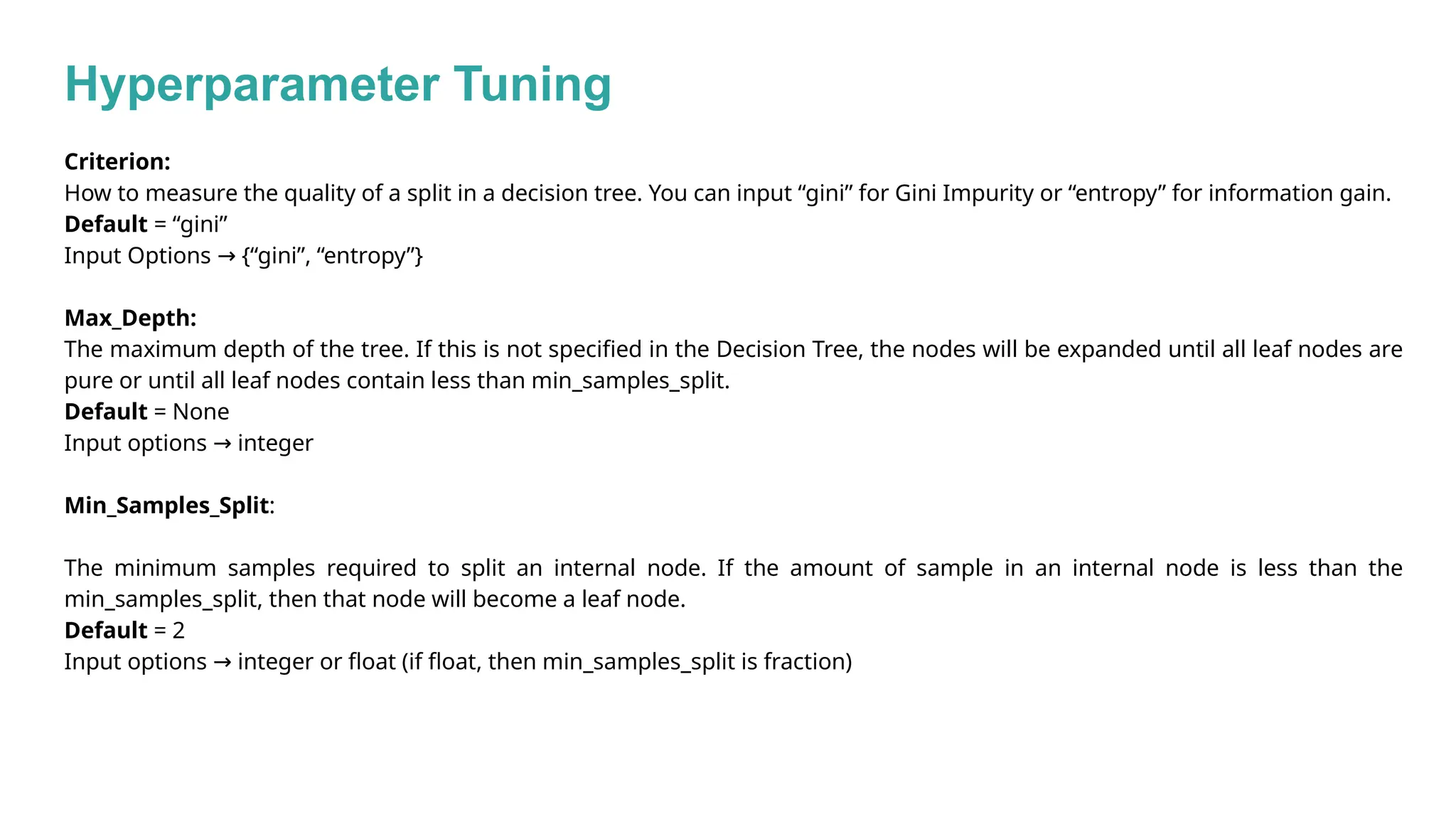

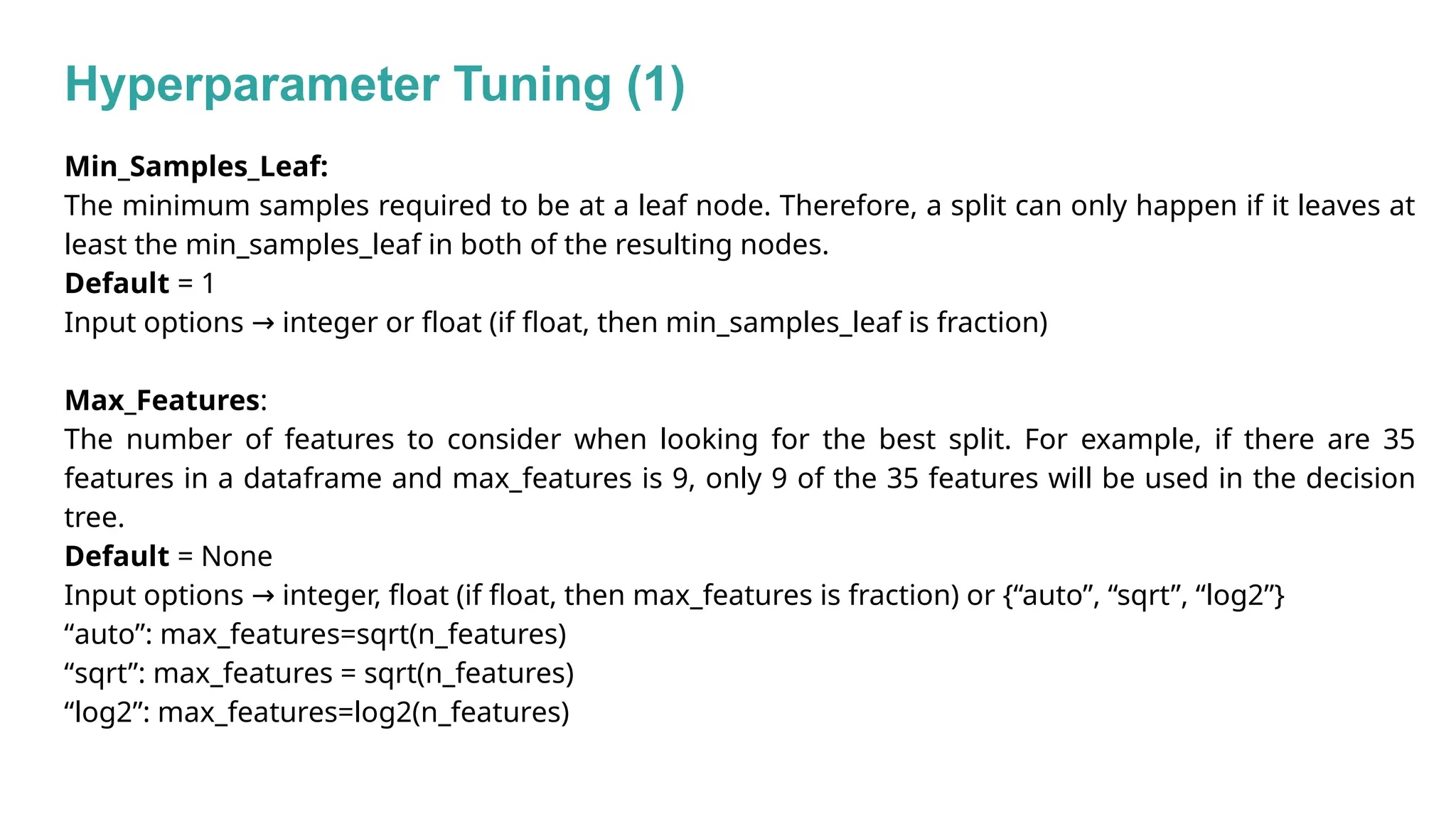

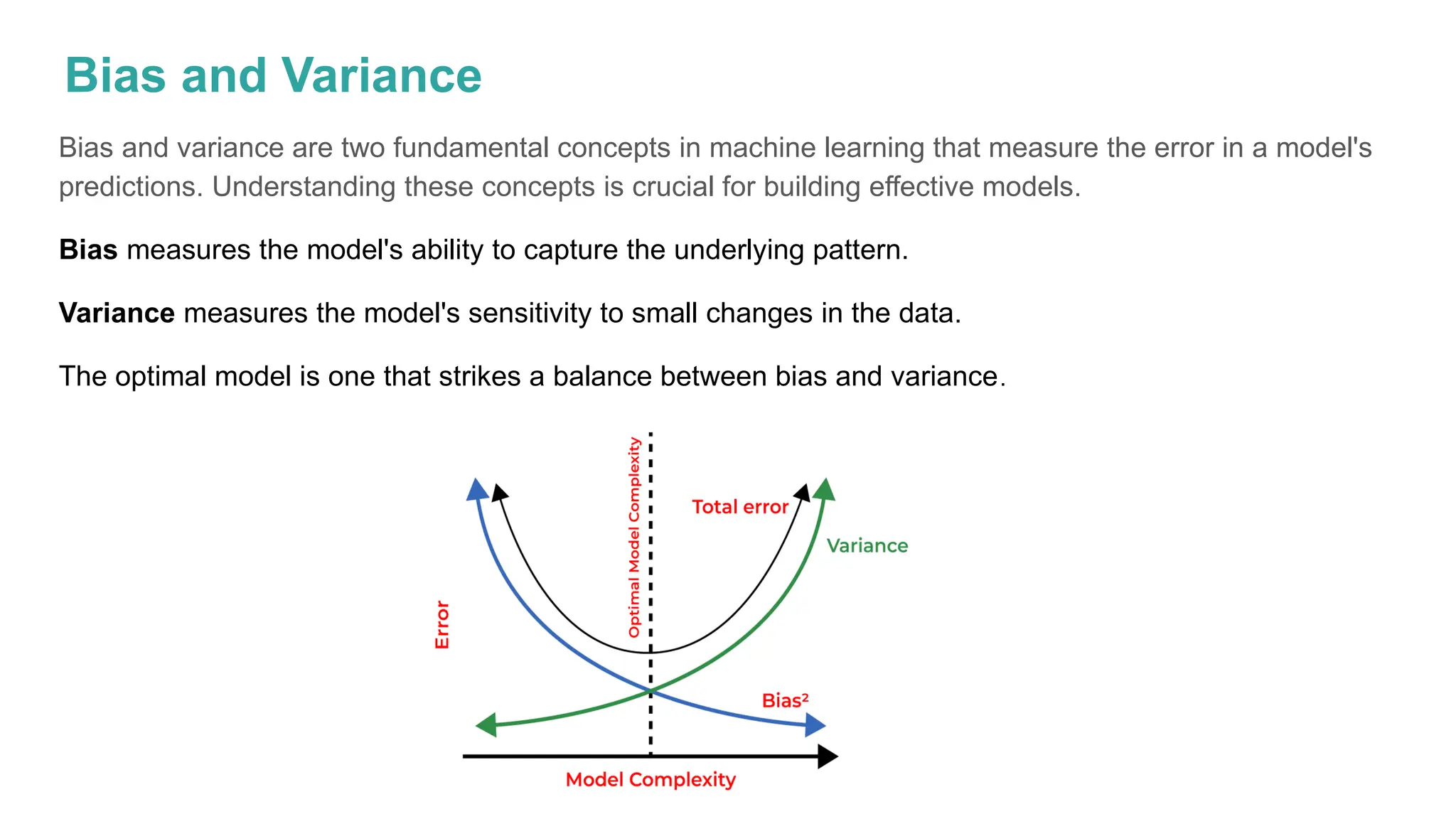

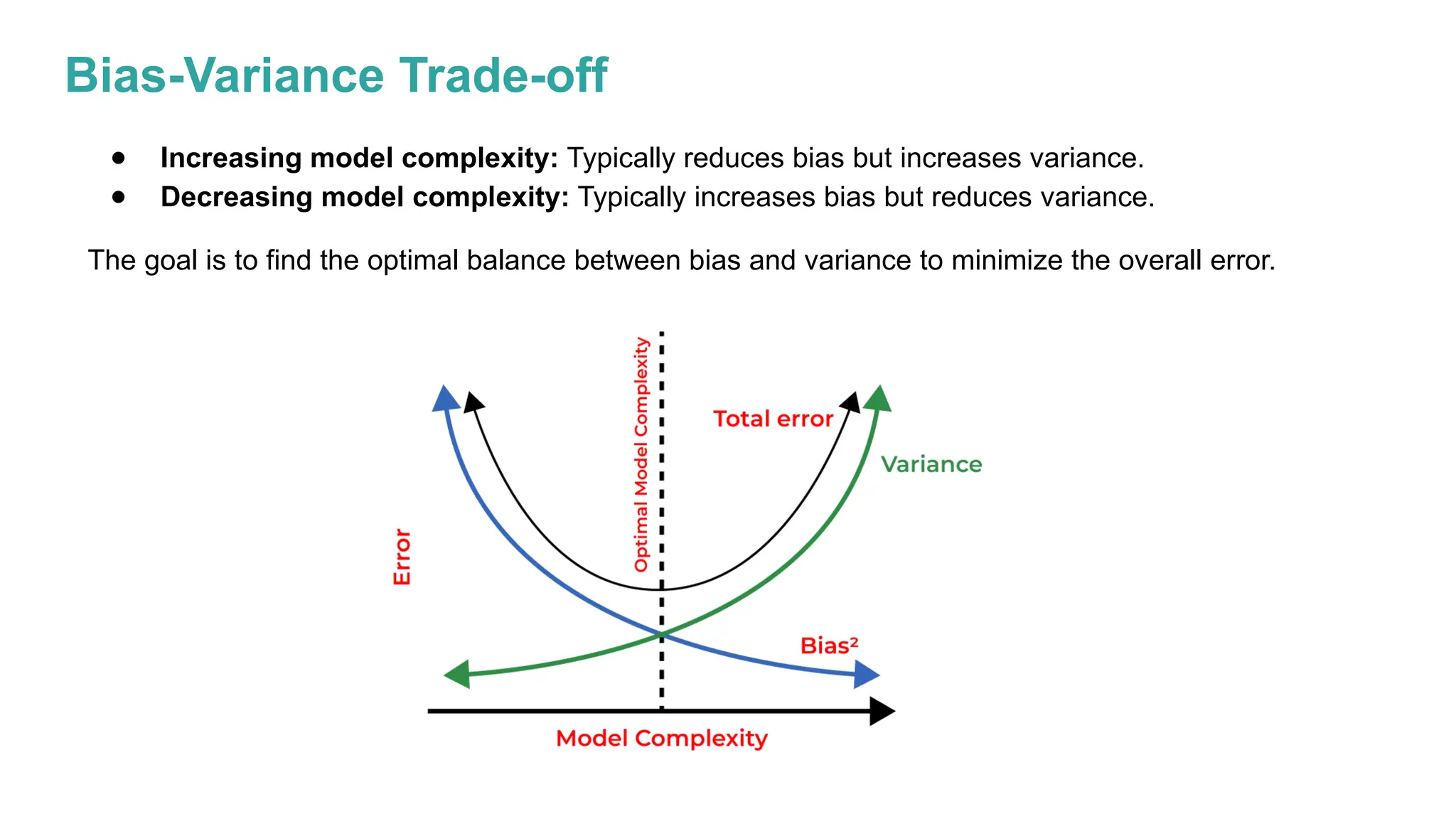

The document discusses key issues in decision tree learning: overfitting, computational complexity, and instability. It also outlines the hyperparameters that affect performance and the methods for mitigating these issues. Finally, it highlights the importance of balancing bias and variance when building an effective model.
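The slides' own code and hyperparameter lists are not reproduced here. The sketch below is therefore an illustration under stated assumptions. It is a small pure-Python, CART-style classifier that splits on Gini impurity and exposes two common hyperparameters, `max_depth` and `min_samples_leaf`, to show how constraining a tree trades variance for bias. All names (`Node`, `build_tree`, `predict`, `count_leaves`, `accuracy`) and the toy dataset are hypothetical and invented for this example. It is not the method used in the slides or the API of any library.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    prediction: int                     # majority class of the samples reaching this node
    feature: Optional[int] = None       # split feature index; None marks a leaf
    threshold: Optional[float] = None   # samples with x[feature] <= threshold go left
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def gini(labels: List[int]) -> float:
    """Gini impurity: 1 - sum of squared class proportions."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def build_tree(X: List[List[float]], y: List[int], depth: int = 0,
               max_depth: Optional[int] = None, min_samples_leaf: int = 1) -> Node:
    node = Node(prediction=Counter(y).most_common(1)[0][0])
    # Stop if the node is pure or the depth limit is reached (None = unlimited).
    if len(set(y)) == 1 or (max_depth is not None and depth >= max_depth):
        return node
    best = None  # (weighted child impurity, feature, threshold)
    for f in range(len(X[0])):
        values = sorted({row[f] for row in X})
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2  # candidate threshold between adjacent distinct values
            left = [yi for row, yi in zip(X, y) if row[f] <= t]
            right = [yi for row, yi in zip(X, y) if row[f] > t]
            if len(left) < min_samples_leaf or len(right) < min_samples_leaf:
                continue  # split would create a leaf that is too small
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if best is None or score < best[0]:
                best = (score, f, t)
    if best is None:
        return node  # no admissible split (e.g. all feature values identical)
    _, f, t = best
    left_idx = [i for i, row in enumerate(X) if row[f] <= t]
    right_idx = [i for i, row in enumerate(X) if row[f] > t]
    node.feature, node.threshold = f, t
    node.left = build_tree([X[i] for i in left_idx], [y[i] for i in left_idx],
                           depth + 1, max_depth, min_samples_leaf)
    node.right = build_tree([X[i] for i in right_idx], [y[i] for i in right_idx],
                            depth + 1, max_depth, min_samples_leaf)
    return node


def predict(node: Node, x: List[float]) -> int:
    while node.feature is not None:
        node = node.left if x[node.feature] <= node.threshold else node.right
    return node.prediction


def count_leaves(node: Node) -> int:
    if node.feature is None:
        return 1
    return count_leaves(node.left) + count_leaves(node.right)


def accuracy(tree: Node, X: List[List[float]], y: List[int]) -> float:
    return sum(predict(tree, x) == t for x, t in zip(X, y)) / len(y)


# Invented toy data: the clean rule is "label 1 iff x >= 10"; the training
# labels at x = 3 and x = 15 are flipped to simulate noise.
X_train = [[float(i)] for i in range(20)]
y_train = [int(i >= 10) for i in range(20)]
y_train[3], y_train[15] = 1, 0
X_test = [[i + 0.25] for i in range(20)]
y_test = [int(i >= 10) for i in range(20)]

full = build_tree(X_train, y_train)                # unlimited depth
stump = build_tree(X_train, y_train, max_depth=1)  # heavily constrained
print(count_leaves(full), accuracy(full, X_train, y_train), accuracy(full, X_test, y_test))
# prints: 6 1.0 0.9
print(count_leaves(stump), accuracy(stump, X_train, y_train), accuracy(stump, X_test, y_test))
# prints: 2 0.9 1.0
```

The fully grown tree carves out single-sample leaves for the two noisy labels. As a result, it fits the training set perfectly but misclassifies the test points that land in those leaves, which is a high-variance, overfit model. The depth-1 tree cannot represent the noise, so it gives up some training accuracy and generalizes better on this toy data. The scan over all candidate thresholds at every node also hints at the computational cost of greedy tree induction.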