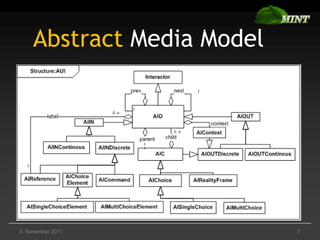

The document discusses the model-based design and generation of gesture-based user interface navigation controls, emphasizing the need for a precise and declarative modeling language to enable flexible prototyping of multimodal interfaces. It outlines a use case involving a gesture-based navigation system and introduces custom notations for modeling abstract media and behaviors. The conclusions highlight the advantages of detailed modeling for multimodal interactions and the intention to develop open-source tools and frameworks for further enhancement.

![Slide 5, "Use Case: Test and Evaluation": state chart of the gesture-based interface navigation (IN:hand_gestures). Top-level states NoHands and OneHand, switched by the guards one_hand and no_hands. Labels legible inside OneHand: Navigation (Predecessor, Successor; previous, next, start_p, start_n), Ticker (started; timed transition 1s/tick; closer, farer), Command (wait_one, selected, confirmed; select, confirm). Slide text: rapid model-based design and comparison of three variants. 4. November 2011](https://image.slidesharecdn.com/gesturecontrolfeuerstack-111104090644-phpapp02/85/Model-based-Design-and-Generation-of-a-Gesture-based-User-Interface-Navigation-Control-5-320.jpg)

![Slide 9, "Abstract Behavior Model": state chart of AUI:AIO:AIChoiceElement:AISingleChoiceElement. Labels legible in the diagram: initialized, organized, suspended, Presenting (focused, dragging, listed), Selection with a history state H (unchosen, chosen). Events: organize, present, suspend, focus, defocus, next||prev||parent, drag, drop, choose (guard in(focused)), unchoose. Actions: aio=find(act); aio.focus and aios=find(parent.childs.chosen); aios.all.unchoose](https://image.slidesharecdn.com/gesturecontrolfeuerstack-111104090644-phpapp02/85/Model-based-Design-and-Generation-of-a-Gesture-based-User-Interface-Navigation-Control-9-320.jpg)
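
The single-choice behavior on this slide can be read as: choosing an element first unchooses every sibling that is currently chosen. The Python sketch below illustrates that reading only. The class and method names (`Choice`, `ChoiceGroup`, `add`, `chosen_names`) are hypothetical, and attaching the `aios.all.unchoose` action to the `choose` transition is an assumption, since the extracted slide text does not show clearly which transition carries it.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Choice:
    """Hypothetical stand-in for one AISingleChoiceElement."""
    name: str
    focused: bool = False
    chosen: bool = False
    parent: Optional["ChoiceGroup"] = field(default=None, repr=False)

    def choose(self) -> bool:
        # Guard from the slide: choose is only accepted while in(focused).
        if not self.focused:
            return False
        # Assumed action: aios = find(parent.childs.chosen); aios.all.unchoose
        if self.parent is not None:
            for sibling in self.parent.children:
                if sibling is not self and sibling.chosen:
                    sibling.chosen = False
        self.chosen = True
        return True


@dataclass
class ChoiceGroup:
    """Hypothetical parent container holding the choice elements."""
    children: List[Choice] = field(default_factory=list)

    def add(self, name: str) -> Choice:
        child = Choice(name, parent=self)
        self.children.append(child)
        return child

    def chosen_names(self) -> List[str]:
        return [c.name for c in self.children if c.chosen]
```

With two elements in one group, choosing the second after the first leaves only the second chosen, and an unfocused element rejects `choose`.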

![Slide 10, "Mode Model (Example: Gesture Interactor)": state chart of IR:IN:HandGesture:FlexibleTicker. Top-level states NoHands and Right Hand, switched by right_hand_appeared and right_hand_disappeared. Labels legible inside Right Hand: Navigation (Predecessor, Successor; previous, next, start_p, start_n), Speed (started; entry/start_ticker, exit/stop_ticker; timed transitions 1s/tick and t/tick), Command (wait_one, selected, confirmed; select, confirm), SpeedAdjustment with states normal (entry: t = 1200ms; restart_ticker), faster (entry: t = 1000ms; restart_ticker) and fastest (entry: t = 800ms; restart_ticker), connected by closer and farer](https://image.slidesharecdn.com/gesturecontrolfeuerstack-111104090644-phpapp02/85/Model-based-Design-and-Generation-of-a-Gesture-based-User-Interface-Navigation-Control-10-320.jpg)
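
The SpeedAdjustment region of this slide can be approximated in a few lines of Python. The three interval values and the event names `closer` and `farer` are taken from the slide. Everything else is an assumption made for illustration: the class name, the `restarts` log that stands in for `restart_ticker`, and the choice to ignore `closer` in `fastest` and `farer` in `normal`.

```python
from dataclasses import dataclass, field
from typing import List

# Tick interval per speed level, as given on the slide.
INTERVALS_MS = {"normal": 1200, "faster": 1000, "fastest": 800}
# Assumed ordering: each `closer` event moves one step to the right.
ORDER = ["normal", "faster", "fastest"]


@dataclass
class SpeedAdjustment:
    """Hypothetical sketch of the SpeedAdjustment states."""
    level: str = "normal"
    # Stand-in for restart_ticker: records the interval used on each restart.
    restarts: List[int] = field(default_factory=list)

    @property
    def interval_ms(self) -> int:
        return INTERVALS_MS[self.level]

    def _enter(self, level: str) -> None:
        # Entry action from the slide: set t, then restart the ticker.
        self.level = level
        self.restarts.append(self.interval_ms)

    def handle(self, event: str) -> int:
        """Process a `closer` or `farer` event; return the current interval."""
        i = ORDER.index(self.level)
        if event == "closer" and i < len(ORDER) - 1:
            self._enter(ORDER[i + 1])
        elif event == "farer" and i > 0:
            self._enter(ORDER[i - 1])
        # Other events, and moves past either end, are ignored (assumption).
        return self.interval_ms
```

Starting from `normal`, two `closer` events reach the 800 ms interval, and a following `farer` returns to 1000 ms. The ticker is restarted only when the level actually changes.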