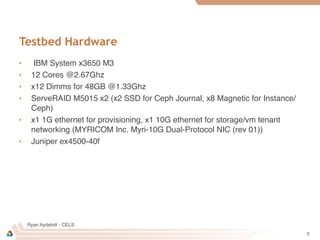

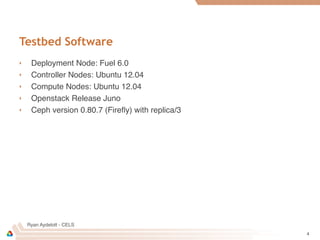

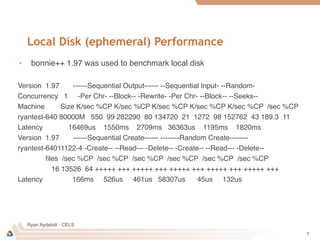

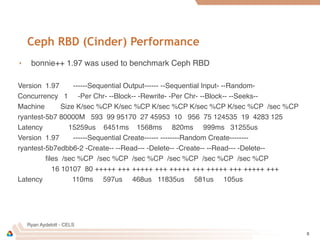

The document describes a testbed for a 13-node OpenStack cluster deployed with Mirantis Fuel 6.0, along with its hardware specifications. It reports network and disk performance tests run with iperf and bonnie++ respectively, giving the observed performance ranges and the issues encountered, particularly across different MTU settings and configurations. The conclusion notes that network performance was solid but could be improved with better hardware and a better-optimized Ceph setup.