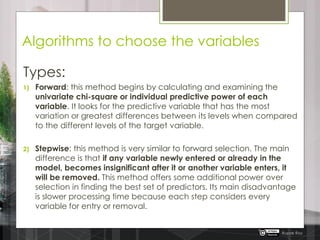

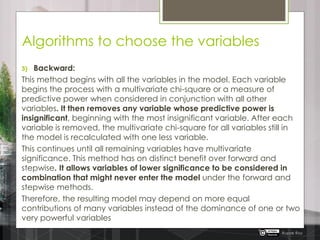

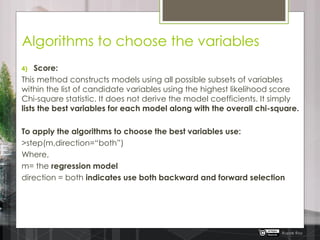

The document covers four methods for feature (variable) selection in machine learning: forward, backward, stepwise, and score methods. Each method takes a different approach to choosing predictive variables and comes with its own benefits and limitations. The document also provides the step function used to apply these algorithms.
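To make the forward method concrete, here is a minimal sketch in Python. It is not the step function the document refers to. The helper names `aic_ols` and `forward_select` are hypothetical, and the choice of ordinary least squares scored by AIC is an assumption made only for illustration. The loop starts from an intercept-only model, adds the single column that lowers the criterion most, and stops when no remaining column improves it.

```python
import numpy as np


def aic_ols(X, y):
    """AIC (up to an additive constant) of an OLS fit with an intercept.

    Hypothetical helper: the selection criterion is an assumption,
    not something specified by the document.
    """
    n = len(y)
    # Works for zero selected columns too: the design is then just the intercept.
    design = np.column_stack([np.ones(n), X])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    rss = float(np.sum((y - design @ beta) ** 2))
    k = design.shape[1]  # number of fitted coefficients
    return n * np.log(rss / n) + 2 * k


def forward_select(X, y):
    """Greedy forward selection over the columns of X.

    Returns the selected column indices (in the order they were added)
    and the criterion value of the final model.
    """
    selected = []
    remaining = list(range(X.shape[1]))
    best = aic_ols(X[:, selected], y)  # intercept-only baseline
    while remaining:
        # Score every candidate model that adds exactly one more column.
        score, j = min((aic_ols(X[:, selected + [c]], y), c) for c in remaining)
        if score >= best:
            break  # no candidate improves the criterion
        selected.append(j)
        remaining.remove(j)
        best = score
    return selected, best


# Synthetic data: only columns 0 and 2 actually drive the response.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5))
y = 3.0 * X[:, 0] - 2.0 * X[:, 2] + rng.normal(scale=0.1, size=200)

selected, best = forward_select(X, y)
print("selected columns:", selected)
```

Backward selection could be sketched the same way by starting from all columns and removing the one whose removal lowers the criterion most. A stepwise variant would consider both an add and a remove move at each iteration.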