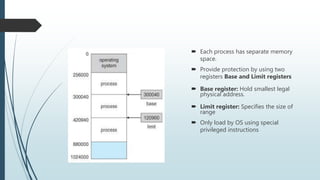

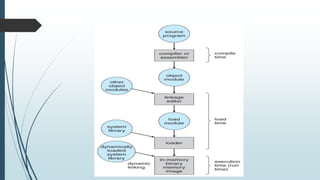

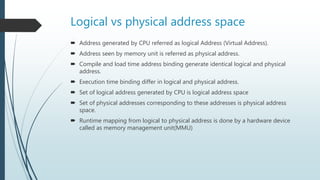

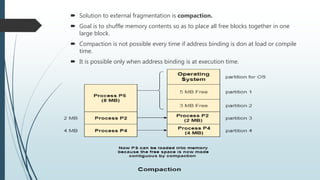

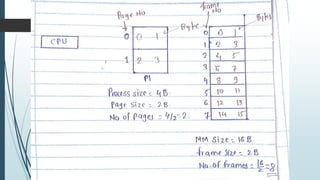

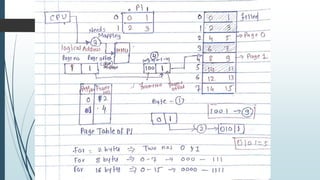

This document discusses the memory management strategies used in operating systems. It first describes the basic hardware involved: main memory, registers, and cache. It then covers address binding, logical versus physical address spaces, and dynamic loading and dynamic linking. The remainder of the document presents paging as a memory management strategy, including hardware support through page tables, memory protection using valid-invalid bits, and the sharing of pages between processes.
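As a rough illustration of the paging ideas summarized above, the sketch below splits a logical address into a page number and an offset, checks the page's valid-invalid bit, and maps the page to a physical frame. It also shows page sharing: two processes whose page tables point the same page at the same frame. The 4096-byte page size, the `PageTableEntry`/`translate`/`InvalidPageAccess` names, and the frame numbers are hypothetical choices for illustration, not taken from the slides.

```python
from dataclasses import dataclass

# Hypothetical page size for illustration; real systems vary.
PAGE_SIZE = 4096


@dataclass
class PageTableEntry:
    frame: int   # physical frame number this page maps to
    valid: bool  # valid-invalid bit: False means the page is not part of the process's logical address space


class InvalidPageAccess(Exception):
    """Raised when a logical address refers to an invalid page (a protection trap)."""


def translate(page_table: list, logical_address: int) -> int:
    # Split the logical address into (page number, offset within the page).
    page_number, offset = divmod(logical_address, PAGE_SIZE)
    # Protection check: the page must exist in the table and be marked valid.
    if page_number >= len(page_table) or not page_table[page_number].valid:
        raise InvalidPageAccess(f"invalid page {page_number}")
    # Physical address = frame base + unchanged offset.
    return page_table[page_number].frame * PAGE_SIZE + offset


# Frame 5 is assumed to hold shared read-only code; both processes map their page 0 to it.
proc_a = [PageTableEntry(5, True), PageTableEntry(2, True), PageTableEntry(0, False)]
proc_b = [PageTableEntry(5, True), PageTableEntry(7, True)]

print(translate(proc_a, 100))             # 20580 (page 0 -> frame 5)
print(translate(proc_b, 100))             # 20580 (same shared frame)
print(translate(proc_a, PAGE_SIZE + 8))   # 8200 (page 1 -> frame 2)
```

Accessing page 2 of `proc_a` raises `InvalidPageAccess`, which is how the valid-invalid bit traps references outside a process's legal address space in this simplified model.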