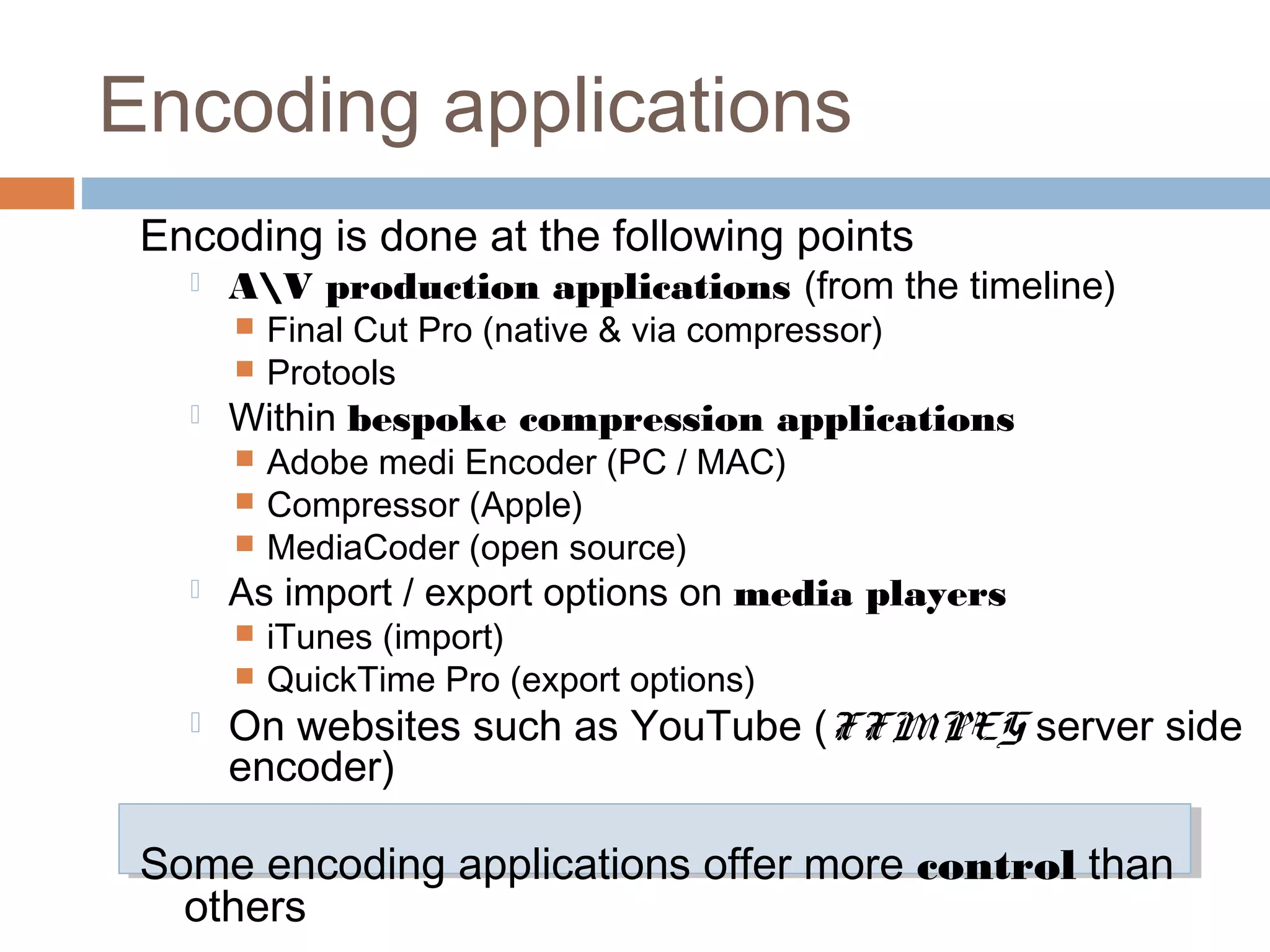

Encoding refers to converting media files from one format to another, usually to compress them for storage and transmission. Compression reduces file size by removing redundant data or, in lossy schemes, data the viewer or listener is unlikely to miss. Lossy encoding, common for audio, video, and images, permanently discards some data, so the decoded result is an approximation of the original, and quality degrades as compression becomes more aggressive. Lossless encoding removes only statistical redundancy, such as repeated runs of data, so the original can be reconstructed exactly. The goals of encoding are smaller file sizes and compatibility with target devices and software.
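
The difference between the two approaches can be checked with a round trip. The sketch below is illustrative only: the sample bytes are made up, `zlib` (DEFLATE) stands in for a lossless encoder, and dropping the low four bits of each sample is a toy stand-in for lossy encoding, not how real audio or video codecs decide what to discard.

```python
import zlib

# Hypothetical sample data: 8-bit "samples" with plenty of repetition.
original = bytes([10, 10, 10, 11, 11, 200, 201, 203, 203, 203] * 100)

# Lossless: zlib removes redundancy, and decompression restores every byte.
packed = zlib.compress(original)
restored = zlib.decompress(packed)
lossless_ok = restored == original

# Lossy (toy stand-in): zero the low 4 bits of every sample.
# The discarded bits cannot be recovered afterwards.
quantized = bytes(b & 0xF0 for b in original)
lossy_ok = quantized == original

print("original bytes:", len(original), "compressed bytes:", len(packed))
print("lossless round trip is exact:", lossless_ok)
print("lossy result equals original:", lossy_ok)
```

The lossless round trip returns the input byte for byte, while the quantized copy differs from it permanently, which is the trade-off the paragraph above describes.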

![Lossless and lossy compression](https://image.slidesharecdn.com/mediaencoding2013-14-130922131533-phpapp02/75/Media-Encoding-9-2048.jpg)

Lossless and lossy compression

Lossless refers to any file type that decodes to a true (verbatim) copy of the original. No quality is lost when saving a file in the following formats:

- Lossless audio: FLAC, WavPack, Monkey’s Audio, ALAC
- Lossless video: Animation codec, Huffyuv, uncompressed
- Lossless graphics: GIF, PNG, TIFF

A basic example of a lossless compression method is RLE (run-length encoding). Using the following as an abstraction of the data used to store a segment of audio:

[AAAAABBCCCCCDEEEEEEE] = 20 bytes

RLE looks at the "run lengths", that is, runs of repeated adjacent data, and summarises them as A5B2C5D1E7 = 10 bytes.
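
The RLE example from the slide can be reproduced in a few lines. This is a minimal sketch, assuming the slide's symbol-then-count notation and symbols that are never digits; the function names `rle_encode` and `rle_decode` are illustrative, and real formats that use run-length coding store runs in their own binary layouts.

```python
from itertools import groupby


def rle_encode(data: str) -> str:
    """Collapse each run of identical adjacent characters into symbol + count."""
    return "".join(f"{symbol}{len(list(run))}" for symbol, run in groupby(data))


def rle_decode(encoded: str) -> str:
    """Inverse of rle_encode; assumes symbols are non-digit characters."""
    out = []
    i = 0
    while i < len(encoded):
        symbol = encoded[i]
        i += 1
        # Read all digits that follow the symbol (counts may exceed 9).
        j = i
        while j < len(encoded) and encoded[j].isdigit():
            j += 1
        out.append(symbol * int(encoded[i:j]))
        i = j
    return "".join(out)


data = "AAAAABBCCCCCDEEEEEEE"
encoded = rle_encode(data)
print(encoded, len(data), len(encoded))

# Lossless: decoding gives back exactly what was encoded.
assert rle_decode(encoded) == data
```

Note that the single `D` costs two characters once encoded (`D1`), so RLE only pays off when the data contains long runs; input with no repeats, such as `ABC`, would grow to `A1B1C1`.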