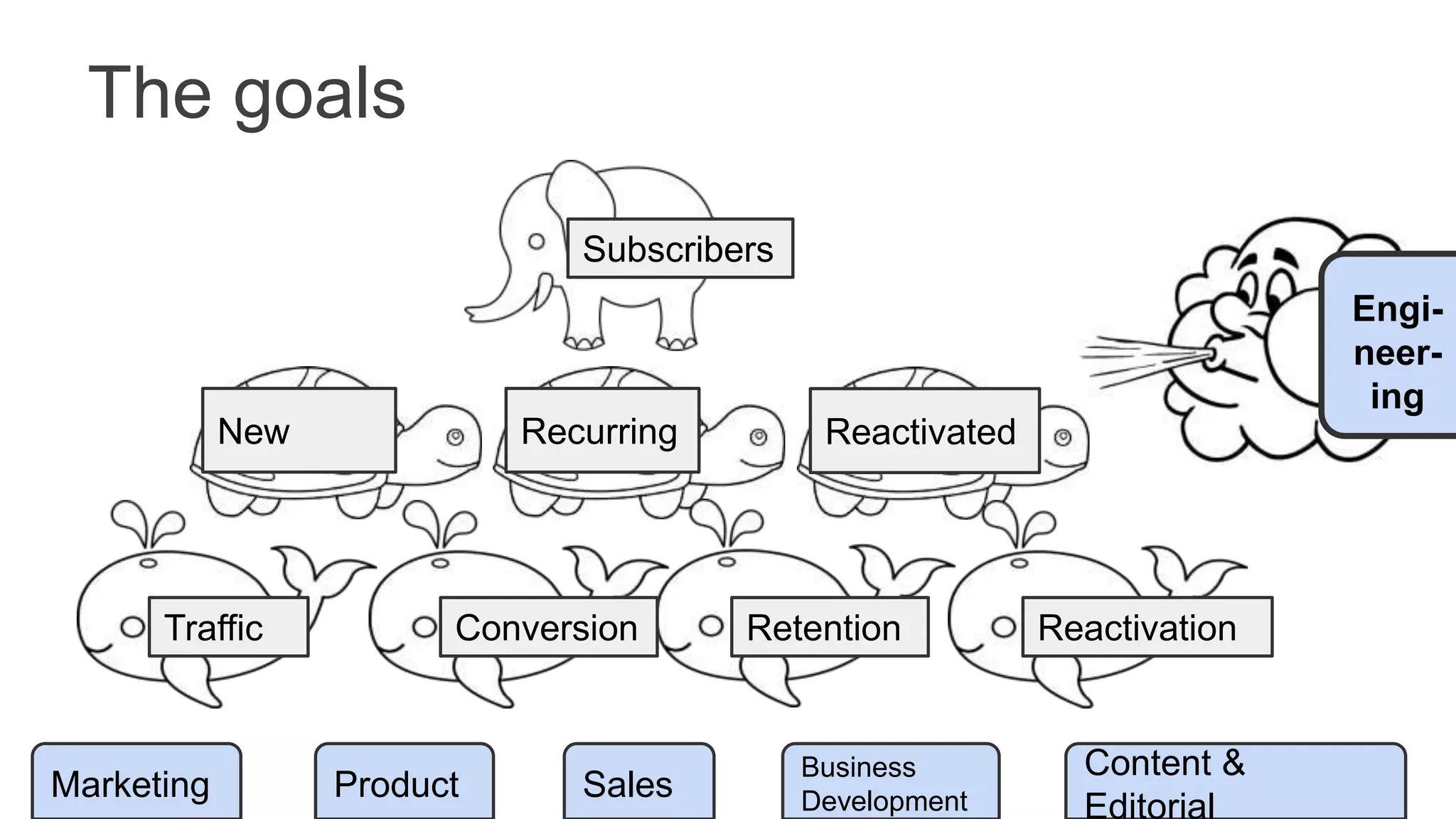

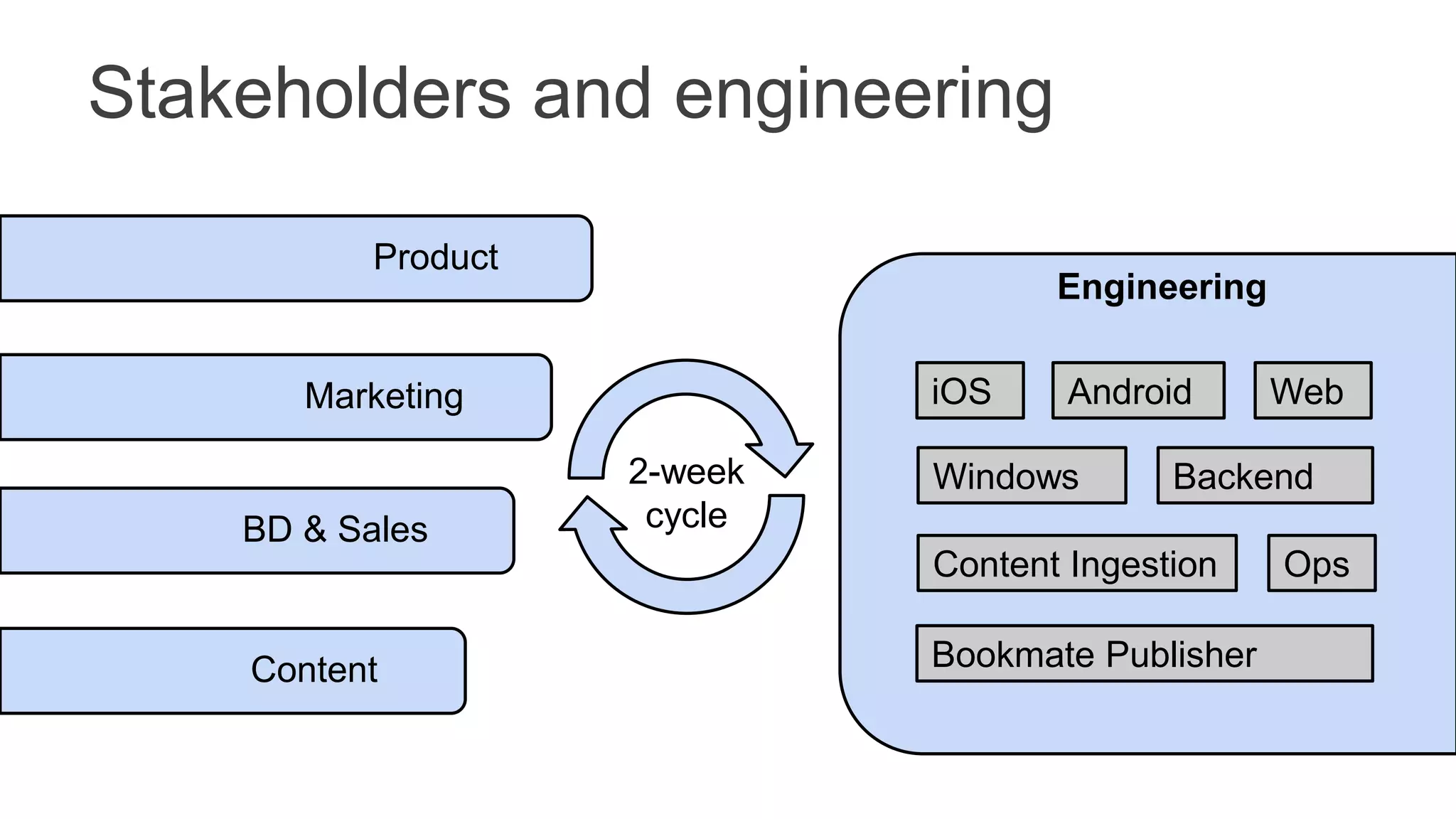

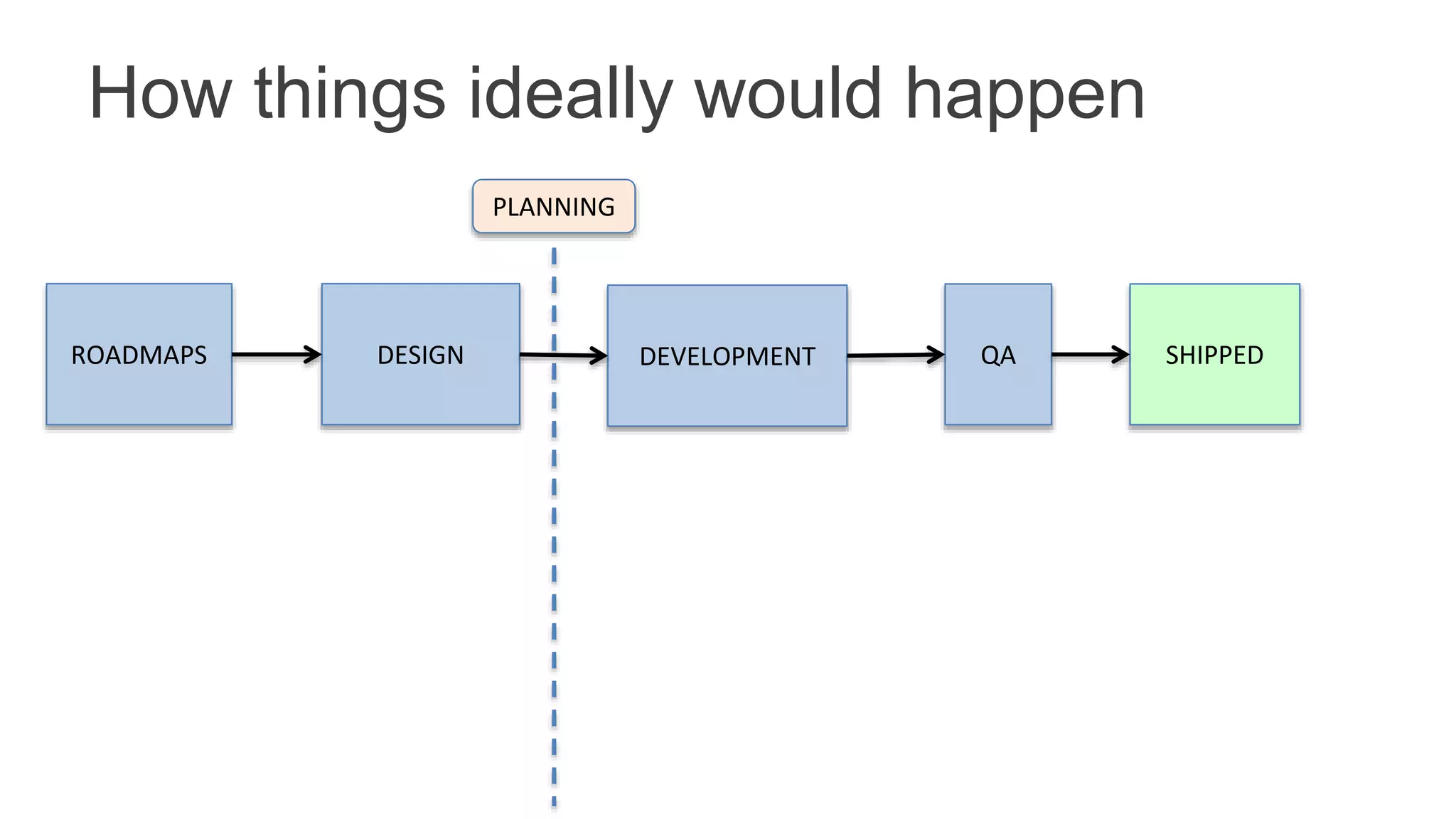

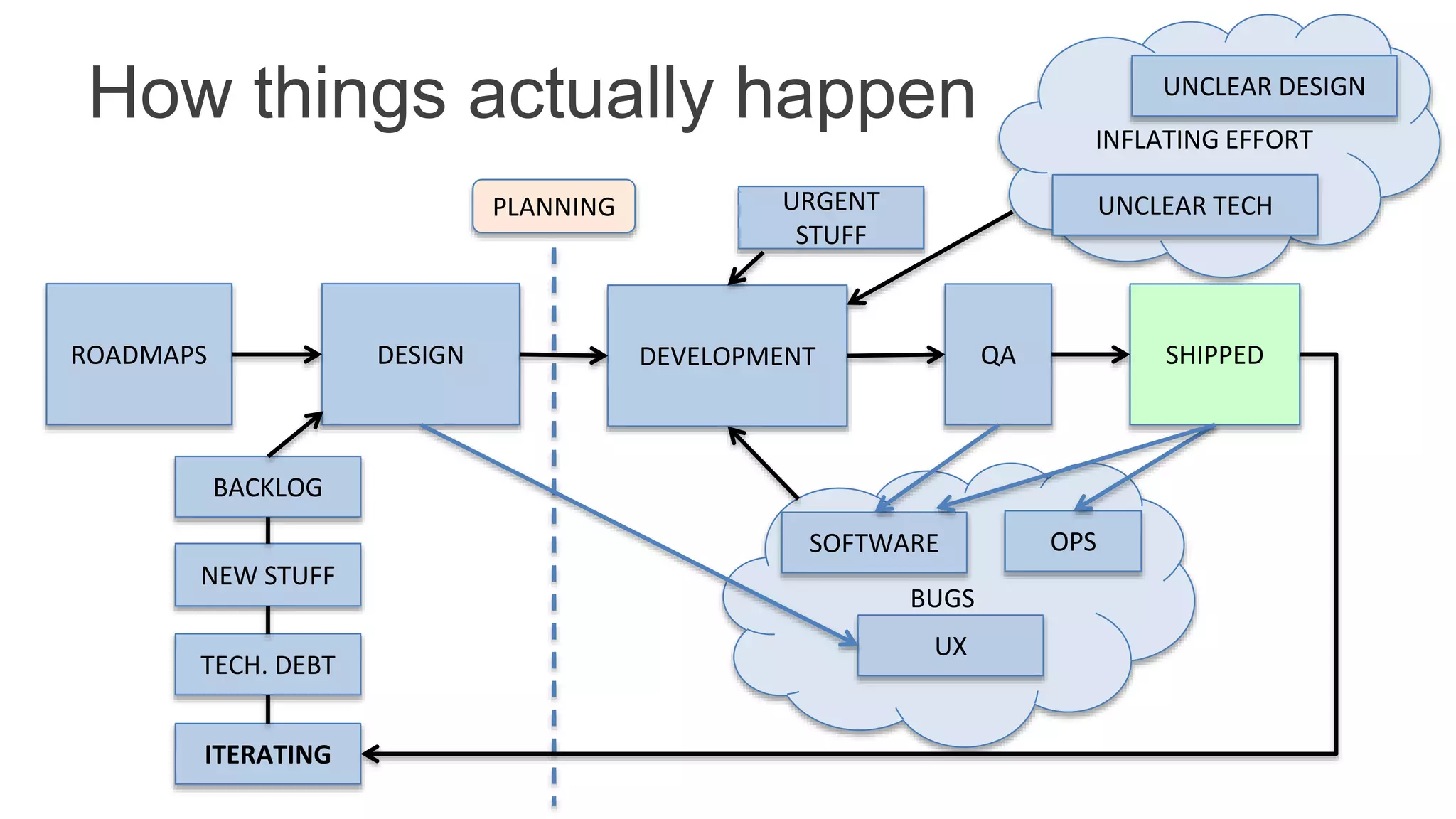

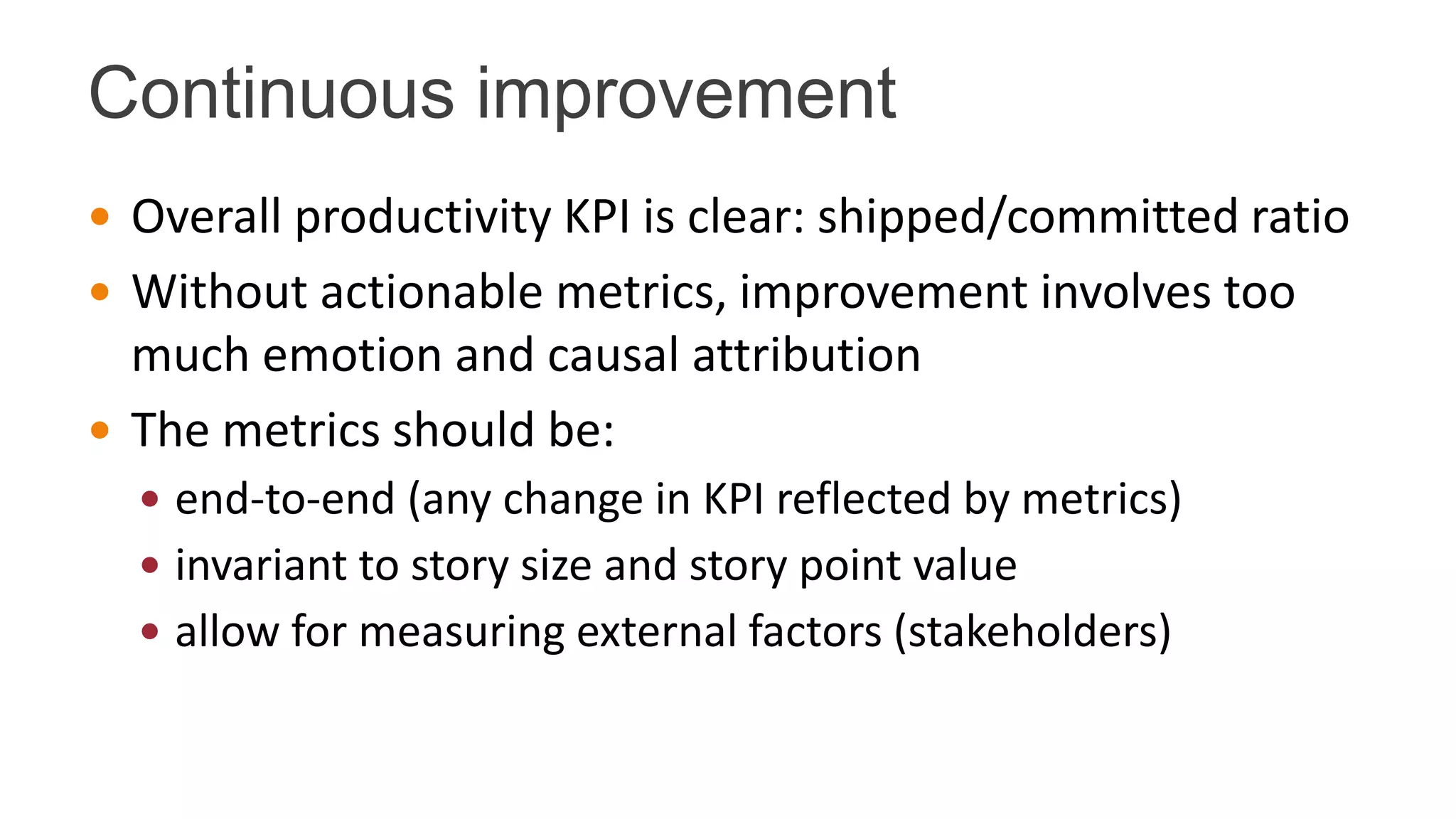

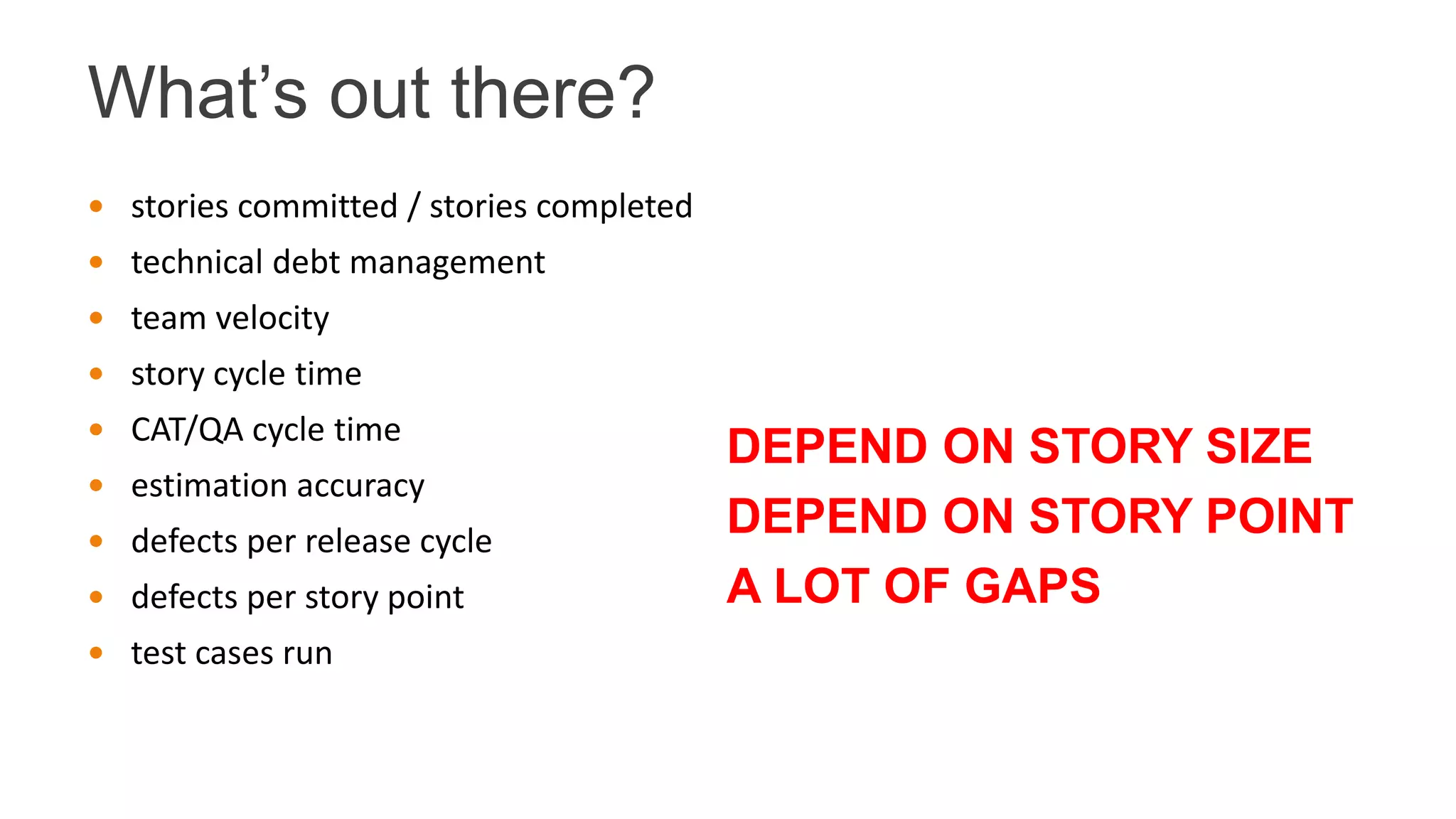

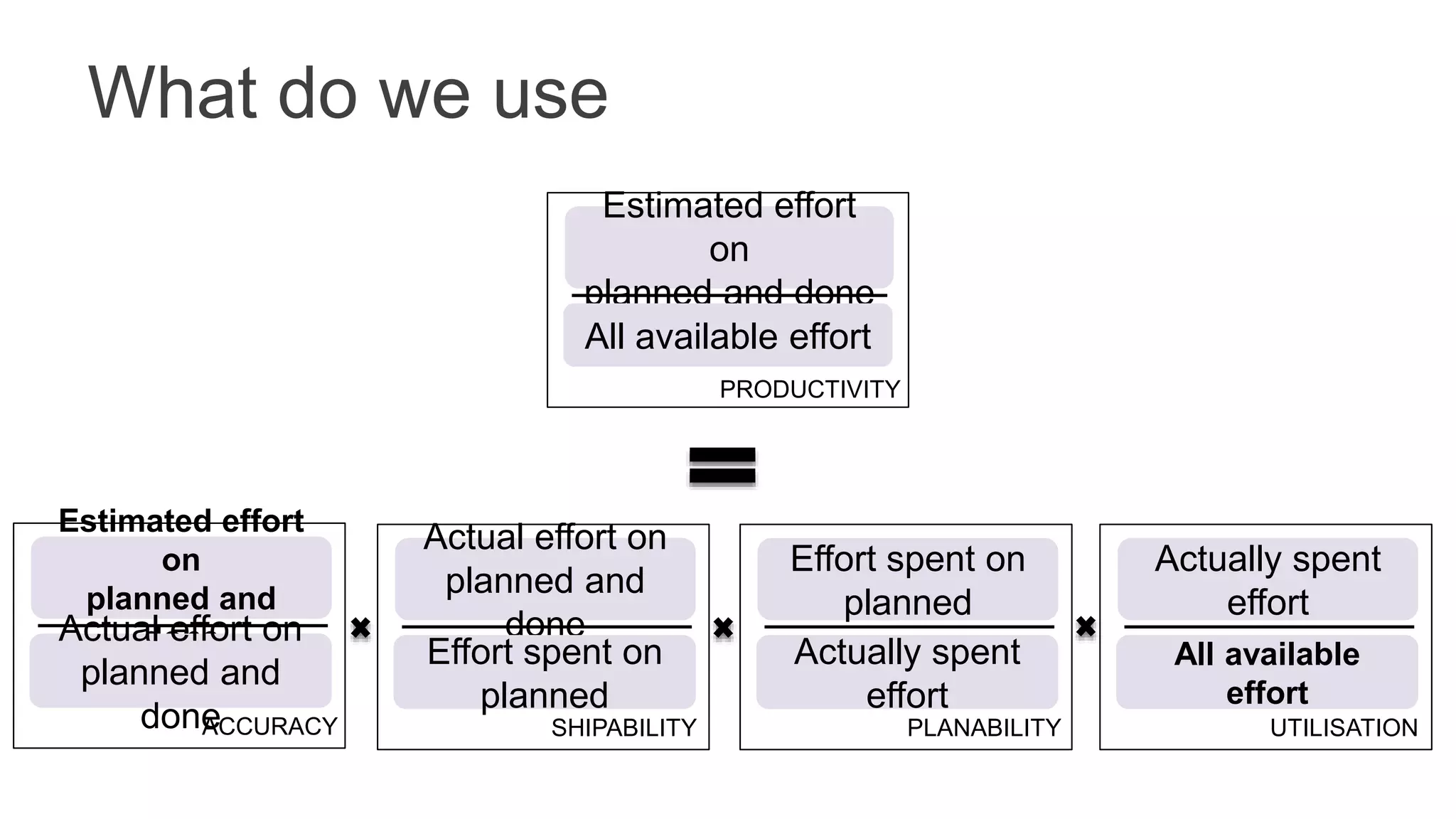

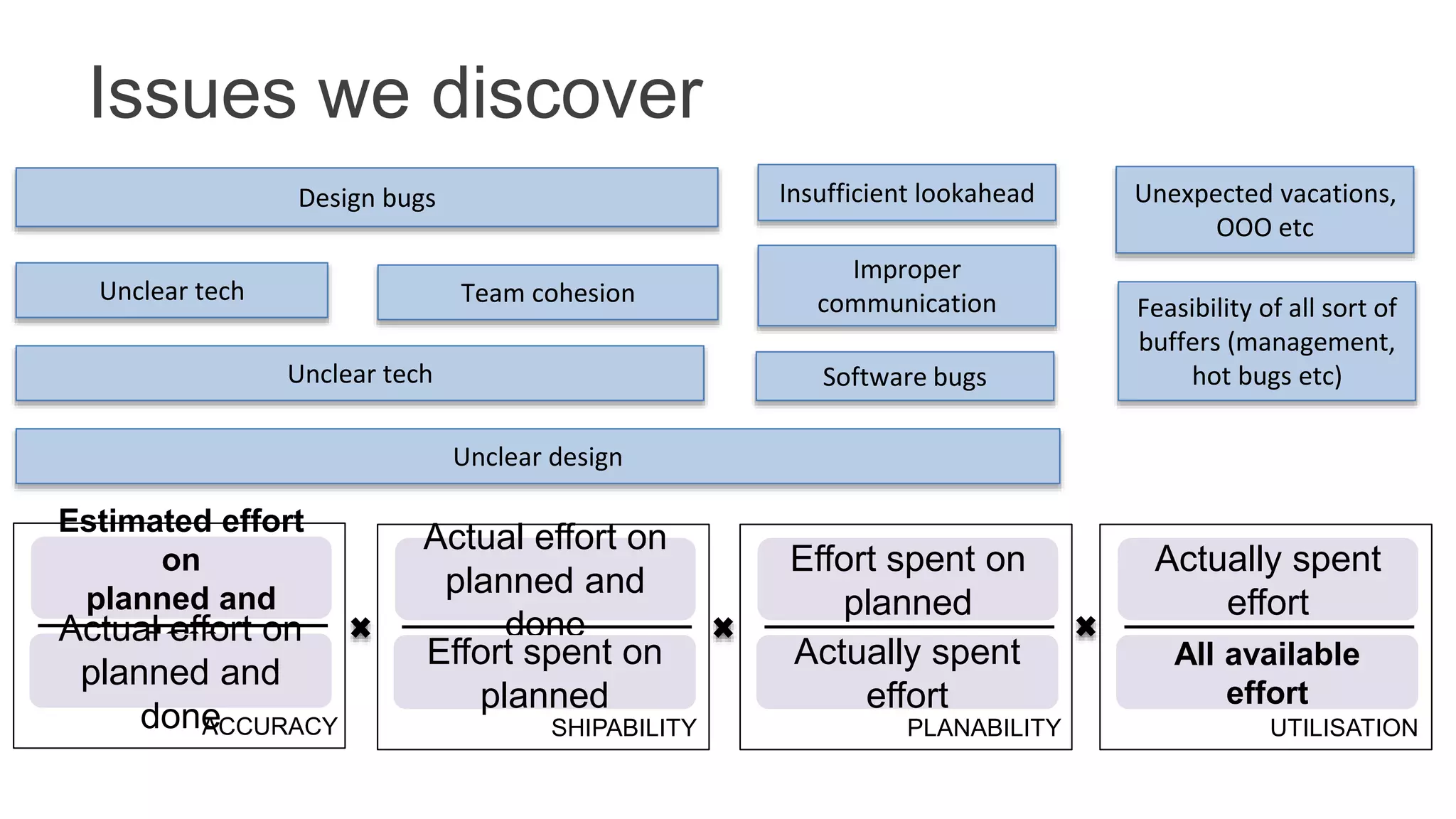

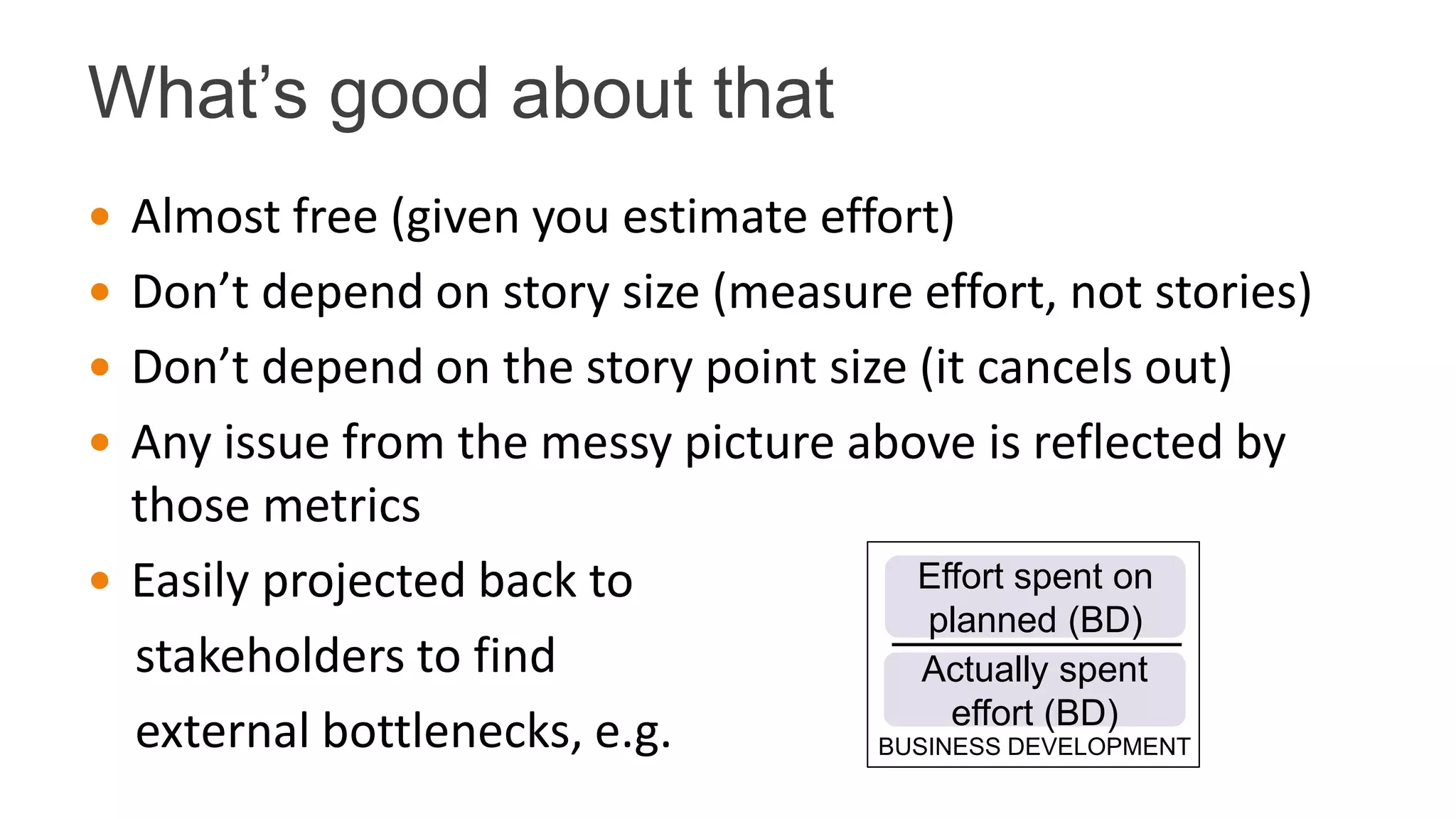

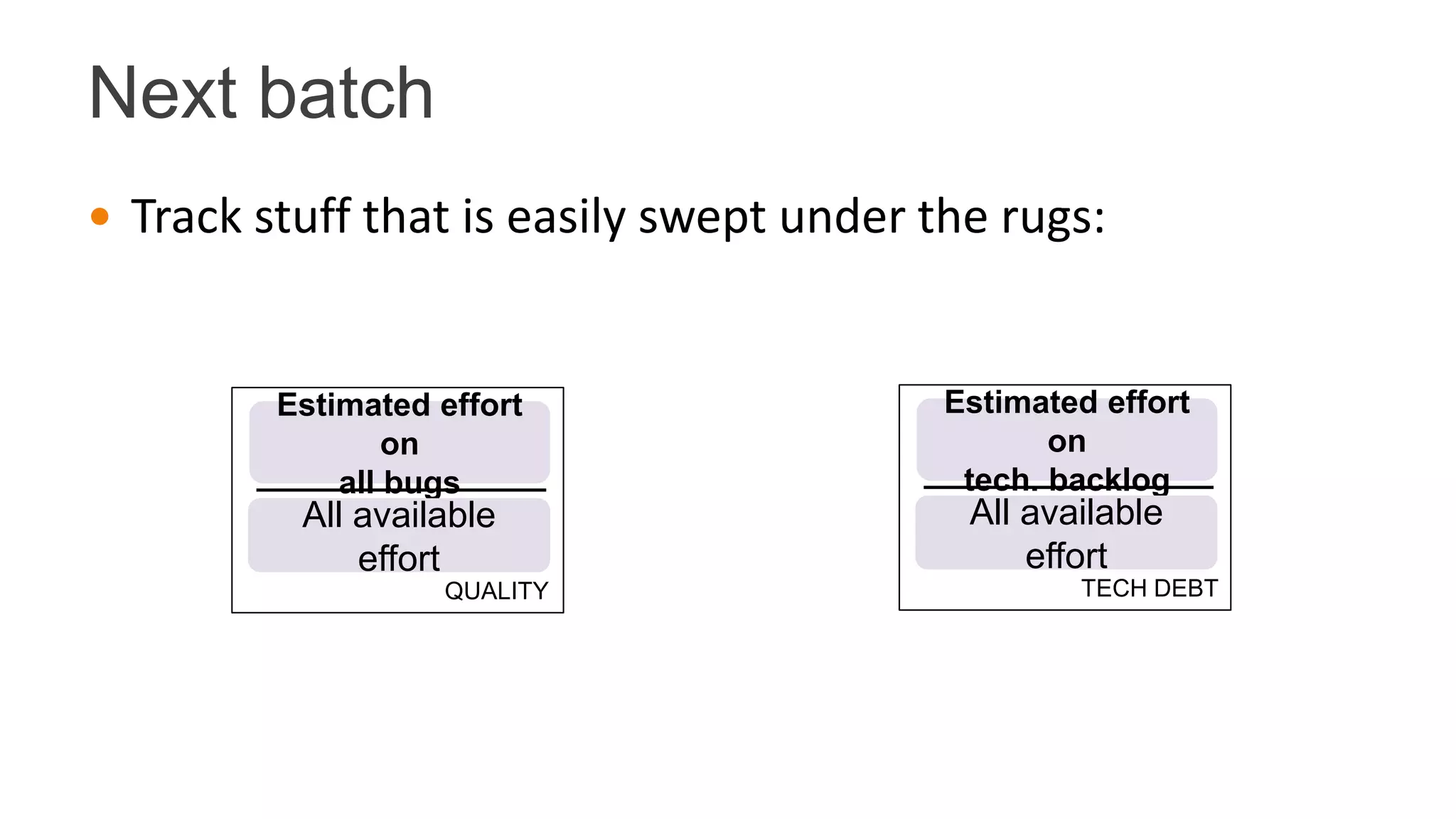

The document discusses the challenges of measuring agile process improvement in a complex B2C environment, specifically at Bookmate, where there are many stakeholders and priorities shift frequently. It emphasizes actionable metrics that reflect team productivity, such as the ship/commit ratio, while acknowledging that external factors and technical debt also affect the numbers. The proposed metrics are meant to make team performance and bottlenecks visible; they do not by themselves eliminate the underlying issues.
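As a rough illustration of the ship/commit ratio mentioned above, the sketch below assumes it means "items shipped divided by items committed to at sprint planning". That definition, the `Sprint` class, the field names, and the sample numbers are all hypothetical and not taken from the slides.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Sprint:
    # Hypothetical record; field names are assumptions for illustration.
    name: str
    committed: int  # items the team committed to at planning
    shipped: int    # of those, items actually released by sprint end


def ship_commit_ratio(sprint: Sprint) -> Optional[float]:
    """Return shipped / committed, or None when nothing was committed."""
    if sprint.committed == 0:
        return None  # ratio is undefined for an empty commitment
    return sprint.shipped / sprint.committed


# Made-up sample data, only to show the calculation.
sprints = [
    Sprint("S1", committed=10, shipped=7),
    Sprint("S2", committed=8, shipped=8),
    Sprint("S3", committed=0, shipped=0),
]

ratios = {s.name: ship_commit_ratio(s) for s in sprints}

for name, ratio in ratios.items():
    label = "n/a" if ratio is None else f"{ratio:.0%}"
    print(f"{name}: {label}")
```

A ratio well below 1 shows that commitments are slipping but not why; external interruptions or technical debt could be the cause, which is why such a number would need to be read alongside the other metrics.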