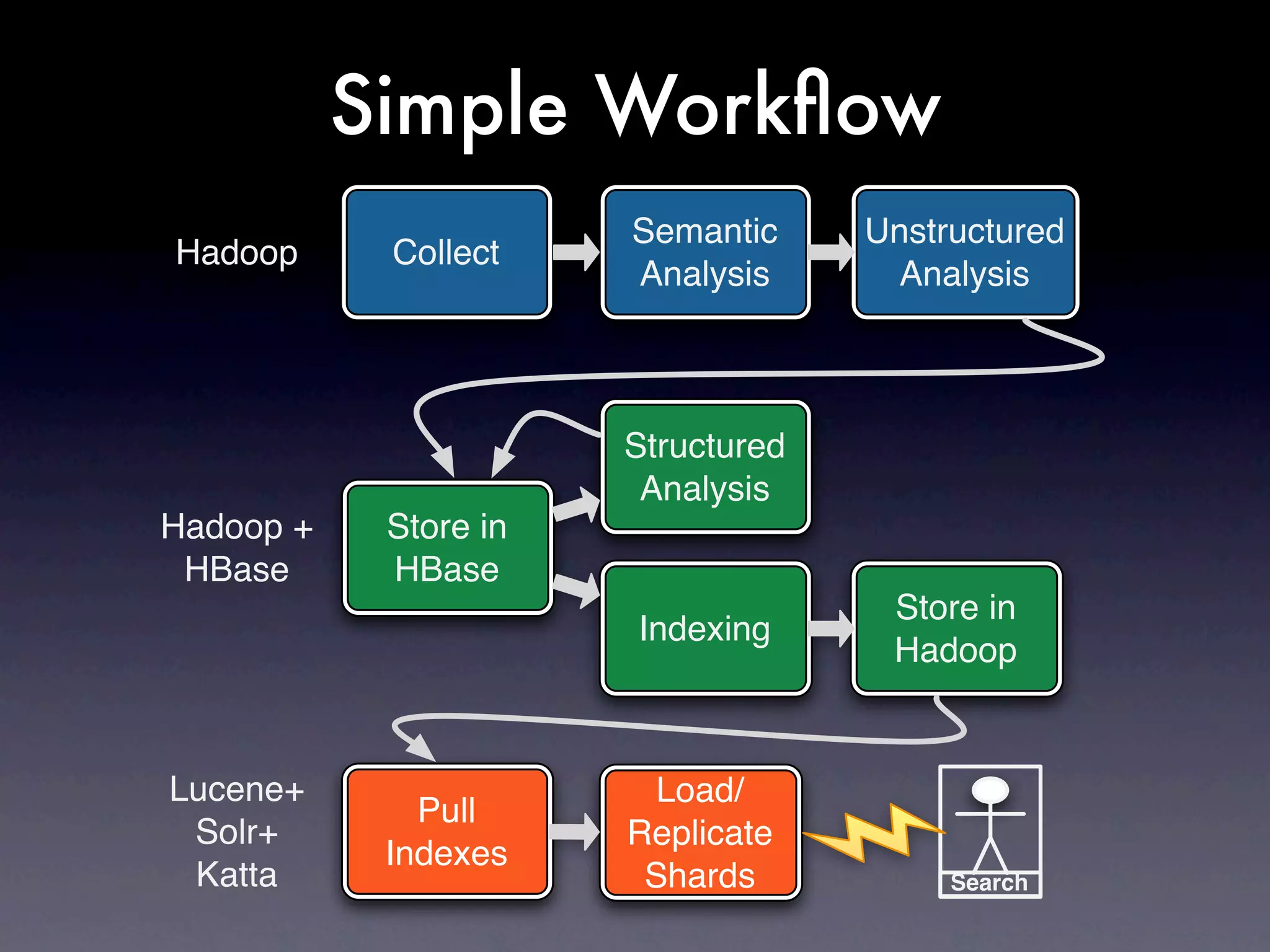

The document discusses strategies for building scalable systems in the era of big data, emphasizing the importance of engineering and operations in managing unstructured and interconnected data. It highlights key concepts such as recovery-oriented computing, gradual deployment, and the necessity for extensive testing in production environments. The author stresses adapting to failures, the unique challenges of engineering at scale, and the need for a strong computer science foundation.