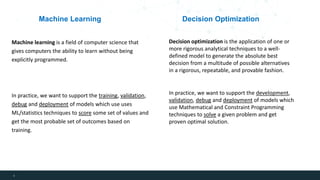

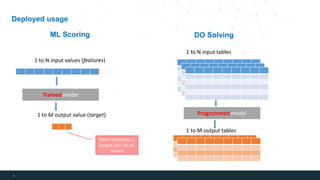

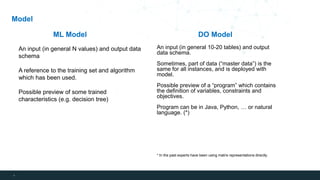

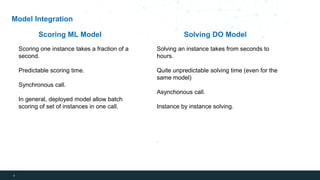

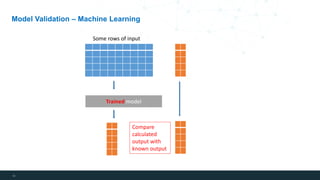

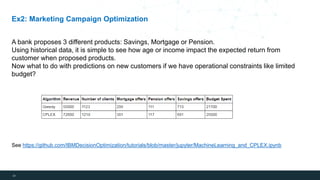

The document compares machine learning with decision optimization, contrasting their methods and applications. Machine learning fits models to data in order to make predictions, whereas decision optimization uses analytical techniques to select the best solution among the available alternatives. The document stresses the importance of recognizing when to use each approach on its own and when to combine them to improve decision-making.
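As a rough illustration of how the two approaches can be combined, the sketch below first learns a demand model from data (the machine learning step) and then searches a set of alternatives for the most profitable one (the decision optimization step). Everything in it is assumed for illustration: the sales history, the unit cost, the candidate prices, and the names `fit_line`, `predict_demand`, and `profit` do not come from the document. The optimization step is a simple exhaustive search over a handful of options, standing in for the mathematical programming solvers that decision optimization would normally use.

```python
# Hypothetical sales history: (price, units sold). Illustrative data only.
history = [(8.0, 120.0), (10.0, 100.0), (12.0, 80.0), (14.0, 60.0)]


def fit_line(points):
    """Ordinary least squares fit of y = intercept + slope * x."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Step 1 (machine learning): learn a predictive model from the data.
slope, intercept = fit_line(history)


def predict_demand(price):
    """Predicted units sold at a given price, clipped at zero."""
    return max(0.0, intercept + slope * price)


# Step 2 (decision optimization): pick the best alternative under the model.
UNIT_COST = 6.0  # assumed cost per unit
candidate_prices = [8.0, 9.0, 10.0, 11.0, 12.0, 13.0, 14.0]  # assumed options


def profit(price):
    """Objective to maximize: margin per unit times predicted demand."""
    return (price - UNIT_COST) * predict_demand(price)


best_price = max(candidate_prices, key=profit)
print(f"learned demand model: {intercept:.1f} + ({slope:.1f}) * price")
print(f"best price: {best_price}, expected profit: {profit(best_price):.1f}")
```

The prediction alone says how many units would sell at each price, and the optimization alone would need a demand figure to work with. Chaining them gives a decision, here a price, that neither step produces by itself.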