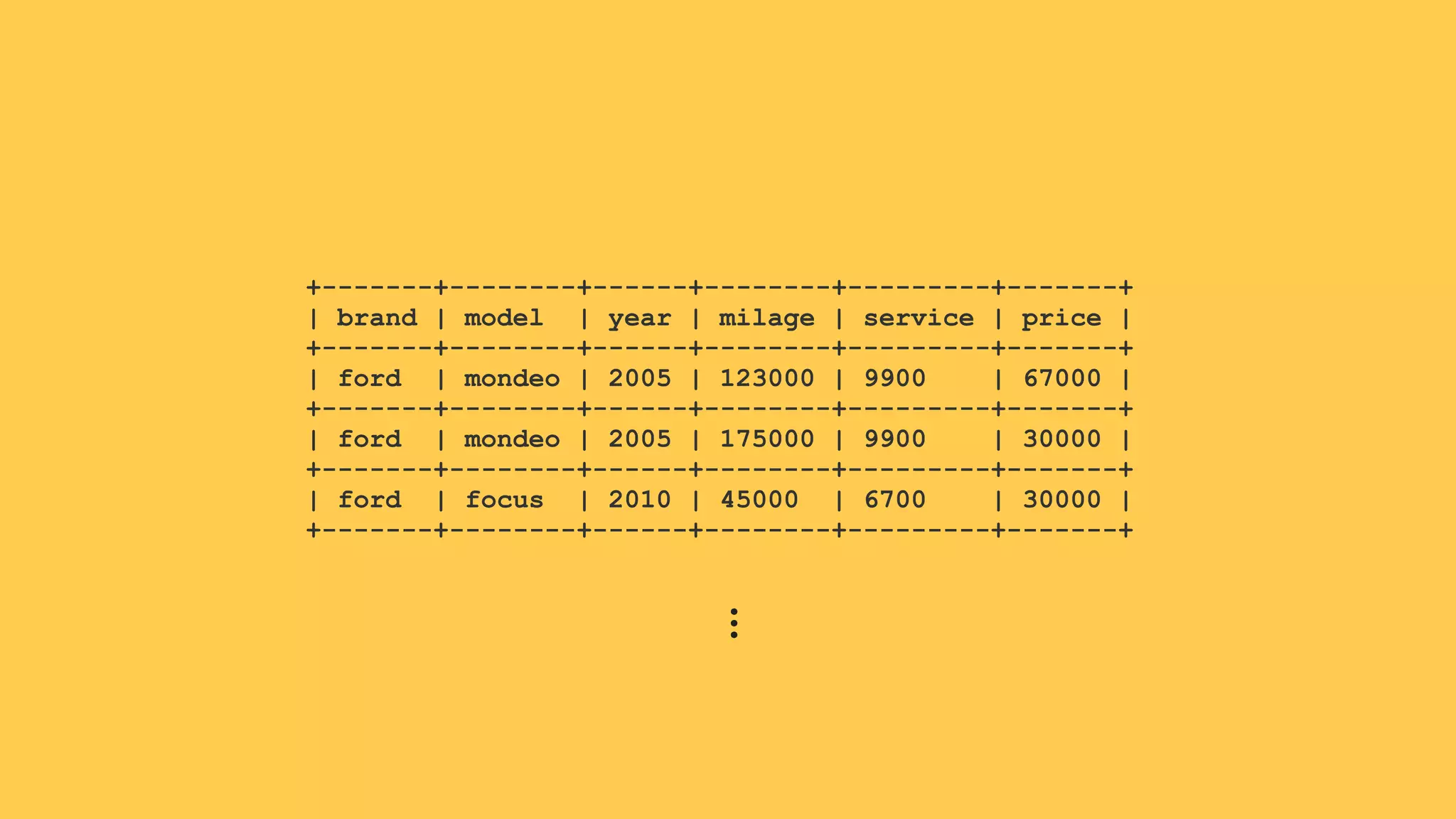

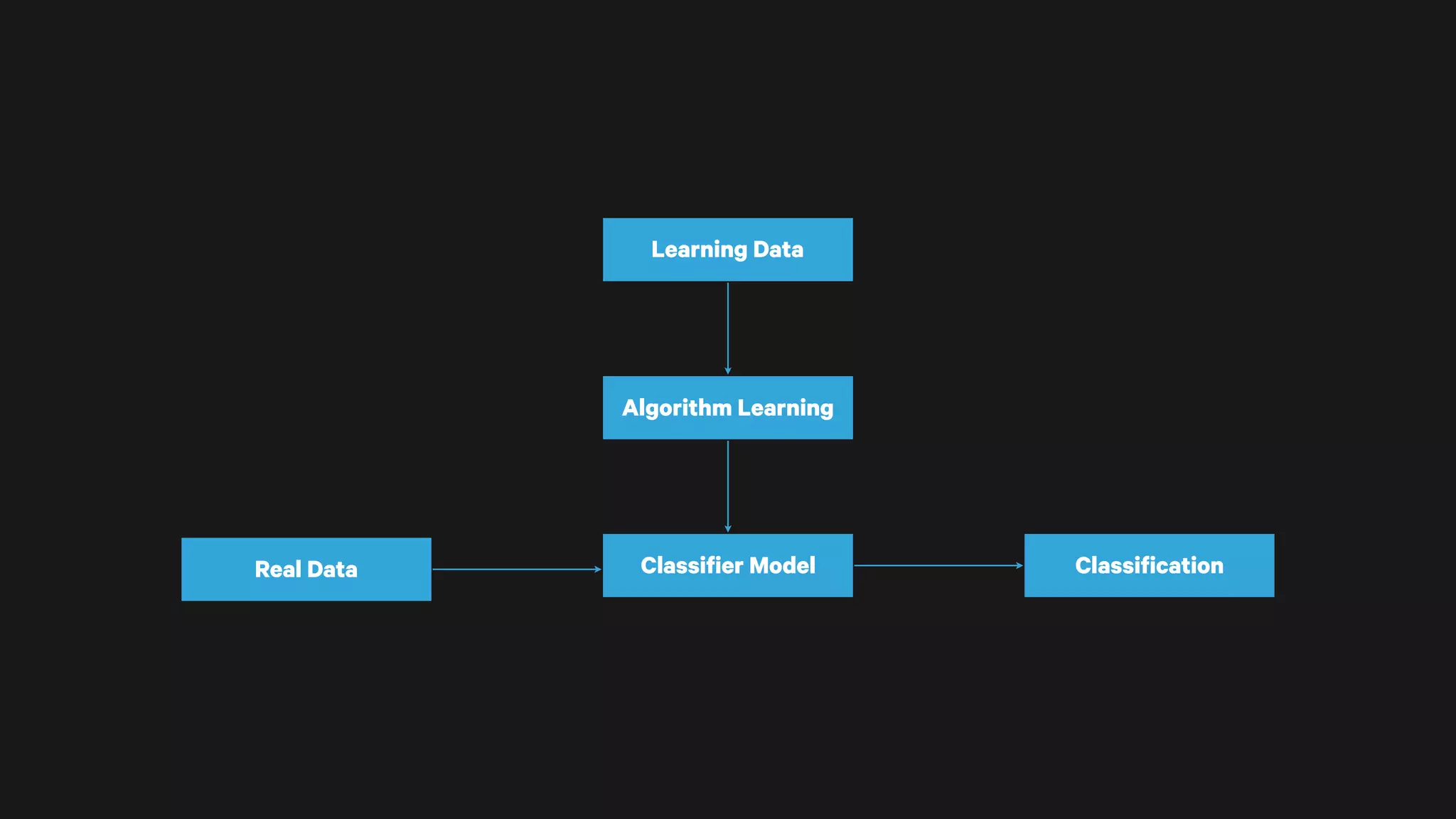

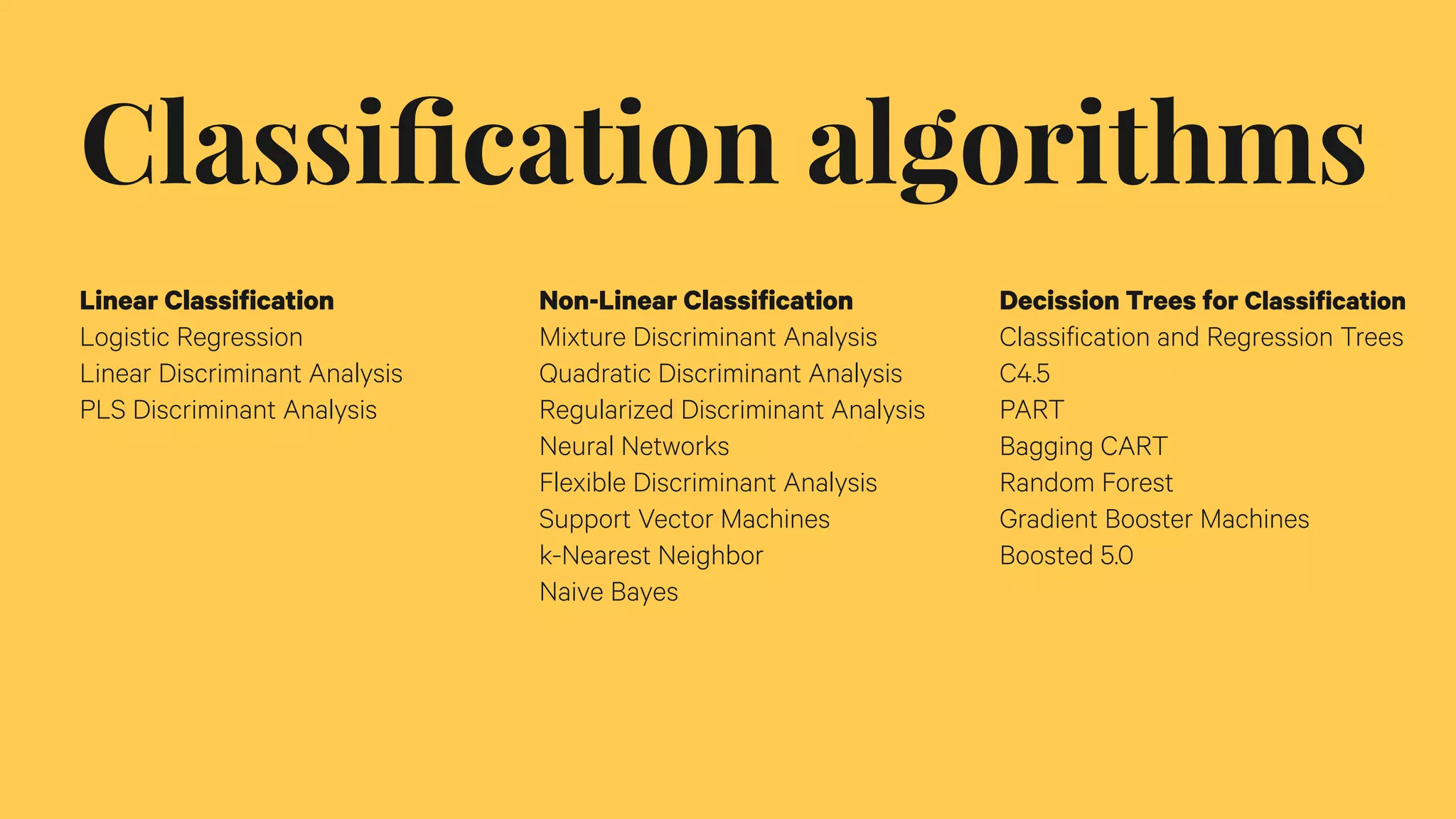

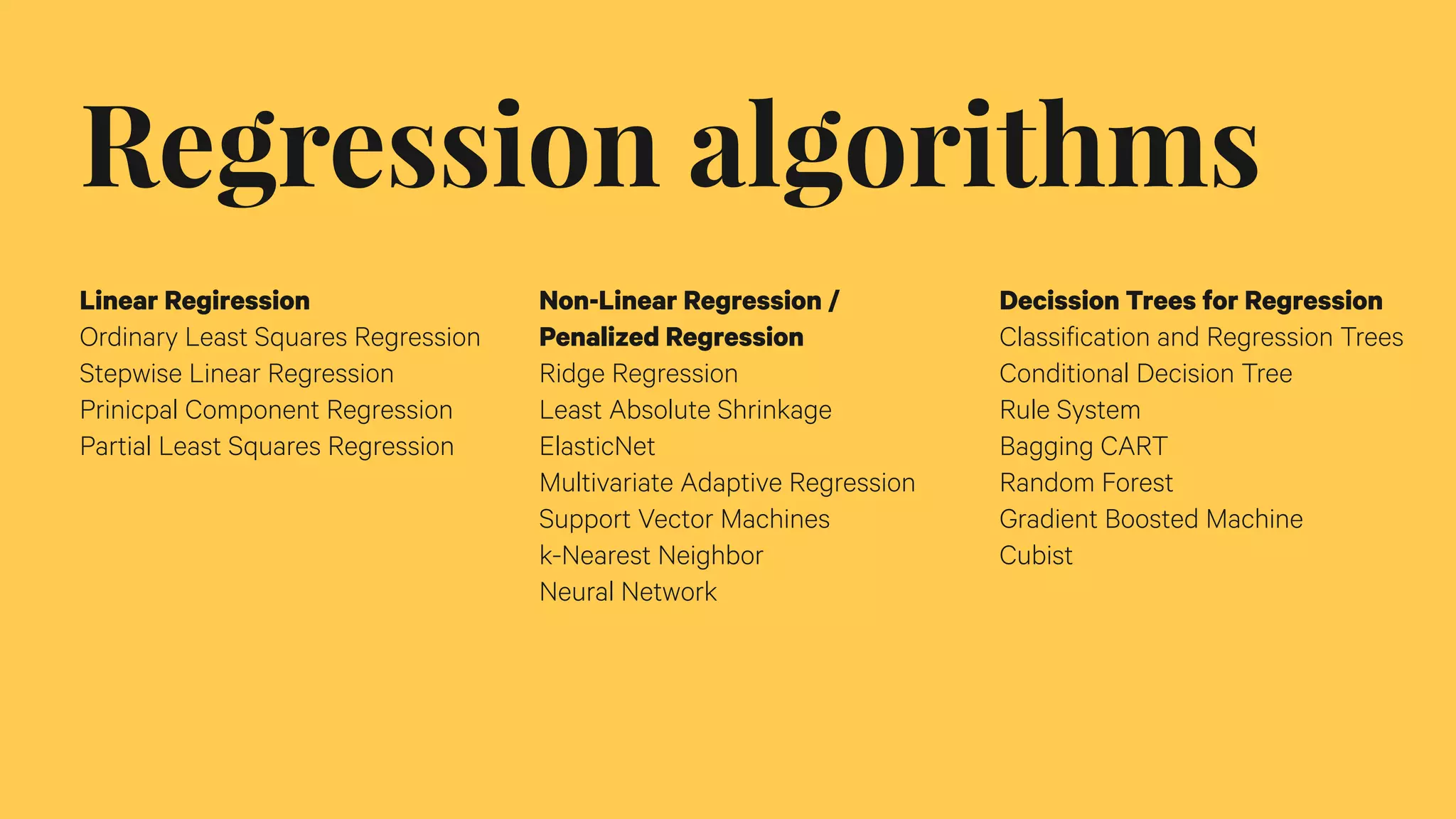

This document discusses machine learning techniques and their applications, with examples of how algorithms can classify data, detect patterns, and make predictions. It describes the key steps in a machine learning process (data collection, preprocessing, algorithm training, and evaluation) and outlines algorithms for classification, regression, and clustering.
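
The steps named above can be sketched end to end on the built-in `iris` data. This is only an illustration under stated assumptions: the 70/30 split, the seed, and the choice of linear discriminant analysis from the `MASS` package are not taken from the slides.

```r
# Minimal sketch of collect / preprocess / train / evaluate on iris.
library(MASS)   # provides lda(); a recommended package in standard R installs

set.seed(42)    # arbitrary seed, used only so the split is reproducible
data(iris)      # 1. data collection: load the built-in dataset

# Hold out 30% of the rows for evaluation (assumed split ratio).
test_idx  <- sample(nrow(iris), size = round(0.3 * nrow(iris)))
train_raw <- iris[-test_idx, ]
test_raw  <- iris[test_idx, ]

# 2. preprocessing: standardize features using training statistics only
ctr <- colMeans(train_raw[, 1:4])
sds <- apply(train_raw[, 1:4], 2, sd)
train <- data.frame(scale(train_raw[, 1:4], center = ctr, scale = sds),
                    Species = train_raw$Species)
test  <- data.frame(scale(test_raw[, 1:4], center = ctr, scale = sds),
                    Species = test_raw$Species)

# 3. training: fit a linear discriminant classifier
fit <- lda(Species ~ ., data = train)

# 4. evaluation: confusion matrix and accuracy on the held-out rows
pred      <- predict(fit, newdata = test)$class
confusion <- table(Predicted = pred, Actual = test$Species)
accuracy  <- mean(pred == test$Species)
print(confusion)
print(accuracy)
```

Scaling with the training means and standard deviations keeps information from the held-out rows out of the fitted model.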

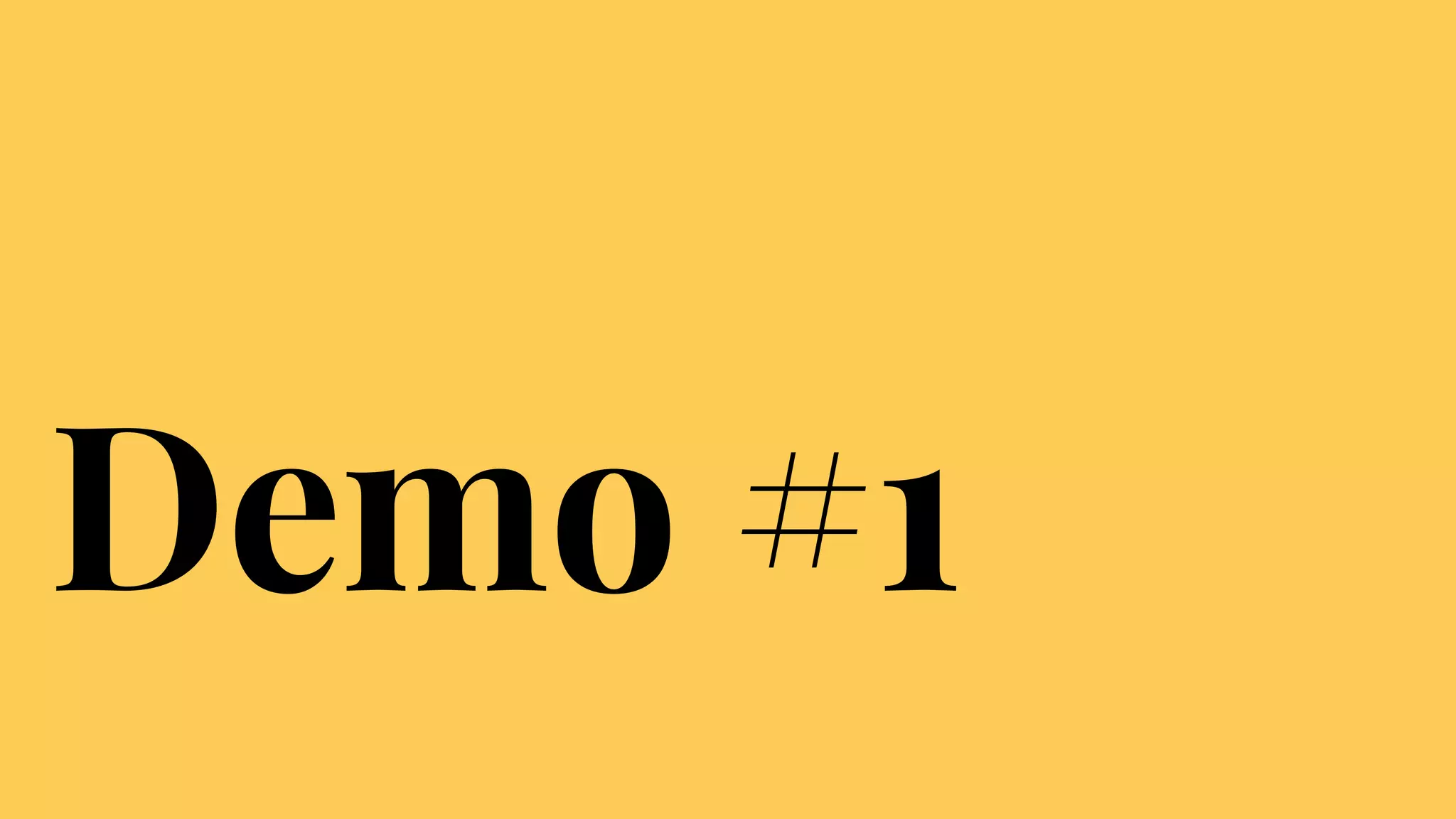

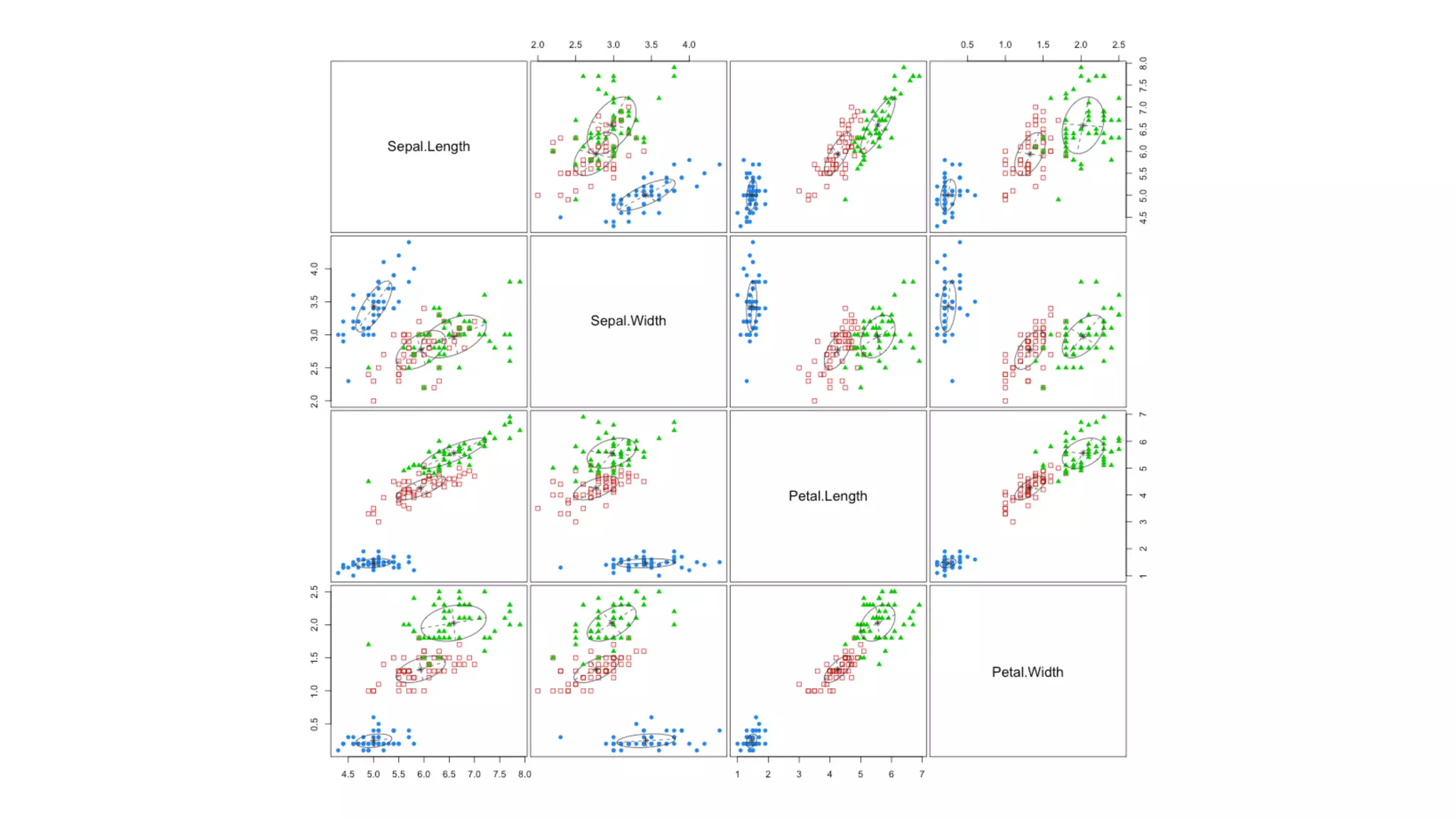

![> data(iris)

> head(iris)

Sepal.Length Sepal.Width Petal.Length Petal.Width Species

1 5.1 3.5 1.4 0.2 setosa

2 4.9 3.0 1.4 0.2 setosa

3 4.7 3.2 1.3 0.2 setosa

4 4.6 3.1 1.5 0.2 setosa

5 5.0 3.6 1.4 0.2 setosa

6 5.4 3.9 1.7 0.4 setosa

> tail(iris)

Sepal.Length Sepal.Width Petal.Length Petal.Width Species

145 6.7 3.3 5.7 2.5 virginica

146 6.7 3.0 5.2 2.3 virginica

147 6.3 2.5 5.0 1.9 virginica

148 6.5 3.0 5.2 2.0 virginica

149 6.2 3.4 5.4 2.3 virginica

150 5.9 3.0 5.1 1.8 virginica

> plot(iris[,1:4])](https://image.slidesharecdn.com/machinelearningfordevelopers-160117082414/75/Machine-learning-for-developers-54-2048.jpg)
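
As a numeric companion to the scatterplot matrix in the transcript above, per-species means show which measurements separate the classes. This is a small sketch using base R only; the name `species_means` is introduced here for illustration.

```r
# Mean of each measurement per species, as a numeric view of the
# separation that plot(iris[, 1:4]) shows graphically.
data(iris)
species_means <- aggregate(. ~ Species, data = iris, FUN = mean)
print(species_means)
```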

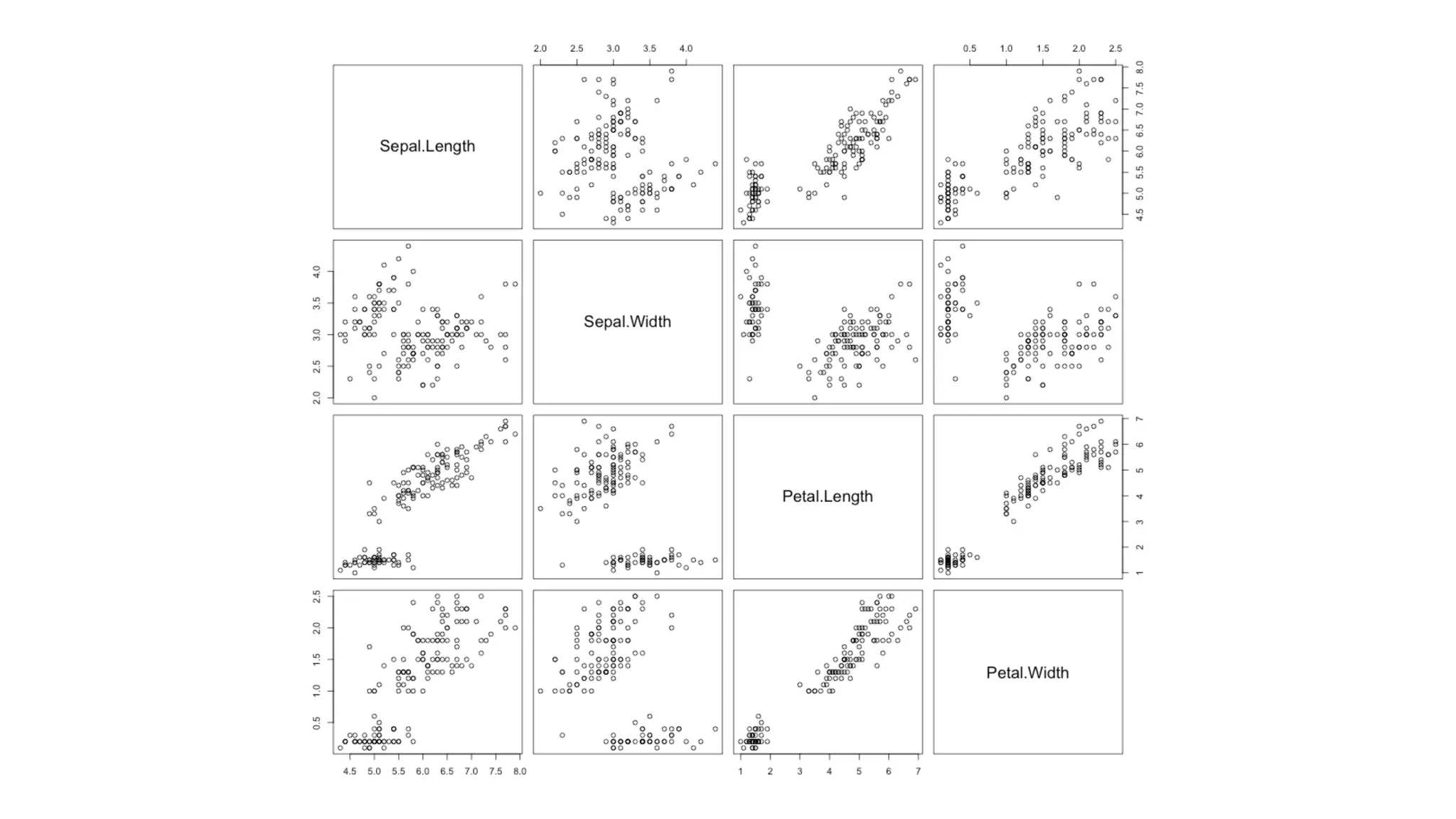

![> library(mclust)

> class = iris$Species

> mod2 = MclustDA(iris[,1:4], class, modelType = "EDDA")

> table(class)

class

setosa versicolor virginica

50 50 50](https://image.slidesharecdn.com/machinelearningfordevelopers-160117082414/75/Machine-learning-for-developers-56-2048.jpg)

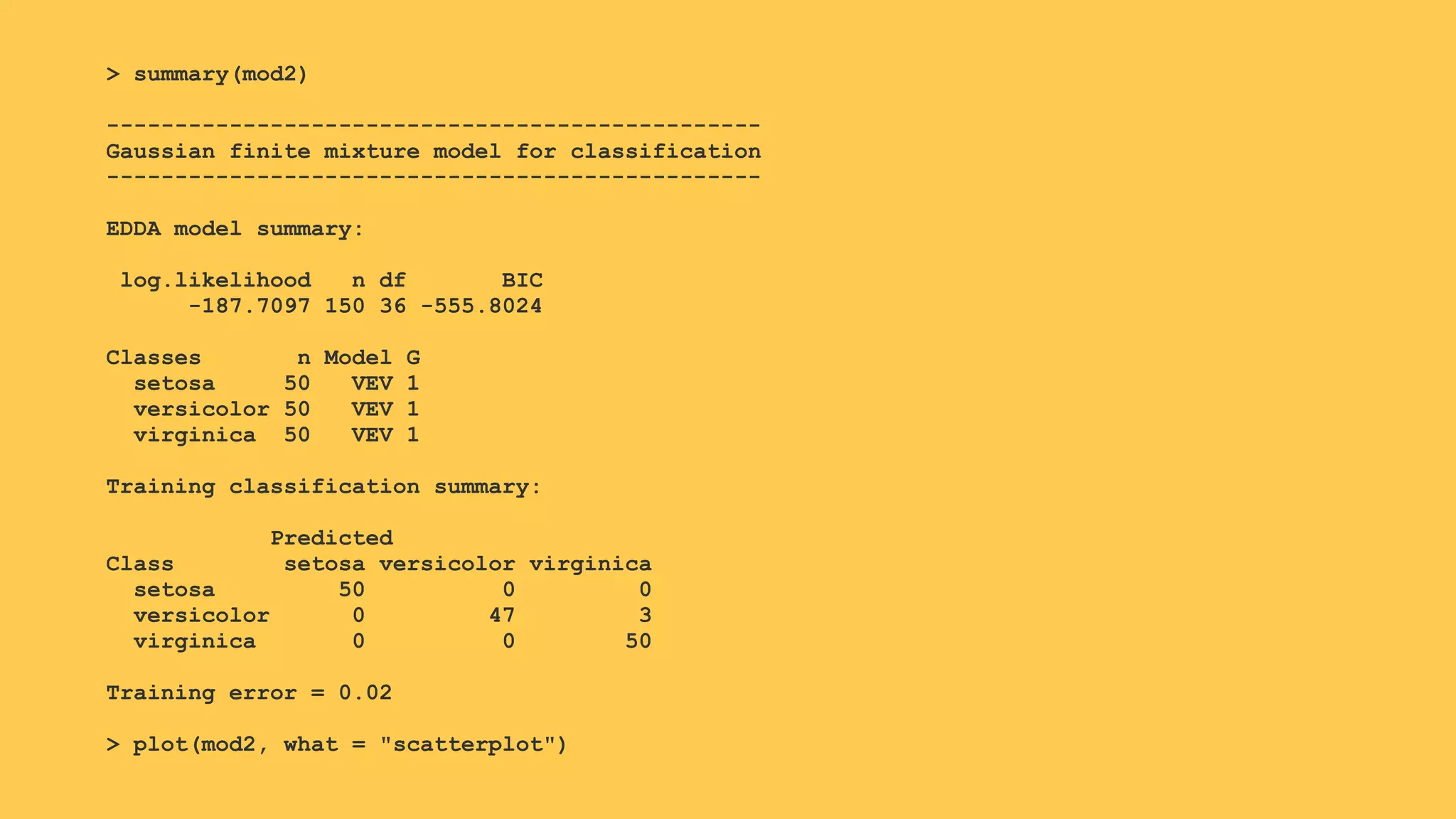

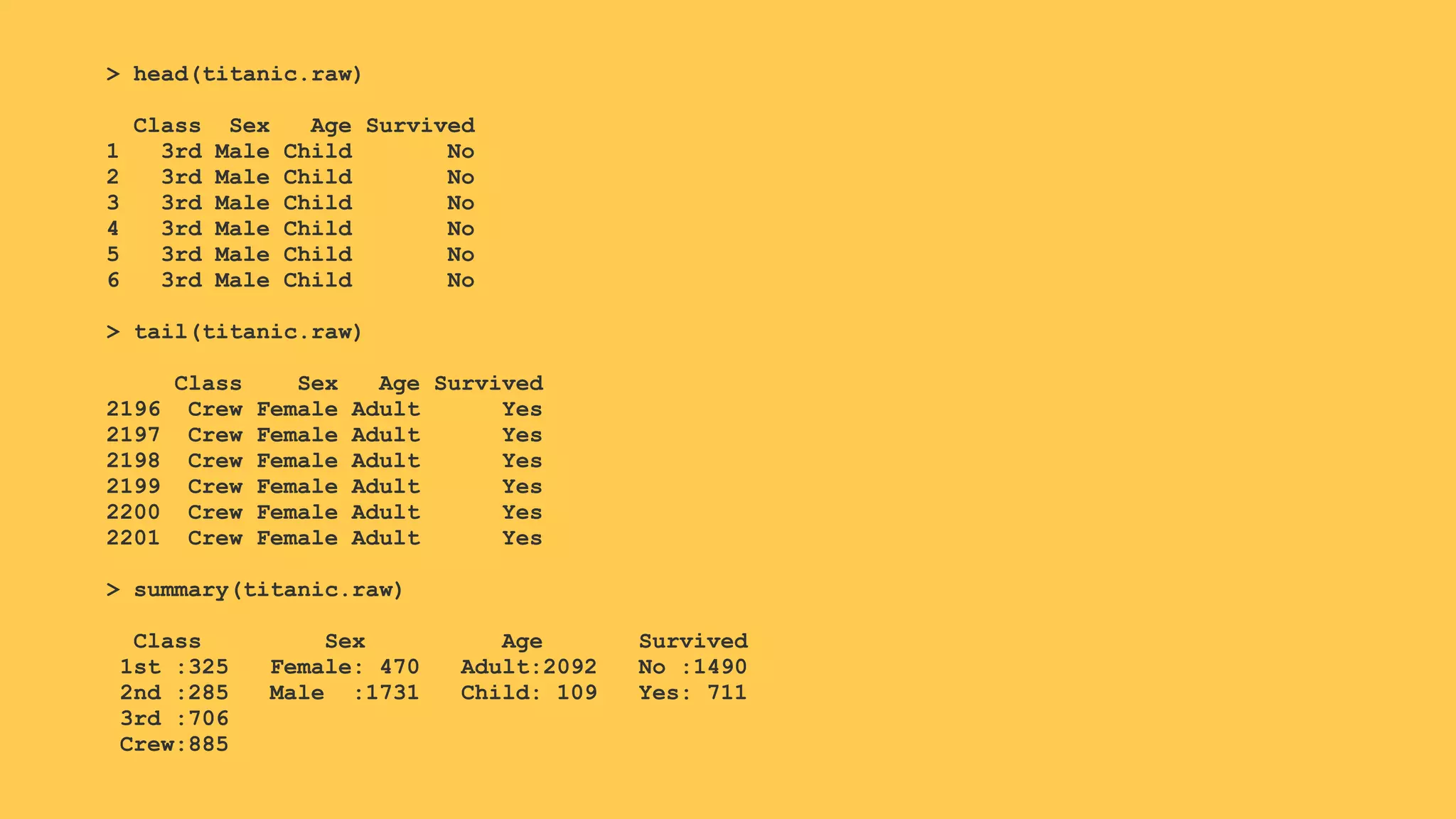

![> library(arules)

Loading required package: Matrix

Attaching package: ‘arules’

> rules <- apriori(titanic.raw)

Apriori

Parameter specification:

confidence minval smax arem aval originalSupport support minlen maxlen target ext

0.8 0.1 1 none FALSE TRUE 0.1 1 10 rules FALSE

Algorithmic control:

filter tree heap memopt load sort verbose

0.1 TRUE TRUE FALSE TRUE 2 TRUE

Absolute minimum support count: 220

set item appearances ...[0 item(s)] done [0.00s].

set transactions ...[10 item(s), 2201 transaction(s)] done [0.00s].

sorting and recoding items ... [9 item(s)] done [0.00s].

creating transaction tree ... done [0.00s].

checking subsets of size 1 2 3 4 done [0.00s].

writing ... [27 rule(s)] done [0.00s].

creating S4 object ... done [0.00s].](https://image.slidesharecdn.com/machinelearningfordevelopers-160117082414/75/Machine-learning-for-developers-61-2048.jpg)
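
The transcript above uses `titanic.raw` without showing where it comes from. One way to obtain an equivalent data frame, assuming the built-in `Titanic` contingency table is an acceptable stand-in for the author's data, is to expand it to one row per passenger:

```r
# Sketch: rebuild a passenger-level data frame from the built-in Titanic table.
# Assumption: this matches the structure of the titanic.raw used on the slides.
counts <- as.data.frame(Titanic)          # columns: Class, Sex, Age, Survived, Freq

# Repeat each combination Freq times, then drop the Freq column.
titanic.raw <- counts[rep(seq_len(nrow(counts)), counts$Freq), 1:4]
rownames(titanic.raw) <- NULL

str(titanic.raw)
```

The result has 2201 rows and four factor columns, which agrees with the "2201 transaction(s)" and "10 item(s)" reported in the `apriori` log.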

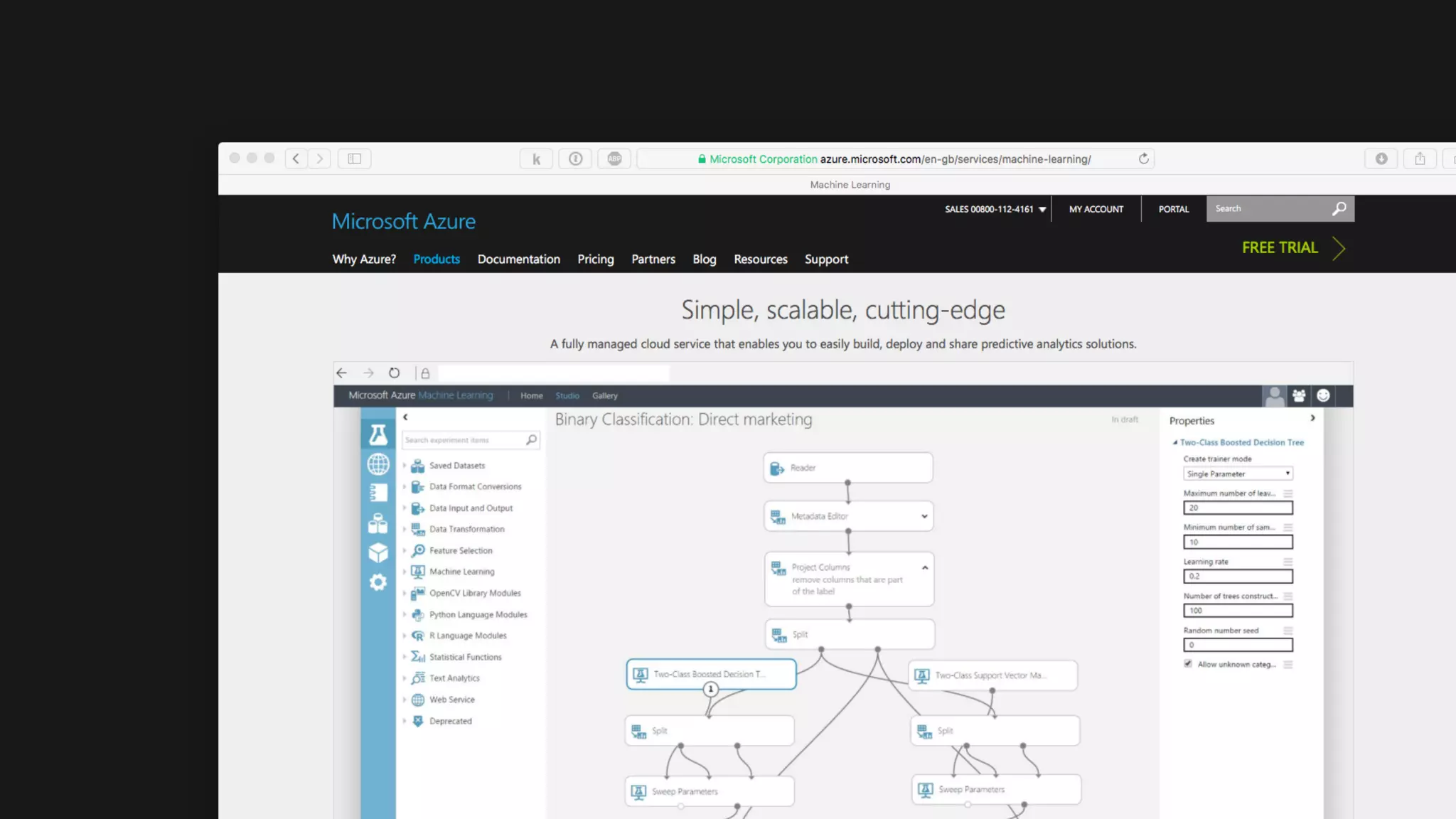

![> rules <- apriori(titanic.raw,

+ parameter = list(minlen=2, supp=0.005, conf=0.8),

+ appearance = list(rhs=c("Survived=No", "Survived=Yes"),

+ default="lhs"),

+ control = list(verbose=F))

> rules.sorted <- sort(rules, by="lift")

> subset.matrix <- is.subset(rules.sorted, rules.sorted)

> subset.matrix[lower.tri(subset.matrix, diag=T)] <- NA

> redundant <- colSums(subset.matrix, na.rm=T) >= 1

> which(redundant)

{Class=2nd,Sex=Female,Age=Child,Survived=Yes} 2

{Class=1st,Sex=Female,Age=Adult,Survived=Yes} 4

{Class=Crew,Sex=Female,Age=Adult,Survived=Yes} 7

{Class=2nd,Sex=Female,Age=Adult,Survived=Yes} 8

> rules.pruned <- rules.sorted[!redundant]](https://image.slidesharecdn.com/machinelearningfordevelopers-160117082414/75/Machine-learning-for-developers-62-2048.jpg)
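
The rules above are filtered by support and confidence and sorted by lift. To make those three measures concrete, the sketch below computes them by hand in base R for one rule, `{Class=2nd, Age=Child} => {Survived=Yes}`. The helper `rule_metrics` and the `passengers` data frame are hypothetical names introduced here, and the data are rebuilt from the built-in `Titanic` table as an assumption.

```r
# Rebuild one row per passenger from the built-in Titanic table (an assumption;
# the slides do not show how titanic.raw was created).
counts     <- as.data.frame(Titanic)
passengers <- counts[rep(seq_len(nrow(counts)), counts$Freq), 1:4]

# Hypothetical helper: support, confidence and lift for a rule lhs => rhs,
# where lhs and rhs are logical vectors with one entry per passenger.
rule_metrics <- function(lhs, rhs) {
  support    <- mean(lhs & rhs)              # share of rows matching both sides
  confidence <- sum(lhs & rhs) / sum(lhs)    # P(rhs | lhs)
  lift       <- confidence / mean(rhs)       # confidence relative to base rate
  c(support = support, confidence = confidence, lift = lift)
}

lhs <- passengers$Class == "2nd" & passengers$Age == "Child"
rhs <- passengers$Survived == "Yes"
metrics <- rule_metrics(lhs, rhs)
print(round(metrics, 4))
```

A more specific rule that adds `Sex=Female` to this left-hand side cannot raise the confidence any further, which is the sense in which the pruning step treats it as redundant.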