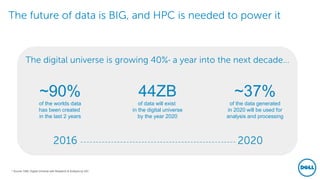

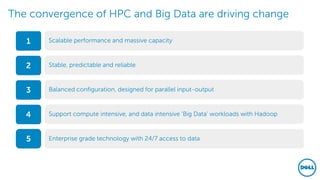

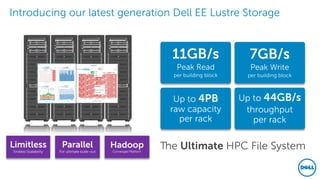

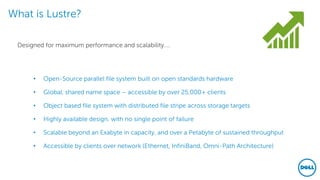

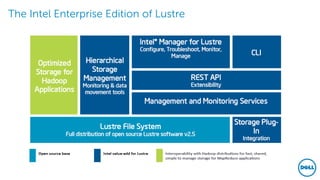

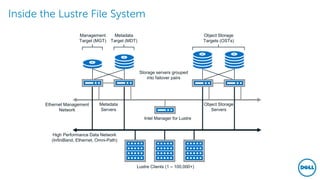

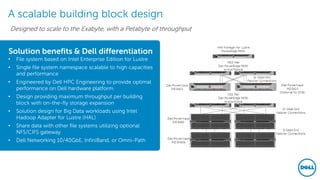

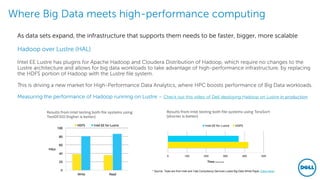

The document discusses the role of high-performance computing (HPC) in managing the exponential growth of big data, citing a prediction that by 2020, 90% of the world's data will have been generated within the preceding two years. It introduces Dell's Enterprise Edition Lustre storage solution, which is presented as offering the scalability, performance, and reliability needed for data-intensive workloads. The text also emphasizes that Lustre integrates with Apache Hadoop to support big data processing and analytics.