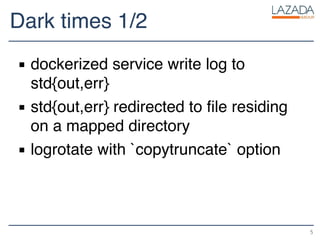

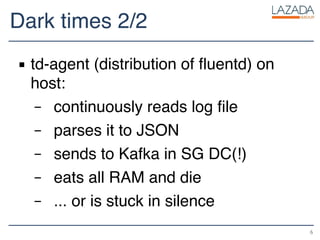

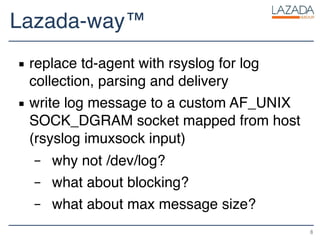

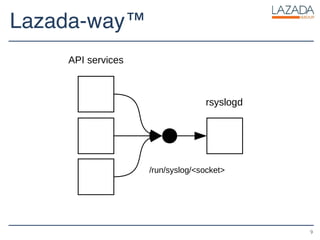

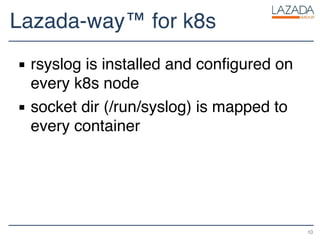

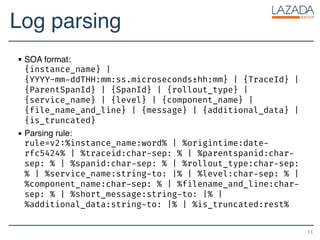

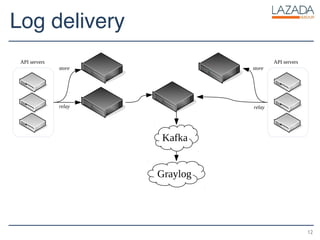

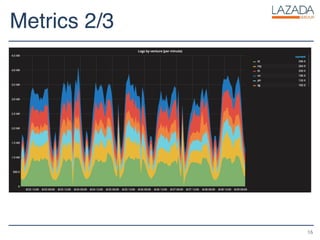

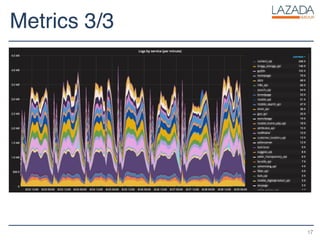

The document outlines a logging strategy for a Dockerized environment, covering how log messages from microservices are handled, collected, delivered, and stored at a company running multiple ventures. It discusses best practices, describes a move from td-agent to rsyslog to improve efficiency, and specifies a detailed log parsing format. It also covers metrics monitoring with Prometheus and plans for future improvements to reliability and to collaboration with developers.