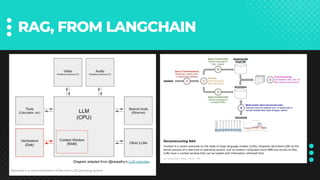

The document outlines a webinar on production-level management of large language models (LLMs) scheduled for December 6, 2023, featuring panelists who discuss LLM operations, tooling, and prototyping techniques. Key topics include aligning processes for building LLM applications, using industry-standard tools such as LangSmith, and strategies for improving model performance and user experience. The webinar also highlights the importance of retrieval-augmented generation (RAG) and practical approaches for engineers and data scientists in the evolving AI landscape.

![📇 INDEX (THE DATABASE). Steps: 1. Split docs into chunks; 2. Create embeddings for each chunk; 3. Store embeddings in vector store index. Diagram: Raw Source Documents → Chunked Documents → Embeddings ([0.1,0.4,-0.6,...], [0.2,0.3,-0.4,...], [0.8,0.3,-0.1,...]) → Vector Store Index.](https://image.slidesharecdn.com/lactoforum-231206204658-e3ceadcb/85/LLMs-in-Production-Tooling-Process-and-Team-Structure-45-320.jpg)
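
The three indexing steps on the slide can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the presenters' implementation: `chunk_text`, `toy_embed`, and `build_index` are hypothetical names, the hashed bag-of-words vector is only a stand-in for a real embedding model, and a Python list stands in for a vector store.

```python
import math
import zlib


def chunk_text(text: str, chunk_size: int = 8, overlap: int = 2) -> list[str]:
    """Step 1: split a document into overlapping chunks of `chunk_size` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the document
    return chunks


def toy_embed(text: str, dim: int = 16) -> list[float]:
    """Step 2 (stand-in): hash each word into one of `dim` buckets, then L2-normalize.

    A real system would call an embedding model here; this is an assumption
    made only to keep the sketch self-contained.
    """
    vec = [0.0] * dim
    for token in text.lower().split():
        vec[zlib.crc32(token.encode("utf-8")) % dim] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


def build_index(docs: list[str], chunk_size: int = 8, overlap: int = 2):
    """Step 3: store (embedding, chunk) pairs; a list stands in for a vector store."""
    index = []
    for doc in docs:
        for chunk in chunk_text(doc, chunk_size, overlap):
            index.append((toy_embed(chunk), chunk))
    return index
```

Overlapping chunks are a common design choice so that a sentence cut at a chunk boundary still appears whole in at least one chunk; the sizes used here are arbitrary.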

![🐕 RETRIEVERS. Diagram: Raw Source Documents → Chunked Documents → Embeddings ([0.1,0.4,-0.6,...], [0.2,0.3,-0.4,...], [0.8,0.3,-0.1,...]) → Vector Store Index. The INPUT Query is embedded as [0.1,0.4,-0.6,...]; Find Nearest Neighbors returns "Context: From source 1", "Context: From source 2", "Context: From source".](https://image.slidesharecdn.com/lactoforum-231206204658-e3ceadcb/85/LLMs-in-Production-Tooling-Process-and-Team-Structure-46-320.jpg)

![Diagram: INPUT “Query...” → Embedding Model → query vector [0.1, 0.4, -0.6, ...] → App Logic → Vector Database: Find Nearest Neighbours (cosine similarity) → "Ryan was ...".](https://image.slidesharecdn.com/lactoforum-231206204658-e3ceadcb/85/LLMs-in-Production-Tooling-Process-and-Team-Structure-47-320.jpg)
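
The "Find Nearest Neighbours (cosine similarity)" step can be illustrated with a brute-force search. This is a sketch under assumptions: the three-dimensional vectors reuse only the leading values shown on the slides as toy data, the chunk labels are made up, and `cosine_similarity` and `nearest_neighbors` are hypothetical helper names. A production vector database would typically use an approximate index rather than scoring every vector.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def nearest_neighbors(query_vec, index, k=2):
    """Score every stored vector against the query and keep the top k."""
    scored = [(cosine_similarity(query_vec, vec), text) for vec, text in index]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


# Toy index: (embedding, chunk text) pairs. Labels are illustrative only.
index = [
    ([0.1, 0.4, -0.6], "chunk A"),
    ([0.2, 0.3, -0.4], "chunk B"),
    ([0.8, 0.3, -0.1], "chunk C"),
]

query_vec = [0.1, 0.4, -0.6]  # pretend this came from the embedding model
results = nearest_neighbors(query_vec, index, k=2)
# chunk A ranks first (identical direction to the query), then chunk B.
```

Cosine similarity compares direction rather than magnitude, which is why a longer or shorter vector pointing the same way still scores as a close match.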

![Diagram: INPUT “Query...” → Embedding Model → query vector [0.1, 0.4, -0.6, ...] → App Logic → Vector Database: Find Nearest Neighbours (cosine similarity); Prompt Templates feed the Chat Model. Prompt template text: Use the provided context to answer the user's query. You may not answer the user's query unless there is specific context in the following text. If you do not know the answer, or cannot answer, please respond with "I don't know". Context: {context} User Query: {user_query}](https://image.slidesharecdn.com/lactoforum-231206204658-e3ceadcb/85/LLMs-in-Production-Tooling-Process-and-Team-Structure-48-320.jpg)
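
The prompt template on the slide maps directly onto Python string formatting. The template wording below is taken from the slide; the `build_prompt` helper and the choice to join retrieved chunks as a bulleted list are assumptions made for illustration.

```python
RAG_PROMPT_TEMPLATE = """Use the provided context to answer the user's query.

You may not answer the user's query unless there is specific context in the following text.

If you do not know the answer, or cannot answer, please respond with "I don't know".

Context:
{context}

User Query:
{user_query}"""


def build_prompt(contexts: list[str], user_query: str) -> str:
    """Fill the template with retrieved chunks and the user's question."""
    context = "\n".join(f"- {chunk}" for chunk in contexts)
    return RAG_PROMPT_TEMPLATE.format(context=context, user_query=user_query)


if __name__ == "__main__":
    print(build_prompt(["ref 1", "ref 2"], "What is RAG?"))
```

The explicit "I don't know" instruction is what keeps the chat model from answering out of its own parametric knowledge when retrieval returns nothing relevant.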

![Diagram: INPUT “Query” → Embedding Model → query vector [0.1, 0.4, -0.6, ...] → App Logic → Vector Database / Vector Store: Find Nearest Neighbours (cosine similarity), Return document(s) from Nearest Neighbours ("Ryan was ...") → App Logic fills Prompt Templates (the "Use the provided context to answer the user's query..." text with {context} and {user_query}) with Context: ref 1, ref 2, ref 3, ref 4 and the Query → Chat Model.](https://image.slidesharecdn.com/lactoforum-231206204658-e3ceadcb/85/LLMs-in-Production-Tooling-Process-and-Team-Structure-49-320.jpg)

![Diagram: INPUT “Query” → Embedding Model → query vector [0.1, 0.4, -0.6, ...] → App Logic → Vector Database / Vector Store: Find Nearest Neighbours (cosine similarity), Return document(s) from Nearest Neighbours → App Logic fills Prompt Templates (the "Use the provided context to answer the user's query..." text with {context} and {user_query}) with Context: ref 1, ref 2, ref 3, ref 4 and the Query → Chat Model → OUTPUT: Answer.](https://image.slidesharecdn.com/lactoforum-231206204658-e3ceadcb/85/LLMs-in-Production-Tooling-Process-and-Team-Structure-50-320.jpg)

![Diagram, labelled Dense Vector Retrieval and In-Context Learning: INPUT “Query” → Embedding Model → query vector [0.1, 0.4, -0.6, ...] → App Logic → Vector Database / Vector Store: Find Nearest Neighbours (cosine similarity), Return document(s) from Nearest Neighbours → App Logic fills Prompt Templates (the "Use the provided context to answer the user's query..." text with {context} and {user_query}) with Context: ref 1, ref 2, ref 3, ref 4 and the Query → Chat Model → OUTPUT: Answer.](https://image.slidesharecdn.com/lactoforum-231206204658-e3ceadcb/85/LLMs-in-Production-Tooling-Process-and-Team-Structure-51-320.jpg)

![Diagram, labelled Dense Vector Retrieval and In-Context Learning: INPUT “Query” → Embedding Model → query vector [0.1, 0.4, -0.6, ...] → App Logic → Vector Database / Vector Store: Find Nearest Neighbours (cosine similarity), Return document(s) from Nearest Neighbours → App Logic fills Prompt Templates (the "Use the provided context to answer the user's query..." text with {context} and {user_query}) with Context: ref 1, ref 2, ref 3, ref 4 and the Query → Chat Model → OUTPUT: Answer.](https://image.slidesharecdn.com/lactoforum-231206204658-e3ceadcb/85/LLMs-in-Production-Tooling-Process-and-Team-Structure-52-320.jpg)
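
Putting the two labelled stages together, a whole query-to-answer pass might look like the sketch below. Everything here is an assumption for illustration: `toy_embed` is a hashed bag-of-words stand-in for an embedding model, `make_index` and `answer` are hypothetical names, and `chat_model` is any callable that takes a prompt string and returns text, so no specific LLM API is implied. Reading the first half as "Dense Vector Retrieval" and the second as "In-Context Learning" is an interpretation of the slide labels.

```python
import math
import zlib
from typing import Callable

PROMPT = (
    "Use the provided context to answer the user's query.\n\n"
    "You may not answer the user's query unless there is specific "
    "context in the following text.\n\n"
    "If you do not know the answer, or cannot answer, please respond "
    "with \"I don't know\".\n\n"
    "Context:\n{context}\n\nUser Query:\n{user_query}"
)


def toy_embed(text: str, dim: int = 32) -> list[float]:
    """Stand-in embedding: hash words into buckets and L2-normalize."""
    vec = [0.0] * dim
    for token in text.lower().split():
        vec[zlib.crc32(token.encode("utf-8")) % dim] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def make_index(chunks: list[str]) -> list[tuple[list[float], str]]:
    """Embed each chunk once, ahead of query time."""
    return [(toy_embed(chunk), chunk) for chunk in chunks]


def answer(query: str, index, chat_model: Callable[[str], str], k: int = 2) -> str:
    # Stage 1, dense vector retrieval: embed the query, rank chunks by cosine.
    query_vec = toy_embed(query)
    ranked = sorted(index, key=lambda item: cosine(query_vec, item[0]), reverse=True)
    contexts = [text for _, text in ranked[:k]]
    # Stage 2, in-context learning: put the retrieved text in the prompt
    # and let the chat model answer from it.
    prompt = PROMPT.format(context="\n".join(contexts), user_query=query)
    return chat_model(prompt)
```

Passing `chat_model=lambda prompt: prompt` is a convenient way to inspect exactly what the model would receive before wiring in a real client.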