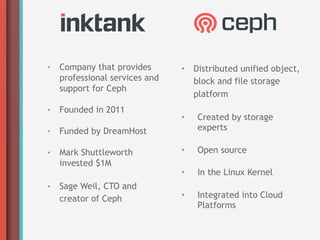

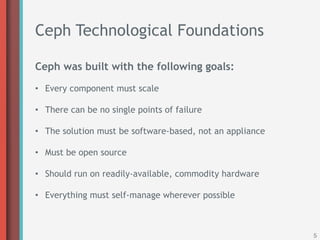

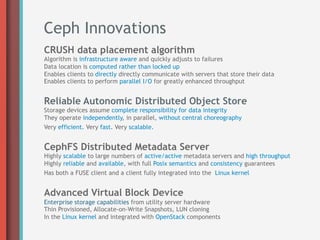

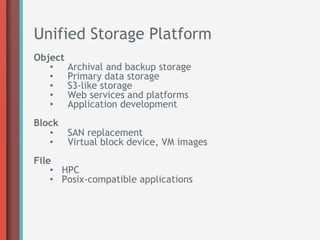

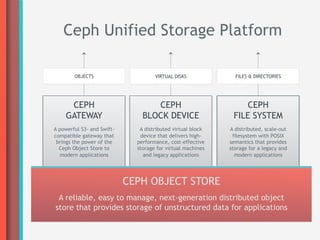

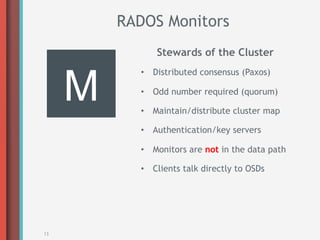

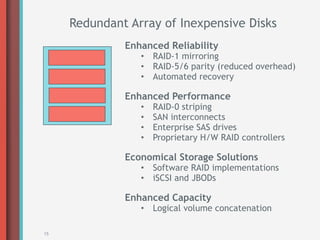

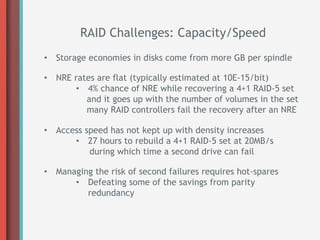

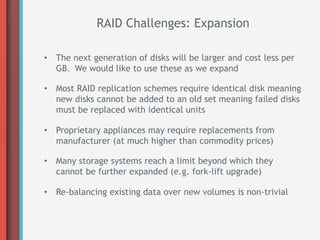

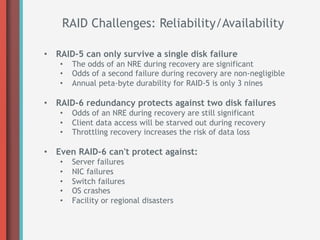

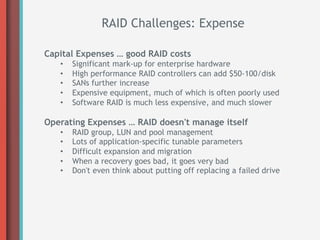

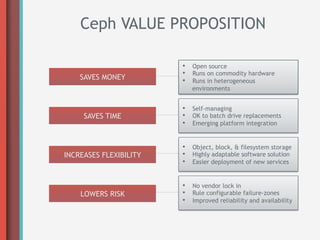

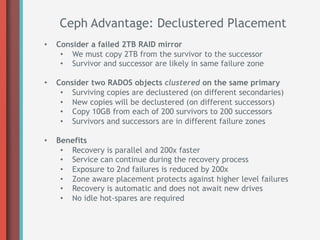

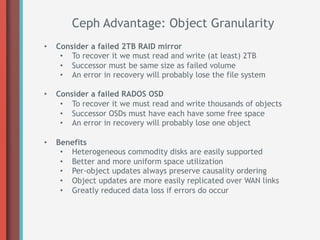

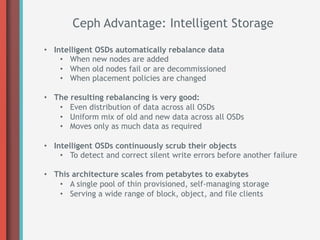

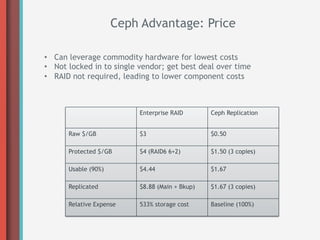

Inktank presents Ceph, a scalable, open-source storage solution that addresses challenges faced by traditional RAID systems by offering a software-based, self-managing architecture that runs on commodity hardware. Ceph's advantages include enhanced reliability, parallel data recovery, and flexible storage for various application types, at significantly lower cost than enterprise RAID solutions. The document outlines Ceph's architecture and innovations, and provides resources for further exploration and support.