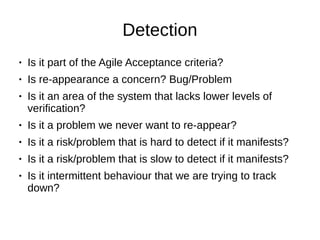

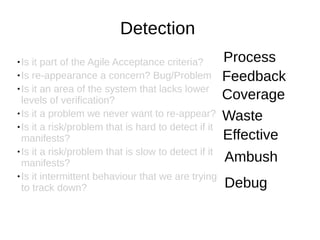

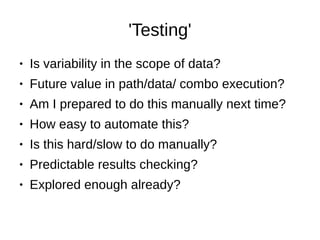

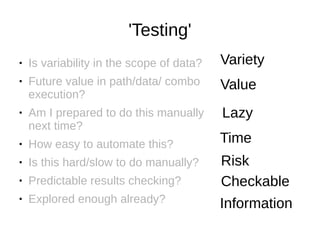

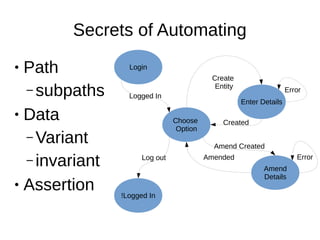

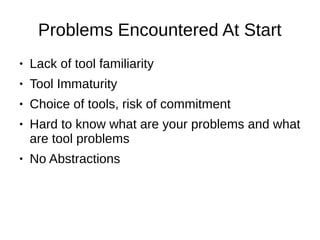

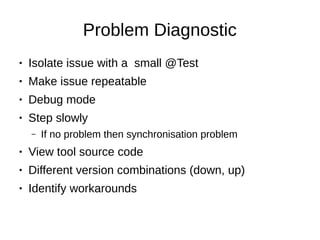

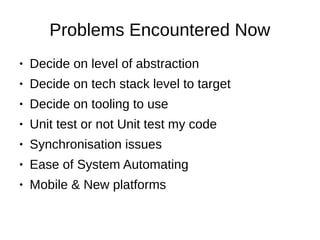

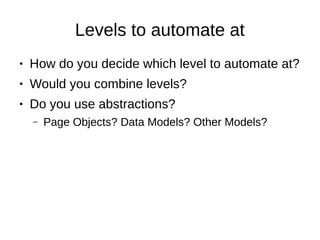

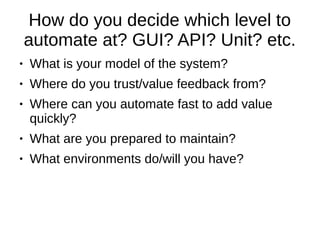

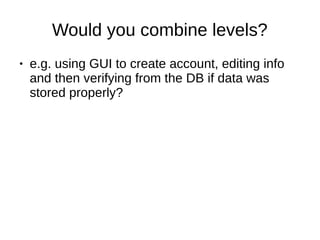

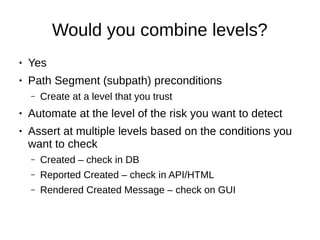

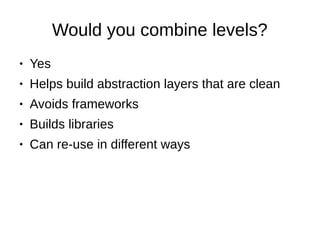

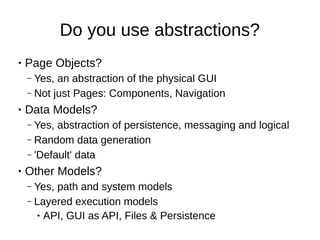

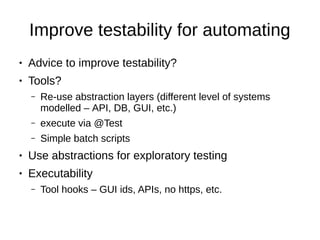

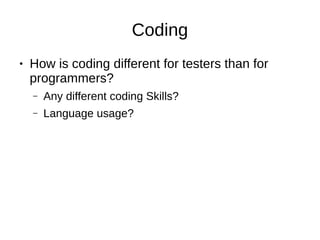

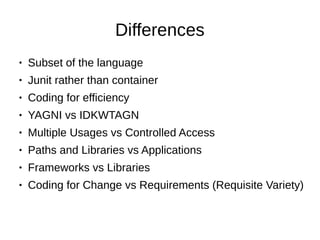

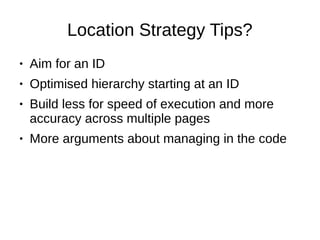

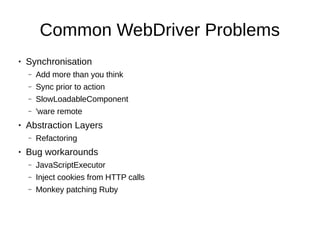

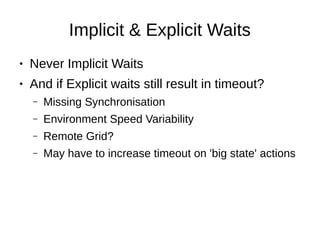

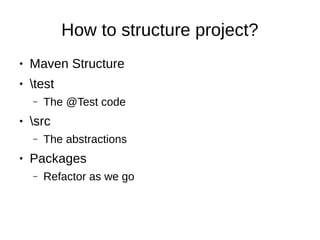

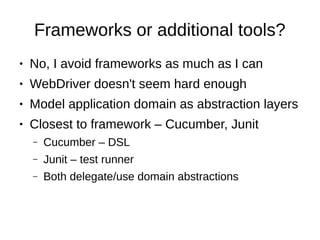

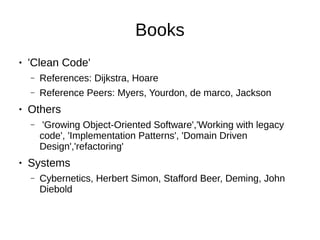

This webinar by Alan Richardson covers lessons learned from automating testing: how to decide what to automate, the challenges encountered, and the decisions made along the way. Key topics include the distinction between 'detection' and 'testing', the levels at which to automate, and coding considerations for both testing and production. It also covers strategies for improving testability and common problems with automation tools and practices.