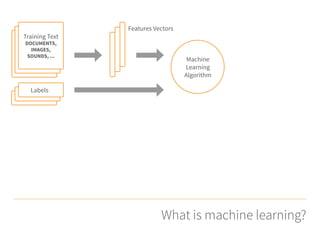

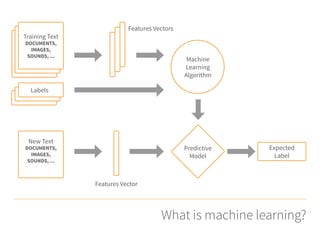

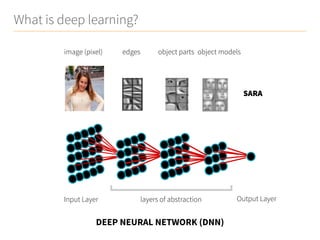

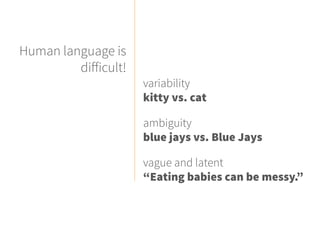

1) The presentation introduced deep learning and how it can be applied to natural language processing tasks like information extraction and question answering.

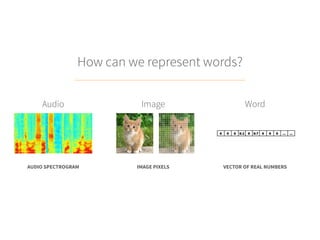

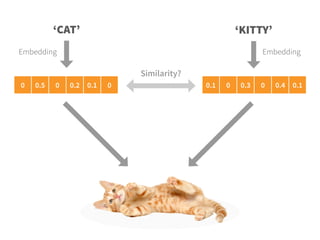

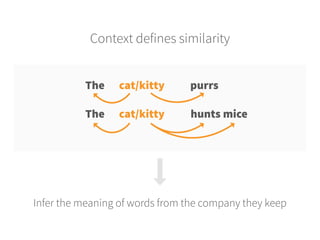

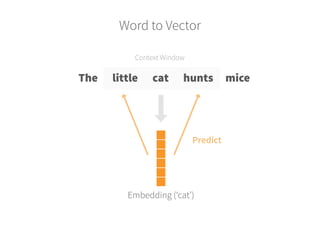

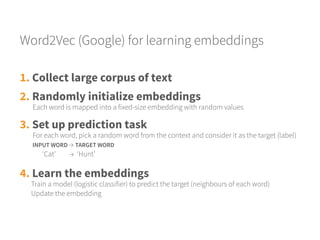

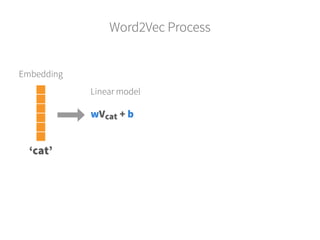

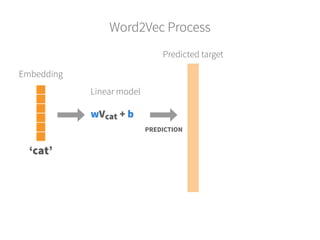

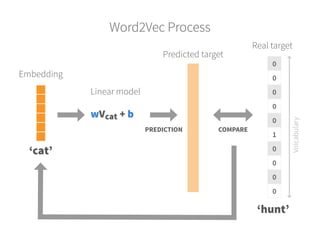

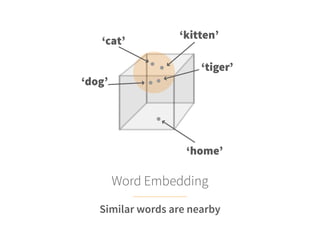

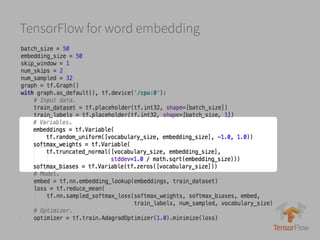

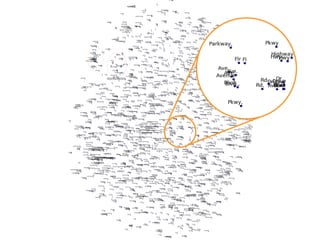

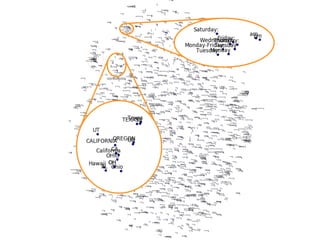

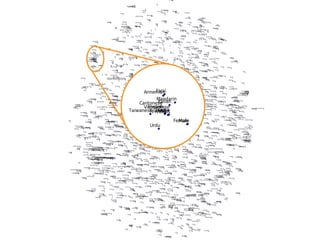

2) Word embeddings were discussed as a way to represent words numerically, and neural networks as a way to detect patterns in language.
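The following minimal Python sketch is not taken from the presentation. It illustrates the two ideas in point 2. First, words are represented as vectors and compared with cosine similarity. Second, a single sigmoid unit, the building block of a neural network layer, responds to a pattern in those vectors. The embedding values and weights are hypothetical and were picked by hand for illustration. Real embeddings are learned from large corpora.

```python
import math

# Toy, hand-picked 3-dimensional embeddings (hypothetical values, for illustration only).
# Learned embeddings typically have hundreds of dimensions.
EMBEDDINGS = {
    "street": [0.9, 0.1, 0.0],
    "avenue": [0.8, 0.2, 0.1],
    "banana": [0.0, 0.1, 0.9],
}

def cosine_similarity(u, v):
    """Cosine of the angle between two vectors; 1.0 means they point the same way."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def neuron(x, weights, bias):
    """A single sigmoid unit: weighted sum of inputs plus bias, squashed into (0, 1)."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))

if __name__ == "__main__":
    street = EMBEDDINGS["street"]
    print("street~avenue:", round(cosine_similarity(street, EMBEDDINGS["avenue"]), 3))
    print("street~banana:", round(cosine_similarity(street, EMBEDDINGS["banana"]), 3))
    # Hypothetical hand-set weights so the unit fires on "street-like" vectors.
    w, b = [4.0, 0.0, -4.0], -1.0
    print("neuron(street):", round(neuron(street, w, b), 3))
    print("neuron(banana):", round(neuron(EMBEDDINGS["banana"], w, b), 3))
```

Semantically related words ("street", "avenue") end up closer together than unrelated ones ("street", "banana"). That geometric closeness is what a network's weights can then exploit as a pattern.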

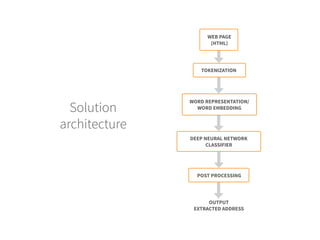

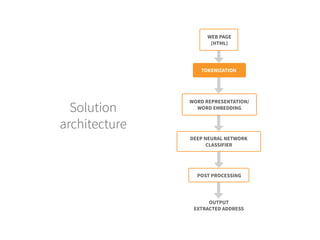

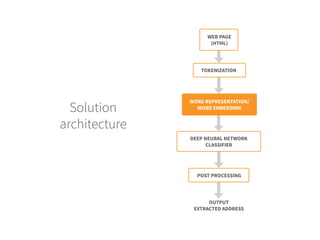

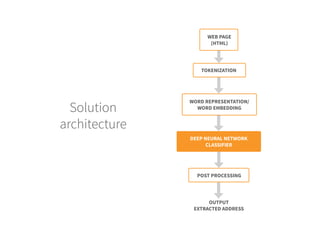

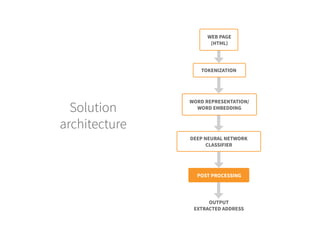

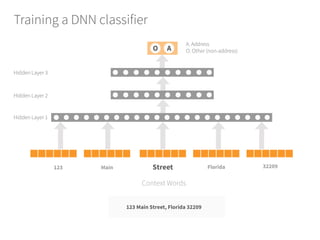

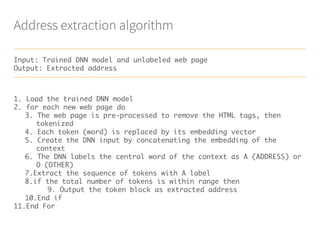

3) An example applied these techniques to extracting addresses from web pages, combining tokenization, word embeddings, and a deep neural network classifier.
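The pipeline in point 3 can be sketched in Python as follows. This is a hypothetical illustration under stated assumptions and not the presenter's implementation. We assume a regex tokenizer and a window-based tagger. For each token, the embeddings of the token and its neighbours are concatenated and passed through a small feedforward network that outputs P(token is part of an address). The class name `AddressTagger`, the window size, and the layer sizes are all assumptions. The network has one hidden layer for brevity, where a deeper model would stack more. The weights are random and untrained, so the scores only demonstrate the data flow. A real model would learn the weights from labeled web pages.

```python
import math
import random
import re

# Hypothetical tokenizer: lowercase, keep runs of letters/digits, drop punctuation.
TOKEN_RE = re.compile(r"\w+")

def tokenize(text):
    return TOKEN_RE.findall(text.lower())

class AddressTagger:
    """Window-based token classifier (sketch): concatenated embeddings ->
    hidden ReLU layer -> sigmoid score. Weights are random, i.e. untrained."""

    def __init__(self, vocab, dim=8, window=2, hidden=16, seed=0):
        rng = random.Random(seed)
        self.dim, self.window = dim, window
        # Index 0 is reserved for unknown words and for padding at text edges.
        self.index = {w: i + 1 for i, w in enumerate(vocab)}
        self.emb = [[rng.uniform(-0.1, 0.1) for _ in range(dim)]
                    for _ in range(len(vocab) + 1)]
        in_size = dim * (2 * window + 1)
        self.w1 = [[rng.uniform(-0.1, 0.1) for _ in range(in_size)] for _ in range(hidden)]
        self.b1 = [0.0] * hidden
        self.w2 = [rng.uniform(-0.1, 0.1) for _ in range(hidden)]
        self.b2 = 0.0

    def embed(self, token):
        # Unknown tokens and padding (None) both map to row 0.
        return self.emb[self.index.get(token, 0)]

    def features(self, tokens, i):
        """Concatenate embeddings for tokens[i - window .. i + window]."""
        vec = []
        for j in range(i - self.window, i + self.window + 1):
            tok = tokens[j] if 0 <= j < len(tokens) else None
            vec.extend(self.embed(tok))
        return vec

    def score(self, tokens, i):
        """Forward pass: ReLU hidden layer, then a sigmoid output unit."""
        x = self.features(tokens, i)
        h = [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        z = sum(w * hi for w, hi in zip(self.w2, h)) + self.b2
        return 1.0 / (1.0 + math.exp(-z))

    def predict(self, text):
        """Return (token, P(address)) for every token in the text."""
        tokens = tokenize(text)
        return [(t, self.score(tokens, i)) for i, t in enumerate(tokens)]

if __name__ == "__main__":
    page_text = "Visit us at 221B Baker Street, London NW1 6XE."
    model = AddressTagger(vocab=tokenize(page_text))
    for token, p in model.predict(page_text):
        print(f"{token:>8}  P(address)={p:.2f}")
```

Classifying each token from a window of neighbours lets context such as "street" or a postcode-like token influence the score of nearby tokens. Contiguous runs of high-scoring tokens could then be merged into a single extracted address.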